Final Presentation Slides

Jenny’s Status Report for 5/8/2021

For this past week, I worked with my team to distribute the work for the final poster, paper, and video. I have completed my part of the final poster and will be in charge of filming a portion of the final video, in which I will present the intro to our project as well as the flowchart and design specs of our final product. In the next few days, we will also begin working on the final paper.

Jenny’s Status Report for 5/1/2021

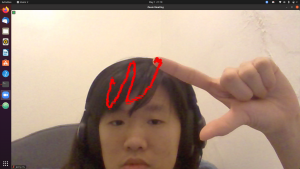

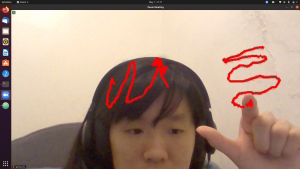

For this week, my main focus was to improve the gesture detection. In our most recent demo, if the hand was tilted or angled in any way, there were a lot of small gaps in the drawing where the gesture was not detected even though it should have been. After a lot of testing with different hand landmarks, I managed to reduce those issues considerably. In the end, I had to switch to using the Euclidean distance between finger landmarks instead of just their x or y distance, and I changed both the threshold and the specific joints used in the detection. Additionally, I tested other candidate hand gestures as alternatives, such as the pinching gesture and the two-finger point gesture, but they do not seem to be as easy to make or as effective as our original one.
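To illustrate why the switch to Euclidean distance helps with tilted hands, here is a minimal sketch rather than the actual Whiteboard Pal code. The landmark indices, the threshold value, the "closer than the threshold means gesture" rule, and the helper names are all assumptions made for illustration; the joints and threshold I ended up with are not shown here.

```python
import math

# Hypothetical values for illustration only.
JOINT_A = 4        # assumed landmark index
JOINT_B = 8        # assumed landmark index
THRESHOLD = 0.08   # assumed, in normalized image coordinates


def x_distance(p, q):
    """Per-axis check: only looks at the horizontal offset."""
    return abs(p[0] - q[0])


def euclidean_distance(p, q):
    """Straight-line distance between two (x, y) landmarks."""
    return math.dist(p, q)


def rotate(point, angle_deg, center=(0.5, 0.5)):
    """Rotate a point around `center` to simulate a tilted hand."""
    angle = math.radians(angle_deg)
    dx, dy = point[0] - center[0], point[1] - center[1]
    return (
        center[0] + dx * math.cos(angle) - dy * math.sin(angle),
        center[1] + dx * math.sin(angle) + dy * math.cos(angle),
    )


def is_gesture(landmarks, a=JOINT_A, b=JOINT_B, threshold=THRESHOLD):
    """Assumed rule: the gesture is active when the two joints are close."""
    return euclidean_distance(landmarks[a], landmarks[b]) < threshold


upright = {JOINT_A: (0.50, 0.50), JOINT_B: (0.50, 0.56)}
tilted = {k: rotate(v, 40) for k, v in upright.items()}
```

In this sketch the x distance between the two joints changes as the hand rotates, while the Euclidean distance stays the same, so a single threshold keeps working through the tilt.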

In the images included with this report, we can see that even when the hand transitions naturally from the “proper” gesture to a tilted or angled version of it while drawing, there are no gaps in the curves. The extra scribbling at the end is simply due to me having trouble keeping my fingers absolutely still while taking a screen capture.

Additionally, I worked on (and will keep working on) the final presentation slides with the rest of my team to have them ready by Sunday midnight.

Jenny’s Status Report for 4/24/2021

For this past week, our group decided to pivot to integrating mediapipe’s hand tracking into Whiteboard Pal. Because the C++ library is rather convoluted and lacks any documentation, I first wrote all the gesture detection and finger tracking code in Python in a separate file. If we are later unable to integrate the C++ mediapipe library, this Python file can also be used as a fallback, integrated using ros icp or another method. The Python gesture detector I wrote has near 100% accuracy when there is a hand in front of the webcam. The only issue is that if there is no hand at all and the background is complicated, it might detect some part of the background as a hand (although it will still most likely classify it as no gesture).

In addition to the Python code I wrote, I also managed to get the C++ mediapipe hand tracking demo that’s provided by the library to build and run successfully. Currently, I’m in the middle of porting the same algorithm from my Python code into a mediapipe calculator in C++ so it can be integrated with our Whiteboard Pal.

Jenny’s Status Report for 4/10/2021

For this past week, I fully integrated the ML model that I made with Python into C++. It is now a part of the pipeline and returns the classification as a boolean. Additionally, I print out the classification to stdout for demo purposes.

To integrate, I had to create a different model, since it appears the Mat format in C++ had only 1 channel, in grayscale. As a result, I changed my Python model a bit so that its input dimensions match the C++ image dimensions, and the resulting model has 67% validation accuracy.
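As a rough sketch of what matching a 1-channel grayscale input looks like on the Python side, the snippet below collapses a 3-channel image to one channel and adds batch and channel axes. It is not the code from my model: plain nested lists stand in for image arrays to keep the example dependency-free, and the BGR ordering, the standard luminance weights, the scaling to [0, 1], and the (1, H, W, 1) layout are all assumptions.

```python
def bgr_to_gray(image):
    """Collapse rows of (b, g, r) pixels into rows of single gray values.

    Uses the standard luminance weights (0.299 R + 0.587 G + 0.114 B).
    """
    return [
        [round(0.114 * b + 0.587 * g + 0.299 * r) for (b, g, r) in row]
        for row in image
    ]


def to_model_input(gray):
    """Scale to [0, 1] and wrap as (batch=1, height, width, channels=1)."""
    return [[[[px / 255.0] for px in row] for row in gray]]


def shape(nested):
    """Return the dimensions of a regular nested list."""
    dims = []
    while isinstance(nested, list):
        dims.append(len(nested))
        nested = nested[0]
    return tuple(dims)


# A tiny 2x3 all-white test frame (hypothetical data).
frame = [[(255, 255, 255)] * 3 for _ in range(2)]
model_input = to_model_input(bgr_to_gray(frame))
```

The point is only that the model's input layer has to expect a single channel, so the last dimension is 1 rather than 3.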

Right now my part is demo-ready, and I will further work on improving the accuracy of the model in the upcoming weeks.

Jenny’s Status Report for 4/3/2021

For this past week, I have been caught up with the housekeeping aspects of the project and integration. Since neither Zacchaeus nor Sebastien currently runs Ubuntu as their Linux distribution, and since Ubuntu is one of the most popular distributions, we wanted to ensure that our project will run on it. Thus, I installed Ubuntu on my Windows machine, enabled dual boot, and set up Whiteboard Pal on my Linux installation. This involved building all the libraries again and ensuring Whiteboard Pal could find them, with OpenCV and TensorFlow being the big ones.

I have also abandoned the path of using a third-party library to import a Keras model into C++ and have instead looked into importing protobuf files (.pb, which is the file type used by TensorFlow when saving a model) into C++ through direct TensorFlow library functions. I will be testing the different ways to import in the next three days, and will hopefully have found one that works by Wednesday’s meeting with Professor Kim.

Jenny’s Status Report for 3/27/2021

(I also made a status report last week because I didn’t realize we didn’t need to write one last week. So to keep more up to date, also read that one from 3/20).

Since last week, I trained a model with much better accuracy: 73% test accuracy and 91% training accuracy. This was the model I ended up demoing on Wednesday to Professor Kim. I then started researching how to import this model into C++ so I can integrate it into Sebastien’s pipeline, and found several different libraries people have implemented (keras2cpp, frugally-deep). I was able to export the file properly with the scripts that were provided, but I have not yet figured out exactly how to convert the webcam image input in C++ to a format that is accepted by the libraries. I will strive to have that figured out by Wednesday and have my model fully integrated into the pipeline.

Team Status Report for 3/20/2021

For this week, our team completed the design review paper. In addition, we met with Professor Kim and took into account his advice that we should first ignore any optimizations and get a fully working version of our product together. So, despite some suboptimal performance in portions of the pipeline, we will aim to first put it all together by the end of next week, then spend the rest of the time of this course optimizing it to a much more usable level.

As for the exact details of each portion, they can be found in the status reports of each of our members.

Jenny’s Status Report for 3/20/2021

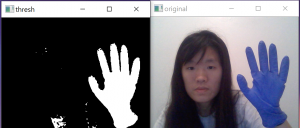

For this week, I worked with my group to complete our Design Review paper. Additionally, I made progress on gesture detection. As per Uzair’s advice, to ignore the face and background in webcam images, I wore a generic-brand blue surgical glove and extracted only that color to isolate the hands, as pictured in the first image of this report.

Next, I started generating both training and testing datasets using this method, with two labels: gesture vs no_gesture. I currently have about 300 images for each of the labels for training, and about 100 each for testing. The images below are of the gesture label in the datasets folder:

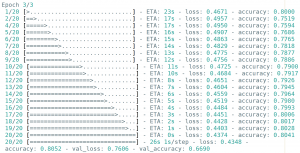
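The glove-extraction step described above can be sketched as a simple color mask. This is only an illustration of the idea, not the code I used: the hue window, the saturation and brightness cutoffs, and the function names are assumptions, and plain lists of (r, g, b) tuples stand in for real webcam frames.

```python
import colorsys

# Assumed thresholds for "glove blue"; real values would need tuning.
HUE_RANGE_DEG = (200.0, 260.0)
MIN_SATURATION = 0.4
MIN_VALUE = 0.2


def is_glove_pixel(r, g, b):
    """Return True if an 8-bit RGB pixel falls inside the assumed blue window."""
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    hue_deg = h * 360.0
    in_hue = HUE_RANGE_DEG[0] <= hue_deg <= HUE_RANGE_DEG[1]
    return in_hue and s >= MIN_SATURATION and v >= MIN_VALUE


def extract_glove(image):
    """Keep glove-colored pixels and black out face, skin, and background."""
    return [
        [px if is_glove_pixel(*px) else (0, 0, 0) for px in row]
        for row in image
    ]


# One blue "glove" pixel, one skin-toned pixel, one gray background pixel.
sample = [[(30, 60, 200), (220, 170, 140), (128, 128, 128)]]
masked = extract_glove(sample)
```

Thresholding on hue rather than on raw blue intensity is one reasonable choice here, since it is less sensitive to lighting changes.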

I then implemented the CNN model and training algorithm and began training. I adjusted the parameters to see which gave the best results, and found that the highest accuracy (65 to 69% validation accuracy and about 80% training accuracy) came from a batch size of 15, 20 steps, and 3 epochs, of which the printout of the last epoch is here:

For the next week, I will first work on incorporating this into the overall pipeline and completing a working version of the product before moving on to improving the model and its accuracy. I suspect the accuracy will improve a lot if I do multi-category classification instead of binary, because the no_gesture label has a lot of nuance: it covers everything that is not a gesture.