This week I finished the pipeline in Rust, but unfortunately I had a lot of difficulty using existing wrappers around Video4Linux to output to the virtual camera device, so I ended up rewriting it all in C++. Once there, I had to learn about various pixel formats and figure out how to configure the output device with the right one. The output from OpenCV is a matrix, but the virtual camera device is ultimately a file descriptor, so I had to pick a format that OpenCV knew how to convert to but that also did not require reordering values in the matrix’s data buffer before writing it to the device. Now it works, so I can Zoom into class through a virtual camera called “Whiteboard Pal” 😀
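A minimal sketch of the output-device setup described above, assuming a v4l2loopback device at a path such as `/dev/video2` and packed `V4L2_PIX_FMT_RGB24` frames (the report does not say which format was actually chosen). The helper names `make_rgb24_output_format`, `open_loopback`, and `write_frame` are hypothetical. On the OpenCV side, a BGR `cv::Mat` would first go through `cv::cvtColor(frame, rgb, cv::COLOR_BGR2RGB)`, after which `rgb.data` can be written as-is as long as `rgb.isContinuous()` is true.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <fcntl.h>
#include <linux/videodev2.h>
#include <sys/ioctl.h>
#include <unistd.h>

// Describe packed 24-bit RGB frames for a V4L2 *output* device, which is how a
// loopback device looks to the program writing frames into it.
v4l2_format make_rgb24_output_format(std::uint32_t width, std::uint32_t height) {
    v4l2_format fmt;
    std::memset(&fmt, 0, sizeof(fmt));
    fmt.type = V4L2_BUF_TYPE_VIDEO_OUTPUT;
    fmt.fmt.pix.width = width;
    fmt.fmt.pix.height = height;
    fmt.fmt.pix.pixelformat = V4L2_PIX_FMT_RGB24;  // R, G, B bytes per pixel, row-major
    fmt.fmt.pix.field = V4L2_FIELD_NONE;           // progressive frames
    fmt.fmt.pix.bytesperline = width * 3;          // assumes no row padding
    fmt.fmt.pix.sizeimage = width * height * 3;    // one whole frame per write()
    fmt.fmt.pix.colorspace = V4L2_COLORSPACE_SRGB;
    return fmt;
}

// Open the loopback device and apply the format; returns the fd, or -1 on failure.
int open_loopback(const char* path, std::uint32_t width, std::uint32_t height) {
    int fd = open(path, O_RDWR);
    if (fd < 0) return -1;
    v4l2_format fmt = make_rgb24_output_format(width, height);
    if (ioctl(fd, VIDIOC_S_FMT, &fmt) < 0) {
        close(fd);
        return -1;
    }
    return fd;
}

// Write one frame; `rgb` must point at exactly `size` contiguous bytes
// (width * height * 3). A short write is treated as a failure.
bool write_frame(int fd, const std::uint8_t* rgb, std::size_t size) {
    assert(rgb != nullptr);
    ssize_t n = write(fd, rgb, size);
    return n == static_cast<ssize_t>(size);
}
```

Because the device just consumes raw bytes, the only real constraint is that the in-memory layout of the matrix already matches `pixelformat`, which is why a format OpenCV can convert to directly avoids any manual reordering.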

Zacchaeus’ Status Report for 3/20/2021

This week I worked on changing the method for removing the background from the camera feed, which made the hand detection much cleaner. I accomplished that by using a blue glove and doing contour detection on that color. I am going to use the recommendations from course staff to change the method so that I won’t have to use a blue glove anymore. Along with that, this week I added code that responds to Jenny’s model to make integrating everything easier. In doing this, I also added code so that I can now draw and write on the screen, plus controls that let the user erase the screen. Right now everything is bound to the keyboard, but all that needs to change is replacing the key presses with signals from Jenny’s model. Next week we should be able to fully integrate the pipeline after I port all my code to C++.
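One way to keep the "keyboard now, model later" swap small is to have both input sources produce the same command type. The sketch below is hypothetical throughout: the `Command` enum, the `'d'`/`'e'` key bindings, the square brush, and the assumption that erasing clears the whole screen are illustrations, not the actual code. It also stamps a dab per point rather than connecting successive points into strokes.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// What the drawing layer should do this frame. Today these come from the
// keyboard; later the same values would come from the gesture model.
enum class Command { None, Draw, Erase };

// Hypothetical key bindings, for illustration only.
Command command_from_key(char key) {
    switch (key) {
        case 'd': return Command::Draw;
        case 'e': return Command::Erase;
        default:  return Command::None;
    }
}

// A per-pixel ink mask that would be overlaid on every outgoing frame.
class Canvas {
public:
    Canvas(int width, int height)
        : width_(width), height_(height),
          ink_(static_cast<std::size_t>(width) * height, 0) {}

    // Apply one command at the tracked fingertip position (x, y).
    void apply(Command cmd, int x, int y, int radius = 2) {
        if (cmd == Command::Erase) {
            std::fill(ink_.begin(), ink_.end(), 0);  // assumed: erase clears everything
            return;
        }
        if (cmd != Command::Draw) return;
        // Stamp a square brush, clipped to the canvas bounds.
        for (int dy = -radius; dy <= radius; ++dy) {
            for (int dx = -radius; dx <= radius; ++dx) {
                int px = x + dx, py = y + dy;
                if (px < 0 || py < 0 || px >= width_ || py >= height_) continue;
                ink_[static_cast<std::size_t>(py) * width_ + px] = 1;
            }
        }
    }

    bool inked(int x, int y) const {
        assert(x >= 0 && y >= 0 && x < width_ && y < height_);
        return ink_[static_cast<std::size_t>(y) * width_ + x] != 0;
    }

private:
    int width_, height_;
    std::vector<std::uint8_t> ink_;
};
```

Replacing the keyboard then only means producing a `Command` from the model's output instead of from `command_from_key`; `Canvas` stays unchanged.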

Jenny’s Status Report for 3/27/2021

(I also wrote a status report last week because I didn’t realize we didn’t need one, so to stay up to date, also read the one from 3/20.)

Since last week, I trained a model with much better accuracy: 73% test accuracy and 91% training accuracy. This was the model I demoed to Professor Kim on Wednesday. I then started researching how to import this model into C++ so I can integrate it into Sebastien’s pipeline, and found several libraries people have implemented (keras2cpp, frugally-deep). I was able to export the model file properly with the provided scripts, but I have not yet figured out exactly how to format the webcam image in C++ into an input the libraries accept. I will aim to have that figured out by Wednesday and have my model fully integrated into the pipeline.
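A sketch of the kind of conversion involved, under assumptions the report does not confirm: the model was trained on RGB images in channels-last (height × width × channels) order with pixel values rescaled to [0, 1], and the frame has already been resized to the model's input size (e.g. with `cv::resize`). OpenCV stores frames as interleaved 8-bit BGR, so the channels need swapping and scaling. frugally-deep, for example, provides `fdeep::tensor_from_bytes` for building an input tensor from a contiguous byte buffer; the hypothetical function below just shows the underlying layout change without depending on either library.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Convert an interleaved 8-bit BGR image (OpenCV's default layout) into a float
// buffer in height x width x channels order with RGB channels scaled to [0, 1].
std::vector<float> bgr_bytes_to_rgb_tensor(const std::uint8_t* bgr,
                                           std::size_t height, std::size_t width) {
    assert(bgr != nullptr);
    std::vector<float> out(height * width * 3);
    for (std::size_t i = 0; i < height * width; ++i) {
        const std::uint8_t b = bgr[3 * i + 0];
        const std::uint8_t g = bgr[3 * i + 1];
        const std::uint8_t r = bgr[3 * i + 2];
        out[3 * i + 0] = r / 255.0f;  // swap BGR -> RGB while scaling
        out[3 * i + 1] = g / 255.0f;
        out[3 * i + 2] = b / 255.0f;
    }
    return out;
}
```

If the training pipeline used a different scaling (for example, none, or mean subtraction), the `/ 255.0f` step has to match it exactly, or accuracy in C++ will silently drop relative to Python.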

Team Status Report for 3/20/2021

This week, our team completed the design review paper. In addition, we met with Professor Kim and took his advice to ignore optimizations at first and get a fully working version of our product together. So, despite suboptimal performance in parts of the pipeline, we will aim to put it all together by the end of next week, then spend the rest of the course optimizing it to a much more usable level.

Details on each portion can be found in each member’s status report.

Jenny’s Status Report for 3/20/2021

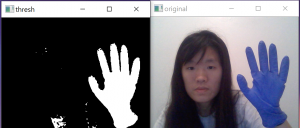

For this week, I worked with my group to complete our Design Review paper. Additionally, I made progress on gesture detection. As per Uzair’s advice, to ignore the face and background in webcam images, I wore a generic brand blue surgical glove and extracted only that to get only the hands, as pictured below:
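The color extraction is a per-pixel range test in HSV space. In OpenCV this would normally be `cv::cvtColor(frame, hsv, cv::COLOR_BGR2HSV)` followed by `cv::inRange`, with `cv::findContours` afterwards to keep the glove's outline; the sketch below reimplements only the range test without OpenCV so the logic is visible. The hue/saturation/value bounds are assumptions for a saturated blue, not the values actually used, and hue is truncated rather than rounded, so it can differ from OpenCV by one unit.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// HSV in OpenCV's 8-bit convention: H in [0, 180), S and V in [0, 255].
struct Hsv { int h, s, v; };

Hsv bgr_to_hsv(std::uint8_t b, std::uint8_t g, std::uint8_t r) {
    const int mx = std::max({b, g, r});
    const int mn = std::min({b, g, r});
    const int delta = mx - mn;
    double hue = 0.0;  // degrees in [0, 360)
    if (delta != 0) {
        if (mx == r)      hue = 60.0 * (g - b) / delta;
        else if (mx == g) hue = 120.0 + 60.0 * (b - r) / delta;
        else              hue = 240.0 + 60.0 * (r - g) / delta;
        if (hue < 0) hue += 360.0;
    }
    const int s = mx == 0 ? 0 : (255 * delta) / mx;
    return {static_cast<int>(hue / 2.0), s, mx};  // halve hue to fit in 8 bits
}

// Mark pixels whose HSV falls inside [lo, hi] with 255, others with 0.
// The default bounds are an assumed range for a blue glove.
std::vector<std::uint8_t> blue_glove_mask(const std::uint8_t* bgr, std::size_t n_pixels,
                                          Hsv lo = {100, 80, 50},
                                          Hsv hi = {130, 255, 255}) {
    assert(bgr != nullptr);
    std::vector<std::uint8_t> mask(n_pixels, 0);
    for (std::size_t i = 0; i < n_pixels; ++i) {
        const Hsv p = bgr_to_hsv(bgr[3 * i], bgr[3 * i + 1], bgr[3 * i + 2]);
        const bool in = p.h >= lo.h && p.h <= hi.h && p.s >= lo.s && p.s <= hi.s &&
                        p.v >= lo.v && p.v <= hi.v;
        mask[i] = in ? 255 : 0;
    }
    return mask;
}
```

Working in HSV rather than BGR is what makes a single glove color easy to isolate: lighting changes mostly move V, while the glove's hue stays in a narrow band that skin and most backgrounds don't share.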

Next, I started generating training and testing datasets using this method, with two labels: gesture and no_gesture. I currently have about 300 images per label for training and about 100 per label for testing. The images below are from the gesture label in the datasets folder:

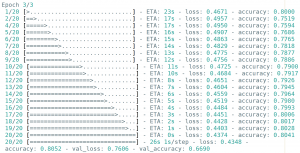

I then implemented the CNN model and its training code and began training. I adjusted parameters to see which gave the best results, and found that the highest testing accuracy (validation accuracy varied from 65% to 69%, with about 80% training accuracy) came from a batch size of 15, 20 steps, and 3 epochs. The printout of the last epoch is here:
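As a rough sanity check on these settings, assuming "steps" means Keras's `steps_per_epoch` (the report does not name the training API), the amount of data seen during training works out as:

$$
\text{images per epoch} = 15 \times 20 = 300, \qquad \text{training set} \approx 2 \times 300 = 600, \qquad \text{passes over the data} = \frac{3 \times 300}{600} = 1.5
$$

Under that assumption, each epoch covers only about half of the training images, and the three epochs together amount to about one and a half passes over the data.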

Next week, I will first work on incorporating this into the overall pipeline and completing a finished version of the product before moving on to improving the model’s accuracy. I suspect accuracy will improve a lot with multi-category classification instead of binary, because the no_gesture label is very nuanced: it covers everything that is not a gesture.

Zacchaeus’ Status Report for 3/13/2021

For the first part of this week, I spent most of my time working on my team’s design presentation, which I presented on Monday. For the second part, I focused on getting my portion of the project off the ground. My goal was to port all of the finger-tracking code from the method I found online to C++. So far I have not been able to test any of the code I wrote, but I have been able to set up OpenCV and do some basic things with it. This portion has been going a bit slower for me, since most of my background is not in software.

Sebastien’s Status Report for 3/13/2021

This week I implemented a significant portion of our system’s pipeline, which is more or less a wrapper of threads and synchronization around the CV and drawing code (the part that maintains a mask of pixels to be applied to every frame). I quickly discovered that building, dependency management, and a lot of other things are quite clunky and tedious in C++ compared to Rust, a language I am more comfortable with, so I used Rust for this instead. But since OpenCV itself is written in C++, I wrote some foreign function interface (FFI) bindings and a well-defined (and typed 😀) function signature that our CV models, which will be written in C++, implement. In other words, the pipeline code can simply call C++ functions that perform any CV/ML tasks using OpenCV and return their outputs. And we can use cargo, Rust’s wonderful build, dependency management, installation, and testing tool, to compile and link the C++ code as well, without any makefiles or a mess of headers.
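A sketch of what the C++ side of such an FFI boundary can look like. Everything here is hypothetical (the `Frame` and `GestureResult` layouts, the `wbp_detect` name, the gesture encoding); the real signature is not given in the report. The key idea is `extern "C"` linkage plus plain fixed-width fields, so Rust can mirror the structs with `#[repr(C)]` and declare the function in an `extern "C"` block. Like the current dummies, it ignores its input and always returns the same result.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

extern "C" {

// One BGR frame, borrowed from the caller for the duration of the call.
struct Frame {
    const std::uint8_t* data;
    std::size_t width;
    std::size_t height;
    std::size_t stride;  // bytes per row
};

// ((x, y), gesture_state) as a C-compatible struct.
struct GestureResult {
    std::int32_t x;              // fingertip column in pixels
    std::int32_t y;              // fingertip row in pixels
    std::int32_t gesture_state;  // hypothetical encoding: 0 = not drawing, 1 = drawing
};

// Hypothetical model entry point: a dummy that ignores the frame and always
// returns the same result, so the pipeline can be tested end to end first.
GestureResult wbp_detect(const Frame* frame) {
    assert(frame != nullptr);
    (void)frame;  // not inspected yet
    return GestureResult{0, 0, 0};
}

}  // extern "C"
```

Passing a borrowed pointer plus dimensions (rather than a `cv::Mat`) keeps OpenCV types out of the boundary, so the Rust side never needs to know about C++ classes.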

During the process I gathered more specific details about each of the system’s functional blocks: how exactly to do the thread synchronization, what parameters they take, and what data structures they use. We can include these in our design report, though all of them may change in the future.

Right now the C++ model functions are just dummies that always return the same result, since my focus is making sure we can get from a camera feed of frames, to ((x,y), gesture_state) pairs, to a virtual loopback camera, and that this path is fast and free of concurrency bugs. At this point I’ve got the FFI (mostly) working, along with a rough first pass at thread synchronization. Next week I’ll fix that minor FFI bug and work on the loopback camera.

Team Status Report for 3/13/2021

This week, our team presented our design review slides and looked over the feedback from our faculty and peers. We also set out a work plan for completing the design review paper, which will include some changes made as a result of the feedback we received over the last week.

In addition, each of us has made progress in the areas we are responsible for, as detailed in our individual status reports.

Jenny’s Status Report for 3/13/2021

This week, I looked over the peer feedback from the design review presentation and discussed some plan changes with my group. I have decided to train my gesture detection ML model in Python and then export it as a JSON or TXT file, since training is done separately and doesn’t affect application speed. Our actual application will then be written in C++ and will use the exported model to run the classifier.
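To illustrate the train-in-Python, infer-in-C++ split, here is a deliberately tiny sketch: the exported "model" is a single sigmoid output unit stored as whitespace-separated text (`<n> w_1 ... w_n b`). Both the file format and the `DenseSigmoid` type are hypothetical; a real CNN export has many layers, which is what libraries such as keras2cpp or frugally-deep handle. The point is only that the C++ side needs to read parameters and reproduce the forward computation exactly.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <istream>
#include <sstream>  // std::istringstream is handy for loading from a string
#include <stdexcept>
#include <vector>

// Hypothetical export format: "<n> w_1 ... w_n b" as whitespace-separated text,
// i.e. the weights and bias of a single sigmoid output unit.
struct DenseSigmoid {
    std::vector<float> weights;
    float bias = 0.0f;

    // Works with any std::istream, e.g. std::ifstream or std::istringstream.
    static DenseSigmoid load(std::istream& in) {
        DenseSigmoid layer;
        std::size_t n = 0;
        if (!(in >> n)) throw std::runtime_error("missing weight count");
        layer.weights.resize(n);
        for (float& w : layer.weights)
            if (!(in >> w)) throw std::runtime_error("truncated weights");
        if (!(in >> layer.bias)) throw std::runtime_error("missing bias");
        return layer;
    }

    // Probability of the "gesture" class for one flattened feature vector.
    float predict(const std::vector<float>& features) const {
        assert(features.size() == weights.size());
        float z = bias;
        for (std::size_t i = 0; i < weights.size(); ++i) z += weights[i] * features[i];
        return 1.0f / (1.0f + std::exp(-z));
    }
};
```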

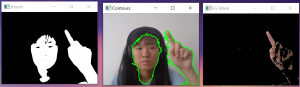

In terms of coding, I have been able to do 3 different types of maskings, one which does threshing by detecting pixels within a skintone range of colors, one that contours areas of skintone, and one that does dynamic background subtraction, essentially detecting changes in motion. I have taken a screenshot below for demo purposes:
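Dynamic background subtraction can be as simple as keeping a slowly updated average of past frames and flagging pixels that differ from it. The sketch below is one such scheme on grayscale frames; the report does not say which method was used. OpenCV offers `cv::accumulateWeighted` with `cv::absdiff` for this running-average approach, and `cv::createBackgroundSubtractorMOG2` for a more robust statistical model. The class name, `alpha`, and `threshold` are illustrative assumptions.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <cstdint>
#include <vector>

// Running-average background model over grayscale frames: pixels that differ
// from the learned background by more than `threshold` are marked as motion.
class RunningAverageBackground {
public:
    RunningAverageBackground(std::size_t n_pixels, float alpha, int threshold)
        : background_(n_pixels, 0.0f), alpha_(alpha), threshold_(threshold) {}

    std::vector<std::uint8_t> apply(const std::vector<std::uint8_t>& gray) {
        assert(gray.size() == background_.size());
        std::vector<std::uint8_t> mask(gray.size(), 0);
        for (std::size_t i = 0; i < gray.size(); ++i) {
            if (!initialized_) background_[i] = gray[i];  // first frame seeds the model
            const float diff = std::fabs(gray[i] - background_[i]);
            mask[i] = diff > threshold_ ? 255 : 0;
            // Slowly blend the current frame into the background estimate.
            background_[i] = (1.0f - alpha_) * background_[i] + alpha_ * gray[i];
        }
        initialized_ = true;
        return mask;
    }

private:
    std::vector<float> background_;
    float alpha_;
    int threshold_;
    bool initialized_ = false;
};
```

A small `alpha` means a hand that stops moving fades into the background only slowly, which is the trade-off between responsiveness and noise this kind of mask has to balance.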

Next week, I will work on combining information from the three to 1) filter out noise and 2) focus only on the hand and ignore the face.
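One hypothetical way to combine the three masks is a per-pixel vote: keep a pixel only if enough of the masks agree. With `min_votes = 2` this is a majority vote that drops noise appearing in just one mask; with `min_votes = 3` it becomes a strict AND (what `cv::bitwise_and` on the three masks would give), which should also suppress a mostly still face that is skin-toned but not moving. The function name and defaults are illustrative assumptions, not the planned design.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Combine three binary masks (0 or 255 per pixel); a pixel is kept when at
// least `min_votes` of the masks mark it.
std::vector<std::uint8_t> vote_masks(const std::vector<std::uint8_t>& skin,
                                     const std::vector<std::uint8_t>& contour,
                                     const std::vector<std::uint8_t>& motion,
                                     int min_votes = 2) {
    assert(skin.size() == contour.size() && contour.size() == motion.size());
    std::vector<std::uint8_t> out(skin.size(), 0);
    for (std::size_t i = 0; i < skin.size(); ++i) {
        const int votes = (skin[i] != 0) + (contour[i] != 0) + (motion[i] != 0);
        out[i] = votes >= min_votes ? 255 : 0;
    }
    return out;
}
```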

Sebastien’s Status Report for 3/6/2021

This week I mostly spent time reading about how Linux and Mac handle virtual devices and mentally cataloguing OpenCV’s very large set of rather useful APIs. A lot of this was because we needed to make a precise decision about what we were doing, and at first there wasn’t much of a consensus, so we all spent some time reading and learning about each of the possibilities and how much time they would take. In particular, I was looking into Professor Kim’s suggestion of drawing straight onto the screen and then piping the result into Zoom (or, in theory, anywhere else), which means a virtual camera interface. On Linux, there’s a kernel module built on the Video4Linux (V4L2) API that creates loopback devices, which are more or less files that one program can write frame data into and that other programs can then read that data from. Mac has a dedicated library with far less documentation, and it seems much harder to implement, so we decided to target Linux and write the entire thing in C++. I also created an updated software architecture diagram for the system, which as of now will be a pipeline of 4 threads that use message-passing channels from the Boost library for synchronization.
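A sketch of the kind of channel that connects adjacent threads in such a pipeline (for example capture → CV model → drawing → loopback output, which is a guess at how the 4 threads divide the work). It is written with the standard library's `std::mutex` and `std::condition_variable` as a stand-in for the Boost channels named in the report. The capacity bound gives backpressure, so a slow stage makes faster upstream stages wait instead of letting frames pile up in memory.

```cpp
#include <cassert>
#include <condition_variable>
#include <cstddef>
#include <deque>
#include <mutex>
#include <optional>
#include <utility>

// Bounded multi-producer/multi-consumer channel between pipeline threads.
template <typename T>
class Channel {
public:
    explicit Channel(std::size_t capacity) : capacity_(capacity) { assert(capacity > 0); }

    // Blocks while the channel is full; returns false if it has been closed.
    bool send(T value) {
        std::unique_lock<std::mutex> lock(mutex_);
        not_full_.wait(lock, [&] { return closed_ || queue_.size() < capacity_; });
        if (closed_) return false;
        queue_.push_back(std::move(value));
        not_empty_.notify_one();
        return true;
    }

    // Blocks until a value arrives; returns std::nullopt once closed and drained.
    std::optional<T> recv() {
        std::unique_lock<std::mutex> lock(mutex_);
        not_empty_.wait(lock, [&] { return closed_ || !queue_.empty(); });
        if (queue_.empty()) return std::nullopt;
        T value = std::move(queue_.front());
        queue_.pop_front();
        not_full_.notify_one();
        return value;
    }

    // Wake every waiting thread so the pipeline can shut down cleanly.
    void close() {
        std::lock_guard<std::mutex> lock(mutex_);
        closed_ = true;
        not_empty_.notify_all();
        not_full_.notify_all();
    }

private:
    std::mutex mutex_;
    std::condition_variable not_empty_, not_full_;
    std::deque<T> queue_;
    std::size_t capacity_;
    bool closed_ = false;
};
```

Each stage would then run a loop like `while (auto item = in.recv()) { out.send(process(*item)); } out.close();`, so closing the first channel propagates shutdown down the whole pipeline.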