Over the past two weeks our project underwent a rather large change. The entire structure of the project had to change for us to use MediaPipe. My part of the project was implementing the erase feature, which I finished this past Thursday. I also added multiple colors and cleaned up some of the code to make it more reliable, specifically in how it reacts when the gesture turns on and off in the middle of detection. At first, the straight edge mode would not work unless the gesture was already being detected when the mode was entered.
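To illustrate the kind of robustness described above, here is a minimal Python sketch of a straight edge mode that does not care whether the gesture is already active when the mode is entered. It is not the project's actual code: the class `StraightEdgeTool`, its methods, and the per-frame `gesture_on` flag are all hypothetical names chosen for this example.

```python
class StraightEdgeTool:
    """Hypothetical straight-line mode driven by a per-frame gesture flag."""

    def __init__(self):
        self.anchor = None  # start point of the line in progress
        self.last = None    # latest fingertip position while the gesture is held
        self.lines = []     # committed (start, end) segments

    def update(self, gesture_on, point):
        """Call once per frame with the gesture state and fingertip position."""
        if gesture_on and point is not None:
            if self.anchor is None:
                # First frame with the gesture: start a line here, whether or
                # not the gesture was active when the mode was entered.
                self.anchor = point
            self.last = point
        else:
            self._commit()

    def _commit(self):
        """Finish the line in progress (if any) and reset the state."""
        if self.anchor is not None and self.last != self.anchor:
            self.lines.append((self.anchor, self.last))
        self.anchor = None
        self.last = None

    def preview(self):
        """Return the segment to draw this frame, or None if idle."""
        if self.anchor is None:
            return None
        return (self.anchor, self.last)
```

Because the anchor is set on the first frame in which the gesture is seen, the tool behaves the same whether the gesture starts before or after the mode is switched on, and a gesture that drops out mid-line simply commits what has been drawn so far.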

Zacchaeus’ Status Report for 5/8/2021

This week I worked on the presentation slides and the presentation video, and made a couple of tweaks to the code base. I also met with my partners to plan the video and divide up who does what, and together we worked out what the evaluation process should be. I finished my part of the video and my part of the poster. All that is left for this week is finishing the final paper.

Final Video Link

Final Presentation Slides

Sebastien’s Status Report for 5/8/2021

This week I worked primarily on the poster, presentation slides, and video while my team members made final improvements: Jenny improved the gesture detection and Zacchaeus added an eraser feature and additional colors. For my part of the video, I recorded a segment on our evaluation process and the tradeoffs we made as the semester unfolded. This week I will also add those sections to our final paper after making the necessary updates to it.

Jenny’s Status Report for 5/8/2021

For this past week, I have worked with my team to distribute the work for the final poster, paper, and video. I have completed my part of the final poster and will be in charge of filming a portion of the final video, where I will explain both the intro to our project and the flowchart and design specs of our final product. In the next few days, we will also begin working on the final paper.

Sebastien’s Status Report for 5/1/2021

Over the past two weeks I transitioned all of the existing code to use MediaPipe instead of the pipeline I wrote from scratch using Boost. Even though MediaPipe is written almost entirely in C++, only the Python API is documented, so a lot of the work was reading the source code to figure out what was actually going on and how to pipe the existing hand pose estimator into our own drawing subsystem. I finally got everything together on Tuesday, and since then I've added an input stream that reads key-presses so that we can add more drawing modes. When I did that, the pipeline came to a screeching halt. It turned out to be an issue with how MediaPipe synchronizes the pipelines built with it. After spelunking in the source again, I figured out how to properly synchronize the graph, which fixed the problem. Finally, I added a mode for drawing straight lines.

Jenny’s Status Report for 5/1/2021

For this week, my main focus was to improve the gesture detection, because in our most recent demo, if the hand was tilted or angled in any way, there were a lot of small gaps in the drawing where the gesture was not detected even when it should have been. I managed to greatly reduce those issues after a lot of testing with different hand landmarks. In the end, I had to switch to using Euclidean distance as opposed to just the x or y distance between finger landmarks, and I changed the threshold and which specific joints are used in the detection. Additionally, I tested other candidate hand gestures, such as the pinching gesture and the two-finger point gesture, as alternatives, but they do not seem to be as easy to make or as effective as our original one.
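The switch to Euclidean distance can be sketched as follows. This is a minimal illustration rather than the detector described above: the landmark indices follow MediaPipe Hands' 21-point layout, but the choice of joints (thumb tip and index PIP), the normalization by hand size, and the threshold value are all assumptions made for the example.

```python
import math

# Indices in MediaPipe Hands' 21-landmark layout.
WRIST = 0
THUMB_TIP = 4
INDEX_PIP = 6    # assumed joint choice, for illustration only
MIDDLE_MCP = 9


def euclidean(p, q):
    """Straight-line distance between two (x, y) points."""
    return math.hypot(p[0] - q[0], p[1] - q[1])


def gesture_active(landmarks, threshold=0.35):
    """Return True if the two chosen joints are close together.

    landmarks: sequence of 21 (x, y) pairs, or None when no hand is found.
    threshold: assumed cutoff, expressed as a fraction of the hand size.
    """
    if landmarks is None or len(landmarks) < 21:
        return False  # no hand detected means no gesture
    # Assumption: normalize by wrist-to-knuckle length so the test does not
    # depend on how far the hand is from the camera.
    hand_size = euclidean(landmarks[WRIST], landmarks[MIDDLE_MCP])
    if hand_size == 0:
        return False
    gap = euclidean(landmarks[THUMB_TIP], landmarks[INDEX_PIP])
    return gap / hand_size < threshold


def rotate(landmarks, degrees):
    """Rotate all landmarks about the origin, to simulate a tilted hand."""
    t = math.radians(degrees)
    c, s = math.cos(t), math.sin(t)
    return [(x * c - y * s, x * s + y * c) for x, y in landmarks]
```

Rotating the hand in the image plane changes the x and y components of the gap between two joints but leaves their Euclidean distance unchanged, so `gesture_active(rotate(hand, 60))` gives the same answer as `gesture_active(hand)`. A test based on x or y distance alone would not have that property.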

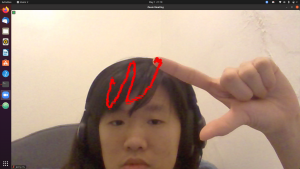

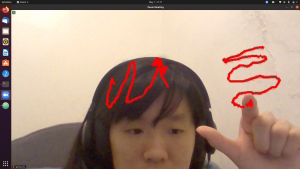

In the images above, we can see that even when the hand transitions naturally from the “proper” gesture to a tilted or angled version of it in the process of drawing, there are no gaps in the curves. The extra scribbling at the end is simply due to me having trouble keeping my fingers absolutely still while trying to take a screen capture.

Additionally, I worked on (and will keep working on) the final presentation slides with the rest of my team to have them ready by Sunday midnight.

Zacchaeus’ Status Report for 4/24/2021

This week and over the past few weeks I caught up on a lot of what I needed to get done. Last week I ported the code to C++ and completed everything on the schedule. This week the team has been trying to pivot to MediaPipe so that we no longer need a glove. For the first half of next week I will likely try to get MediaPipe working on my system as well. That way we should have an easier time creating build packages that work on Linux systems in general.

Jenny’s Status Report for 4/24/2021

For this past week, our group decided to pivot to integrating MediaPipe's hand tracking into Whiteboard Pal. Because the C++ library is rather convoluted and lacks any documentation, I first wrote all the gesture detection and finger tracking code in Python in a separate file. If we are later unable to integrate the C++ MediaPipe library, this Python file can also serve as a fallback, integrated using ros icp or another method. The Python gesture detector I wrote has near 100% accuracy when there is a hand on the webcam. The only issue is that if there is no hand at all and the background is complicated, it might detect some part of the background as a hand (though it will still likely report no gesture).

In addition to the Python code I wrote, I also managed to get the C++ MediaPipe hand tracking demo provided by the library to build and run successfully. Currently, I'm in the middle of porting the algorithm from my Python code into a C++ MediaPipe calculator to be integrated with Whiteboard Pal.