This week, I helped record the demo video and finished the poster.

My progress is on track.

Next week, I’ll be working on the final report.

Jessica Lew, Lauren Tan, Tate Arevalo

This week, because our project is essentially finished and fully integrated, I worked on our final video with the rest of my team. Specifically, I recorded videos of us demoing the entire system, as well as clips of the mechanism moving and the sensor array working. I have also begun recording the voice-over, which will be overlaid on the final edited video, since I am the one speaking in it. Here is a folder with all the clips my team and I gathered this week: Final Demo Videos.

I am on track as we are wrapping up the semester and our project is fully integrated and working.

Next week, I plan to finish my voice recordings for our final video so that it can be submitted by Monday night. I will also work on our final report so that we can submit it by its deadline.

Because we have finished our project, the most significant remaining risk is not finishing the demo video and final report on time. We are currently on track with our schedule. We have already filmed all of the footage needed for the demo video, so the only remaining tasks are editing the video and adapting our design report into the final report.

We have not made any significant changes this week.

This week, I gave our final presentation and worked on the demo video, helping to film and edit it.

My progress is on track. Next week, I plan to submit the demo video and work on our final report.

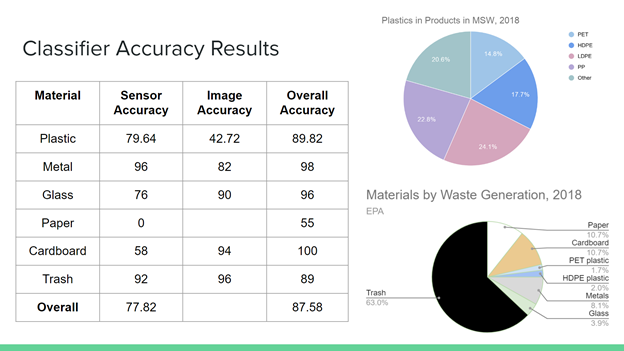

Moving into the final stretch of the semester, the most significant risk to our project is the accuracy of the image classifier and how it affects the accuracy of the overall classifier. The image classifier reaches high confidence levels for most of our materials; however, it does not seem able to classify paper as recyclable with any confidence. To mitigate this risk, we have decided to compute our overall classifier accuracy as a weighted combination of the per-material accuracy rates, weighting each material by how frequently it is thrown away. More specifically, we weight each material by its % of MSW (municipal solid waste) generated, which is the proportion of that material out of all waste produced. This makes our overall system accuracy a more realistic reflection of how the system would perform in the real world.
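The weighting described above amounts to a weighted average of per-material accuracies. The sketch below is a minimal illustration, not our actual calculation: it assumes the weights are renormalized over the materials we tested (their MSW shares need not sum to 1), and the numbers in the usage line are made up purely to show the arithmetic.

```python
def weighted_accuracy(accuracy: dict[str, float], msw_share: dict[str, float]) -> float:
    """Combine per-material accuracies into one overall figure, weighting each
    material by its share of municipal solid waste (MSW) generation."""
    missing = set(accuracy) - set(msw_share)
    if missing:
        raise ValueError(f"no MSW weight for: {sorted(missing)}")
    # Assumption: renormalize over the tested materials only, since their
    # MSW shares need not sum to 1.
    total_weight = sum(msw_share[m] for m in accuracy)
    return sum(accuracy[m] * msw_share[m] for m in accuracy) / total_weight


# Illustrative numbers only; these are NOT our measured accuracies or real MSW shares.
acc = {"paper": 0.50, "metal": 0.90}
share = {"paper": 0.75, "metal": 0.25}
print(round(weighted_accuracy(acc, share), 3))  # 0.6 (an unweighted mean would give 0.7)
```

The example shows why the choice matters: a material that makes up a large share of real waste pulls the overall figure toward its own accuracy, whereas equal weighting would treat every category as equally common.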

The most significant change we made this week was implementing the per-material weighting system described above. We believe it gives a more realistic overall accuracy rate for the entire system, as if it were in full operation on a college campus, than weighting each category equally would. One change we may make to the design next week is retraining our ResNet-101 model so that the image classifier treats HDPE plastics as recyclable (the pictures of HDPE plastics are currently in the trash training and validation folders). Retraining is necessary to improve our overall accuracy for plastics.
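Before retraining, the HDPE pictures have to be moved out of the trash folders. If the training script derives labels from folder names (as, for example, torchvision's `ImageFolder` does), relabeling is just a file move. This is a hypothetical sketch: the `<root>/<split>/<class>/` layout, the `train`/`val` split names, the `recyclable` target folder, and the `hdpe` filename prefix are all assumptions to adjust to the real dataset.

```python
import shutil
from pathlib import Path


def relabel(root: Path, prefix: str = "hdpe", src: str = "trash",
            dst: str = "recyclable", splits=("train", "val")) -> int:
    """Move images whose filename starts with `prefix` (case-insensitive) from
    the `src` class folder to the `dst` class folder in every split.
    Folder names and the prefix are hypothetical defaults. Returns files moved."""
    moved = 0
    for split in splits:
        src_dir = root / split / src
        dst_dir = root / split / dst
        if not src_dir.is_dir():
            continue
        dst_dir.mkdir(parents=True, exist_ok=True)
        # sorted() snapshots the directory listing before any files are moved.
        for path in sorted(src_dir.iterdir()):
            if path.is_file() and path.name.lower().startswith(prefix):
                shutil.move(str(path), str(dst_dir / path.name))
                moved += 1
    return moved
```

Applying the same move to both the training and validation splits keeps the validation labels consistent with what the retrained model is being taught.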

We are currently on track. We are preparing for our Final Presentation next week and are still mainly focused on increasing the overall accuracy of the system, though we are confident in our most recent accuracy data, which can be found here.

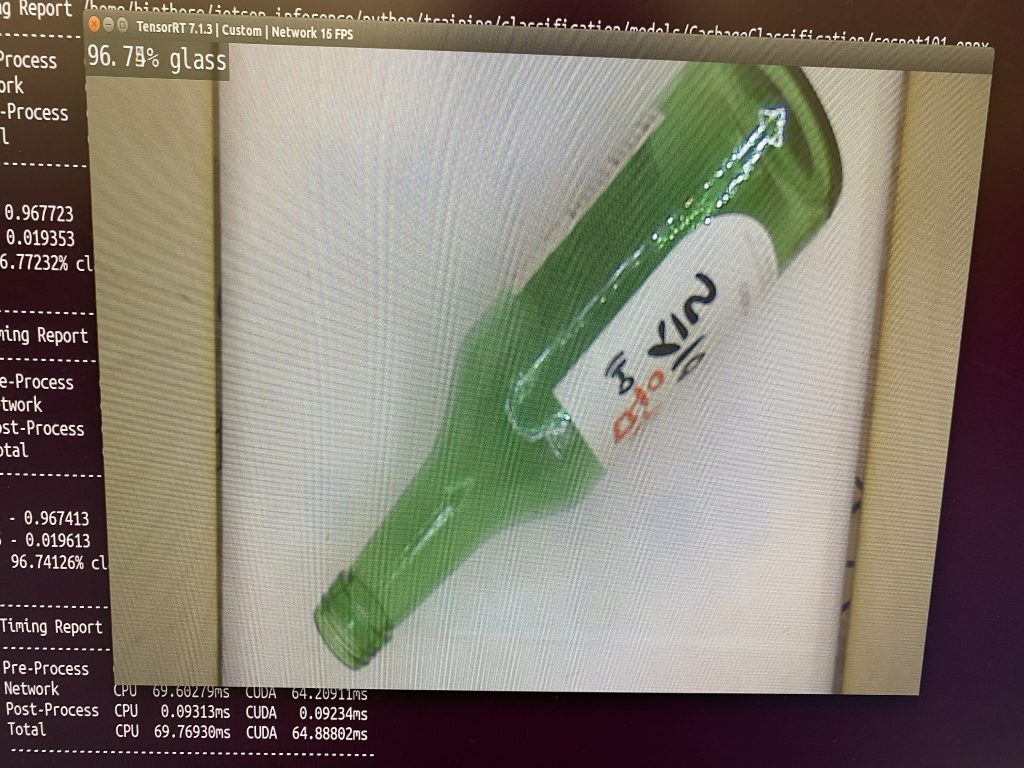

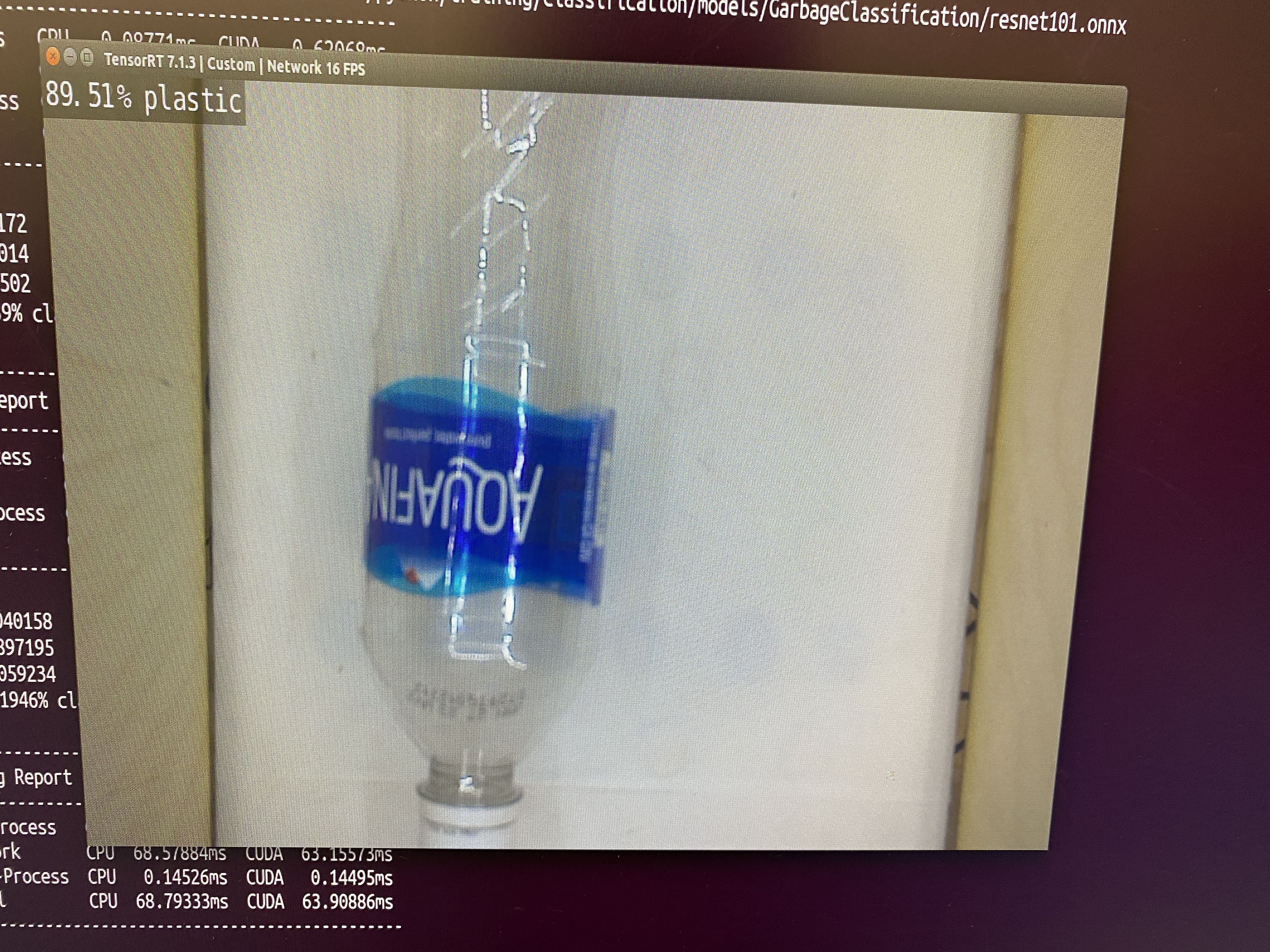

The images above show the image classifier identifying items with high confidence.

This week, I collected and recorded extensive data on the accuracy of our recycling system, specifically the accuracy of the sensor classifier, the image classifier, and the overall classifier. For the overall classifier, I conducted 100 trials per material category, using a variety of different items within each category. The categories are PET plastic, HDPE plastic, metal, glass, paper, cardboard, and trash. For the individual sensor and image classifiers, I conducted 50 trials per material category for each classifier separately. Here is the full data sheet with all of the recorded accuracy numbers: Data. I also recorded the accuracy of our ResNet model per epoch during training and graphed the results here.
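Turning the raw trials into per-material accuracies is simple bookkeeping. A minimal sketch, assuming each trial is logged as a `(material, succeeded)` pair (the real data sheet layout may differ); the second helper flags any category whose trial count differs from the plan of 100 trials for the overall classifier or 50 for each sub-classifier. Both function names are hypothetical.

```python
from collections import defaultdict


def accuracy_by_material(trials):
    """Tally (material, succeeded) trial records into
    {material: (successes, trials, accuracy)}."""
    counts = defaultdict(lambda: [0, 0])  # material -> [successes, trials]
    for material, succeeded in trials:
        counts[material][1] += 1
        if succeeded:
            counts[material][0] += 1
    return {m: (s, n, s / n) for m, (s, n) in counts.items()}


def off_plan_categories(summary, expected_trials):
    """Return {material: trial_count} for materials whose number of trials
    differs from the planned count."""
    return {m: n for m, (_, n, _) in summary.items() if n != expected_trials}
```

Checking trial counts before computing accuracies catches categories that were accidentally under- or over-sampled, which would otherwise make their accuracy figures less comparable.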

My progress is on track.

In the next week, I hope to continue improving our overall accuracy with the rest of my team and to help Jessica prepare for our Final Presentation. I also plan to work with my team to start recording videos of our functioning system for our upcoming video demo.

This week, Jessica and I retrained the image classifier using ResNet-101 instead of ResNet-50, since the ResNet-50 model's accuracy was not high enough. I updated the sensor classifier to reduce fluctuation between readings and make it more consistent. I also fixed the code for the switch that detects when someone closes the lid: previously, it was responding both when the lid was opened and when it was closed. I helped test the classifiers with real objects and adjusted some of the image classifier's confidence levels based on those tests. I also worked out the distribution of weights across categories that we used to calculate the accuracy of each classifier (sensor, image, and overall). We decided to use % of MSW generated, which is the percentage of all generated/produced waste that a given material makes up. For our slides, I calculated the accuracies of the different classifiers and plotted the accuracy per epoch of the ResNet-101 model.
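The two sensor-side fixes can be sketched as small stateful filters. This is a minimal sketch under assumptions, not our actual code: a moving median is one common way to damp fluctuating readings (our filter may differ), and `closed` is assumed to be a boolean already read from the lid switch. The class names are hypothetical.

```python
from collections import deque
from statistics import median


class MedianFilter:
    """Smooth a noisy sensor stream by reporting the median of the last
    `window` readings, which suppresses isolated spikes."""

    def __init__(self, window: int = 5):
        self.readings = deque(maxlen=window)  # oldest reading drops out automatically

    def update(self, value: float) -> float:
        self.readings.append(value)
        return median(self.readings)


class LidCloseDetector:
    """Return True only on the open-to-closed transition, so opening the lid
    is never mistaken for closing it. `closed` is the current switch state."""

    def __init__(self, initially_closed: bool = True):
        self.was_closed = initially_closed

    def update(self, closed: bool) -> bool:
        just_closed = closed and not self.was_closed
        self.was_closed = closed
        return just_closed
```

Reacting to the edge rather than the level is what separates "the lid is closed" from "the lid just closed". A real mechanical switch also bounces, so in practice it would typically be debounced before reaching a detector like this.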

My progress is on track.

Next week, I hope to start recording videos of our project for the video demo.

This week, I helped retrain the image classifier on ResNet-101, collect latency and accuracy data, synthesize the data, and update our final presentation slides.

My progress is on track. Next week, I hope to give our final presentation and continue retraining the image classifier (potentially on a larger image dataset or with a ResNet model that has more layers) to further improve accuracy.

This week, I captured many pictures of different types of trash and recyclables with the camera mounted in our bin. I photographed each item from many different angles and orientations, roughly 15 to 20 pictures per item. These pictures should help increase the accuracy of our image classifier because they directly represent what the camera will see when items are thrown into the bin. Our image classifier's accuracy was fairly low with the existing dataset alone, so adding pictures taken in our own environment should help considerably. Here is a folder of all the images our image classifier will be using, including both the pre-existing images and the ones I took: Images.
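Because each item is photographed 15 to 20 times, a random per-image train/validation split would put near-identical shots of the same item on both sides and make validation accuracy look better than it is. One way to avoid this is to split by item instead. A minimal sketch, assuming the item ID can be recovered from the filename (the `item_of` function and the naming scheme in the usage note are hypothetical):

```python
import random
from collections import defaultdict


def split_by_item(image_names, item_of, val_fraction=0.2, seed=0):
    """Split images into train/val so all photos of one physical item land in
    the same split. `item_of` maps an image name to its item ID."""
    groups = defaultdict(list)
    for name in image_names:
        groups[item_of(name)].append(name)
    items = sorted(groups)
    random.Random(seed).shuffle(items)  # fixed seed keeps the split reproducible
    n_val = max(1, round(len(items) * val_fraction))
    val_items = set(items[:n_val])
    train = [n for i in items if i not in val_items for n in groups[i]]
    val = [n for i in items if i in val_items for n in groups[i]]
    return train, val
```

For files named like `can03_07.jpg`, `item_of` could be `lambda n: n.split("_")[0]`.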

My progress is on schedule, as every subsystem has been integrated.

In the next week, I hope to create a data sheet for my team to record accuracy data for our subsystems and the entire system. I plan to work with my team to begin collecting data on our system's accuracy by running it many times on different items and recording whether each outcome was successful.
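A plain CSV file is one easy format for such a data sheet, since every teammate can append to it and it opens directly in a spreadsheet. This is a hypothetical sketch: the column names and the `log_trial` helper are assumptions, not an existing team tool.

```python
import csv
from pathlib import Path

# Hypothetical column layout for the accuracy data sheet.
FIELDS = ["item", "material", "classifier", "expected", "outcome", "correct"]


def log_trial(sheet: Path, item: str, material: str, classifier: str,
              expected: str, outcome: str) -> None:
    """Append one trial to the CSV data sheet, writing the header row first
    if the file does not exist yet."""
    new_file = not sheet.exists()
    with sheet.open("a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if new_file:
            writer.writeheader()
        writer.writerow({
            "item": item,
            "material": material,
            "classifier": classifier,  # e.g. "sensor", "image", or "overall"
            "expected": expected,      # bin the item should end up in
            "outcome": outcome,        # bin the system actually chose
            "correct": expected == outcome,
        })
```

A call such as `log_trial(Path("accuracy.csv"), "water bottle", "PET", "overall", "recycle", "recycle")` records one successful trial; the `correct` column can then be tallied per material and per classifier.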

This week, I helped find additional garbage image datasets, relabel images in our dataset, and retrain the image classifier. I also wrote short scripts to automate these steps, which should speed up the process next week. So far, we have been training on the Jetson Nano, but ideally we would use AWS. I set up an AWS instance this week but could not figure out how to retrain the ResNet-50 model on our dataset.

My progress is on track. Our entire project is integrated, and we have already added more pictures to our dataset. Next week, I hope to continue updating the image dataset, retraining the image classifier, and testing the overall accuracy. I will also continue experimenting with AWS; if that does not work, training on the Jetson Nano is sufficient, though slightly inconvenient.