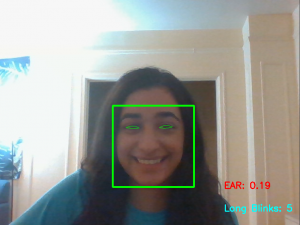

Since the previous status report, I worked with my teammates to complete the Design Report, and I extended and tested our FocusEd codebase on my laptop. My first addition was integrating my eye classification code with Heidi’s HOG facial detection code. An image of this is shown below. Although I completed this integration earlier than our schedule called for, it made sense to integrate the early versions of the two components as soon as possible so that we could proceed to training and testing.

Next, I worked on training our eye classification model. Every individual has a different eye aspect ratio (EAR) threshold, which is the EAR value our algorithm uses to decide whether an individual’s eyes are closed, so we wanted to train a model that accurately determines whether an individual’s eyes are closed. I am building a Support Vector Machine (SVM) classifier to detect long eye blinks as a pattern of EAR values in a short temporal window, as described in T. Soukupova and Jan Cech’s research paper “Real-time eye blink detection using facial landmarks” (the paper that introduced EAR). I am doing this in Jupyter Notebook, which is new to me, so I spent time this week setting it up and learning how to use it. The EAR value of each frame, together with the EAR values from the larger temporal window around that frame, will be given as input to the fully trained SVM model.
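To make the EAR and temporal-window idea concrete, here is a minimal Python sketch, not the actual FocusEd code. It assumes each eye is given as six (x, y) landmarks p1..p6 ordered as in the Soukupova and Cech paper, and computes EAR = (|p2 - p6| + |p3 - p5|) / (2 |p1 - p4|). The function names `eye_aspect_ratio` and `window_features` are hypothetical, and `radius=6` (a 13-value window, the size the paper uses for 30 fps video) is an adjustable assumption. Training the SVM on these feature vectors is not shown.

```python
import math


def eye_aspect_ratio(eye):
    """Return the EAR for one eye.

    `eye` is a sequence of six (x, y) landmarks p1..p6:
    p1 and p4 are the horizontal eye corners, p2/p3 lie on the
    upper lid and p6/p5 on the lower lid (assumed ordering).
    """
    p1, p2, p3, p4, p5, p6 = eye
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)


def window_features(ear_series, radius=6):
    """Build one feature vector per frame that has a full window.

    Each vector holds the EAR of a frame plus the EARs of its
    `radius` neighbours on each side (2 * radius + 1 values).
    Frames too close to the start or end of the series are skipped.
    """
    width = 2 * radius + 1
    return [
        list(ear_series[i:i + width])
        for i in range(len(ear_series) - width + 1)
    ]
```

Each vector returned by `window_features` would be one input sample for the classifier, labeled as a long blink or not depending on the frame at its center.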

Finally, I made my eye classification code simpler to run with respect to where the video input is located on the device the code runs on, in anticipation of beginning Xavier testing this Tuesday. I am currently slightly behind schedule, since I had hoped to have already tested my code on the Xavier, but completing the integration step mentioned above earlier than intended makes up for this. In the next week, I hope to finish training and begin testing on the Xavier on Tuesday.

Sources

T. Soukupova and J. Cech. Real-time eye blink detection using facial landmarks. In 21st Computer Vision Winter Workshop (CVWW 2016), pages 1–8, 2016. https://vision.fe.uni-lj.si/cvww2016/proceedings/papers/05.pdf