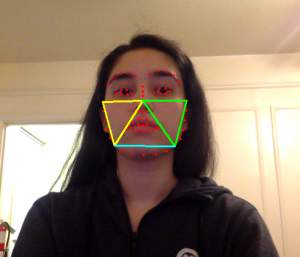

This week I worked with my team members on the design presentation. From last week, I was able to follow along with an existing example to get a simple face detection python script to work. The script uses OpenCV to read in the frames from my laptop webcam and using dlib’s HoG Face Detector. Additionally, with the face detector working I was able to also continue and follow a facial landmark example using dlib shape predictor. From observation, detection works pretty well, head pose does effect detection, if I look down or up the bounding box no longer appears over my face. The dataset that dlib’s face detector uses is the LFW (Labeled Faces in the Wild). This dataset has 2585 images. Last week I was looking at the CMU Multi-pie face dataset and was hoping to train the dlib face detector with this one instead. This dataset is extremely large with about 750,000 images of 337 people with 19 illumination conditions. As I’m not sure if we will be able to use this dataset (since we would require a license), this data set and the Yale face database did help consider how we would label our own dataset, to build upon the LFW dataset. We can classify images as either center-light, left-light, or right-light which is what the Yale face database does, but we could also classify as bright-light, normal-light, and low-light. I also looked at an example of face landmarking training that uses dlib for a custom shape predictor. Both code examples were uploaded to our team’s GitHub repo.

Progress is still on schedule. We ordered our equipment on Monday and in the meantime I am trying to play around with pre-trained models to see how the face detection would work with landmarking and continue to research how we will be training our models. Design presentation is on Monday/Wednesday and based on the feedback we receive we can start writing the design report.

This next week I hope to use this face detection script but with a different dataset to practice training a model with a new dataset. The dataset I plan to train with is the DriveFacce or the Yale face database. Additionally we can hopefully build on top of these and create our custom dataset following this format, especially when considering different light conditions. Also this next week, I will be working with my teammates on the design report.

Sources:

Face Detection Example

https://learnopencv.com/face-detection-opencv-dlib-and-deep-learning-c-python/

Face Landmark Model

https://github.com/davisking/dlib-models/blob/master/shape_predictor_68_face_landmarks.dat.bz2

Face Datasets

http://vis-www.cs.umass.edu/lfw/

https://search.wellspringsoftware.net/opportunity/FBX2:4283?qd=CMU%20Multi-pie

http://adas.cvc.uab.es/elektra/enigma-portfolio/cvc11-drivface-dataset/

Dlib official Face Landmark Example:

http://dlib.net/train_shape_predictor.py.html

http://dlib.net/imaging.html#shape_predictor

Training Example:

http://dlib.net/train_object_detector.py.html