One risk that could jeopardize our success is the GPU acceleration work needed to remove delay from the system, since the system has to run as close to real time as possible. After integrating the head pose and eye classification code, we noticed a small delay. Our plan is to first get the pipeline as close to real time as we can on the CPU, and then add GPU acceleration using CUDA Python, following the NVIDIA walkthrough. Additionally, during testing we have been displaying the output on a monitor to see how our algorithms are working, so removing the cv2.imshow calls should also reduce the delay. We will most likely still have some delay at the interim demo, but we are working on minimizing it by monitoring the frames per second.
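As a rough illustration of how the frames per second could be tracked while tuning the CPU pipeline, here is a minimal sketch of a rolling FPS meter. The `FpsMeter` class, its `window` parameter, and the `tick`/`fps` method names are hypothetical and not part of our actual code; the idea is only to call `tick()` once per processed frame and read `fps()` periodically.

```python
import time
from collections import deque


class FpsMeter:
    """Rolling frames-per-second estimate over the last `window` frames.

    Hypothetical helper for illustration; names and defaults are assumptions.
    """

    def __init__(self, window=30):
        # Keep only the most recent timestamps so the estimate tracks
        # current performance rather than the whole run.
        self.stamps = deque(maxlen=window)

    def tick(self, now=None):
        # Record the time at which a frame finished processing.
        # `now` can be passed explicitly, which makes the meter easy to test.
        self.stamps.append(time.perf_counter() if now is None else now)

    def fps(self):
        # N timestamps span N - 1 frame intervals.
        if len(self.stamps) < 2:
            return 0.0
        elapsed = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / elapsed if elapsed > 0 else 0.0
```

In a capture loop, `tick()` would be called after the head pose and eye classification steps finish for each frame. Comparing the reading with and without the debug display window enabled would show how much of the delay comes from drawing to the monitor.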

There have not been any significant changes to our existing design since last week; we have been focused on integration. To improve the integrated design, the calibration for head pose will resemble the calibration for eye classification. It will use the ratio of the cheek areas on each side of the face to determine whether the driver is looking right, left, forward, etc.
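The cheek-area idea can be sketched roughly as follows. This is only an illustration under several assumptions: each cheek region is given as a list of (x, y) landmark points forming a polygon, the calibration step produces a single `baseline` ratio while the driver looks forward, and the `tolerance` value of 0.25 is a placeholder. All function names are hypothetical, and the left/right labels are in image coordinates, so they may need to be swapped depending on camera mirroring.

```python
def polygon_area(points):
    """Area of a simple polygon from its (x, y) vertices (shoelace formula)."""
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def cheek_ratio(left_cheek, right_cheek):
    """Ratio of left cheek area to right cheek area."""
    right_area = polygon_area(right_cheek)
    if right_area == 0:
        # Right cheek not visible at all; treat as an extreme ratio.
        return float("inf")
    return polygon_area(left_cheek) / right_area


def classify_direction(ratio, baseline, tolerance=0.25):
    """Compare the current ratio with the calibrated forward-facing baseline.

    Assumption: a larger left cheek (in image coordinates) means the face
    is turned toward image-right. Swap the labels if the camera is mirrored.
    """
    relative = ratio / baseline
    if relative > 1 + tolerance:
        return "right"
    if relative < 1 - tolerance:
        return "left"
    return "forward"
```

Dividing by the calibrated baseline means a driver whose face is not perfectly symmetric in the camera view is still classified as looking forward in their neutral position. This sketch only covers left, right, and forward; other directions would need an additional measurement.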

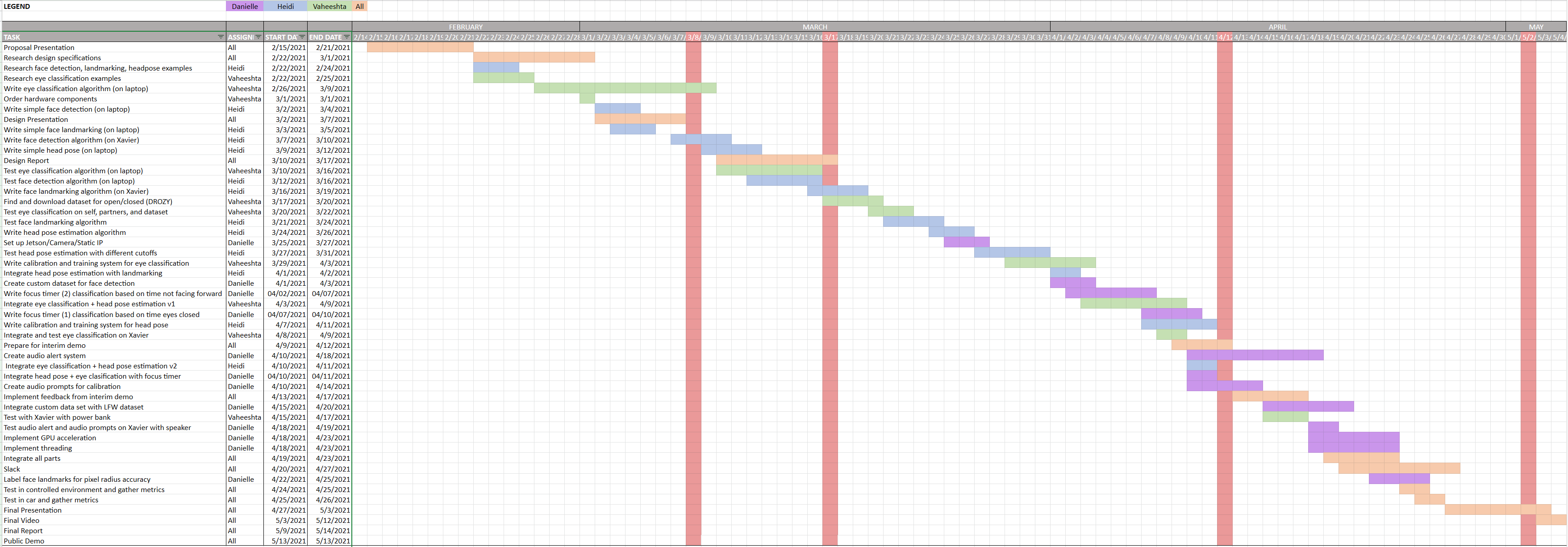

We made some changes to the Gantt chart. We updated it with the actual dates on which we integrated the two algorithms and added tasks to ensure our MVP will be ready to test in a car setting.

Our updated Gantt chart is below.