Progress

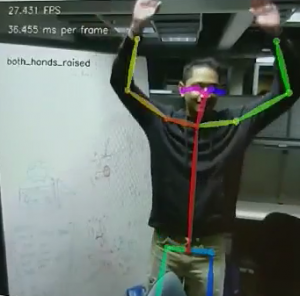

This week, I explored machine learning methods for gesture recognition. We originally used heuristics to recognize gestures, but found that they often fail when the user is not directly facing the camera or is standing at the left or right edge of the camera view. For example, an arm extended straight forward at the right edge of the frame shows a much larger difference between shoulder X and wrist X than the same gesture at the center of the frame.
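The edge-of-frame effect can be illustrated with a small sketch. It assumes an ideal pinhole camera, a focal length of 600 px, and a 0.6 m arm, none of which come from our actual setup; the function names are hypothetical.

```python
def project_x(x_m: float, z_m: float, focal_px: float = 600.0) -> float:
    """Horizontal offset (pixels from image center) of a 3D point under a pinhole model."""
    return focal_px * x_m / z_m


def shoulder_wrist_dx(shoulder_x_m: float, shoulder_z_m: float, arm_len_m: float = 0.6) -> float:
    """Pixel gap between shoulder X and wrist X when the arm points straight at the camera."""
    # Assumption: the wrist has the same lateral offset as the shoulder but is closer to the camera.
    wrist_z_m = shoulder_z_m - arm_len_m
    return project_x(shoulder_x_m, wrist_z_m) - project_x(shoulder_x_m, shoulder_z_m)


# Same gesture, 3 m from the camera, at the center and 1.5 m to the side.
center_dx = shoulder_wrist_dx(shoulder_x_m=0.0, shoulder_z_m=3.0)
edge_dx = shoulder_wrist_dx(shoulder_x_m=1.5, shoulder_z_m=3.0)
print(center_dx, edge_dx)
```

Under these assumptions the gap is zero at the center and roughly 75 px at the edge, so a fixed threshold on shoulder X minus wrist X treats the same gesture differently depending on where the user stands.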

To collect more training and testing data, I improved our data collection pipeline. It builds features by computing the x and y distances from each keypoint to the chest and normalizing them, producing a 22-feature vector. The system has a warm-up period of 200 frames before it starts recording, then records the keypoints from every 5th frame as training data.
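A minimal sketch of this feature extraction and frame sampling is below. The keypoint names, the choice of 11 keypoints, and the normalization (dividing by the largest absolute distance in the frame) are assumptions for illustration; the real pipeline may differ.

```python
from typing import Dict, Iterable, Iterator, List, Tuple

Point = Tuple[float, float]

# Hypothetical keypoint names: 11 keypoints x 2 coordinates = 22 features.
KEYPOINT_NAMES = [f"kp_{i}" for i in range(11)]


def to_features(keypoints: Dict[str, Point], chest: Point) -> List[float]:
    """Return [dx_0, dy_0, dx_1, dy_1, ...] relative to the chest, scaled into [-1, 1]."""
    dx = [keypoints[name][0] - chest[0] for name in KEYPOINT_NAMES]
    dy = [keypoints[name][1] - chest[1] for name in KEYPOINT_NAMES]
    # Assumed normalization: divide by the largest absolute distance (guarding against zero).
    scale = max(max(abs(v) for v in dx + dy), 1e-9)
    return [v / scale for pair in zip(dx, dy) for v in pair]


def sample_frames(frames: Iterable, warmup: int = 200, stride: int = 5) -> Iterator:
    """Skip the first `warmup` frames, then yield every `stride`-th frame."""
    for i, frame in enumerate(frames):
        if i >= warmup and (i - warmup) % stride == 0:
            yield frame


# Usage with made-up pixel coordinates.
example = {name: (float(i), 2.0 * i) for i, name in enumerate(KEYPOINT_NAMES)}
features = to_features(example, chest=(0.0, 0.0))
recorded = list(sample_frames(range(220)))
```

Because every distance is measured from the chest and rescaled, the feature vector does not depend on where in the image the user's chest appears.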

Because we had little data (~1000 examples), I chose SVM (support vector machine) models, which promise to converge with a small amount of training data. I built infrastructure for multiclass SVMs that takes in a config file of hyperparameters and feature names, trains multiple models, and measures the average performance. Online research suggested that multiclass SVMs were not reliable, so I also implemented infrastructure for training and running one-versus-rest (OVR, also called one-versus-all) SVM models.
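The sketch below shows one way such config-driven training could look. It assumes scikit-learn, uses synthetic stand-in data, and invents the config keys (`kernel`, `C`, `strategy`); it is not our actual infrastructure, and feature-name selection is left out for brevity.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.multiclass import OneVsRestClassifier
from sklearn.svm import SVC


def build_model(config: dict):
    """Build a multiclass SVC or a one-vs-rest wrapper from a (hypothetical) config dict."""
    svc = SVC(kernel=config["kernel"], C=config["C"])
    if config["strategy"] == "ovr":
        return OneVsRestClassifier(svc)  # one binary SVM per class
    return svc  # scikit-learn's SVC handles multiclass internally via one-vs-one


def average_test_accuracy(X, y, config: dict, runs: int = 5) -> float:
    """Train on `runs` different random splits and average the test accuracy."""
    scores = []
    for seed in range(runs):
        X_tr, X_te, y_tr, y_te = train_test_split(
            X, y, test_size=0.2, random_state=seed, stratify=y
        )
        model = build_model(config).fit(X_tr, y_tr)
        scores.append(model.score(X_te, y_te))
    return float(np.mean(scores))


# Synthetic stand-in for the 22-feature vectors (0 = left, 1 = straight, 2 = right).
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 100)
X = rng.normal(size=(300, 22)) + y[:, None]  # shift each class so they differ

config = {"kernel": "poly", "C": 10, "strategy": "multiclass"}
acc_multi = average_test_accuracy(X, y, config)
acc_ovr = average_test_accuracy(X, y, {**config, "strategy": "ovr"})
print(acc_multi, acc_ovr)
```

Keeping the strategy in the config makes it possible to compare the multiclass and OVR variants on identical splits.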

Because the model only recognizes teleop commands, it is only run when the heuristics detect that an arm is raised horizontally. I first tried a multiclass SVM to classify the keypoint vector as left, straight, or right.

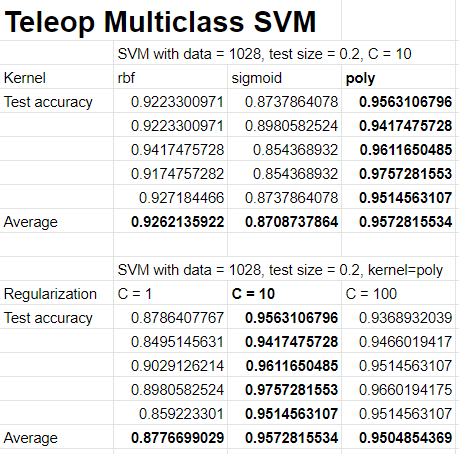

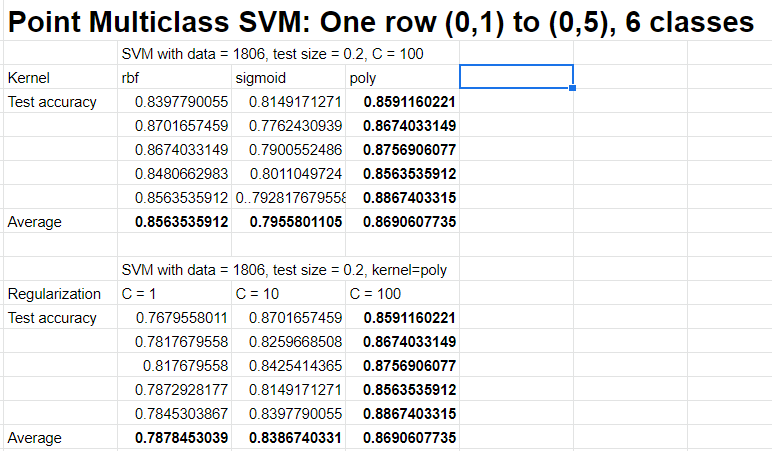

I tried the following hyperparameter combinations, with each row representing one run.

The multiclass SVM worked well for the teleop commands, with an average test accuracy of 0.9573 over 5 runs. The best parameters were a polynomial kernel and regularization constant C = 10. The strength of the regularization is inversely proportional to C, and the penalty is a squared L2 penalty. In testing with live gestures, the model worked reasonably well and handled many edge cases, such as the user not directly facing the camera or standing at the edge of the room.
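For reference, the role of C can be read off the standard soft-margin SVM objective. This is the textbook formulation, not something specific to our implementation; $\phi$ denotes the feature map induced by the kernel and $\xi_i$ are the slack variables.

```latex
\min_{w,\,b,\,\xi} \quad \frac{1}{2}\lVert w \rVert_2^2 + C \sum_{i=1}^{n} \xi_i
\qquad \text{subject to} \qquad
y_i \left( w^\top \phi(x_i) + b \right) \ge 1 - \xi_i, \quad \xi_i \ge 0 .
```

A larger C puts more weight on the margin violations relative to the squared L2 term, which means weaker regularization.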

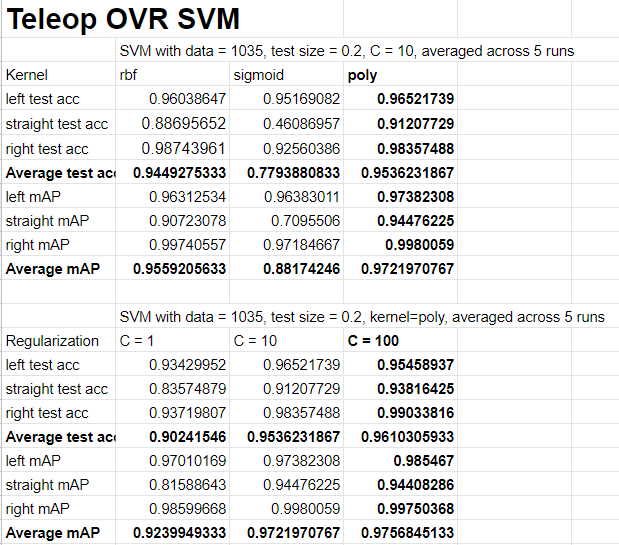

I tested the OVR SVM method on teleop commands, building one SVM each for detecting left, straight, and right. The test accuracy and mean average precision are averaged over 5 runs. I saw slightly higher accuracy with a polynomial kernel and C = 100, which gave a test accuracy of 0.961. It was interesting to see a drop-off in performance for the straight data. There may have been some errors in collecting that data, so I may try to collect more.

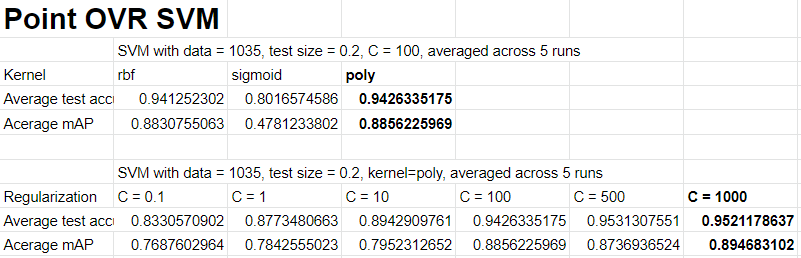

SVMs worked well for teleop data, so I wanted to try them for pointing data as well. The idea is to divide the room into 1 ft by 1 ft bins and use a model to predict which bin a user is pointing to based on the user's keypoints. I didn't have enough time to collect data for the entire room, so I only collected data for one row of the room. Here are the results of the experiments I tried:
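As a sketch of the binning idea, the helper below maps a floor position in feet to a class label and back. The coordinate origin, the row-major labeling, and the function names are assumptions for illustration.

```python
from typing import Tuple


def bin_index(x_ft: float, y_ft: float, cols: int, rows: int, bin_ft: float = 1.0) -> int:
    """Map a floor position to a row-major bin label in [0, rows * cols)."""
    col = int(x_ft // bin_ft)
    row = int(y_ft // bin_ft)
    if not (0 <= col < cols and 0 <= row < rows):
        raise ValueError("position is outside the room")
    return row * cols + col


def bin_center(label: int, cols: int, bin_ft: float = 1.0) -> Tuple[float, float]:
    """Return the (x, y) center in feet of the bin with the given label."""
    row, col = divmod(label, cols)
    return ((col + 0.5) * bin_ft, (row + 0.5) * bin_ft)


# A single row of ten bins, matching the one-row data collected so far.
one_row_label = bin_index(2.3, 0.4, cols=10, rows=1)
# The same scheme extended to three rows.
grid_label = bin_index(2.3, 1.4, cols=10, rows=3)
```

With a single row the label is just the column index, and the number of classes grows as rows times columns once more of the room is covered.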

The results for pointing were also good at 0.95 test accuracy, but the spatial data only spans one dimension; it would be interesting to see the performance across two dimensions. It is also interesting that the performance gap between OVR and multiclass is more noticeable with more classes, as it is harder for one SVM to optimize many decision boundaries.

I also worked on documentation for how OpenPose, the Xavier board, and the Roomba communicate.

Deliverables next week

I want to try point detection with more than one row of the room. I'm not sure whether SVM methods will scale to cover the entire room or whether another neural network model is needed.

Higher-quality data is also needed, so I will continue to gather data. I want to explore other feature engineering techniques, such as normalizing the points while still letting the model know where the user is in the frame. I also want to experiment with using keypoints from multiple camera perspectives to classify gestures.

Schedule

On schedule. Gesture recognition needs more time than originally allocated, but this is fine because we have to wait for the mapping to be finalized before proceeding.