Progress

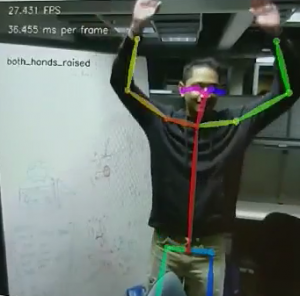

I started this week by finishing up the design presentation. I hooked up the camera system, image processing system, webserver, and robot to finish the first version of our MVP. We are now able to control the robot using teleop and also gesture for the robot to go home.

However, recognizing gestures with heuristics is unreliable when the user is not facing the camera directly. So, I built a data collection pipeline to gather training data for an SVM classifier that predicts whether a teleop gesture means the person is pointing left, straight, or right. The features are the normalized distances from each keypoint to the person’s chest, and the label is the gesture. We have started collecting data, with around 350 datapoints so far, and will test models next week.
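
To make the feature definition concrete, here is a minimal sketch of how one pose could be turned into a feature vector. It is not our actual pipeline code. The keypoint indices (neck keypoint used as the chest, plus the two shoulders) and the choice of shoulder width as the normalizer are assumptions made for illustration, and `gesture_features` is a hypothetical name.

```python
import math

# Assumed keypoint indices: the neck keypoint stands in for the chest,
# and the two shoulders define the normalization scale.
CHEST, R_SHOULDER, L_SHOULDER = 1, 2, 5


def gesture_features(keypoints):
    """Return the distance from every keypoint to the chest, divided by
    shoulder width so the features do not depend on how far the person
    stands from the camera.

    keypoints: list of (x, y) pixel coordinates, one per body keypoint.
    """
    chest_x, chest_y = keypoints[CHEST]
    scale = math.dist(keypoints[R_SHOULDER], keypoints[L_SHOULDER])
    if scale == 0:
        raise ValueError("shoulder keypoints coincide; cannot normalize")
    return [math.hypot(x - chest_x, y - chest_y) / scale for x, y in keypoints]


# Toy pose with six keypoints (made-up coordinates, not collected data).
pose = [(10, 6), (10, 10), (7, 10), (4, 10), (1, 10), (13, 10)]
features = gesture_features(pose)
```

Each feature vector, paired with its left/straight/right label, could then be passed to an SVM implementation such as scikit-learn's `sklearn.svm.SVC`.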

There are also some issues with the Roomba's built-in docking command, so we will have to override it: we will use our mapping to navigate close to home before activating the Roomba command that slowly connects to the charging port.
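
The two-stage docking idea can be sketched as a small decision function. This is only an illustration under stated assumptions: the handoff radius, the action names, and `next_docking_action` are all hypothetical, and positions are assumed to be (x, y) coordinates in meters in our map frame.

```python
import math

# Hypothetical distance (in meters) at which we stop using our own
# navigation and hand control over to the Roomba's docking behavior.
HANDOFF_RADIUS_M = 0.5


def next_docking_action(robot_xy, home_xy, handoff_radius=HANDOFF_RADIUS_M):
    """Decide which stage of the go-home behavior should run next."""
    distance = math.dist(robot_xy, home_xy)
    if distance > handoff_radius:
        # Still far from the dock: keep driving with our own mapping.
        return "navigate_to_home"
    # Close enough: let the Roomba's docking command do the final approach.
    return "seek_dock"
```

The right handoff radius would need to be tuned experimentally, since it depends on how close the robot must be for the built-in docking command to work reliably.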

In addition, I installed our second camera. Processing two camera streams halves our FPS, since we have to run OpenPose on twice as many frames. So, we are rethinking our plan to use multiple cameras and seeing what we can do with two. Ideally, we can still keep a side camera so that there is no single angle at which a gesture is perpendicular to every camera in the room. I will have to keep experimenting with different angles.
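
The FPS trade-off is simple arithmetic: if pose estimation can process a fixed number of frames per second in total, that budget is split evenly across the cameras. A small sketch follows, where the throughput figure is a made-up placeholder rather than a measurement from our system.

```python
def per_camera_fps(pose_throughput_fps, num_cameras):
    """Frames per second each camera gets when one pose-estimation
    pipeline is shared evenly across all camera streams."""
    if num_cameras < 1:
        raise ValueError("need at least one camera")
    return pose_throughput_fps / num_cameras


# Placeholder throughput, chosen only to illustrate the halving.
one_camera = per_camera_fps(10.0, 1)
two_cameras = per_camera_fps(10.0, 2)
```

Under this assumption of an even split, every added camera lowers the per-camera frame rate proportionally, which is why the number of cameras is worth reconsidering.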

I have also been working on the design report.

Deliverables next week

1. First pass of the gesture classification models

2. Finalize camera positioning in the room

Schedule

On Schedule.