Progress

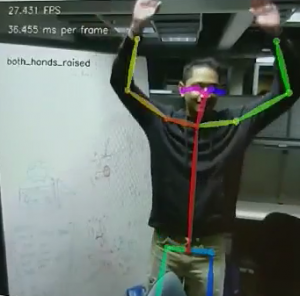

Rama and I got OpenPose running on the Xavier board this week. This allowed me to start playing around with the results OpenPose provides.

Gestures:

After first running OpenPose on videos and on images we took manually, I was able to classify our three easiest gestures with heuristics: left hand up, right hand up, and both hands up. Once we got our USB webcam working with the Xavier board (which required reinstalling OpenCV), I started working on classifying the teleop drive gestures (right hand pointing forward, left, and right). I implemented a base version using heuristics, but the results can be iffy if the user is not facing the camera directly. I hope to collect some data next week and build an SVM classifier for these more complex gestures.
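As a rough illustration of what a heuristic for the three easy gestures can look like, here is a minimal sketch. It assumes OpenPose's BODY_25 keypoint layout (right shoulder 2, right wrist 4, left shoulder 5, left wrist 7), with each keypoint given as (x, y, confidence) in image coordinates where y grows downward. The confidence threshold, the function names, and the gesture labels are made up for this example and are not necessarily what our code uses.

```python
# Sketch of a "hand up" heuristic on one person's OpenPose keypoints.
# Assumes BODY_25 ordering; each keypoint is (x, y, confidence).
R_SHOULDER, R_WRIST = 2, 4
L_SHOULDER, L_WRIST = 5, 7

MIN_CONFIDENCE = 0.3  # assumed cutoff for trusting a keypoint


def hand_raised(keypoints, shoulder, wrist):
    """Return True if the wrist is above the shoulder in the image."""
    _, shoulder_y, shoulder_conf = keypoints[shoulder]
    _, wrist_y, wrist_conf = keypoints[wrist]
    if shoulder_conf < MIN_CONFIDENCE or wrist_conf < MIN_CONFIDENCE:
        return False  # keypoint missing or unreliable
    # Image y grows downward, so "above" means a smaller y value.
    return wrist_y < shoulder_y


def classify_gesture(keypoints):
    """Map one person's keypoints to one of the three easy gestures."""
    right = hand_raised(keypoints, R_SHOULDER, R_WRIST)
    left = hand_raised(keypoints, L_SHOULDER, L_WRIST)
    if left and right:
        return "both_hands_up"
    if left:
        return "left_hand_up"
    if right:
        return "right_hand_up"
    return "none"
```

A check like this only compares vertical positions, which is part of why it works for raised hands but gets unreliable for the pointing gestures when the user is turned away from the camera.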

OpenPose Performance on Xavier board:

Rama and I worked on optimizing the performance of OpenPose on the Xavier board. With the default configuration, we were getting around 5 FPS. Activating 4 more CPU cores on the board brought us to 10 FPS. We also found an experimental tracking feature that limits the number of people to 1 but brought us to 30 FPS (0.033 s per image). We were expecting OpenPose to be the slowest part of the pipeline, but the optimizations made it much faster than we expected. This should help keep us under our response-time requirement of 1.9 s.
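To put the frame rates in terms of our latency requirement, the per-frame time is just 1 / FPS. The short sketch below converts the three measured rates and shows what fraction of the 1.9 s budget one OpenPose frame would use. The configuration labels and names are only for illustration, and this counts OpenPose alone, not the rest of the pipeline.

```python
# Convert the measured OpenPose frame rates into per-frame latency and
# compare against the 1.9 s response-time requirement.
RESPONSE_BUDGET_S = 1.9


def frame_time_s(fps):
    """Seconds spent per frame at a given frame rate."""
    return 1.0 / fps


measurements = [
    ("default configuration", 5),
    ("4 more CPU cores", 10),
    ("tracking, max 1 person", 30),
]

for label, fps in measurements:
    seconds = frame_time_s(fps)
    share = seconds / RESPONSE_BUDGET_S
    print(f"{label}: {seconds:.3f} s per frame ({share:.1%} of budget)")
```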

OpenPose running with the classified gesture: “Both hands raised”

Deliverables next week

1. Help the team finish teleop control of the robot with gestures. Integrate the gestures with the webserver.

2. Collect data for a model-based method of gesture classification.

3. Experiment with models for gesture classification.

Schedule

On schedule to finish the MVP!