This past week I thought a lot about node setup and the relationships between all of the moving parts in the interaction layer.

I will begin by discussing node setup. While we don’t plan to incorporate new device commissioning into our project, we do need some way of bootstrapping a node, at least for our own development. To that end, I wrote some bootstrapping scripts that obtain the appropriate code, set up config files, install the necessary libraries, and set up the database.

I also spent some time designing the database and its schema, which can be found in more detail here (note that while unlikely, this link could change in the future; if so, see the readme here). Most importantly, I defined what the tables will look like and what datatypes will exist in each table.

With regard to the master process, I wrote a preliminary Python executable that checks in a loop whether the broker and webapp are running and, if not, starts them. While I think the master may end up having to do a few more things, I think that for the most part this will be its sole purpose.
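As a rough illustration of that check-and-restart loop, here is a minimal sketch using only the standard library. The command lines in `SERVICES`, the polling interval, and the function names are all placeholders I made up for the example; they are not the actual master executable.

```python
import subprocess
import time

# Hypothetical launch commands; the real broker / webapp commands would go here.
SERVICES = {
    "broker": ["./run_broker.sh"],
    "webapp": ["./run_webapp.sh"],
}


def ensure_running(commands, procs):
    """Start every service that has no live process; return the names started."""
    started = []
    for name, cmd in commands.items():
        proc = procs.get(name)
        # poll() returns None while the child is still running.
        if proc is None or proc.poll() is not None:
            procs[name] = subprocess.Popen(cmd)
            started.append(name)
    return started


def supervise(commands, interval=5.0):
    """Loop forever, restarting anything that has exited."""
    procs = {}
    while True:
        for name in ensure_running(commands, procs):
            print(f"started {name}")
        time.sleep(interval)
```

Calling `supervise(SERVICES)` would run the loop. Note that this only notices children that the master itself started; detecting a broker launched by something else would need a different check (a PID file or a port probe, for example).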

As for the node process, I spent some time debating the merits of implementing it in C / C++ vs. in Python. This was a difficult decision because the node process is where the bulk of the actual interaction logic will exist. The main problem with using Python is that it is not particularly good for parallel programming. While constructs for concurrent execution exist (e.g. threads), each thread must acquire Python’s global interpreter lock (GIL) to run Python code, which serializes execution. Processes could be used instead, but they are a much more heavyweight alternative for a problem that only needs a small snippet of code.

Since most of the node’s communications are going to be done through the MQTT broker over the network (an inherently asynchronous operation), bottlenecking the system by serializing execution seems at first glance to be a mistake, and points towards C as a better solution. That being said, I believe that we can get around this problem and still use Python. As long as we keep each thread very lightweight and limit the amount of time each thread can run (limit blocking operations), it should be no problem if execution is effectively serialized. I think that this allows us to take advantage of Python’s very powerful facilities and avoid the complexity of C.
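To make the threading argument concrete, here is a small sketch of several lightweight threads that each wait on a stand-in for a network call. `fake_network_wait` and `run_concurrently` are hypothetical names for this example only. In CPython, `time.sleep` (like blocking socket I/O) releases the global lock while it waits, so the waits overlap even though the Python code itself runs one thread at a time.

```python
import threading
import time


def fake_network_wait(results, index, delay):
    """Stand-in for a blocking network call followed by a tiny bit of work."""
    time.sleep(delay)            # the lock is released while the thread waits
    results[index] = index * 2   # short CPU step; holds the lock only briefly


def run_concurrently(n_threads=4, delay=0.3):
    """Run n_threads waiters in parallel; return their results and elapsed seconds."""
    results = [None] * n_threads
    threads = [
        threading.Thread(target=fake_network_wait, args=(results, i, delay))
        for i in range(n_threads)
    ]
    start = time.monotonic()
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results, time.monotonic() - start
```

With four threads and a 0.3 s wait each, the elapsed time should come out much closer to 0.3 s than to the 1.2 s a strictly sequential version would take, which is why short handlers dominated by waiting are a reasonable fit for threads.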

One thing that was brought up in our SOW review was that we should redefine our latency requirements. As we discussed, it won’t really mean anything to define latency requirements between our nodes, as they are no longer on the same network and as such have potentially unpredictable delays that are out of our control. However, we do have some level of control over the on-device latency. While it’s true that virtualized hardware such as AWS EC2 instances doesn’t guarantee uninterrupted execution (the hypervisor can interleave execution of different machines), we believe this will be less noticeable than network latency.

After thinking about it a bit, I decided the simplest way to measure this on-device latency is as follows: when a relevant piece of data is received from the broker (i.e. something that should trigger an interaction), the node will write a timestamp to a log file. When that piece of data is finally acted upon, the node will write a second timestamp to the log file. By looking at the difference between the two timestamps, we can measure the on-device latency.
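The two-timestamp scheme could look something like the sketch below. The log format (comma-separated message id, stage, timestamp), the stage names `received` / `acted`, and both function names are assumptions for illustration. I also assume a monotonic clock, which is safe here because both timestamps come from the same process on the same machine.

```python
import time


def log_event(log_path, message_id, stage):
    """Append one line: message id, stage ('received' or 'acted'), timestamp in ns."""
    with open(log_path, "a") as f:
        f.write(f"{message_id},{stage},{time.monotonic_ns()}\n")


def on_device_latencies(log_path):
    """Return {message_id: seconds between its 'received' and 'acted' lines}."""
    received = {}
    latencies = {}
    with open(log_path) as f:
        for line in f:
            message_id, stage, timestamp = line.strip().split(",")
            if stage == "received":
                received[message_id] = int(timestamp)
            elif stage == "acted" and message_id in received:
                latencies[message_id] = (int(timestamp) - received[message_id]) / 1e9
    return latencies
```

The node would call `log_event(path, msg_id, "received")` in its broker callback and `log_event(path, msg_id, "acted")` once the interaction has been carried out; `on_device_latencies(path)` can then be run offline over the log.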

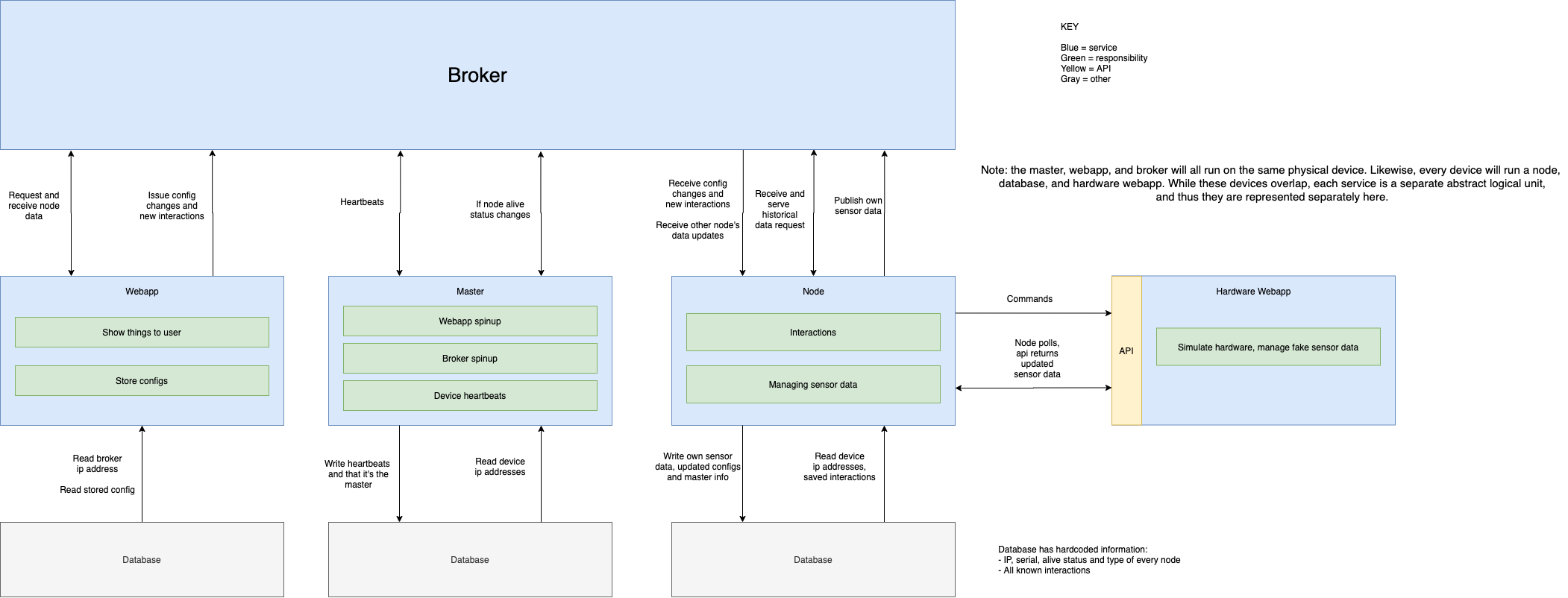

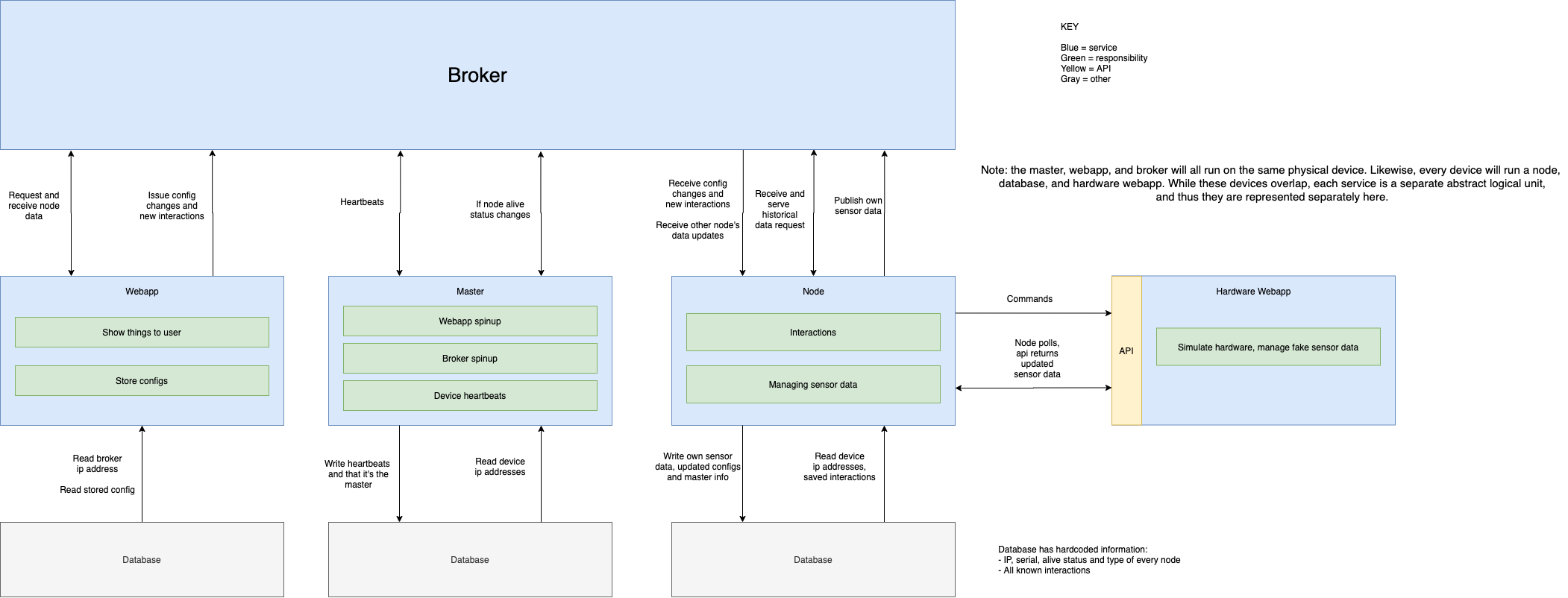

Another thing that I worked on was defining more formally how all the moving parts in the interaction layer interact. See the accompanying diagram for more information.

Moving forward, I have a few goals for the upcoming week.

- Latency: I would like to define an on-device latency target that is reasonable and in line with the initial (and modified) goals of this project.

- APIs:

    - Work with Rip to define how my layer will interact with his hardware. Currently we are planning on having Rip implement a “hardware” library with a simple API that I can call to interact with the “hardware”. This would include functions such as “read_sensor_data” and “turn_on”, etc. I would like to iron out this interface with him by next weekend.

    - Work with Richard to interface with the webapp. As I currently understand it, the webapp will need to publish config changes, read existing config data, and request sensor data from other nodes. While I plan for all this functionality to be facilitated by the broker, I would like to implement a simple library of helper functions to mask this fact from the webapp. Ideally, Richard will be able to call functions such as get_data(node_serial, time_start, time_end) or publish_config_change(config). I would also like to iron out this API with him by this weekend, even if the library itself isn’t finished.

- Node process: I would like a simple version of the node process done by this weekend. I think this subset of the total functionality is sufficient for the midsemester demo. It should function with hardcoded values in the database and mock versions of the hardware library / webapp to work against. This process should be able to:

    - Read config / interaction data from the database

    - Publish data to the broker

    - Receive data from the broker and act upon it (do interactions)
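To give a feel for what that simple node process might look like against mocks, here is a minimal sketch. Everything in it is an assumption for illustration: `MockHardware` only borrows the `read_sensor_data` and `turn_on` names mentioned above, `MockBroker` is an in-process stand-in rather than a real MQTT client, the topic layout `nodes/<serial>/data` is invented, and the `interactions` dict stands in for hardcoded database rows.

```python
from collections import defaultdict


class MockHardware:
    """Stand-in for the hardware library, with a hardcoded sensor reading."""

    def __init__(self, reading=21.5):
        self.reading = reading
        self.is_on = False

    def read_sensor_data(self):
        return self.reading

    def turn_on(self):
        self.is_on = True


class MockBroker:
    """In-process publish/subscribe stand-in for the MQTT broker."""

    def __init__(self):
        self._subscribers = defaultdict(list)

    def subscribe(self, topic, callback):
        self._subscribers[topic].append(callback)

    def publish(self, topic, payload):
        for callback in self._subscribers[topic]:
            callback(topic, payload)


class Node:
    """Publishes its own readings and reacts to readings from other nodes."""

    def __init__(self, serial, hardware, broker, interactions):
        # interactions: {topic: threshold}, standing in for rows read from the database.
        self.serial = serial
        self.hardware = hardware
        self.broker = broker
        self.interactions = dict(interactions)
        for topic in self.interactions:
            broker.subscribe(topic, self.on_message)

    def publish_reading(self):
        self.broker.publish(f"nodes/{self.serial}/data", self.hardware.read_sensor_data())

    def on_message(self, topic, payload):
        # Hypothetical interaction rule: turn on when the remote reading exceeds the threshold.
        threshold = self.interactions.get(topic)
        if threshold is not None and payload > threshold:
            self.hardware.turn_on()
```

For example, a node `B` created with `interactions={"nodes/A/data": 20.0}` would turn its hardware on when node `A` calls `publish_reading()` with the default reading of 21.5. Swapping the mocks for the real hardware library and a real broker client should then only touch the constructor arguments, not the node logic.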