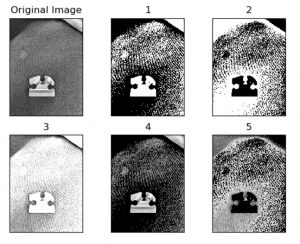

This week I mainly focused on testing the Leap Motion controller in various conditions and exploring its SDKs. Since I last used the controller, the company behind it has pivoted, and its new SDKs are aimed at VR game development, so I installed the original library (last released in 2017) to see if it would still work. It requires Python 2.7, but I was able to get a simple hand and gesture tracker running to explore the characteristics of its tracking. I measured an effective range of about 22″ when the controller faces upward, but tracking became unreliable when we flipped it over to track hands from above.

Further research revealed that the controller tracks hands using IR LEDs and cameras, so when it faces downward, IR light reflecting off the surface below the hands confuses the tracking software. Using a tool from Ultraleap (the company behind the controller) that shows the raw camera images, I confirmed that reflected IR light was washing out the image, which is the likely cause of the problem. Connor and I then researched materials that reflect less IR light and found a few options, including Duvetyne and Acktar Light Absorbent Foil. Duvetyne was the most affordable and still promised significant IR absorption, so we plan to buy a sheet of it to lay underneath the frame and improve the downward-facing Leap Motion’s hand tracking.

I also worked on the system and software architecture and made diagrams for the design review slides. Because I didn’t want the old Leap Motion SDK to force all three of us to write Python 2.7, I designed a simple client-server protocol: the hand-tracking code stays in Python 2.7, and the rest of the codebase can be written in Python 3 and talk to it over sockets. Since we have strict latency requirements, the overhead of going through sockets, even entirely on the local machine, must stay small, so I will keep measuring this latency and look for ways to minimize the amount of data that needs to be sent.
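A minimal sketch of what such a socket channel could look like, and how its local latency could be measured. It assumes the tracking process acts as the server and sends a fixed-size binary message per update (a frame id plus palm x, y, z); the message layout, the function names, and the echo server standing in for the tracking process are all hypothetical, not the actual protocol.

```python
import socket
import struct
import threading
import time

# Hypothetical message layout: frame id (uint32) + palm x, y, z (float32).
# "<" means little-endian with no padding, so each message is 4 + 3*4 = 16 bytes.
HAND_MSG = struct.Struct("<I3f")


def pack_hand(frame_id, x, y, z):
    """Encode one hand-position update into a compact 16-byte message."""
    return HAND_MSG.pack(frame_id, x, y, z)


def unpack_hand(data):
    """Decode a 16-byte message back into (frame_id, x, y, z)."""
    return HAND_MSG.unpack(data)


def recv_exact(sock, n):
    """Read exactly n bytes from a TCP socket (recv may return fewer)."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("socket closed")
        buf += chunk
    return buf


def echo_server(listener):
    """Hypothetical stand-in for the tracking process: echo each message back."""
    conn, _ = listener.accept()
    conn.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    with conn:
        try:
            while True:
                conn.sendall(recv_exact(conn, HAND_MSG.size))
        except ConnectionError:
            pass  # client disconnected


def measure_round_trip(n_messages=1000):
    """Return the median loopback TCP round-trip time in microseconds."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]
    threading.Thread(target=echo_server, args=(listener,), daemon=True).start()

    client = socket.create_connection(("127.0.0.1", port))
    # Disable Nagle's algorithm so small messages are sent immediately.
    client.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    samples = []
    try:
        for i in range(n_messages):
            msg = pack_hand(i, 1.0, 200.0, -3.5)
            start = time.perf_counter()
            client.sendall(msg)
            reply = recv_exact(client, HAND_MSG.size)
            samples.append((time.perf_counter() - start) * 1e6)
            assert unpack_hand(reply)[0] == i  # reply matches the request
    finally:
        client.close()
        listener.close()
    samples.sort()
    return samples[len(samples) // 2]


if __name__ == "__main__":
    print(f"median loopback round trip: {measure_round_trip():.1f} us")
```

Packing a fixed 16-byte struct rather than a text format such as JSON keeps each message small, and the `struct` module is also available in Python 2.7, so the same packing code could in principle run on the tracking side. A round trip measures two socket hops, so the one-way cost of a tracking update would be roughly half the reported median.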