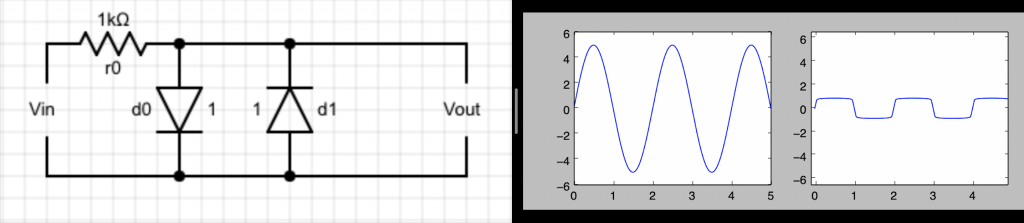

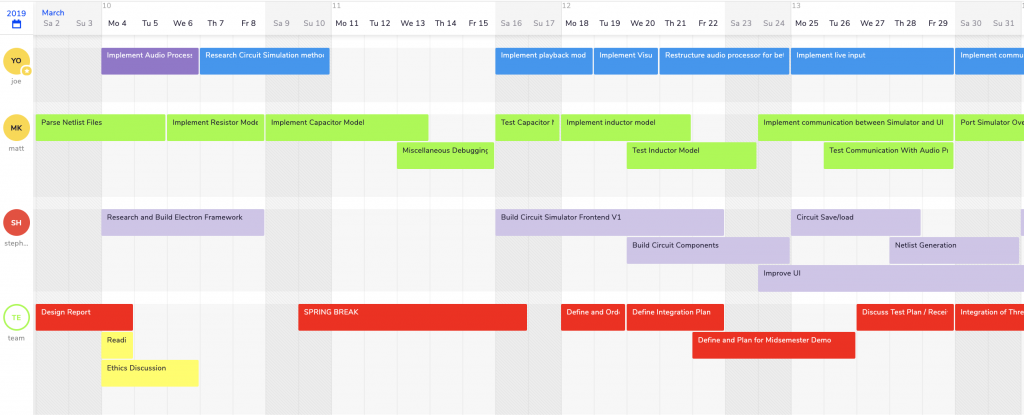

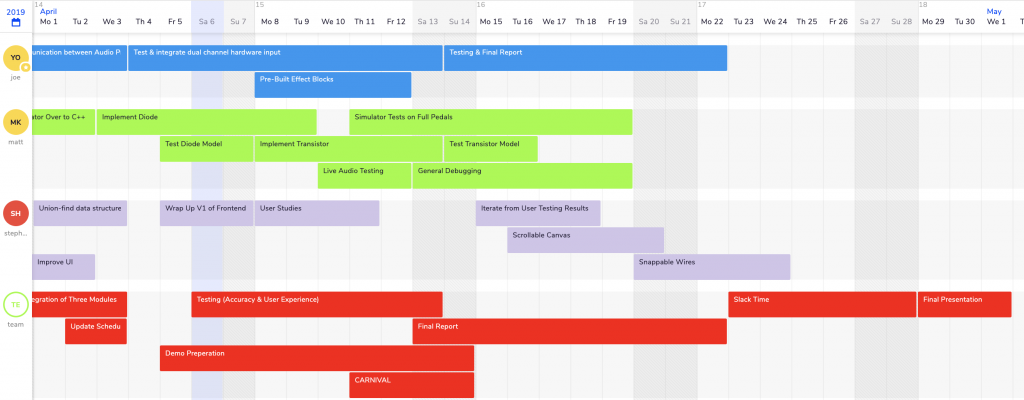

This week, we got a lot done in advance of our final in-lab demo on Monday. Though I still had some work to do on the circuit simulator related to implementing our transistor device model, I ultimately decided to shift my attention towards some other high-priority issues for our demo. In particular, I helped Stephen out with some important outstanding items on the frontend side of our project.

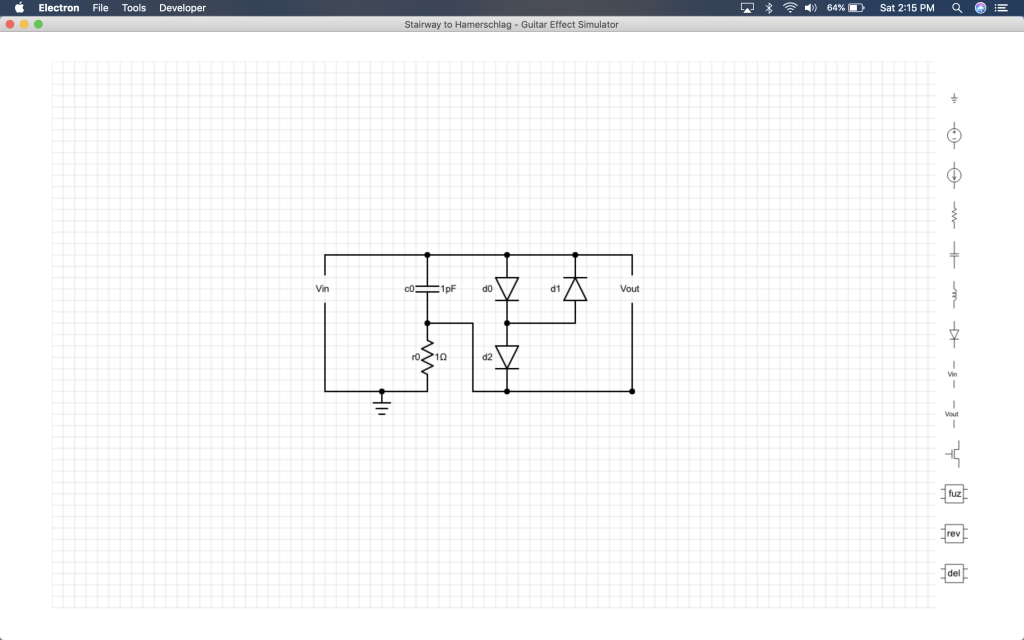

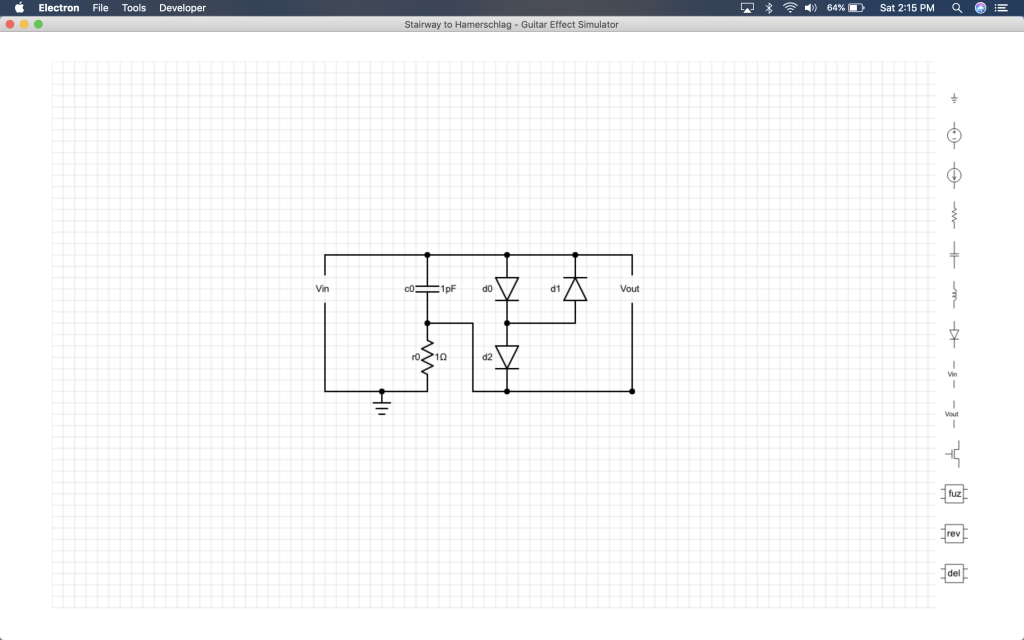

The first thing I worked on for the frontend was overhauling some parts of the GUI to provide a nicer user experience. This involved making the application full-screen, widening the schematic editor, centering the toolbar, and moving several of the buttons into the native macOS menu. This turned out to be easier said than done, due to some interesting facts I learned about the ElectronJS programming environment. In particular, the code that has access to native menus and the code that renders the GUI run in separate processes (referred to as the main and renderer processes respectively), and neither process has access to the other’s data. This means I had to write some code to trigger the appropriate IPC via a message-passing model whenever I wanted a native menu option to render a change on the GUI. The actual GUI now looks like this:
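To make the main/renderer message passing described above a bit more concrete, here is a minimal sketch of how a native menu click can be forwarded to the GUI. It is not our actual code: the channel names, menu labels, and the `buildMenuTemplate` helper are all hypothetical, and the menu template takes a `send` callback so the sketch runs without Electron installed. The comments show how it would plausibly be wired to Electron's `Menu`, `webContents.send`, and `ipcRenderer.on`.

```typescript
// Sends a message on a named channel. In Electron's main process this would
// wrap `win.webContents.send(channel, ...args)` for some BrowserWindow `win`.
type Send = (channel: string, ...args: unknown[]) => void;

// Just the subset of Electron's menu item options that this sketch uses.
interface MenuItem {
  label: string;
  click?: () => void;
  submenu?: MenuItem[];
}

// Hypothetical channel names, for illustration only.
const CHANNELS = {
  save: "menu:save",
  runSimulation: "menu:run-simulation",
} as const;

// Builds a native menu whose items do no GUI work themselves: each click
// only posts a message for the renderer process to handle.
function buildMenuTemplate(send: Send): MenuItem[] {
  return [
    {
      label: "File",
      submenu: [{ label: "Save", click: () => send(CHANNELS.save) }],
    },
    {
      label: "Simulation",
      submenu: [{ label: "Run", click: () => send(CHANNELS.runSimulation) }],
    },
  ];
}

// Main process wiring (assumes `electron` and a BrowserWindow `win`):
//   Menu.setApplicationMenu(Menu.buildFromTemplate(
//     buildMenuTemplate((ch, ...args) => win.webContents.send(ch, ...args))));
//
// Renderer process wiring:
//   ipcRenderer.on("menu:run-simulation", () => { /* update the GUI */ });
```

Keeping the menu definition separate from the transport makes it possible to check which message each menu item emits without launching the full application.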

The biggest frontend feature I worked on was support for live audio simulations. In particular, users needed to be able to start and stop the simulator from the frontend, as well as tell whether the simulator is currently running. I decided that the nicest way to do this was via a modal that pops up when a user selects a live audio simulation:

The sound wave icon in the middle oscillates when a simulation is running to provide users with an indicator about the state of the application. When the user presses “Start”, the frontend launches a new simulator instance to run the user’s circuit against live audio from an instrument. When the user presses “Stop”, the frontend sends a SIGUSR1 signal to the running simulator. On the backend, we installed a SIGUSR1 handler which exits the simulator gracefully when the signal is delivered.
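The start/stop flow above can be sketched roughly as follows. This is an illustration under assumptions rather than our real implementation: `LiveSimulation`, `SimulatorProcess`, and `Launch` are hypothetical names, and the process launcher is injected so the sketch runs on its own. In the real frontend the launcher would presumably wrap Node's `child_process.spawn`, whose returned process provides `kill(signal)` and an `"exit"` event.

```typescript
// The minimal view of a child process that the controller needs.
interface SimulatorProcess {
  kill(signal: string): boolean;
  on(event: "exit", listener: () => void): unknown;
}

// Launches a simulator for the given netlist (hypothetical signature).
type Launch = (netlistPath: string) => SimulatorProcess;

class LiveSimulation {
  private proc: SimulatorProcess | null = null;
  private readonly launch: Launch;

  constructor(launch: Launch) {
    this.launch = launch;
  }

  // Lets the modal decide whether to animate the sound wave icon.
  get running(): boolean {
    return this.proc !== null;
  }

  // "Start": launch a new simulator instance unless one is already running.
  start(netlistPath: string): void {
    if (this.proc !== null) return;
    const proc = this.launch(netlistPath);
    this.proc = proc;
    // Clear our state once the simulator has actually exited.
    proc.on("exit", () => {
      if (this.proc === proc) this.proc = null;
    });
  }

  // "Stop": ask the simulator to shut down gracefully via SIGUSR1.
  stop(): void {
    this.proc?.kill("SIGUSR1");
  }
}
```

In this sketch the controller only reports the simulation as stopped after the `"exit"` event fires, so the indicator reflects the simulator's real state rather than the last button pressed.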

On the backend, I also spent some time adding parser support for netlists containing audio transformation “block effects” such as fuzz pedal blocks, distortion pedals, etc. Ultimately, we were able to get a full end-to-end test in today. This involved designing a circuit on the frontend using new features such as effect blocks, running a live audio simulation on the GUI, being able to pause and run the simulation as desired, and playing back the audio in real time.
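For a flavor of what parsing an effect block might involve, here is a small sketch. The netlist syntax shown (a `.effect` directive followed by a name, an effect kind, input and output nodes, and `key=value` parameters) is purely hypothetical and is not our actual netlist format; the sketch is also in TypeScript for consistency with the other examples, not because our backend parser is written in it.

```typescript
// One parsed effect block (all field names are hypothetical).
type EffectBlock = {
  name: string;
  kind: string;
  input: string;
  output: string;
  params: Record<string, number>;
};

// Effect kinds this sketch recognizes.
const KNOWN_EFFECTS = new Set(["fuzz", "distortion"]);

// Parses a line such as ".effect FZ1 fuzz in out gain=0.8".
// Returns null for lines that are not effect directives, so a caller can
// fall through to its ordinary component parsing.
function parseEffectLine(line: string): EffectBlock | null {
  const tokens = line.trim().split(/\s+/);
  if (tokens[0] !== ".effect") return null;
  if (tokens.length < 5) throw new Error(`malformed effect line: ${line}`);

  const [, name, kind, input, output, ...rest] = tokens;
  if (!KNOWN_EFFECTS.has(kind)) throw new Error(`unknown effect kind: ${kind}`);

  // Remaining tokens are numeric key=value parameters.
  const params: Record<string, number> = {};
  for (const pair of rest) {
    const [key, value] = pair.split("=");
    const num = Number(value);
    if (!key || !value || Number.isNaN(num)) {
      throw new Error(`bad parameter "${pair}" in: ${line}`);
    }
    params[key] = num;
  }
  return { name, kind, input, output, params };
}
```

Rejecting unknown effect kinds at parse time means a typo in a netlist surfaces as an error before the simulator starts processing audio.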

Next week, we have our demo on Monday. I may also finish implementing transistors this week, but I’ve run into some hard bugs that I have yet to figure out. Luckily, I think enough works for a nice demo even without this feature. We will also need to prepare for presentations and work on our final report/poster.