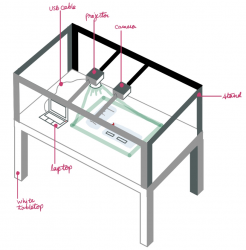

This week I have been debugging the system with Tanu and Suann. We found that most of the latency we were seeing came from running on macOS. When we switched to Ubuntu on my laptop, the latency went away. The switch meant we needed another way to zoom in and turn off autofocus on the Logitech webcam, because Logitech provides no software support for this webcam on Ubuntu. We avoided the need to zoom by repositioning the camera and projector, which also removes a step from the initial system setup.

Next Steps:

The main issue we are still facing is how slowly the sensors respond to taps on the board. After receiving feedback from some peers, we are also prioritizing making the system more intuitive and visually appealing. Next week we will solder more sensor circuits to extend tap detection across more of the poster board, and we will work on improving our GUI.

We are on schedule.