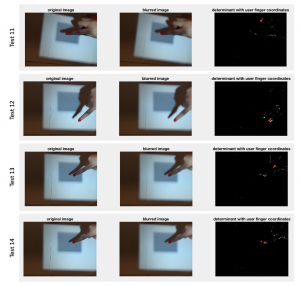

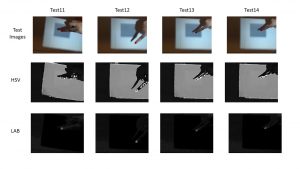

One of our major risks is that our webcam might not be compatible with Python. So far we have tested in our demo environment using our old MATLAB code, but we are now migrating all of our code to Python, so our next step is to prioritize capturing data through a Python interface. If we are unable to interact with the camera from Python, we will consider purchasing a PiCam, which is well within our budget.
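A quick compatibility check could look something like the sketch below. It assumes OpenCV (the `opencv-python` package) as the Python interface and camera index 0, neither of which we have settled on yet; the helper names `grab_frame`, `open_default_camera`, and `main` are made up for illustration.

```python
def grab_frame(capture):
    """Return one frame from a capture object, or None if the camera is unusable.

    `capture` is expected to behave like cv2.VideoCapture: it must provide
    isOpened() and read(), where read() returns an (ok, frame) pair.
    """
    if not capture.isOpened():
        return None
    ok, frame = capture.read()
    return frame if ok else None


def open_default_camera(index=0):
    """Open a camera by index (assumption: the webcam shows up at index 0)."""
    # Imported here so grab_frame can be exercised without OpenCV installed.
    import cv2
    return cv2.VideoCapture(index)


def main():
    cam = open_default_camera()
    try:
        frame = grab_frame(cam)
        if frame is None:
            print("Could not read from the webcam in Python.")
        else:
            # OpenCV returns frames as NumPy arrays, so shape is (height, width, channels).
            print("Captured a frame with shape", frame.shape)
    finally:
        cam.release()
```

Calling `main()` with the webcam plugged in would tell us whether a frame can be captured at all. If it cannot, that is the point at which we would fall back to the PiCam.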

Suann has placed an order for an SD card for the Raspberry Pi, which we need in order to read data from our sensor. To avoid delays in putting the system together, Suann will build a behavioral model of the tap sensing system using an Arduino.

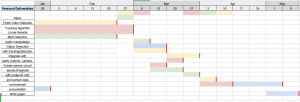

Currently, there are no changes to the existing design, nor is there any change in our schedule.