I converted the MATLAB code for threshold-based color detection to Python using NumPy and Matplotlib.

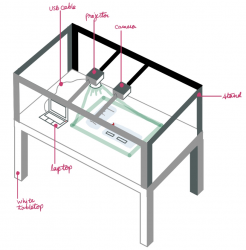

I also wrote Python code to access the camera. I first tried pygame, using the code from this link. However, each new capture kept returning a past image, because when the camera starts, a significant number of frames from the previous session are still saved. I then installed OpenCV and found this code, which captures an image when the space bar is pressed. It can easily be adapted to take input from the piezo sensor circuit instead, so that a capture is triggered when a tap is detected.
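As a rough illustration of the space-bar capture described above (not the linked code itself), here is a minimal sketch. The helper `capture_on_space`, the `main` wrapper, the device index `0`, and the output name `capture.png` are all assumptions made for the example. The key-polling function is passed in as a parameter so that it could later be swapped for a hypothetical tap-detection function.

```python
SPACE_KEY = 32  # ASCII code for the space bar
ESC_KEY = 27    # ASCII code for Escape


def capture_on_space(read_frame, wait_key, show=None):
    """Keep reading frames until space is pressed, then return the latest frame.

    read_frame: callable returning (ok, frame), e.g. cv2.VideoCapture.read
    wait_key:   callable taking a delay in ms and returning a key code
    show:       optional callable used to preview each frame
    Returns None if Escape is pressed or a frame cannot be read.
    """
    while True:
        ok, frame = read_frame()
        if not ok:
            return None
        if show is not None:
            show(frame)
        key = wait_key(1) & 0xFF  # mask to the low byte of the key code
        if key == SPACE_KEY:
            return frame
        if key == ESC_KEY:
            return None


def main():
    # Imported here so the helper above can be exercised without OpenCV.
    import cv2

    cap = cv2.VideoCapture(0)  # assumption: the webcam is device 0
    try:
        frame = capture_on_space(
            cap.read, cv2.waitKey, lambda f: cv2.imshow("preview", f)
        )
        if frame is not None:
            cv2.imwrite("capture.png", frame)  # hypothetical output file
    finally:
        cap.release()
        cv2.destroyAllWindows()
```

Calling `main()` opens a preview window and saves one frame when the space bar is pressed. Because frames are read continuously in the loop, the frame returned is the most recent one rather than one buffered earlier.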

We took a new set of 26 test images with our new poster board setup.

I finished the SVM code, which uses scikit-learn to detect a red dot. I wrote a CSV file, coordinates.csv, containing data from our test images to train the algorithm. I wrote the following files:

test_svm.py:

Loads data from file coordinates.csv. Uses the data to train the SVM algorithm. Uses the trained algorithm to predict if each pixel is red or not. Then finds the centroid of the red pixels and displays the image with the centroid plotted.
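The train, predict, and centroid steps could look roughly like the sketch below. It assumes the training rows have the form `[A, B, label]` with 0 for red and 1 for non-red, and that the image is already available as an `(H, W, 2)` array of A and B values. The function names and the RBF kernel choice are assumptions for illustration, not necessarily what test_svm.py uses.

```python
import numpy as np
from sklearn.svm import SVC


def train_red_classifier(samples):
    """Fit an SVM on rows of [A, B, label], where label 0 = red, 1 = not red."""
    features = samples[:, :2]
    labels = samples[:, 2].astype(int)
    clf = SVC(kernel="rbf", gamma="scale")  # kernel choice is an assumption
    clf.fit(features, labels)
    return clf


def red_centroid(clf, ab_image):
    """Classify every pixel and return the (row, col) centroid of red pixels.

    ab_image is an (H, W, 2) array of A and B values.
    Returns None when no pixel is classified as red.
    """
    height, width, _ = ab_image.shape
    predicted = clf.predict(ab_image.reshape(-1, 2)).reshape(height, width)
    rows, cols = np.nonzero(predicted == 0)  # 0 is the "red" label
    if rows.size == 0:
        return None
    return float(rows.mean()), float(cols.mean())
```

The centroid could then be drawn on the image with, for example, Matplotlib's `plt.imshow` followed by `plt.plot(col, row, "b+")`.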

write_data.py:

Loads an image and searches for the red coordinates with hard thresholds. Uses a 2 pixel radius around the coordinate to find the values of red pixels. Saves each pixel's A and B space values to the CSV file coordinates.csv, along with a 0 if it is a red pixel and a 1 if it is not.
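A minimal sketch of this labelling step follows, assuming the image is already an `(H, W, 2)` array of A and B values. The threshold parameters `a_min` and `b_min` and both function names are hypothetical; the actual threshold values used in write_data.py are not given here.

```python
import numpy as np


def find_red_center(ab_image, a_min, b_min):
    """Hard-threshold step: mean (row, col) of pixels with A >= a_min and B >= b_min."""
    mask = (ab_image[..., 0] >= a_min) & (ab_image[..., 1] >= b_min)
    rows, cols = np.nonzero(mask)
    if rows.size == 0:
        return None
    return int(round(rows.mean())), int(round(cols.mean()))


def label_pixels(ab_image, center, radius=2):
    """Return one row [A, B, label] per pixel.

    Pixels within `radius` of `center` get label 0 (red); all others get 1.
    """
    height, width, _ = ab_image.shape
    rows, cols = np.mgrid[0:height, 0:width]
    dist_sq = (rows - center[0]) ** 2 + (cols - center[1]) ** 2
    labels = np.where(dist_sq <= radius ** 2, 0, 1)
    return np.column_stack([ab_image.reshape(-1, 2), labels.ravel()])
```

The resulting array could be written out with `np.savetxt("coordinates.csv", samples, delimiter=",")`, assuming a comma-delimited file.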

coordinates.csv:

Contains data for the SVM algorithm in the following format:

(A space value) (B space value) (0 for red/1 for non red)
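To make the format concrete, here is a small sketch that writes and reads rows of this shape with Python's standard `csv` module. It assumes the three values are comma-separated and that the file has no header row; the function names are hypothetical.

```python
import csv
import io


def write_samples(handle, samples):
    """Write (A, B, label) tuples, one per line, to an open text handle."""
    writer = csv.writer(handle, lineterminator="\n")
    for a_value, b_value, label in samples:
        writer.writerow([a_value, b_value, label])


def read_samples(handle):
    """Read rows back as a list of (A, B) features and a list of labels."""
    features, labels = [], []
    for row in csv.reader(handle):
        if not row:  # skip blank lines
            continue
        a_value, b_value, label = row
        features.append((float(a_value), float(b_value)))
        labels.append(int(label))  # 0 = red, 1 = not red
    return features, labels


# Round trip through an in-memory buffer instead of a real file.
buffer = io.StringIO()
write_samples(buffer, [(12.5, 30.0, 0), (-3.0, 1.5, 1)])
buffer.seek(0)
example_features, example_labels = read_samples(buffer)
```

With a real file, the handle would come from `open("coordinates.csv", newline="")`.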

color_detection.py:

Loads data from coordinates.csv to train the algorithm. Takes a frame from the Logitech webcam when the spacebar is pressed. Analyses the image to find the centroid of the red pixels in the frame, using the SVM to predict if a pixel is red or not.

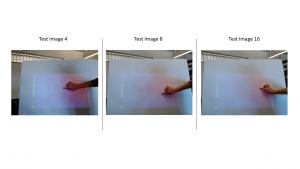

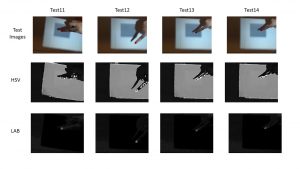

I looked at the results for all 26 of our test images. The SVM code gets incorrect results for tests 4, 8 and 15, as shown below:

Basic color thresholding does not find red pixels for tests 2, 3, 5 and 19. I have saved these result images to the Bitbucket repo so we can compare them.

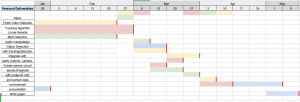

The detected coordinate of the red dot is slightly different for the two methods. A chart of the coordinates is included at the end of this post. I think the SVM is not detecting the proper coordinate in all cases because it is currently trained only on images 12-26 and not on images 1-11. I had only saved data from images 12-26 because the preliminary GUI is projected on the poster in those images. Once I add all the data to the dataset, I think the algorithm will become robust enough to cover all our current test cases. I will also start to constrain our dataset to contain only every 10th non-red pixel, since the data file has become very large: each image has 480×640 pixels.
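For scale, 480×640 is 307,200 pixels per image. One possible way to do the planned subsampling is sketched below, assuming the data is held as rows of `[A, B, label]` with 0 for red and 1 for non-red. The function name and the choice to keep every red row are assumptions.

```python
import numpy as np


def subsample_non_red(samples, step=10):
    """Keep every red row and only every `step`-th non-red row."""
    red = samples[samples[:, 2] == 0]
    non_red = samples[samples[:, 2] == 1][::step]
    return np.vstack([red, non_red])
```

Keeping all red rows makes sense under this assumption because red pixels are a small fraction of each image, so thinning only the non-red class shrinks the file while also making the two classes less unbalanced.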

My progress is on schedule.

Next week’s deliverables:

Constrain the write_data.py script to write values for every 10th pixel instead of all the pixels.

Detect green pixels in the image for border detection, to be used in Tanu’s GUI coordinate calculations.