We decided to do our real-time testing with the second classifier, which takes about 0.8 seconds on average to finish its computations. I also tried two other classification methods, svm.LinearSVC and the SGD classifier, since they are supposed to scale better. However, the results of our original classifier remain significantly better, so we decided that these methods are not worth pursuing further.

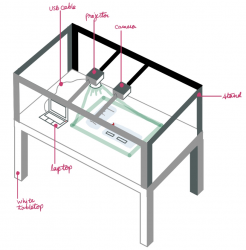
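
To make the "about 0.8 seconds on average" figure reproducible, a timing helper along these lines could be used. This is only a sketch: `average_latency`, `classify`, and `samples` are hypothetical names, and `classify` stands in for whichever classifier's prediction call is being measured.

```python
import time


def average_latency(classify, samples, repeats=3):
    """Return the mean wall-clock time in seconds of one classify(sample) call.

    `classify` is a placeholder for the prediction function under test;
    it is an assumption of this sketch, not part of our actual code.
    """
    if not samples:
        raise ValueError("need at least one sample to time")
    start = time.perf_counter()
    for _ in range(repeats):
        for sample in samples:
            classify(sample)
    elapsed = time.perf_counter() - start
    # Divide by the total number of calls to get a per-call average.
    return elapsed / (repeats * len(samples))
```

Running the same helper over the same samples for each candidate classifier would give directly comparable per-frame timings.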

Tanu and I finished debugging our detection and GUI integration on 3/31. Our system now works in real time. We added buttons on the screens as placeholders for a tap that triggers color detection.

The first iteration of the calibration system that I wrote last week is working. For this system, we detect the coordinates of the top-left and lower-right corners of the GUI screen using our existing color detection. After repeated real-time testing, we realized that because a user has to position the red dot at each corner of the screen for calibration, the detected coordinates vary significantly. As a result, the mapping of coordinates from detection to GUI is not accurate: each mapped coordinate is consistently a fixed distance away from the target coordinate.
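
The two-corner mapping described above can be sketched roughly as follows. This is a minimal illustration, not our actual code: `make_mapper`, `gui_width`, and `gui_height` are hypothetical names, and the sketch assumes the camera view is axis-aligned with the screen, so a simple per-axis scale is enough.

```python
def make_mapper(top_left, bottom_right, gui_width, gui_height):
    """Build a function that maps detected (camera) points to GUI pixels.

    top_left / bottom_right are the detected camera coordinates of the
    GUI screen's corners. All names here are assumptions for illustration.
    """
    x0, y0 = top_left
    x1, y1 = bottom_right
    if x1 == x0 or y1 == y0:
        raise ValueError("calibration corners must differ on both axes")
    # Scale factors from camera pixels to GUI pixels on each axis.
    scale_x = gui_width / (x1 - x0)
    scale_y = gui_height / (y1 - y0)

    def to_gui(point):
        x, y = point
        return ((x - x0) * scale_x, (y - y0) * scale_y)

    return to_gui
```

With this form, any error in a detected corner shifts or rescales every mapped point, which matches the constant offset we observed.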

To adjust for this error, I wrote a second iteration of calibration. The second version consists of a splash screen that appears when the program loads, as shown below. A third coordinate is detected at the center of the screen to adjust for differences in the detected coordinates of the two corners of the GUI.
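
One possible way to use the third point is sketched below. It assumes the error is a constant offset: the detected center is pushed through the two-corner mapping, and whatever distance separates the result from the true GUI center is then applied as a correction to every mapped point. The function and parameter names are hypothetical, and the real calibration may combine the three points differently.

```python
def make_corrected_mapper(top_left, bottom_right, center, gui_width, gui_height):
    """Two-corner mapping plus a constant offset derived from a center point.

    `center` is the detected camera coordinate of the GUI screen's center.
    The constant-offset correction is an assumption of this sketch.
    """
    x0, y0 = top_left
    x1, y1 = bottom_right
    if x1 == x0 or y1 == y0:
        raise ValueError("calibration corners must differ on both axes")
    scale_x = gui_width / (x1 - x0)
    scale_y = gui_height / (y1 - y0)

    # Where the uncorrected mapping places the detected center.
    mapped_cx = (center[0] - x0) * scale_x
    mapped_cy = (center[1] - y0) * scale_y
    # Offset needed to move that point onto the true GUI center.
    offset_x = gui_width / 2 - mapped_cx
    offset_y = gui_height / 2 - mapped_cy

    def to_gui(point):
        x, y = point
        return ((x - x0) * scale_x + offset_x, (y - y0) * scale_y + offset_y)

    return to_gui
```

A constant offset cannot fix a wrong scale, so if the two corners are detected too close together or too far apart, the error would still grow toward the screen edges.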

Next steps:

- Test the second iteration of the calibration function in real time. If it does not give good results, we may change it to project and detect red squares at the corners of the screen so that they will always be at fixed positions.

- Compile the detection results from the 16 test images we took on Wednesday into a table.

I am on schedule.