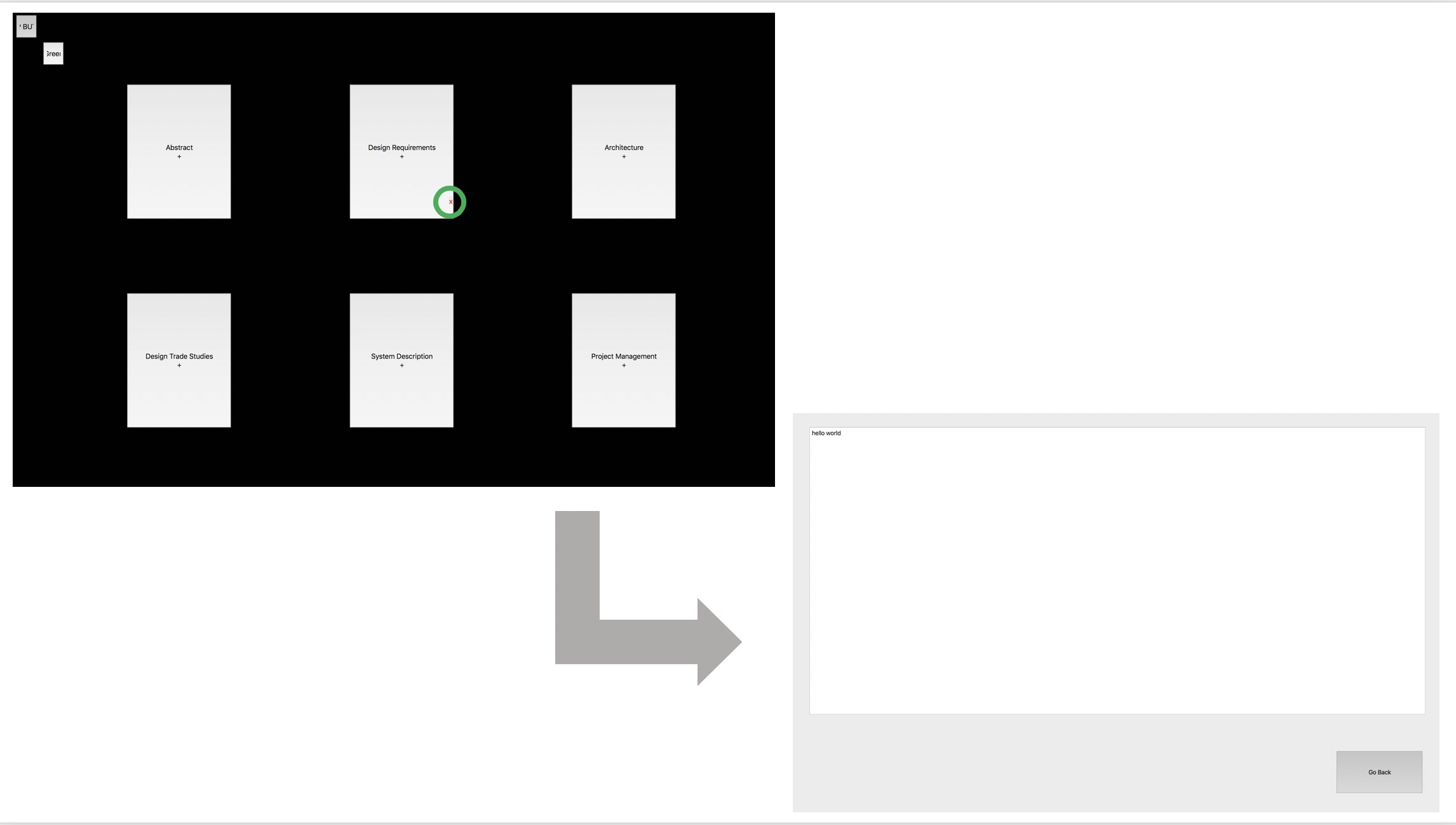

I worked with Isha to integrate my GUI subsystem with her detection subsystem. I added the following functionalities to my GUI in order for the integration to work:

1. After receiving the calibration coordinates from Isha’s part of the code, I set them as reference points and use them to calculate the observed width and height of the GUI as seen by the camera used for the detection algorithm.

2. On receiving the coordinates of the red dot from Isha’s detection code, I calculate the position of the user’s finger with respect to the actual GUI coordinates. To do this, the red dot position observed by the camera is transformed to a scale that matches the GUI dimensions.
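
The two steps above can be sketched roughly as follows. This is a minimal illustration and not the actual project code: the function name `map_to_gui`, the choice of two calibration points (top-left and bottom-right corners of the GUI as seen by the camera), and the example numbers are all assumptions. It also assumes the camera sees the GUI as an axis-aligned rectangle, with no rotation or perspective distortion.

```python
from typing import Tuple

Point = Tuple[float, float]


def map_to_gui(dot: Point, top_left: Point, bottom_right: Point,
               gui_size: Tuple[float, float]) -> Point:
    """Map a red dot position from camera pixels to GUI pixels.

    Hypothetical helper: assumes the calibration points are the GUI's
    top-left and bottom-right corners in the camera frame.
    """
    # Step 1: observed width and height of the GUI in the camera frame.
    obs_w = bottom_right[0] - top_left[0]
    obs_h = bottom_right[1] - top_left[1]
    if obs_w <= 0 or obs_h <= 0:
        raise ValueError("calibration points must span a non-empty rectangle")

    # Step 2: shift the dot so the top-left corner is the origin, then
    # rescale from the observed size to the real GUI size.
    gui_w, gui_h = gui_size
    x = (dot[0] - top_left[0]) / obs_w * gui_w
    y = (dot[1] - top_left[1]) / obs_h * gui_h
    return (x, y)


# Example with made-up numbers: an 800x600 GUI seen as a 400x400 region.
print(map_to_gui((300, 200), (100, 100), (500, 500), (800, 600)))
```

A simple scale-and-offset like this ignores any tilt of the camera relative to the projected GUI, which would be one plausible source of mapping error.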

I tested my code using synthetic data: I hard-coded the calibration coordinates and red dot coordinates according to the test images we took on Tuesday. The mapping code works, but with some degree of error. My next tasks are therefore:

1. I will test my code on real-time data and make appropriate changes to improve the accuracy.

2. I will also be working on integrating the tap detection subsystem with the GUI and detection subsystems.

Below is an example test image:

Below is the GUI, with the mapped coordinate of the red dot shown as a red ‘x’. Since it falls within the button, the GUI launches the modal containing the text from the appropriate file:

I am currently on schedule.