Omar:

This week I primarily focused on transitioning our product from the previous OpenCV neural network to our TensorFlow algorithm. This involved some complicated plug and play: I needed to manually feed images from the camera into the TensorFlow neural network, pull out which of the 7 of us it recognized, and run the rest of the logic based on that result. Getting this done was a lot harder than I expected, because sending the detected face to the neural network was difficult. We detect the faces with OpenCV, and it took me a long time to understand how to extract them and send each face separately to the algorithm to be verified/recognized. I also spent a good part of this week in Detroit for a job conference, so I didn't devote as much time as I normally do to this work. Now that the interaction between the algorithm and the detected faces is set up, I have to devote a lot of time to optimizing the framerate of the algorithm and making sure it runs quickly. To do this, I'm going to optimize the photo selection process over the following week. I'm also going to investigate the face detection process to make sure I detect all the faces present at the front porch.
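As a rough illustration of the hand-off described above, here is a minimal sketch of cropping detected faces out of a frame and batching them for a classifier. It assumes the detector reports boxes as `(x, y, w, h)` tuples, a layout OpenCV detectors commonly use; the function name `crop_faces`, the 64-pixel input size, and the NumPy nearest-neighbour resize (a stand-in for a proper image resize) are all assumptions for illustration, not our actual code.

```python
import numpy as np


def crop_faces(frame, boxes, size=64):
    """Crop each (x, y, w, h) box from frame and resize it to size x size."""
    height, width = frame.shape[:2]
    crops = []
    for x, y, w, h in boxes:
        # Clip the box to the frame so a face at the edge cannot index out of range.
        x0, y0 = max(0, x), max(0, y)
        x1, y1 = min(width, x + w), min(height, y + h)
        if x1 <= x0 or y1 <= y0:
            continue  # box lies entirely outside the frame
        face = frame[y0:y1, x0:x1]
        # Nearest-neighbour resize by index sampling (assumed stand-in for an image resize).
        rows = np.arange(size) * face.shape[0] // size
        cols = np.arange(size) * face.shape[1] // size
        crops.append(face[rows][:, cols])
    if not crops:
        return np.empty((0, size, size) + frame.shape[2:], dtype=np.float32)
    # Stack into one batch and scale pixel values to [0, 1].
    return np.stack(crops).astype(np.float32) / 255.0
```

Batching all faces from one frame means the network can be called once per frame instead of once per face, which may help with the framerate work mentioned above.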

Chinedu Ojukwu:

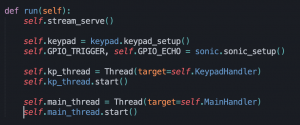

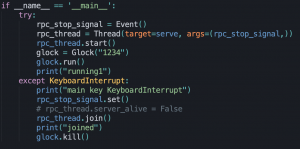

This week I focused mainly on preparing for the live checkpoint demo. To accomplish this, I worked on integrating all components and sensors so that they are handled by one Python script consisting of a GLOCK class and class methods that support our system features. Since multiple processes need to run simultaneously, I created threads that handle: listening for RPC calls from the client, listening for the correct keypress, waiting for the ultrasonic sensor to signal the beginning of the recognition process, and listening for any streaming server requests. The accompanying screenshots show how the threads are created and run.
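For readers without access to the screenshots, the threading layout can be sketched roughly as follows. This is only an approximation using Python's standard `threading` module: the class name `GLock`, the handler names, and the placeholder loop body are assumptions, and the real handlers would talk to the RPC server, keypad, sensor, and streaming server.

```python
import threading
import time


class GLock:
    """Hypothetical stand-in for the GLOCK class; handler bodies are placeholders."""

    HANDLERS = ("rpc_listener", "keypad_listener", "lock_handler", "stream_server")

    def __init__(self):
        self.stop_event = threading.Event()
        self.threads = []
        self.ticks = {}  # how many times each handler loop has run

    def _loop(self, name, poll_interval):
        # Each handler polls until stop() is called.
        while not self.stop_event.is_set():
            self.ticks[name] = self.ticks.get(name, 0) + 1
            self.stop_event.wait(poll_interval)

    def start(self):
        # One daemon thread per responsibility so they all run concurrently.
        for name in self.HANDLERS:
            thread = threading.Thread(
                target=self._loop, args=(name, 0.01), name=name, daemon=True
            )
            thread.start()
            self.threads.append(thread)

    def stop(self):
        self.stop_event.set()
        for thread in self.threads:
            thread.join(timeout=1.0)
```

Using a shared `threading.Event` gives every loop a clean way to exit, which is convenient when restarting the script during a demo.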

To go further in depth into the main lock handler: the function consists of a continuous while loop whose guard checks the distance observed by the ultrasonic sensor, and if a potential resident is seen, the process of polling frames from the camera begins. The ML suite then returns the result of the verification to the main handler, and the lock and LED activate accordingly. That said, the functionality included in the demo will be a simple RPC call to unlock the strike and keypad functionality to unlock, with the LED strip activated on each unlock.
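The control flow of the main lock handler could look something like the sketch below. The hardware and ML calls are passed in as plain callables so the loop can be exercised without a Raspberry Pi; the function name, the parameter names, and the 100 cm threshold are assumptions for illustration only.

```python
import itertools
import time


def run_lock_handler(read_distance_cm, verify_resident, activate_lock, activate_led,
                     threshold_cm=100.0, poll_interval=0.1, max_polls=None):
    """Poll the ultrasonic sensor and run verification when someone is close."""
    # max_polls=None loops forever, like the continuous while loop on the device.
    polls = itertools.count() if max_polls is None else range(max_polls)
    for _ in polls:
        # Loop guard: skip the expensive camera/ML work until someone is near.
        if read_distance_cm() < threshold_cm:
            verified = verify_resident()  # poll frames and run the ML suite
            activate_lock(verified)       # unlock only when verification passed
            activate_led(verified)        # signal the result on the LED strip
        time.sleep(poll_interval)
```

A quick dry run with fake sensors:

```python
distances = iter([250.0, 80.0])
run_lock_handler(lambda: next(distances), lambda: True, print, print,
                 poll_interval=0, max_polls=2)
```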

Joel Osei:

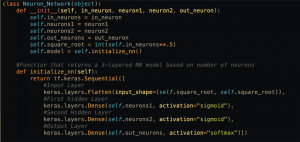

This week for our capstone we talked with Professor Tamal and Raghav about our neural network. We decided that it would be more effective for us to focus on the architecture of our neural network rather than worrying about the code for creating it from scratch. So I scrapped most of the Python code that I had written for feedforward and backpropagation and decided to use a library to help us with the machine learning. We decided TensorFlow would be the best thing for us to use, so I spent hours looking up examples of how it is used and reading the documentation. The power of TensorFlow really surprised me, as it allows for complete customization for deep learning. It gives us the ability to build different types of neural networks, from convolutional networks, to recurrent networks, to simple densely connected networks. For this project, all we needed was a 3-layer densely connected neural network. All I needed to do was create the model and decide which activation function I wanted for each layer, how many neurons each layer should have, and what the remaining parameters should be.

Once everything is connected, TensorFlow provides a clean way to compile the model and train it with whatever method we choose. We are using stochastic gradient descent with a cross-entropy loss function.
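To make the setup concrete, here is a minimal Keras sketch of a densely connected network compiled with stochastic gradient descent and a cross-entropy loss. The 7 output classes match the 7 team members; everything else (the input size, the hidden layer widths, the ReLU and softmax activations, the learning rate, and counting "3 layers" as three `Dense` layers) is an assumption for illustration rather than our final configuration.

```python
import tensorflow as tf

NUM_RESIDENTS = 7      # one output class per team member
INPUT_FEATURES = 128   # assumed length of the flattened face input


def build_model():
    """Build and compile a small densely connected classifier."""
    model = tf.keras.Sequential([
        tf.keras.Input(shape=(INPUT_FEATURES,)),
        tf.keras.layers.Dense(64, activation="relu"),    # assumed width
        tf.keras.layers.Dense(32, activation="relu"),    # assumed width
        tf.keras.layers.Dense(NUM_RESIDENTS, activation="softmax"),
    ])
    model.compile(
        optimizer=tf.keras.optimizers.SGD(learning_rate=0.01),
        loss="sparse_categorical_crossentropy",  # cross entropy with integer labels
        metrics=["accuracy"],
    )
    return model
```

With integer labels in the range 0 to 6, training would then be a call such as `model.fit(features, labels, epochs=10)`, where `features` and `labels` are placeholders for the prepared dataset.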

At this point, the biggest risk factor is the speed of the machine learning pipeline and the time it takes to complete the verification process. To address this risk, we first have to integrate the system to see whether our pipeline meets the design requirements we set. If the process is too slow, we plan to mitigate this by using two Raspberry Pis: one for the camera and ML suite, and the other to handle the remaining functionality of the system. Currently, nothing has changed in our schedule, and we are on track for the demo.