Chinedu Ojukwu

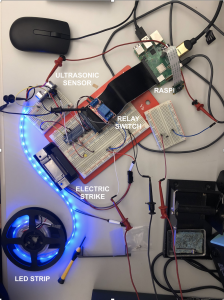

This past week, I focused on building the hardware prototype for the electric strike circuit and connecting the sensor components to the RasPi GPIO pins. The electric strike is wired to a relay module and a DC power supply, and a Python script on the Pi signals the relay module to activate the strike. In a similar fashion, I wrote a Python script that drives an ultrasonic sensor through GPIO pins and reads distances from it. Lastly, I created a simple Python script that controls the LED strip and makes it pulse in different colors. Our goal for the LED strip is for it to be green when unlocked, red when access is unauthorized, and white when idle. As of now, these components use three GPIO data lines and two 5 V VCC lines, while the other components are powered by the DC output of a power supply. To make the system more modular, we aim to power the system and all of its components from only a 5 V and a 12 V supply. The image above shows the completed circuit.
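
The logic behind these scripts can be sketched roughly as follows. This is a minimal sketch, not our actual scripts. The GPIO calls are abstracted behind a callable so the code runs off the Pi. The following are all hypothetical:

- the pin number
- the 3-second unlock pulse
- the assumption of an active-high relay input
- every function name below

On the Pi, `set_pin` could be RPi.GPIO's `GPIO.output`. The echo timestamps would come from polling the sensor's echo pin.

```python
import time
from typing import Callable, Dict, Tuple

# Approximate speed of sound in air at about 20 °C, in cm/s.
SPEED_OF_SOUND_CM_PER_S = 34300

# LED strip color for each lock state, as (R, G, B).
LED_COLORS: Dict[str, Tuple[int, int, int]] = {
    "unlocked": (0, 255, 0),        # green
    "unauthorized": (255, 0, 0),    # red
    "idle": (255, 255, 255),        # white
}


def echo_to_distance_cm(pulse_start: float, pulse_end: float) -> float:
    """Convert an ultrasonic echo pulse (timestamps in seconds) to a distance.

    The echo pulse lasts for the sound's round trip, so the one-way
    distance is half of (duration * speed of sound).
    """
    return (pulse_end - pulse_start) * SPEED_OF_SOUND_CM_PER_S / 2


def pulse_strike(set_pin: Callable[[int, bool], None], pin: int,
                 seconds: float = 3.0,
                 sleep: Callable[[float], None] = time.sleep) -> None:
    """Energize the relay (and so the strike) for `seconds`, then release it.

    Assumes an active-high relay input (hypothetical); many relay boards
    are active-low, in which case True and False below would be swapped.
    """
    set_pin(pin, True)
    try:
        sleep(seconds)
    finally:
        set_pin(pin, False)  # always release the relay, even on an error


def led_color(state: str) -> Tuple[int, int, int]:
    """Look up the LED color for a lock state; unknown states raise KeyError."""
    return LED_COLORS[state]
```

For example, an echo lasting 1 ms corresponds to roughly 17 cm. Injecting `set_pin` and `sleep` also lets the unlock sequence be tested without hardware.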

I also worked on the streaming server for the RasPi. I installed the UV4L streaming library and launched a simple client-server streaming connection: the RasPi runs a server, and a client (my laptop) connects to it and can view the Pi's camera feed. The next step is to implement remote unlocking over this server connection, which I plan to have implemented and fully fleshed out by the next TA check-in.

Joel Osei

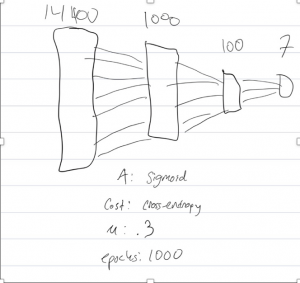

This week I’ve been working on the machine learning aspect of our capstone. This is the biggest part of our project and so far has required the most planning and design. We haven’t implemented much of the neural network itself yet, but this week I spent a lot of time finalizing design details and implementation choices for it. Although this isn’t set in stone, we decided to use a three-layer neural network, meaning three layers of weights connecting an input layer, two hidden layers, and an output layer. The image above gives a brief overview of the network.

The hidden layers will use the sigmoid activation function, while the output layer will use softmax. This means the network will output a probability for each of the 7 individuals, and identifying the person is a matter of picking the one with the highest probability. We will also set a minimum threshold to combat false positives: if the highest probability is below 80%, the network will not choose anybody and will output an error instead.
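
The decision rule described above (softmax over the 7 outputs, then an 80% cutoff) could look roughly like this. It is a sketch, assuming the output layer hands over raw scores (logits) as a plain list. Returning `None` stands in for "output an error", and the function names are hypothetical.

```python
import math
from typing import List, Optional, Sequence

CONFIDENCE_THRESHOLD = 0.80  # from the report: require at least 80% certainty


def softmax(logits: Sequence[float]) -> List[float]:
    """Turn raw output-layer scores into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def identify(logits: Sequence[float],
             threshold: float = CONFIDENCE_THRESHOLD) -> Optional[int]:
    """Return the index of the most likely person, or None if not confident."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return best if probs[best] >= threshold else None
```

With scores `[4.0, 1.0, 0.5, 0.2, 0.1, 0.0, -1.0]`, person 0 gets about 87% probability and is accepted. Seven equal scores give each person about 14%, so `identify` returns `None`.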

The code and math behind this neural network are quite complex, so we are taking our time to avoid careless mistakes. Each layer will have associated weights and biases, which will be adjusted using stochastic gradient descent to minimize a cost function. The cost function measures how far off the network’s current predictions are. Using backpropagation, we compute how much each weight and bias contributes to that cost and adjust them accordingly, which makes the network more accurate. This upcoming week will be spent collecting training data and completing the entire network so we can begin training and testing it.
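
Below is a sketch of one backpropagation and stochastic gradient descent step for the planned architecture (sigmoid, sigmoid, softmax). It is written in plain Python for readability. Several choices here are assumptions, not decisions from the report:

- The cost is cross-entropy, which the report does not specify. With softmax outputs it makes the output-layer error simply `p - y`.
- The layer sizes, the weight initialization and the `Net` class itself are hypothetical.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def softmax(z: Vector) -> Vector:
    m = max(z)
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]


class Net:
    """Input -> sigmoid hidden -> sigmoid hidden -> softmax output."""

    def __init__(self, sizes: List[int], seed: int = 0):
        rng = random.Random(seed)
        # weights[layer][j][k]: weight from unit k of one layer to unit j of the next
        self.weights: List[Matrix] = [
            [[rng.gauss(0, 1 / math.sqrt(n_in)) for _ in range(n_in)]
             for _ in range(n_out)]
            for n_in, n_out in zip(sizes[:-1], sizes[1:])
        ]
        self.biases: List[Vector] = [[0.0] * n for n in sizes[1:]]

    def forward(self, x: Vector) -> List[Vector]:
        """Return the activations of every layer, input first."""
        acts = [x]
        last = len(self.weights) - 1
        for layer, (W, b) in enumerate(zip(self.weights, self.biases)):
            z = [sum(w * a for w, a in zip(row, acts[-1])) + bj
                 for row, bj in zip(W, b)]
            acts.append(softmax(z) if layer == last else [sigmoid(v) for v in z])
        return acts

    def loss(self, x: Vector, label: int) -> float:
        """Cross-entropy cost for one example (assumed cost function)."""
        return -math.log(self.forward(x)[-1][label])

    def sgd_step(self, x: Vector, label: int, lr: float) -> None:
        """One stochastic gradient descent update on a single example."""
        acts = self.forward(x)
        # Softmax + cross-entropy: gradient w.r.t. output pre-activations is p - y.
        delta = [p - (1.0 if j == label else 0.0) for j, p in enumerate(acts[-1])]
        for layer in reversed(range(len(self.weights))):
            W, a_prev = self.weights[layer], acts[layer]
            if layer > 0:
                # Backpropagate through W *before* updating it; sigmoid'(z) = a(1 - a).
                prev_delta = [
                    sum(W[j][k] * delta[j] for j in range(len(delta)))
                    * a_prev[k] * (1 - a_prev[k])
                    for k in range(len(a_prev))
                ]
            for j, d in enumerate(delta):
                for k, a in enumerate(a_prev):
                    W[j][k] -= lr * d * a        # dC/dW[j][k] = delta_j * a_k
                self.biases[layer][j] -= lr * d  # dC/db_j = delta_j
            if layer > 0:
                delta = prev_delta
```

For example, `Net([n_inputs, h1, h2, 7])` would give one output per person. Repeatedly calling `sgd_step` on shuffled training examples is what makes the descent "stochastic". A finite-difference gradient check like the one in the tests is a cheap way to catch the kind of silly mistakes mentioned above.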

Omar Alhait

This week our parts came in, so we kicked off much of the building process and got straight to work assembling the different parts of our project. Our work was concentrated on the React Native app, the lock circuit, and the machine learning algorithm.

Most of my work was on the React Native app and on setting up the pipeline for the machine learning application. The goal is for us to work on as many things in parallel as possible, which isn’t easy when all our parts are interdependent. For this reason, I worked on the pipeline and set up APIs that stand in for components still being built while the main machine learning algorithm is written. I set up an OpenCV application and integrated its plug-and-play facial recognition algorithm into our system as a substitute for our own ML model. This lets us set up a pipeline where we can monitor the uptime and statistics of all our moving parts. So far, the OpenCV recognizer has fairly low accuracy, but it does recognize faces, so it should work well enough for the demo in the coming week if our main machine learning algorithm isn’t functional by then.
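
Here is one way to structure a swappable stand-in with monitoring. It is a sketch under stated assumptions, not the code described above. Any backend exposing `predict(image)` can sit behind a wrapper that records call counts, errors, "unknown" results and average latency. Later, the OpenCV stand-in could be replaced by the team's own network without touching the rest of the pipeline. The `Recognizer` interface, `StubRecognizer` and `MonitoredRecognizer` are hypothetical names. A thin adapter would map whatever the OpenCV recognizer returns onto this interface.

```python
import time
from dataclasses import dataclass
from typing import Callable, Optional, Protocol, Sequence


class Recognizer(Protocol):
    """Anything that maps a face image to a person ID (or None if unknown)."""

    def predict(self, image: Sequence[float]) -> Optional[int]: ...


class StubRecognizer:
    """Placeholder backend that always returns a fixed answer (for testing)."""

    def __init__(self, answer: Optional[int]):
        self.answer = answer

    def predict(self, image: Sequence[float]) -> Optional[int]:
        return self.answer


@dataclass
class MonitoredRecognizer:
    """Wraps any recognizer and records simple health statistics."""

    backend: Recognizer
    clock: Callable[[], float] = time.perf_counter
    calls: int = 0
    errors: int = 0
    unknown: int = 0
    total_latency: float = 0.0

    def predict(self, image: Sequence[float]) -> Optional[int]:
        start = self.clock()
        self.calls += 1
        try:
            result = self.backend.predict(image)
        except Exception:
            self.errors += 1
            raise  # record the failure, but let the caller handle it
        finally:
            self.total_latency += self.clock() - start
        if result is None:
            self.unknown += 1
        return result

    def stats(self) -> dict:
        avg = self.total_latency / self.calls if self.calls else 0.0
        return {"calls": self.calls, "errors": self.errors,
                "unknown": self.unknown, "avg_latency_s": avg}
```

Because the wrapper only depends on `predict`, swapping the stand-in for the real network changes the `backend` argument and nothing else.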

I also spent a lot of time on the React Native side of things. Building on the streaming server I set up last week to stream from the Raspberry Pi to my local computer, I put in about 6 or 7 hours researching how to set up streaming in React Native. I currently have an app that streams any YouTube or local video to a React Native component on my phone. React Native is proving difficult to deal with at times, because its documentation is split into subsections that don’t overlap: a solution implemented for one subsection often can’t interact with parts built with another subsection in mind. As a result, I worked myself into a hole last night, and I’m still debugging my way out to get the app running again. It’s clear that I’ll have to put much more foresight and planning into every move I make, since rash decisions leave me searching through every line of code I’ve written for an incompatible statement.

Apart from the React Native app, though, streaming is going fairly well. I expanded on the basic streaming app we set up last week, making it somewhat more robust and less lossy. We’re experiencing significant lag at times when streaming video from the Raspberry Pi on campus to my computer at home, so I’ve been experimenting with different resolutions and camera settings to make the video as smooth and clear as possible. By next week, this streaming service should be connected to the facial recognition app, letting me recognize faces remotely from a stream on a web server. Once this is done, we’ll simply have to move the data pipeline from the web client to React Native. The streaming app I already have is laying the groundwork for this, so connecting the two endpoints shouldn’t take more than a few hours of debugging and code tracing.
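
A quick back-of-the-envelope calculation helps explain why lowering resolution and frame rate helps with lag. The raw pixel rate a camera produces scales with width × height × frames per second. The encoder's actual output bitrate also depends on the codec and the scene, so this is only a rough guide. The specific settings compared below are illustrative, not the ones we tested.

```python
from typing import Tuple

Setting = Tuple[int, int, float]  # (width, height, frames per second)


def pixel_rate(setting: Setting) -> float:
    """Raw pixels per second the camera produces before compression."""
    width, height, fps = setting
    return width * height * fps


def relative_load(a: Setting, b: Setting) -> float:
    """How many times more raw pixels per second setting `a` produces than `b`."""
    return pixel_rate(a) / pixel_rate(b)


if __name__ == "__main__":
    hd = (1280, 720, 30)
    low = (640, 480, 15)
    print(relative_load(hd, low))  # prints 6.0
```

Dropping from 1280×720 at 30 fps to 640×480 at 15 fps cuts the raw pixel rate by a factor of 6. That is the kind of headroom that can make a remote stream usable over a slow link.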

Our work is still relatively on schedule. We are working on this project regularly now, so progress is steady, but time is tight. We’re aware that there are about 5 weeks left before we need a tangible project up and running, but progress is rapid, and there’s no shortage of things to work on with all the parts we have to deal with.