Team Update

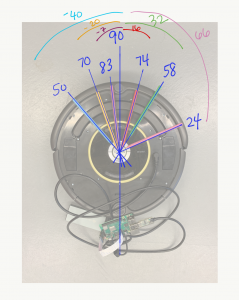

Currently, the most significant risk we foresee is the robot getting too close to humans to take a decent picture. After extensive testing, we found that our sensors only reliably reported obstacles at around 24 inches, which is very close to the distance requirement we’ve set. This means the robot would have to be fairly close to an obstacle before it could accurately detect it, which might hinder its ability to take a decent picture. Another risk is that the support structure may not be sturdy enough now that we have pivoted away from our initial, heavier structure.

To mitigate the IR sensor risk, we plan to do more testing with the sensor processing software and to experiment with IR sensor trigger values, so we can determine the farthest distance the sensors can detect without being triggered constantly. To mitigate the support structure risk, we have reduced the height of our structure so that the robot isn’t as top-heavy. We plan to keep testing in the actual test environment up until our final demo and will respond to any issues as they come up.

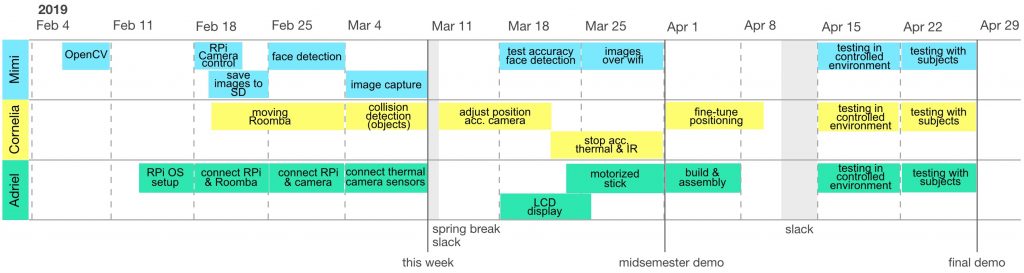

Our Gantt chart remains the same. It’s attached here for easy reference:

Adriel

This week I worked on an iteration of our robot’s support structure. The wood we originally got turned out to be too heavy, and we would risk damaging the Roomba’s motors if we kept all that weight on top. We therefore pivoted to lighter wood and a shorter support structure. I also worked on moving the top platform’s connections from a breadboard to a protoboard. All of the wires and components were soldered onto the protoboard so that nothing would come loose during our final demo.

I am currently on schedule.

In the time remaining before our final demo, I hope to resolve any outstanding issues and complete all testing.

Mimi

This week I helped build the base of our structure, which carries all of our hardware and needs to be sturdy enough to move around without being too heavy. Specifically, I helped measure and cut the wood in the wood shop, glue the pieces together, and test the structure on the robot. Cornelia and I also made some small adjustments to our code based on testing.

I am currently on schedule.

Tomorrow, we plan to do final testing and adjustments in our actual demo space. In the coming week, we will also need to deliver our final demo presentations and complete our final report.

Cornelia

This week I rehearsed and prepared for our final presentation, which took place on Monday. I also helped build the base of our structure, which involved getting lighter wood, cutting and slotting the pieces, and gluing everything together. We then assembled the entire structure and adjusted its height. We also tested the robot extensively. Mimi and I made small updates to the code after Adriel adjusted the camera movement code.

According to our Gantt chart, which remains unchanged, I’m on schedule.

With one day left before the final demo, we plan to do more testing to make sure we’re completely ready for the public demo on Monday. After that, we will work on the final report, which is due Wednesday night.