Kevin:

- Accomplishment #1 description & result

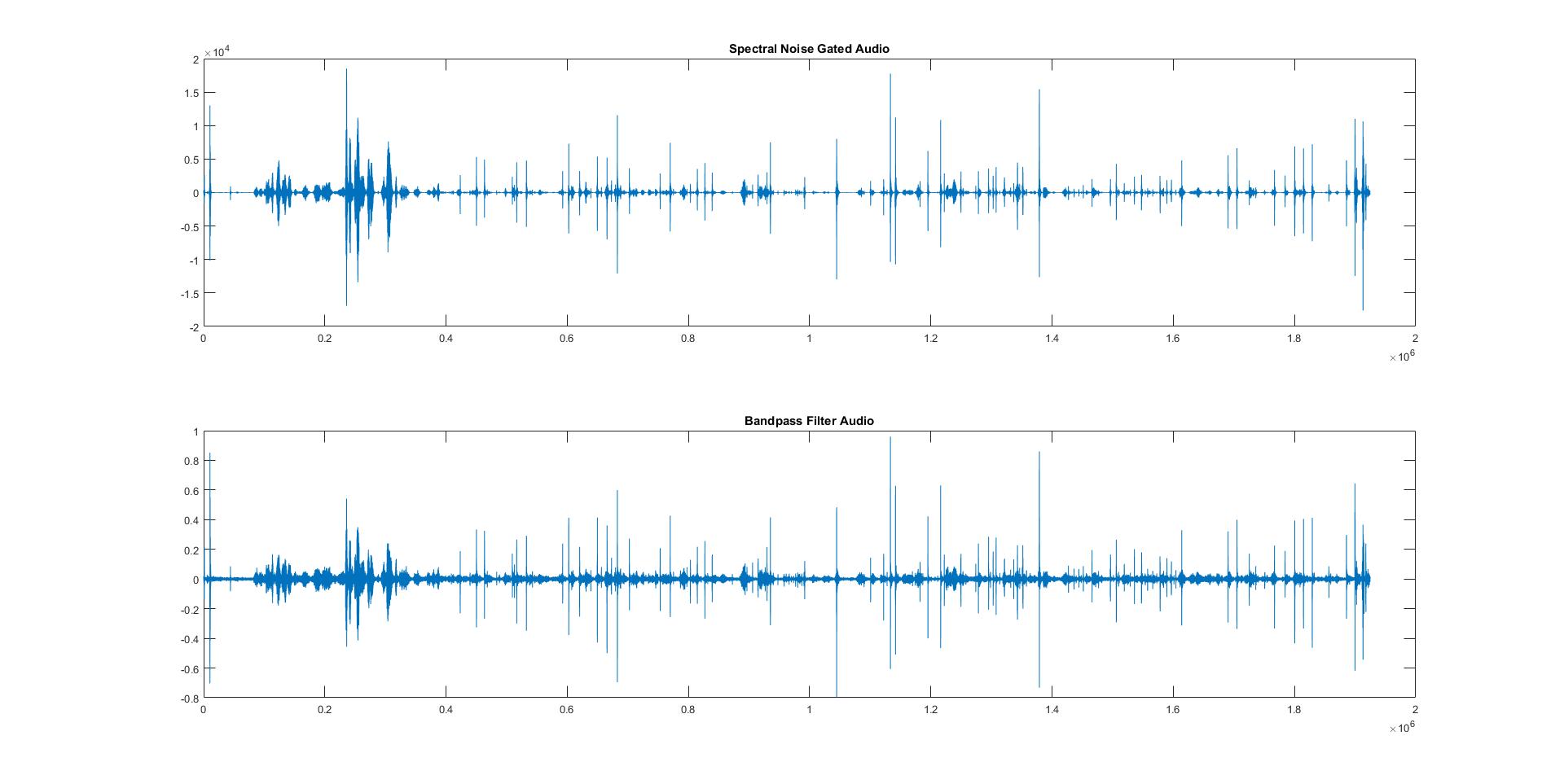

- This week I mainly focused on noise reduction. I started using a noise reduction method similar to the one Audacity uses, called spectral noise gating. A clip of pure noise is given to the algorithm to analyze: the FFT is taken over short windows to build a profile of the noise, and the algorithm then attenuates frequency content in the main audio clip that matches that profile.

- This method of filtering works well for removing stationary noise, meaning sounds that remain relatively constant, like hums, hisses, or even more complicated sounds that persist throughout the clip. However, it does not cope well with non-stationary noise such as voices or sporadic chirps.

- We collected a small sample of noisy data to test on. It contains several voices in the background as well as some generic coffee shop sounds. For the reasons described above, the stationary noise was suppressed, especially background hums and some general chatter, but the voices were mostly intact. As a result, we had quite a few false positives for keystroke detection.

- For the test, I first ran our keystroke detection algorithm with high sensitivity on the clip after a simple bandpass filter. I then extracted the noise by randomly taking about ⅓ of the samples between detected keystrokes. I gave that noise to the spectral noise gating algorithm and applied it to the bandpass-filtered audio clip. Finally, I re-ran the keystroke detection with lower sensitivity to reduce false positives.

- The result was that the noise was suppressed but, as expected, not completely gone, especially the voices. The main goal is to see whether this filtering can help us separate keystrokes more accurately. I sent the filtered data to James to see what kind of difference he can see in the features collected.

- We would like to be able to remove more noise. However, voices and other non-stationary sources of noise are very complicated to remove. Some people have had great success doing so with deep learning models, but those are trained to filter out everything except the human voice. Training our own model would not be feasible at the moment.
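The spectral noise gating and noise sampling steps described above can be sketched roughly as follows. This is a minimal illustration, not the code used for the test: it uses only the Python standard library, a naive DFT, and non-overlapping frames, whereas a practical version would use an FFT library with overlapping windows. The names `FRAME`, `sample_noise_frames`, `noise_thresholds`, and `spectral_gate`, and the parameters `n_std` and `floor_gain`, are assumptions made for this example.

```python
import cmath
import random
import statistics

FRAME = 32  # samples per analysis window (assumed; real clips would use larger windows)

def dft(frame):
    """Naive discrete Fourier transform of one frame (O(n^2), fine for a demo)."""
    n = len(frame)
    return [sum(x * cmath.exp(-2j * cmath.pi * k * t / n) for t, x in enumerate(frame))
            for k in range(n)]

def idft(spectrum):
    """Inverse of dft(); keeps the real part, since the input audio is real."""
    n = len(spectrum)
    return [sum(c * cmath.exp(2j * cmath.pi * k * t / n)
                for k, c in enumerate(spectrum)).real / n
            for t in range(n)]

def split_frames(samples, size=FRAME):
    """Cut a clip into non-overlapping frames, dropping any short tail."""
    return [samples[i:i + size] for i in range(0, len(samples) - size + 1, size)]

def sample_noise_frames(frames, keystroke_frames, fraction=1 / 3, seed=0):
    """Randomly keep about `fraction` of the frames with no detected keystroke."""
    quiet = [f for i, f in enumerate(frames) if i not in keystroke_frames]
    count = min(len(quiet), max(1, round(len(quiet) * fraction)))
    return random.Random(seed).sample(quiet, count)

def noise_thresholds(noise_frames, n_std=1.5):
    """Per-bin gate level: mean noise magnitude plus n_std standard deviations."""
    mags = [[abs(c) for c in dft(f)] for f in noise_frames]
    return [statistics.mean(col) + n_std * statistics.pstdev(col) for col in zip(*mags)]

def spectral_gate(samples, thresholds, floor_gain=0.1):
    """Attenuate every frequency bin whose magnitude is at or below its threshold."""
    out = []
    for frame in split_frames(samples, len(thresholds)):
        spectrum = dft(frame)
        gated = [c if abs(c) > th else c * floor_gain
                 for c, th in zip(spectrum, thresholds)]
        out.extend(idft(gated))
    return out
```

Under these assumptions, `spectral_gate(clip, noise_thresholds(sample_noise_frames(split_frames(clip), keystroke_frames)))` would mirror the test procedure, where `keystroke_frames` is the set of frame indices flagged by the high-sensitivity detector. Because the thresholds come from the statistics of the noise frames, a steady hum is attenuated, while a voice that changes from frame to frame mostly passes through.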

- Upcoming work #1 description & expectation

- This week I will continue to refine and study the effects of noise filtering. I will need to collect more data to help further tune the filtering process.

- I will also research removing non-stationary noise with the use of multiple microphones. The added data from a second microphone may give me some modest success in determining what is noise and what is a keystroke.

- Lastly, I will begin planning our final revision of the PCB. We are aiming for a smaller package size without as many pinouts.

James:

- Accomplishment #1 description & result

- In order to collect TDoA data, I made changes to the server to allow connections from multiple sensor boards. Upon connecting, each board is assigned a unique ID, and the timestamp of the connection is recorded. This will allow us to align the beginning of the recorded data in order to perform TDoA analysis.

- Ronit and I worked on collecting noisy data to help improve our noise reduction scheme.
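The connection bookkeeping described above (a unique ID and a connection timestamp per board) could look roughly like the sketch below. It is not the actual server code: `BoardRegistry`, `register`, and `start_offsets` are hypothetical names, and the alignment assumes each board starts streaming the moment it connects and that network latency is negligible. Connection timestamps only give a coarse alignment.

```python
import itertools
import time

class BoardRegistry:
    """Hands out board IDs and remembers when each board connected."""

    def __init__(self, clock=time.monotonic):
        self._ids = itertools.count(1)  # IDs 1, 2, 3, ... in connection order
        self._clock = clock             # injectable so the logic can be tested
        self.connected_at = {}          # board ID -> connection time in seconds

    def register(self):
        """Call once per new connection; returns the ID assigned to that board."""
        board_id = next(self._ids)
        self.connected_at[board_id] = self._clock()
        return board_id

    def start_offsets(self, sample_rate):
        """Samples to skip per board so every recording starts at the latest connect."""
        latest = max(self.connected_at.values())
        return {board_id: round((latest - t) * sample_rate)
                for board_id, t in self.connected_at.items()}
```

Boards that connected earlier have recorded more audio by the time the last board joins, so they get a larger offset and the last board to connect gets an offset of zero.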

- Upcoming work #1 description & expectation

- Using the three working boards we currently have, I will begin collecting keystroke recordings with TDoA data next week. I will then be able to perform TDoA analysis with the code that was written earlier in the semester using gunshot data. I will attempt to recluster the data with the TDoA as an additional feature.

- The board is responsible for ending the connection and allowing the server to write the data out to a .wav file. This is currently handled by resetting the board manually. In order to allow the board to automatically power down and sleep in the absence of noise, we are currently planning on utilizing the Vesper wakeup microphone to kick a watchdog timer. Ronit has already begun working on this portion of the system.
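For reference, one common way to estimate a time difference of arrival between two aligned recordings is to find the lag that maximizes their cross-correlation. The sketch below shows that idea only; whether the earlier gunshot code works this way is not stated here, and `estimate_delay`, `tdoa_seconds`, and `max_lag` are names chosen for this example.

```python
def estimate_delay(ref, other, max_lag):
    """Lag in samples (positive if `other` trails `ref`) with the highest correlation."""
    best_lag, best_score = 0, float("-inf")
    for lag in range(-max_lag, max_lag + 1):
        # Correlate ref[i] against other[i + lag] wherever both indices are valid.
        score = sum(ref[i] * other[i + lag]
                    for i in range(len(ref))
                    if 0 <= i + lag < len(other))
        if score > best_score:
            best_lag, best_score = lag, score
    return best_lag

def tdoa_seconds(lag_samples, sample_rate):
    """Convert a lag in samples to a time difference in seconds."""
    return lag_samples / sample_rate
```

The brute-force search keeps the example short. `max_lag` can be bounded by the largest physically possible delay, which is the microphone spacing divided by the speed of sound, multiplied by the sample rate.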

Ronit:

- Accomplishment #1 description & result

- We traced the trouble with our machine learning to non-representative data: our previous data was collected improperly.

- Additionally, we were not performing enough noise reduction.

- I worked with James to collect some noisy data for Kevin to test the new noise reduction technique.

- In parallel, I got the ESP32 to work with the wakeup microphone.

- Now, when there is no background noise, the wakeup microphone is switched on and the processor goes into deep sleep. The oscillator, execution units, and SRAM are clock gated and consume no energy.

- When noise above a preset threshold is detected in the environment, an interrupt is generated that brings the processor out of deep sleep to resume normal operation.

- We will order a power analyser next week to measure more accurately how much power is saved, but preliminary experiments using the oscilloscope show that the power savings are around 20%.

- We now need to modify the network stack to recognize when the processor is in deep sleep mode.
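Once the power analyser arrives, the savings from deep sleep can be estimated from three measurements: power while awake, power in deep sleep, and the fraction of time the board spends awake. The helper below is a small sketch of that arithmetic; the function names are made up for this example, and any numbers passed in are placeholders until real measurements exist.

```python
def average_power_mw(active_mw, sleep_mw, awake_fraction):
    """Time-weighted average power for a board that alternates awake and asleep."""
    if not 0.0 <= awake_fraction <= 1.0:
        raise ValueError("awake_fraction must be between 0 and 1")
    return awake_fraction * active_mw + (1.0 - awake_fraction) * sleep_mw

def power_savings(active_mw, sleep_mw, awake_fraction):
    """Fraction of power saved compared with staying awake all the time."""
    return 1.0 - average_power_mw(active_mw, sleep_mw, awake_fraction) / active_mw
```

The savings depend heavily on how often the environment is quiet, so measurements in a silent room and in a busy room will give very different results.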


- Upcoming work #1 description & expectation

- One last bit of tweaking is needed in the network stack to support TDoA data and the sleep modes on both processors.

- Next week will involve a small portion of work on the network stack, with the remaining time spent on the machine learning.

Team

- Accomplishments

- After testing, we verified that the new PCB works with the modifications discussed last week. We are able to obtain data from the main microphone over WiFi while powered by the battery. We also confirmed that the Vesper mic is working and that everything is getting the proper voltage. We believe the battery charging system is working, but further testing may be required.

- More progress was made on the signal processing side. We are tuning the keystroke detector and exploring noise reduction options.

- The server is being updated to handle TDoA data.

- Upcoming

- We will be collecting more data to aid in refinement and further development. We need data for TDoA, noise reduction, clustering, and machine learning.

- We have our demo on Wednesday and will be showing the data collection and networking portion of our project.

- We will be refining our feature extraction, clustering, and machine learning this week to prepare for integrating everything together.

- Changes to schedule

- We are behind on integration because not all of the parts are complete yet. Most of this week will be focused on getting everything ready for integration.