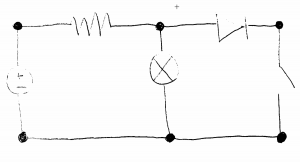

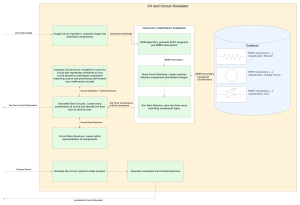

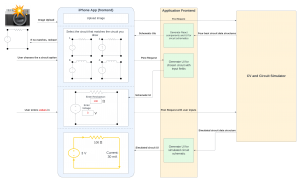

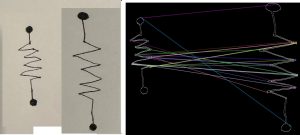

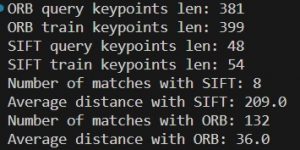

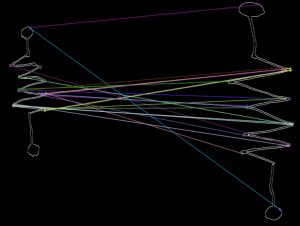

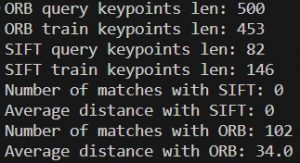

The only major change to the design was the addition of preprocessing algorithms to the computer vision pipeline. We knew we would need preprocessing, but we were not sure exactly which algorithms we would use. When we receive the user’s image, we convert it to grayscale and then apply a simple binary threshold, which removes the effects of varying lighting, shadows, and paper grain. Next, we apply an aggressive median blur that eliminates every drawn mark except the dark, filled-in circles that represent nodes. This preprocessing has been our major change and our major success, because it is what allowed our Hough circle detection to work (see Stephen’s report for more information).
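The preprocessing chain described above can be sketched in plain Python. The report does not show the team's code, so this is only a minimal sketch under stated assumptions: images are stored as nested lists of RGB tuples, the threshold is a fixed 128, and the median window is 5×5. These values and the function names (`to_grayscale`, `threshold`, `median_blur`, `preprocess`, `make_test_image`) are hypothetical. The Hough circle step itself is not shown; a library routine such as OpenCV's `cv2.HoughCircles` would typically run on the blurred output.

```python
# Minimal pure-Python sketch of the preprocessing chain: grayscale ->
# binary threshold -> median blur. Images are lists of rows (hypothetical
# representation); the threshold and window size are illustrative, not tuned.


def to_grayscale(rgb):
    """Convert rows of (r, g, b) tuples (0-255) to gray levels using BT.601 weights."""
    return [[round(0.299 * r + 0.587 * g + 0.114 * b) for (r, g, b) in row]
            for row in rgb]


def threshold(gray, thresh=128):
    """Binary threshold: pixels darker than `thresh` become 0 (ink), the rest 255."""
    return [[0 if p < thresh else 255 for p in row] for row in gray]


def median_blur(img, k=5):
    """Replace each pixel by the median of its k x k neighbourhood (k odd).

    Out-of-range coordinates are clamped to the nearest edge pixel."""
    h, w, r = len(img), len(img[0]), k // 2
    out = []
    for y in range(h):
        row = []
        for x in range(w):
            window = sorted(
                img[min(max(y + dy, 0), h - 1)][min(max(x + dx, 0), w - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            )
            row.append(window[len(window) // 2])
        out.append(row)
    return out


def preprocess(rgb, thresh=128, k=5):
    """Grayscale, threshold, then median-blur an RGB image."""
    return median_blur(threshold(to_grayscale(rgb), thresh), k)


def make_test_image(size=20):
    """Synthetic drawing: a 1-pixel 'wire' on row 3 and a filled disc (a 'node')
    of radius 3 centred at (13, 13), in dark ink on white paper."""
    white, ink = (255, 255, 255), (30, 30, 30)
    img = [[white] * size for _ in range(size)]
    for x in range(size):
        img[3][x] = ink
    for y in range(size):
        for x in range(size):
            if (x - 13) ** 2 + (y - 13) ** 2 <= 9:
                img[y][x] = ink
    return img


if __name__ == "__main__":
    out = preprocess(make_test_image())
    print("wire survives:", any(p == 0 for p in out[3]))  # False
    print("node centre is ink:", out[13][13] == 0)       # True
```

The synthetic image shows why an aggressive median blur separates nodes from other marks: a one-pixel-wide line never fills more than 5 of the 25 pixels in a 5×5 window, so the median is white and the line disappears, while the interior of a filled disc stays black.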

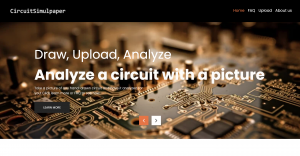

This was the first week since we switched from a web application to an iOS application. Much of the early part of the week was spent researching Swift and studying examples to develop parts of the app’s UI. The app’s front page is complete, and the photo upload page needs only a little more time to be finished. The next step is to feed the uploaded picture of the hand-drawn circuit into the computer vision algorithm.

The schedule is unchanged except for Stephen’s reworked schedule (see below). The circuit simulator is running slightly behind because Devan was sick, but he will work over fall break to make up the lost time. Jaden will also work on the phone application throughout fall break.

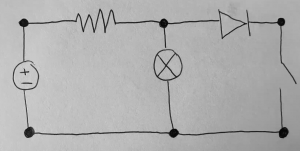

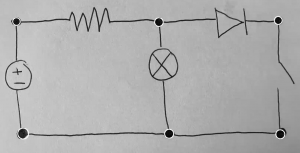

Two engineering principles that we used to develop our design are linear algebra and image processing. Our circuit analysis tool relies on nodal analysis, which writes an equation for every node in the circuit except the reference (ground) node. Each equation applies Kirchhoff’s current law: the currents flowing into and out of the node must sum to zero, with each current expressed in terms of the unknown node voltages. Together, these equations form a matrix system that represents the circuit, which we then solve for the node voltages. We also need to preprocess the images that users upload so that our computer vision algorithm can recognize them more easily. Each image passes through a grayscale filter, a binary threshold filter, and then a median blur so that the algorithm can detect the nodes at the end of each component.
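The matrix formulation of nodal analysis can be sketched as follows. The report does not say how the team's solver represents circuits, so this is a minimal sketch under assumptions: the circuit contains only resistors and independent current sources, node 0 is ground, and the function names `nodal_analysis` and `solve_linear` are hypothetical. Each resistor of conductance g = 1/R between nodes a and b adds g to the diagonal entries G[a][a] and G[b][b] and subtracts g from the off-diagonal entries G[a][b] and G[b][a]; ground gets no row, so there is one equation per non-ground node. Voltage sources would require modified nodal analysis, which is not shown.

```python
# Minimal nodal-analysis sketch: build the conductance matrix G and the
# injected-current vector I, then solve G v = I for the node voltages.
# Assumes only resistors and independent current sources; node 0 is ground.


def solve_linear(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(A[i]) + [b[i]] for i in range(n)]  # augmented matrix copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        if abs(M[pivot][col]) < 1e-15:  # pivot is (numerically) zero
            raise ValueError("singular matrix: is some node floating?")
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):  # back substitution
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x


def nodal_analysis(num_nodes, resistors, current_sources):
    """Return node voltages [v0 = 0, v1, ..., v_{num_nodes-1}].

    resistors:       (node_a, node_b, ohms)
    current_sources: (from_node, to_node, amps); the source drives `amps`
                     out of from_node and into to_node.
    """
    n = num_nodes - 1  # one KCL equation per non-ground node
    G = [[0.0] * n for _ in range(n)]
    I = [0.0] * n
    for a, b, ohms in resistors:
        g = 1.0 / ohms
        if a != 0:
            G[a - 1][a - 1] += g
        if b != 0:
            G[b - 1][b - 1] += g
        if a != 0 and b != 0:
            G[a - 1][b - 1] -= g
            G[b - 1][a - 1] -= g
    for src, dst, amps in current_sources:
        if dst != 0:
            I[dst - 1] += amps
        if src != 0:
            I[src - 1] -= amps
    return [0.0] + solve_linear(G, I)


if __name__ == "__main__":
    # 1 mA source from ground into node 1; 1 kΩ from node 1 to node 2;
    # 1 kΩ from node 2 to ground. Expect v1 = 2 V, v2 = 1 V.
    v = nodal_analysis(3, [(1, 2, 1000.0), (2, 0, 1000.0)], [(0, 1, 1e-3)])
    print([round(x, 6) for x in v])
```

In a production tool, a library solver such as `numpy.linalg.solve` would normally replace the hand-written elimination; it is written out here only to keep the block dependency-free.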