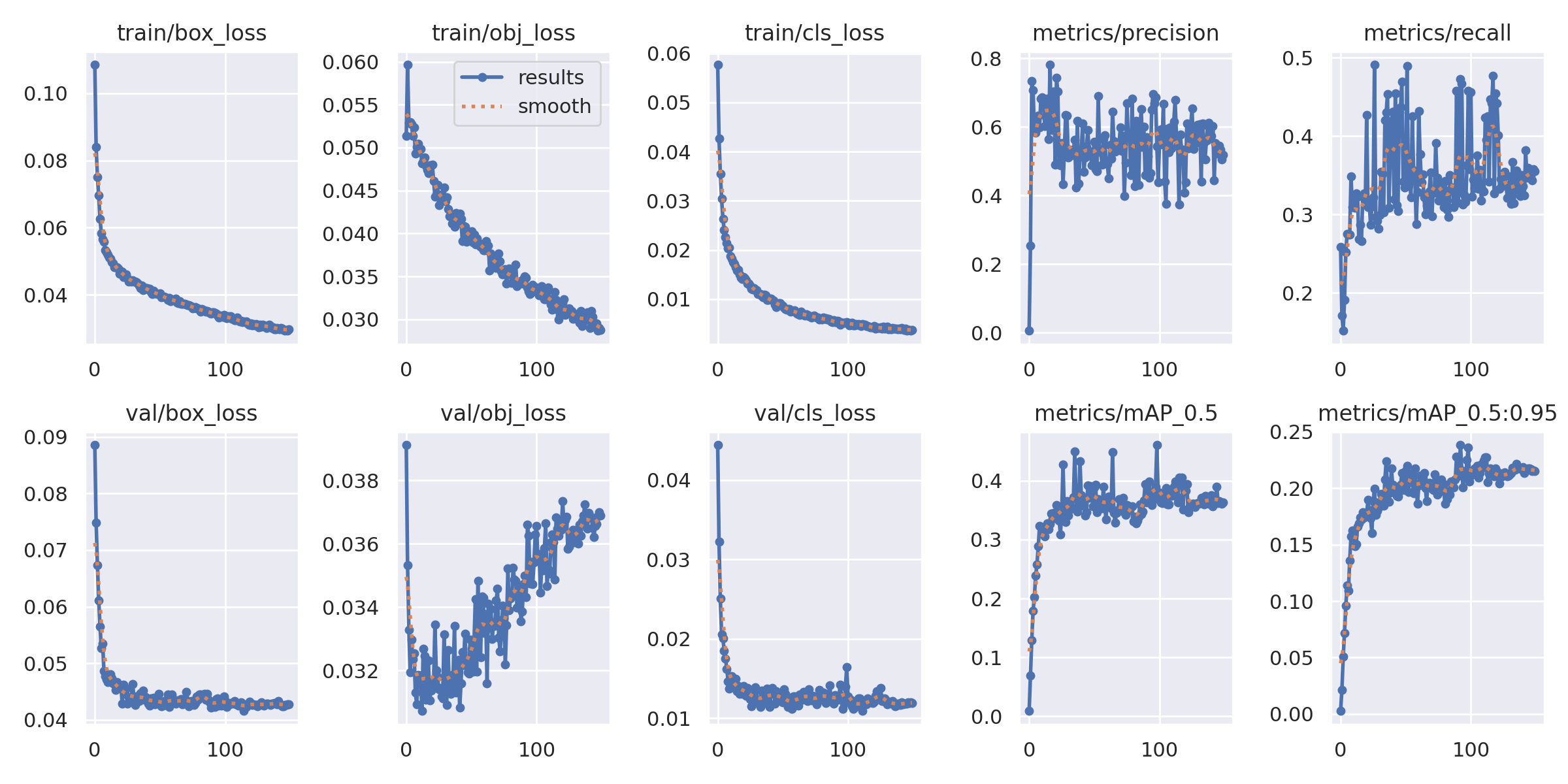

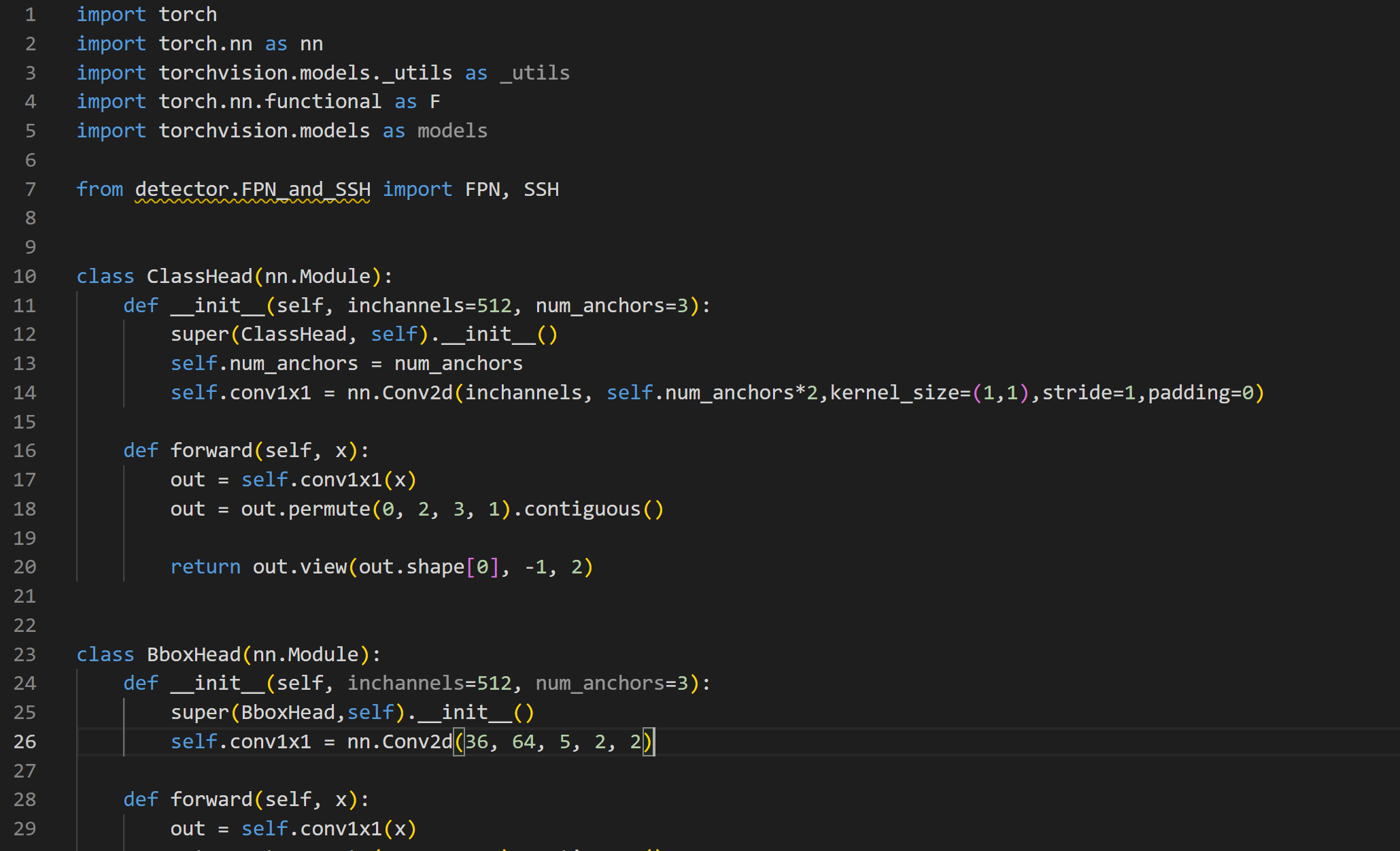

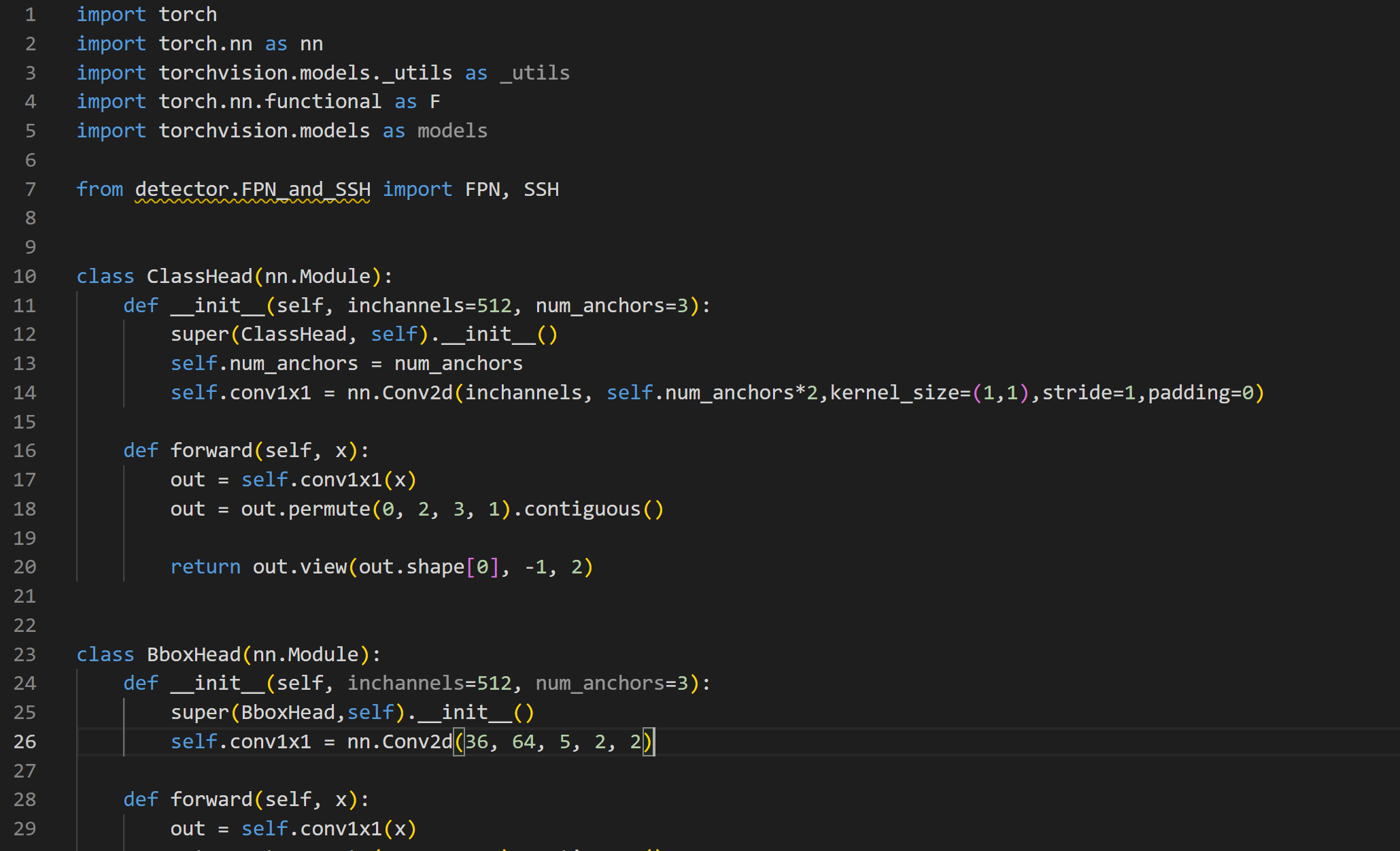

This week I explored the deep learning model for object detection. I read through the code for the backbone, neck, and head, and browsed the available model families to decide which one to use. This is the code of the detector:

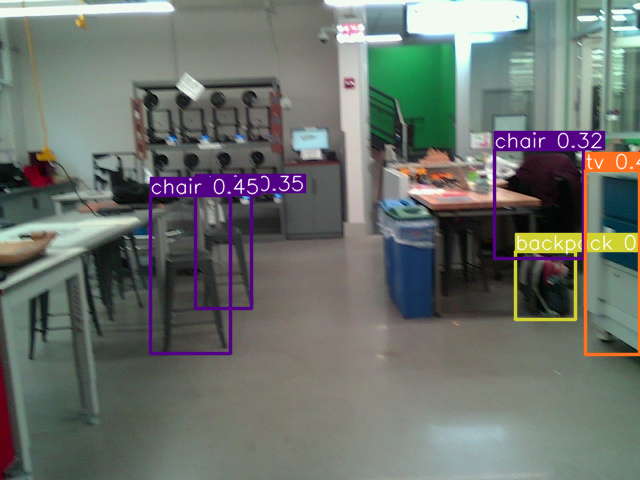

I also looked at how objects are identified using bounding boxes, and ran a facial recognition demo to show how human faces are detected with facial landmarks.
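To make the bounding-box idea concrete, here is a minimal sketch of intersection over union (IoU), a common way to score how well a predicted box overlaps a reference box. The `(x1, y1, x2, y2)` corner format and the function name `iou` are my own assumptions for illustration; they are not taken from the detector code.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2).

    Assumes x1 <= x2 and y1 <= y2 for both boxes (illustrative convention).
    """
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b

    # Width and height of the overlapping region, clamped at zero
    # so that non-overlapping boxes give an empty intersection.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h

    area_a = (ax2 - ax1) * (ay2 - ay1)
    area_b = (bx2 - bx1) * (by2 - by1)
    union = area_a + area_b - inter

    # Guard against degenerate (zero-area) boxes.
    return inter / union if union > 0 else 0.0


if __name__ == "__main__":
    predicted = (0, 0, 2, 2)
    reference = (1, 1, 3, 3)
    print(iou(predicted, reference))
```

A score of 1.0 means the two boxes coincide and 0.0 means they do not overlap at all.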

The project is roughly on schedule. Next week, I hope to have a working version of the object detection network.

Individual question:

ABET #7 says: An ability to acquire and apply new knowledge as needed, using appropriate learning strategies

As you’ve now established a set of sub-systems necessary to implement your project, what new tools are you looking into learning so you are able to accomplish your planned tasks?

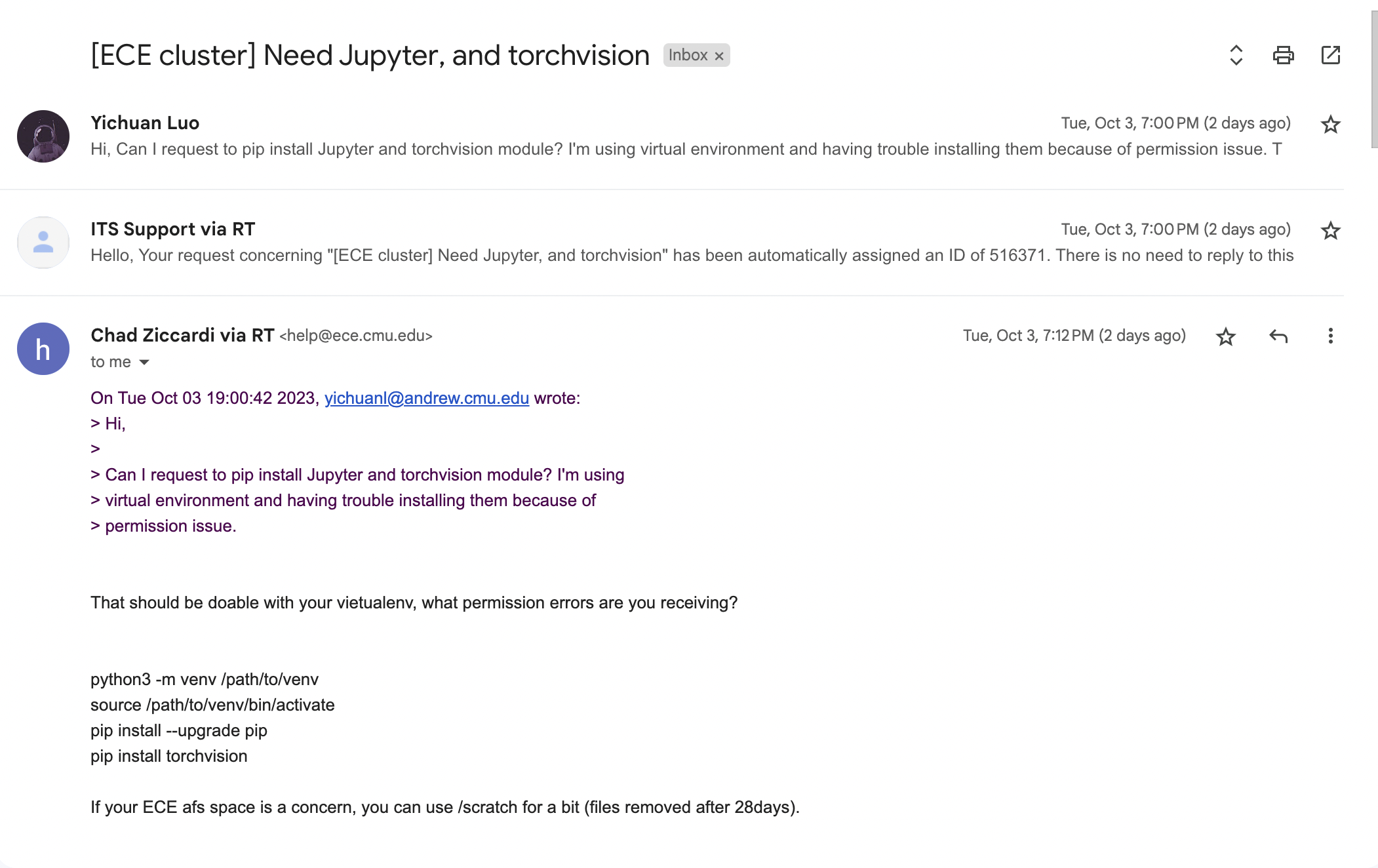

Personally, I will learn how object detection models work, and the fine-tuning tradeoffs among different CNN backbones, FPN variants, and heads. To train the model, I will also learn how to work with Jupyter Notebook.