This week I spent most of my time further integrating the Raspberry Pi with the Teensy. This mainly involved receiving feedback from the guitar and forwarding it to the website.

To implement this feature seamlessly from the front end, I took a top-down approach to transferring information between the systems. The main issue was keeping the physical guitar's state properly synchronized with the virtual one on the website. To ensure the website always stays up to date, I added a JavaScript function that polls the server for feedback every 100 milliseconds. The feedback returned includes whether or not the player strummed correctly and a timestamp of the strum. If a note is strummed correctly, the website updates the virtual guitar to stay in sync with the physical guitar and the Teensy.

For the Raspberry Pi to retrieve feedback from the Teensy, it continually reads the UART port for packets that contain feedback on the user's playing. We removed blocking reads from the UART port because they incurred a significant time penalty on the website's updates. Instead, the read now returns immediately even if there is nothing to read. This way the website can send multiple requests for feedback every second without waiting for actual user input. In addition to receiving information from the Teensy, I reworked the file transmission so that the Pi now sends more metadata to the Teensy, including the tempo and volume of the speaker metronome and the playing mode.
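The non-blocking read described above can be sketched roughly as follows. This is only an illustration, not our actual driver: `poll_feedback`, `FakePort`, and the fixed `packet_size` are hypothetical names, and the real feedback packet layout is not shown here. The sketch assumes a port object that exposes `in_waiting` and `read(size)`, which is the interface pyserial's `Serial` class provides (opening it with `timeout=0` puts it in non-blocking mode).

```python
class FakePort:
    """Hypothetical stand-in for a serial port, used only to demo the logic."""

    def __init__(self, data=b""):
        self._buffer = bytearray(data)

    @property
    def in_waiting(self):
        # Number of bytes currently buffered and ready to read.
        return len(self._buffer)

    def read(self, size=1):
        # Return up to `size` buffered bytes and remove them from the buffer.
        chunk = bytes(self._buffer[:size])
        del self._buffer[:size]
        return chunk


def poll_feedback(port, packet_size):
    """Return every complete packet already buffered, without waiting.

    `packet_size` is an assumed fixed packet length. If fewer than
    `packet_size` bytes are available, the function returns immediately
    and leaves the partial packet in the buffer for the next poll.
    """
    packets = []
    while port.in_waiting >= packet_size:
        packets.append(port.read(packet_size))
    return packets
```

Because the function never waits for data, a request from the website that arrives while the user is idle simply gets an empty list back.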

For next week I would like to implement a way for the user to view the statistics gathered from their playing data. Additionally, I am looking to implement the “scales” mode that just shows the user all the notes of a particular scale.

Ashwin’s Status Report for 11/18/2023

This week I focused the majority of my time on the integration of the Raspberry Pi and the Teensy microcontroller. This involved further development of the communication standard between the two devices. Initially, I tried to use the pretty_midi Python library to aid in processing and transmitting the MIDI file. The processing included adjusting the timing of the notes based on the speed at which the user wished to play the file, and stripping the file of any unplayed instruments. However, on further inspection, we noticed that the library took too many liberties with the byte representation of the file, making it difficult for the Teensy to parse the data. So we decided to create our own format for sending the necessary data. We decided on this protocol:

Preamble: the first 4 bytes of the file, containing the length of the file (including the 4 length bytes)

Each entry represents a note and is 5 bytes:

First 4 bytes: start time of the note in milliseconds

Last byte: bits 0-3 are fret, 4-5 are string

Using these rules, the Teensy will be able to parse the data with much less complexity. This method was implemented on the Raspberry Pi using UART.
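As a rough illustration of the format above, here is a minimal Python sketch of packing and unpacking a note list. The function names are hypothetical, and two details are assumptions because the rules above do not state them: multi-byte values are taken to be little-endian, and bit 0 is taken to be the least significant bit of the last byte.

```python
import struct


def pack_note(start_ms, fret, string):
    """Pack one note into 5 bytes: 4-byte start time, then a position byte."""
    if not 0 <= fret <= 0x0F:
        raise ValueError("fret must fit in 4 bits")
    if not 0 <= string <= 0x03:
        raise ValueError("string must fit in 2 bits")
    # Bits 0-3 hold the fret, bits 4-5 hold the string (bit 0 = LSB, assumed).
    position = (string << 4) | fret
    # "<IB" = little-endian (assumed), unsigned 32-bit int, unsigned byte.
    return struct.pack("<IB", start_ms, position)


def encode_song(notes):
    """Build the full payload: 4-byte length preamble followed by the notes."""
    body = b"".join(pack_note(*note) for note in notes)
    total_length = 4 + len(body)  # the length includes the 4 length bytes
    return struct.pack("<I", total_length) + body


def decode_song(payload):
    """Inverse of encode_song, mirroring what the receiver has to do."""
    (total_length,) = struct.unpack_from("<I", payload, 0)
    notes = []
    for offset in range(4, total_length, 5):
        start_ms, position = struct.unpack_from("<IB", payload, offset)
        notes.append((start_ms, position & 0x0F, (position >> 4) & 0x03))
    return notes
```

For example, `encode_song([(0, 3, 1), (500, 0, 2)])` produces 14 bytes: a 4-byte preamble holding the value 14, followed by two 5-byte note entries.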

This progress on the UART communication puts me on track with the schedule we decided on. For next week, I want to continue working on the communication between the Pi and the Teensy. The Teensy should now send information about the user's input back to the Pi. To implement this, we will need to define another protocol over UART for sending packets that contain the time the user played a strum and whether the note was correct. In the future, the Pi will use this data to generate statistics on the user's playing and display them on the website.

Ashwin’s Status Report for 11/11/2023

This week I made significant progress on the web interface for the Superfret system. Now when the user clicks on a playable file, they are prompted to submit a short form that lets them customize their playing experience. This includes handy features like configuring the playback speed, choosing the specific track in the MIDI file, and choosing between training and performance modes. Training mode waits for the user to strum each note before continuing, allowing the user to take their time learning the song. Performance mode does not pause the song unless the pause button is clicked. Once the submit button is clicked, the bass guitar pops up on the screen along with the notes, which begin to slide into place. To implement pausing in the system, I had to redesign how each note was drawn on the canvas. Initially, each note was handled by its own thread that slept until it was time to play the note. Now I draw every note at its start time on one very tall canvas and slowly slide the entire page of notes down as a single unit. This allowed me to implement pausing very quickly. Additionally, I added a note-worthy feature: playback audio. A user can now click the ‘toggle listening’ button to hear their MIDI file play out loud on the website. To implement the audio, I used the Tone.js JavaScript library to create a synthesizer (I gave it settings to make it sound like a bass). Then every time a note is played on the canvas, the synthesizer uses the MIDI file information to sound out the note in real time.
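To show why sliding one tall canvas makes pausing simple, here is a small sketch of the idea. The real implementation lives in the front-end JavaScript; this Python version, including the `ScrollClock` name and the `pixels_per_ms` parameter, is hypothetical and only models the timing logic. The scroll offset depends on a single song clock, so pausing only needs to stop that clock.

```python
class ScrollClock:
    """Tracks song time so the whole note canvas can scroll as one unit."""

    def __init__(self, pixels_per_ms):
        self.pixels_per_ms = pixels_per_ms  # assumed constant scroll speed
        self._elapsed_ms = 0.0    # song time accumulated before the last pause
        self._resumed_at = None   # wall-clock ms of last resume; None if paused

    def resume(self, now_ms):
        if self._resumed_at is None:
            self._resumed_at = now_ms

    def pause(self, now_ms):
        if self._resumed_at is not None:
            # Bank the time played so far, then stop the clock.
            self._elapsed_ms += now_ms - self._resumed_at
            self._resumed_at = None

    def song_time_ms(self, now_ms):
        if self._resumed_at is None:
            return self._elapsed_ms
        return self._elapsed_ms + (now_ms - self._resumed_at)

    def offset_px(self, now_ms):
        # How far the tall canvas has slid down at wall-clock time now_ms.
        return self.song_time_ms(now_ms) * self.pixels_per_ms
```

With one clock per song instead of one timer per note, training mode can call `pause` while waiting for a strum and `resume` once it arrives, and every note stays aligned.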

For next week, I would like to work on the integration between the Pi and the Teensy. To do this, I need to make sure the web interface logic agrees with the Teensy's finite state machine, and we need to set up and test the interrupt pins and the UART connections.

Ashwin’s Status Report for 11/4/2023

This week I made further progress on the web interface for the Superfret guitar. Now when the user activates a guitar MIDI file, instead of just seeing a pop-up indicating that the song is active, they are directed to an interactive page that visually displays the notes of the file being played. Here is how it works:

I developed an additional file called MidiFileReader.py which extracts the notes from a file that a user submitted and tokenizes them into an array of note objects which include the note value, time at which it is played, and the fret and string to play the note on. This way, I was able to modify the front end to process this array of tokenized notes and produce the moving blocks on the guitar at the right time and right place.

Retrieving the appropriate string and fret for a MIDI note required me to develop an algorithm that translates between the two. To do this, I created a function that takes a midi_note and a prev_midi_note as parameters. The algorithm checks each of the four strings to see if the midi_note is playable on that string. If so, it computes the distance from that position to the previous note using a simple distance formula. It then picks the option that is closest to the previous note. This ensures that a beginner guitarist will play sequences of notes that are close together rather than far apart.
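A minimal sketch of this kind of selection is shown below. It is not the exact function from our codebase: the name `choose_position` is hypothetical, it takes the previous (string, fret) position rather than prev_midi_note to keep the example short, and it assumes standard four-string bass tuning (E1, A1, D2, G2), a 12-fret limit, and Euclidean distance as the "simple distance formula".

```python
import math

# Assumed open-string MIDI note numbers for standard bass tuning: E1, A1, D2, G2.
OPEN_STRING_NOTES = [28, 33, 38, 43]
MAX_FRET = 12  # assumed highest usable fret


def choose_position(midi_note, prev_position=None):
    """Pick the (string, fret) for midi_note closest to the previous position.

    Returns None if the note cannot be played on any string.
    """
    candidates = []
    for string, open_note in enumerate(OPEN_STRING_NOTES):
        fret = midi_note - open_note
        if 0 <= fret <= MAX_FRET:
            candidates.append((string, fret))
    if not candidates:
        return None
    if prev_position is None:
        # No previous note: default to the lowest fret (an arbitrary choice).
        return min(candidates, key=lambda pos: pos[1])
    prev_string, prev_fret = prev_position
    return min(
        candidates,
        key=lambda pos: math.hypot(pos[0] - prev_string, pos[1] - prev_fret),
    )
```

For example, MIDI note 40 can be played at fret 12 on the E string, fret 7 on the A string, or fret 2 on the D string. If the previous note was at fret 3 on the D string, the sketch picks fret 2 on the D string.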

For next week, I would like to implement a pausing mechanism within the file playback. This would make the MIDI file player interactive, as it will wait for the user to play the notes on the guitar before continuing.

Ashwin’s Status Report for 10/28/2023

In the last couple of weeks, I have made further progress on the software components of the Superfret system. First, I developed a UART driver that allows the web app hosted on the Raspberry Pi to communicate directly with the Teensy microcontroller via a simple interface. We also conducted rudimentary tests of the communication and were able to successfully send entire MIDI files as well as interrupt signals using the driver. The remaining work on this component is to refine the communication standard as the hardware develops, to enable seamless communication and synchronization between the two processors. This puts me on track with our projected schedule. Additionally, I began working on the dynamic scrolling note feed, since our team determined it would be a very nice feature to have from our users' perspective. This is a much more involved task, so I broke it down into separate categories:

– Display Alignment

– Display synchronization

– Midi file parsing and sending

– Translation from note number to fret and string number

So far, I have made significant progress in the display alignment category. This task involved creating a dynamic webpage with moving shapes. I implemented this behavior using HTML canvas graphics manipulated by front-end JavaScript. I created a demo webpage that helped me debug the page and that showcases its functionality:

For next week I hope to have made significant progress on the dynamic guitar display. Progress in any of the categories for this task will help improve its functionality and help determine the viability of the feature.

Ashwin’s Status Report for 10/7

This week I worked more on the website to implement the backend functionality that will eventually allow us to send MIDI files to the guitar via a web interface, as well as stop and start songs. The work primarily consisted of creating database endpoints and linking the front end to the backend via JavaScript. More specifically:

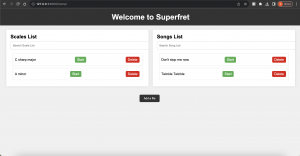

Here is the website without any songs in the database (we would have pre-downloaded files for final user experience). Clicking on the add file button allows you to add a file:

Here you can designate a name, a file, and whether it is a song or a scale file.

After you click submit, the file will appear in the list that you chose. This is significant as it means the file is stored on the Pi itself allowing us to parse it and display it on the guitar in real-time. You can then delete the file if you no longer wish to use it or start the file to theoretically begin the song on the guitar.

Upon clicking the start button, the webpage freezes and a popup appears indicating that a session is in progress. The user can then stop the song at any time using the stop button.

This completes most of the basic functionality for the GUI, which puts me on schedule. However, in the future, we plan to implement a dynamic scrolling guitar display (like the one in Guitar Hero) on the website. This would allow the user to refer to both the guitar and the website for guidance. For next week, I hope to implement a UART driver that allows the Raspberry Pi to communicate with the microcontroller.

Ashwin’s Status Report for 9/30/23

In developing the design for our project, there was a significant software component that allowed me to use skills I learned in 17-214 Principles of Software Construction and 17-437 Web App Development. Specifically, I knew that our web app would need a model-view-controller design pattern to support maintainability and testability while isolating separate components.

After deciding on this, I spent time researching the best library for developing a web app and ultimately decided on using Django. This is a high-level web framework for Python that promotes rapid development and clean, pragmatic design, providing built-in features like an ORM, admin interface, and robust security, making it an efficient choice for developing maintainable web applications.

I created a GitHub repository for our codebase at https://github.com/ashwin-godura/18500-SUPERFRET, and started coding up the user interface. I developed a static webpage that visualizes how our users will interact with our device. This puts me on schedule with the development on the software side. Next week I hope to have most of the user-facing logic complete with the ability to add and delete files and start and stop songs.

Ashwin’s Status Report for 9/23

Over the past week, I contributed to authoring design requirements and began work on a Python web app that will become our product’s UI.