- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

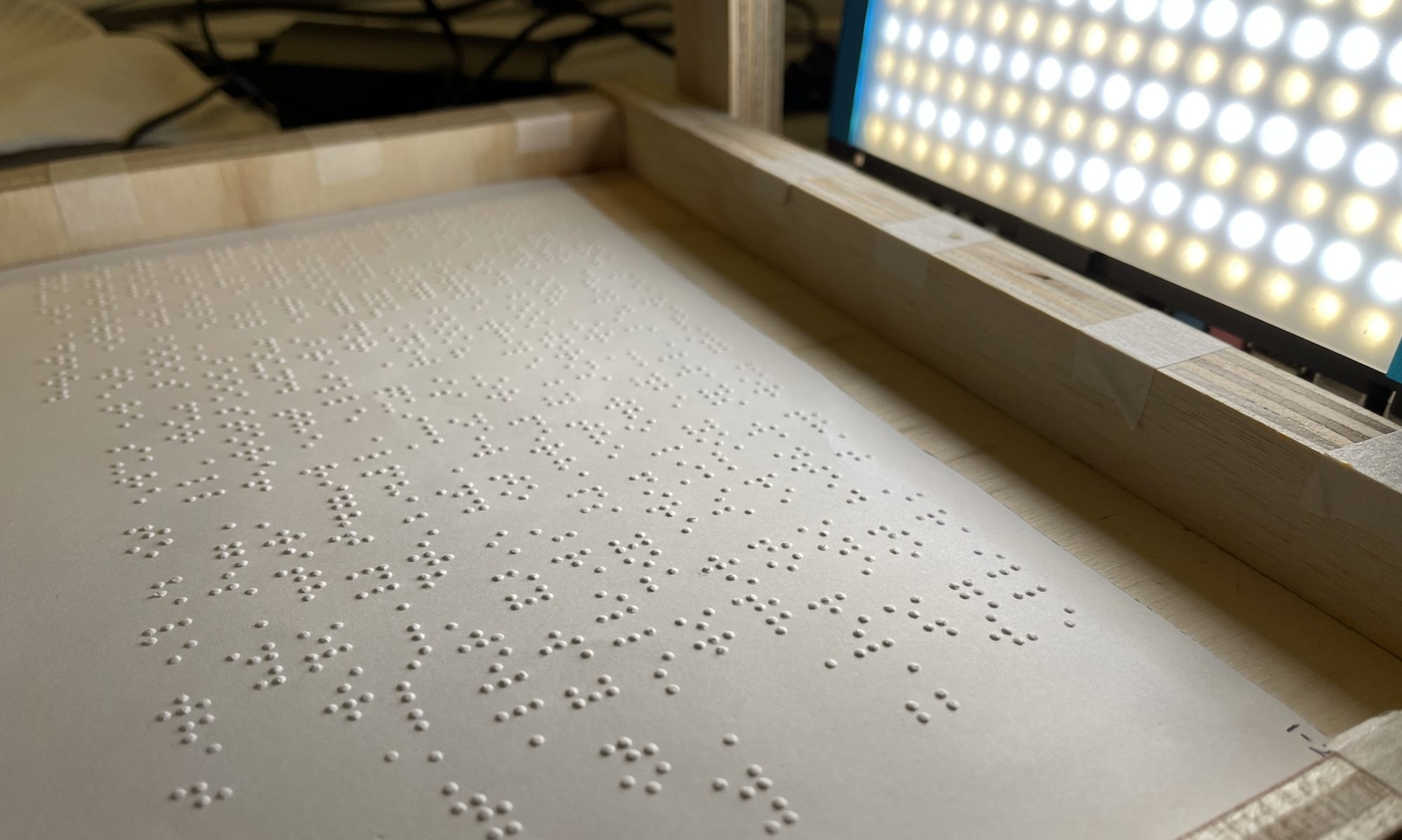

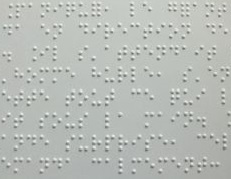

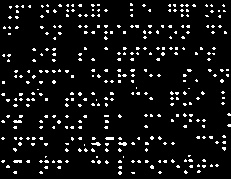

This week I experimented with OpenCV functions that convert an image to grayscale, reduce noise and enhance edge contrast, and binarize the result. I applied different filters while controlling the other variables, aiming for a clean pre-processed image of braille text that makes it easier for our ML recognition algorithm to identify each braille character. For example, my Python test script displays several candidate intermediate results of applying these filters to the original braille image.

After the initial pre-processing stage, the image needs to be segmented both vertically and horizontally. Each cropped braille character is then saved as one of a sequence of JPEGs in a separate folder, where the ML recognition algorithm picks it up and translates it to the corresponding English letter. This week I worked on vertical segmentation; horizontal segmentation and the cropping and saving steps will follow a similar approach in the coming weeks.
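One common way to approach this kind of segmentation is a projection profile: mark which pixel columns contain any foreground and cut wherever a long enough blank gap appears. The sketch below assumes that "vertical segmentation" means cutting along vertical lines, and that the input is a binarized image with white (non-zero) dots. The function name `find_column_spans` and the `min_gap` parameter are hypothetical.

```python
import numpy as np


def find_column_spans(binary, min_gap=3):
    """Return (start, end) column ranges that contain foreground pixels.

    `end` is exclusive. Blank gaps narrower than `min_gap` columns do not
    split a span, so the two dot columns of one braille cell stay together
    as long as `min_gap` exceeds the spacing between them.
    """
    has_ink = (binary > 0).any(axis=0)  # True for columns with any dot pixel
    spans = []
    start = None  # first column of the span being built
    gap = 0       # length of the current run of blank columns

    for x, ink in enumerate(has_ink):
        if ink:
            if start is None:
                start = x
            gap = 0
        elif start is not None:
            gap += 1
            if gap >= min_gap:
                # Close the span just after its last inked column.
                spans.append((start, x - gap + 1))
                start = None
                gap = 0

    if start is not None:
        # The image ended while a span was still open.
        spans.append((start, len(has_ink) - gap))
    return spans
```

Each span can then be cropped with `binary[:, start:end]`. Running the same function on the transposed image (`binary.T`) would give the row spans for the other direction.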

To parallelize our team's workflow, I manually cropped the first 5 or 7 braille characters from each version of the pre-processed images and handed them to Kevin for the next step of our algorithm, the recognition phase. Kevin will test the ML recognition algorithm on them and report translation accuracy for the different levels of pre-processing clarity. My goal from there is to keep improving the image processing until it meets the thresholds that Kevin's metrics require.

Lastly, I investigated another way to improve accuracy in the recognition phase: non-max suppression from the imutils library. Because colored circles are drawn on top of the existing braille dots, pre-processing and cropping individual braille characters this way may yield a relatively higher accuracy rate. I will write the non-max suppression code from scratch in the coming weeks.
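For reference, a from-scratch version of non-max suppression could look roughly like the sketch below. It is the standard greedy, IoU-based variant and is not necessarily what the imutils implementation does. The box format `(x1, y1, x2, y2)`, the per-box scores, and the default threshold are assumptions made for illustration, and boxes are assumed to have non-zero area.

```python
import numpy as np


def non_max_suppression(boxes, scores, iou_threshold=0.3):
    """Greedy NMS: return indices of the boxes to keep, best score first.

    boxes  : sequence of (x1, y1, x2, y2) with x2 > x1 and y2 > y1
    scores : one confidence value per box (assumed to be available)
    """
    boxes = np.asarray(boxes, dtype=float)
    scores = np.asarray(scores, dtype=float)
    if len(boxes) == 0:
        return []

    x1, y1, x2, y2 = boxes.T
    areas = (x2 - x1) * (y2 - y1)
    order = np.argsort(scores)[::-1]  # highest score first
    keep = []

    while order.size > 0:
        best = order[0]
        keep.append(int(best))
        rest = order[1:]

        # Width and height of the overlap between `best` and each other box.
        inter_w = np.maximum(
            0.0, np.minimum(x2[best], x2[rest]) - np.maximum(x1[best], x1[rest])
        )
        inter_h = np.maximum(
            0.0, np.minimum(y2[best], y2[rest]) - np.maximum(y1[best], y1[rest])
        )
        inter = inter_w * inter_h
        iou = inter / (areas[best] + areas[rest] - inter)

        # Drop every remaining box that overlaps `best` too much.
        order = rest[iou <= iou_threshold]

    return keep
```

With one detection per braille dot surviving, a circle could then be drawn at the center of each kept box, for example with `cv2.circle`.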

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Three things were due this week: i) the collaborative initial hardware boot, ii) assessing the tradeoffs of various image pre-processing and segmentation methods and starting to test the effectiveness of each pre-processing algorithm, and iii) starting to write pre-processing code from scratch. All three goals were met, and my progress is currently on schedule.

- What deliverables do you hope to complete in the next week?

By the end of next week I plan to accomplish the following: 1) start work on vertical and horizontal segmentation of the current pre-processed images to obtain cropped individual JPEGs of braille characters, and 2) research non-max suppression methods using the imutils library to draw colored circles on top of pre-processed images for a potential boost in recognition accuracy.