- What did you personally accomplish this week on the project? Give files or photos that demonstrate your progress. Prove to the reader that you put sufficient effort into the project over the course of the week (12+ hours).

First half of this week was spent on the preparation and rehearsal of the Design Presentation, as I was the presenter of team B1-Awareables.

Latter half of this week was primarily invested in further research on segmentation and partial implementation of horizontal segmentation. After I obtain the region of image (ROI) after the initial pre-processing, the next step to proceed is the vertical and horizontal segmentation, a process of dividing the original ROI to vertical and horizontal segments of crops, in order to process into final cropped images of individual braille characters.

After some research, I found out that the approach for the horizontal and vertical segmentations should be a little bit different. More specifically, for horizontal segmentation, there are two different approach options: 1) Using hough transform lines, and 2) manually grouping individual rows by label, assigning labels to each row based on whether they are following each other or not (i.e. diff >1), and then find the mean row value associated with each label to color each row lines for horizontal segmentation. For vertical segmentation in specific, since the space between the dots depends on the letters following each other, the similar “2)” approach from horizontal segmentation should be somewhat adapted using Hough transformation, which will be further explored in the coming weeks.

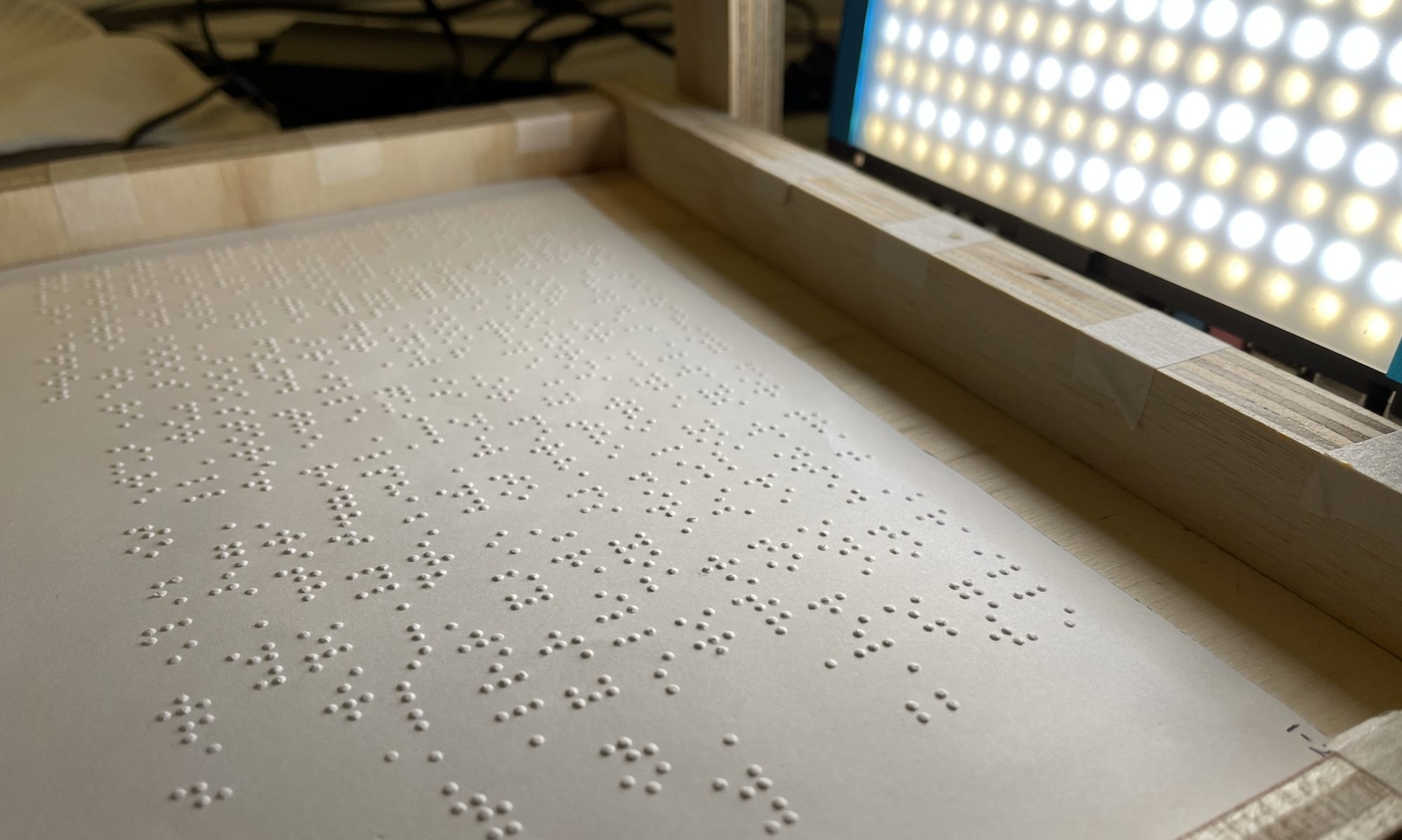

For the horizontal segmentation, this is the current result:

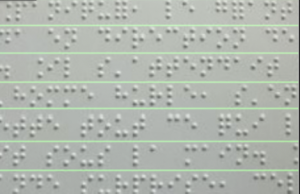

Next steps would be to crop individual rows of ROI and save them into individual segmented rows which will be further vertically segmented using Hough Transforms:

EX)

![]() +

+

![]() +

+

(further segmented rows of ROI)….

- Is your progress on schedule or behind? If you are behind, what actions will be taken to catch up to the project schedule?

Things that were due this week were i) start working on vertical & horizontal segmentation of the currently pre-processed images to attain cropped individual jpegs of braille alphabets, and 2) research on non-max suppression methods. This week I made some progress with horizontal segmentation and studied in depth how non-max suppression would be applied to our final filter. All goals were met and my progress is currently on schedule.

- What deliverables do you hope to complete in the next week?

By the end of next week I plan on accomplishing the following: 1) Keep working on vertical & horizontal segmentation of the currently pre-processed images to attain cropped individual jpgs of braille alphabets, and 2) Further research and application of non-max suppression filters.