This week I created a branch off the GitHub repository Jason set up for code management and began developing the basic signals processing code. I spent a fair amount of time this week considering how to store the historical data that may need to be kept for filtering, and I settled on a linked list because it makes stepping through the data in time order easy. For our purposes a list also works better than a double array, since we won't have to rewrite the data to shift its relative time position. The list is doubly linked to allow O(1) removal of old data, as I believe time will be more of a concern for us than storage. The list and its helper functions have been implemented but not yet robustly tested, as I am still refamiliarizing myself with C. I have also written the code to calculate multiple target distances from the sensor data; this portion is based on the code the manufacturer provided for that task. Before class on Monday I plan to have a version of the speed-filtering code with some adjustable constants (which speeds to filter out at which distances, whether to show objects with negative velocity when they are very close, and whether to allow minor negative velocities to account for error). I also plan to get my teammates' opinions on how to set these constants, which I will use as benchmarks for whether or not to display data.
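A minimal sketch of the kind of doubly linked history list described above, assuming each node holds one distance reading and a millisecond timestamp. The type, field, and function names (`sample_history`, `history_push`, `history_expire`, etc.) are hypothetical and are not the ones in the team's branch. New samples go in at the head and the oldest come off the tail; keeping a tail pointer plus a `prev` link is what makes that removal O(1).

```c
#include <stddef.h>
#include <stdlib.h>

/* One stored reading. Field names and units are illustrative assumptions. */
typedef struct sample_node {
    float distance_m;
    unsigned long timestamp_ms;
    struct sample_node *prev; /* toward newer samples */
    struct sample_node *next; /* toward older samples */
} sample_node;

typedef struct {
    sample_node *head; /* newest sample */
    sample_node *tail; /* oldest sample */
    size_t length;
} sample_history;

/* Add a reading at the head in O(1). Returns 0 on success, -1 if malloc fails. */
int history_push(sample_history *h, float distance_m, unsigned long timestamp_ms)
{
    sample_node *n = malloc(sizeof *n);
    if (n == NULL) return -1;
    n->distance_m = distance_m;
    n->timestamp_ms = timestamp_ms;
    n->prev = NULL;
    n->next = h->head;
    if (h->head != NULL) h->head->prev = n;
    else h->tail = n;          /* first node is both newest and oldest */
    h->head = n;
    h->length++;
    return 0;
}

/* Remove the oldest reading in O(1): the tail's prev link avoids walking the list. */
void history_pop_oldest(sample_history *h)
{
    sample_node *old = h->tail;
    if (old == NULL) return;
    h->tail = old->prev;
    if (h->tail != NULL) h->tail->next = NULL;
    else h->head = NULL;       /* list is now empty */
    free(old);
    h->length--;
}

/* Drop every reading older than max_age_ms relative to now_ms. */
void history_expire(sample_history *h, unsigned long now_ms, unsigned long max_age_ms)
{
    while (h->tail != NULL &&
           now_ms >= h->tail->timestamp_ms &&   /* guard against unsigned wraparound */
           now_ms - h->tail->timestamp_ms > max_age_ms) {
        history_pop_oldest(h);
    }
}

/* Free all remaining nodes. */
void history_free(sample_history *h)
{
    while (h->tail != NULL) history_pop_oldest(h);
}
```

Usage: start from `sample_history h = {NULL, NULL, 0};`, call `history_push` for each new reading and `history_expire` once per sensor frame so only the window a filter needs is kept. Walking from `head` along `next` visits samples newest to oldest.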

Team Status Update 3 OCT 2021

The main focus of our team this week was making final selections in preparation for our design review. Much of this focus was on our sensor. At the beginning of the week, we were looking into transitioning from a rotating LIDAR sensor to an array of stationary LIDAR and ultrasonic sensors, but after a thorough review of this idea and the introduction of microwave sensors as an option, we decided to move to the newer microwave technology. The sensors we selected give us a 20 m range without the sensitivity to light that concerned us with the LIDAR sensors. The sensors have a horizontal 3 dB beam width of 78 degrees, meaning a small array of them can easily cover our field of view. The sensors can also track multiple objects, though they only report distance, with no information about look direction.
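The claim that a small array covers the field of view follows from simple geometry. As a rough check, treating the 78° 3 dB beam width as if it were a hard edge (it is not; sensitivity falls off gradually past it), the lateral half-width one sensor covers at range $R$ is:

$$
w(R) = R \tan\left(\frac{\theta_{3\,\mathrm{dB}}}{2}\right) = R \tan(39^\circ) \approx 0.81\,R
$$

At the quoted 20 m range this is about 16.2 m to either side of the sensor's boresight, so coverage is generous far from the bike. The tighter constraint is close to the bike, where $w(R)$ shrinks toward zero, which is where angling two sensors apart matters most.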

We also looked at the lights to be used for signaling and the power they would draw. Though we had found some promising single-point LEDs, they had low efficiency, so we have transitioned back to using an LED strip on both the front and the back. Our goal of a full day of use, combined with the large draws of both these LEDs and the sensor, will require a rather large battery. We have also discussed using an Arduino in conjunction with the STM32F4 to run the lights, and we have tentatively decided that the signals processing interface and code will be written in C.
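The battery reasoning above can be written out as a rough energy budget. This is a generic sketch with no measured values: each $P$ term is a load's average power, $t_{\text{use}}$ is the desired run time (a full day of riding), and $\eta < 1$ is an assumed factor lumping converter efficiency and usable depth of discharge.

$$
E_{\text{battery}} \ge \frac{\left(P_{\text{LED}} + P_{\text{sensor}} + P_{\text{MCU}}\right)\, t_{\text{use}}}{\eta}
$$

If the Arduino is added alongside the STM32F4, its draw joins the sum. If the LED strips are pulsed for signaling rather than held on, their average power is what sets the energy needed, while their peak power still sets the current rating of the battery and wiring.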

Design review slides to be amended later or posted separately.

Albany’s Status Report 2 OCT 2021

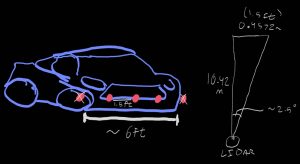

For most of the week I have been looking into sensors with my team to make final determinations about what should be used for our blind spot sensor. At the time of my last status report we were looking into a mix of long-range single-point LIDAR sensors and ultrasonic sensors, so I started the week by coming up with a possible arrangement for an array that used two sensors of each kind. This arrangement can be seen in document V2: V2SensorPlacementLIDARULTRA

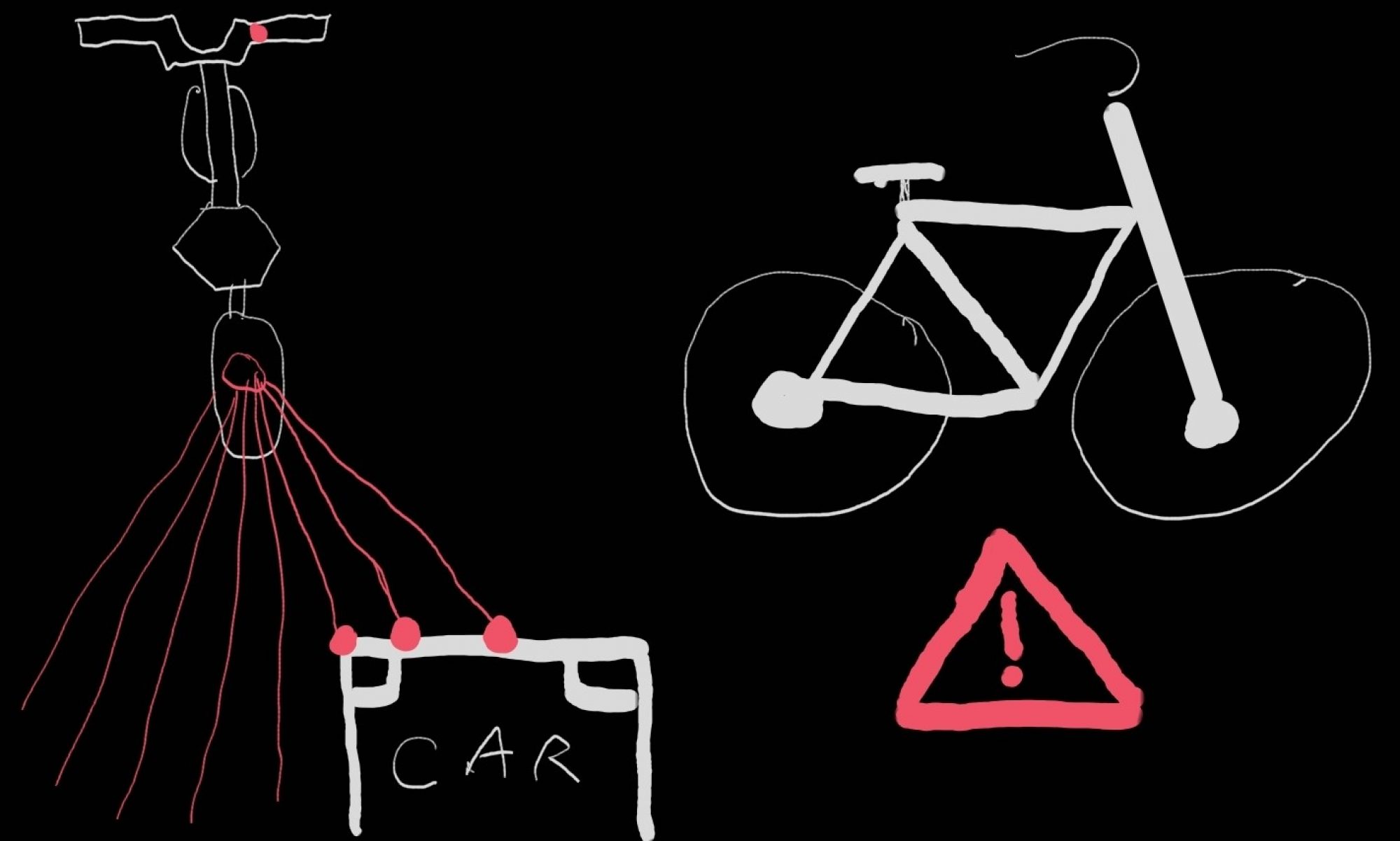

However, following Jason's suggestion of a 24 GHz microwave sensor, some research into that option, and a discussion of the pitfalls and merits of all the solutions we had considered before it, we decided to make our primary solution an array of at least two microwave sensors, and we filled out the order form during class on Wednesday. I have since been familiarizing myself with how that sensor presents distance information. It appears that the sensor will let us determine the distances of multiple objects, but not their individual look directions. With two sensors, this means that although we can cover our entire FOV, since the sensors have a 3 dB beam width of 78 degrees, the most information we can give the user is which sensor detected an object. This still lets us tell the user whether something is behind them to the right or to the left. Further, by looking at the area where the two sensors' beams overlap, we could add a third sector for objects seen by both sensors. Some of my initial thoughts on the manufacturer's example code for multi-object detection, and a basic layout of the signals processing code to handle this information, can be seen in document V3: V3ManCodeCustomCodeStructureMicrowave
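The left/right/both sector idea above can be sketched in C. Because each sensor reports only distances, this sketch assumes, as a hypothetical heuristic, that a left-sensor reading and a right-sensor reading within `MATCH_TOLERANCE_M` of each other belong to the same object in the overlap region. The names `classify_sectors`, `detection_t`, `MATCH_TOLERANCE_M`, and `MAX_TARGETS` are illustrative and are not taken from the manufacturer's code.

```c
#include <stddef.h>

typedef enum { SECTOR_LEFT, SECTOR_RIGHT, SECTOR_BOTH } sector_t;

/* Hypothetical tuning constants. */
#define MATCH_TOLERANCE_M 0.5f /* readings this close are treated as one object */
#define MAX_TARGETS       16   /* max right-sensor readings considered per frame */

typedef struct {
    float distance_m;
    sector_t sector;
} detection_t;

/* Merge the two sensors' distance lists into sector-tagged detections.
   out must have room for n_left + n_right entries. Returns the count written.
   Right-sensor readings beyond MAX_TARGETS are ignored. */
size_t classify_sectors(const float *left, size_t n_left,
                        const float *right, size_t n_right,
                        detection_t *out)
{
    int right_used[MAX_TARGETS] = {0};
    size_t count = 0;
    if (n_right > MAX_TARGETS) n_right = MAX_TARGETS;

    for (size_t i = 0; i < n_left; i++) {
        size_t best = n_right;              /* n_right means "no match found" */
        float best_diff = MATCH_TOLERANCE_M;
        for (size_t j = 0; j < n_right; j++) {
            float diff = left[i] - right[j];
            if (diff < 0.0f) diff = -diff;  /* absolute difference */
            if (!right_used[j] && diff <= best_diff) {
                best = j;                   /* closest unused right reading so far */
                best_diff = diff;
            }
        }
        if (best < n_right) {
            right_used[best] = 1;           /* seen by both sensors: overlap sector */
            out[count].distance_m = 0.5f * (left[i] + right[best]);
            out[count].sector = SECTOR_BOTH;
        } else {
            out[count].distance_m = left[i];
            out[count].sector = SECTOR_LEFT;
        }
        count++;
    }

    /* Any right reading not paired with a left reading is right-only. */
    for (size_t j = 0; j < n_right; j++) {
        if (!right_used[j]) {
            out[count].distance_m = right[j];
            out[count].sector = SECTOR_RIGHT;
            count++;
        }
    }
    return count;
}
```

One weakness of distance-only matching: two different objects at similar distances on opposite sides would be reported as a single object in the overlap sector. Baseline data from the real sensors would show how often that happens.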

My teammates and I are planning to get together later today to edit and create our design presentation slides. I also hope to decide with Jason, who is in charge of the device drivers, what language we will use, so that I can write most of the signals processing code this week. The possible exception is the code to filter out any “noise” from the ground, since some baseline data from the sensors will be useful before adding that section.

Albany’s Status Report 25 SEP 2021

Since I was presenting our project proposal, I spent the part of this week before Wednesday predominantly practicing my presentation and delivery to make sure I wouldn’t forget any pertinent details and would stay on schedule when speaking.

Following the presentation, I started working on some of the basic signals processing considerations for the sensor, trying to work out basic cutoffs for which sensed objects to show the biker and to anticipate what issues might arise. One issue I expect to be especially problematic is filtering out stationary objects.
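The display cutoffs described here, together with the adjustable speed-filter constants planned in the 3 OCT report, could be sketched as a small C filter. Every threshold below is a hypothetical placeholder for the team to tune; only the 20 m limit comes from the selected sensor's quoted range. `closing_speed` is positive when an object is getting closer.

```c
#include <stdbool.h>

/* Hypothetical placeholder thresholds; tune from real sensor data. */
#define MAX_DISPLAY_RANGE_M     20.0f /* sensor's quoted range */
#define NEAR_ZONE_M              3.0f /* "very close" band */
#define NEAR_NEG_TOLERANCE_MPS   0.5f /* receding speed still shown in near zone (measurement error) */
#define MIN_CLOSING_SPEED_MPS    1.0f /* minimum approach speed to warn about farther objects */

/* Closing speed from two distance samples dt_s seconds apart (dt_s must be > 0).
   Positive means the object is approaching. */
float closing_speed(float prev_distance_m, float curr_distance_m, float dt_s)
{
    return (prev_distance_m - curr_distance_m) / dt_s;
}

/* Decide whether a tracked object should be shown to the rider. */
bool should_display(float distance_m, float closing_speed_mps)
{
    if (distance_m > MAX_DISPLAY_RANGE_M)
        return false;                                  /* beyond trusted range */
    if (distance_m <= NEAR_ZONE_M)
        return closing_speed_mps >= -NEAR_NEG_TOLERANCE_MPS; /* allow minor negative velocity up close */
    return closing_speed_mps >= MIN_CLOSING_SPEED_MPS; /* farther out: only clearly approaching objects */
}
```

In the simple case of a rear-facing sensor on a bike moving forward, a stationary object behind the rider recedes, so its closing speed is negative and this filter hides it outside the near zone. That alone will not handle everything (ground returns, for example), so the logic is a starting point rather than a finished design.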

Further, following some comments from the professors and staff and a short back-and-forth, the team is looking into sensor options other than the RPLIDAR, and we are getting together later today to discuss some of the suggested options. One possible solution I started looking at, assuming we have the budget for it, is a mix of longer-range LIDAR sensors to first detect objects in certain common look directions, plus a set of ultrasonic sensors to give us a more complete FOV if necessary and to keep tracking objects when the warning lights on the tail mount might interfere with the LIDAR.

Some of my thought process can be seen here: SignalsProcessingSensorConsiderations25SEP

Albany’s Status Report 18 SEP 2021

This week I worked primarily on creating the slides for the project proposal and performing some hand calculations for new requirements and component selection. For the slides, I focused on integrating our requirements throughout the rest of the presentation and fleshing out the section on our choice to use LIDAR and the needs the sensor in particular must meet. This included some calculations of the necessary range and angular resolution. Earlier in the week I also researched and compared two rotating LIDARs, from which the Slamtec RPLIDAR A1M8 was chosen for its better documentation and support as well as its eye-safety specifications. I also made some website modifications, adding our current logo and a unique header picture.
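The angular-resolution side of those calculations can be sketched with the small-angle approximation. If a rotating LIDAR samples every $\Delta\theta$ radians, adjacent samples land about $R\,\Delta\theta$ apart at range $R$, so guaranteeing at least $n$ samples on a target of width $w$ requires:

$$
n\,R\,\Delta\theta \le w \quad\Longrightarrow\quad \Delta\theta \le \frac{w}{n\,R}
$$

For example, with the hypothetical values $w = 0.5$ m, $R = 10$ m, and $n = 1$, this gives $\Delta\theta \le 0.05$ rad $\approx 2.9^\circ$. These numbers are illustrative only and are not the values used in the proposal.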