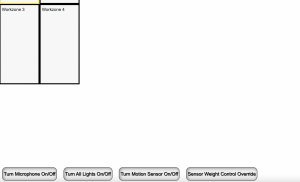

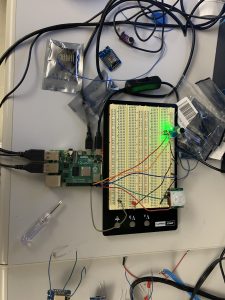

This week I worked on testing, refactored our code to allow for faster testing and verification, and drafted a user survey to guide some of our design decisions. In addition, I soldered and assembled another “base station.”

(User Survey: https://docs.google.com/forms/d/1YM4OFE08eWwsJJnDHzXr_HDfEiTtsAuOjxWQ_ADB7e0/edit)

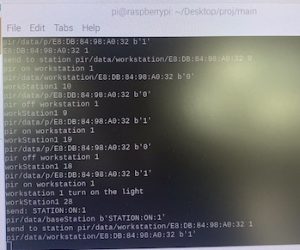

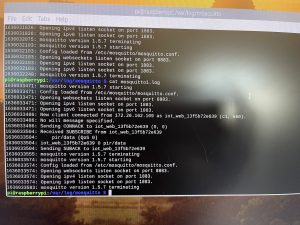

The testing I completed covered our first requirement: the lights must turn on within 2 seconds of a person entering a section. This test verifies that our end-to-end latency, from signal detection through computation to signaling the lights, is fast enough for our users. To capture times, I recorded myself on video and, for each of five trials, measured the time from my first movement to the lights turning on. The results are below. The average time was 1.028 s, and every trial came in under 2 s.

| Trial | Time (s) |
|-------|----------|
| 1     | 1.20     |
| 2     | 0.81     |
| 3     | 1.11     |
| 4     | 0.97     |
| 5     | 1.05     |
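The average and the pass/fail check for the 2-second requirement can be reproduced directly from the trial times with a few lines of Python. This is just a quick sanity check on the numbers in the table; the variable names are illustrative.

```python
from statistics import mean

REQUIREMENT_S = 2.0  # lights must turn on within 2 s of a person entering

# Measured times from the five video trials, in seconds.
trial_times_s = [1.2, 0.81, 1.11, 0.97, 1.05]

average_s = mean(trial_times_s)
worst_s = max(trial_times_s)
all_pass = all(t < REQUIREMENT_S for t in trial_times_s)

# Prints: average: 1.028 s, worst: 1.20 s, all under 2.0 s: True
print(f"average: {average_s:.3f} s, worst: {worst_s:.2f} s, all under {REQUIREMENT_S} s: {all_pass}")
```

The worst trial (1.20 s) still leaves about 0.8 s of margin under the requirement.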

To assist with further testing, I refactored our code and began developing a script that tunes our weights. The script reads sensor values that were captured and written to a test file, each paired with the desired light behavior. It then uses a gradient-descent-like algorithm to adjust the weights and checks them against every defined test case. The weights are modified slightly on each pass until, ideally, the lights behave as desired for all test cases.
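The weight-tuning loop described above could look roughly like the minimal sketch below. It rests on several hypothetical assumptions: the lights turn on when a weighted sum of sensor readings plus a bias is positive, and each test-file row holds the sensor readings followed by the desired state (1 = on, 0 = off). It also uses a perceptron-style update, a simple gradient-descent-like rule, which may differ from the update our script actually uses. The names `load_cases`, `lights_on`, and `tune_weights` are illustrative, not our real code.

```python
import csv
from typing import Iterable, List, Sequence, Tuple

# Hypothetical test case: (sensor readings, desired light state).
TestCase = Tuple[Sequence[float], bool]


def load_cases(lines: Iterable[str]) -> List[TestCase]:
    """Parse rows like '0.9,0.1,1': sensor readings, then desired state (1=on, 0=off).

    The row format is an assumption about the test file.
    """
    cases: List[TestCase] = []
    for row in csv.reader(lines):
        if not row:
            continue  # skip blank lines
        *readings, desired = row
        cases.append(([float(r) for r in readings], desired.strip() == "1"))
    return cases


def lights_on(weights: List[float], bias: float, readings: Sequence[float]) -> bool:
    """Hypothetical model: lights turn on when the weighted sum plus bias is positive."""
    score = bias + sum(w * x for w, x in zip(weights, readings))
    return score > 0


def tune_weights(
    cases: List[TestCase], lr: float = 0.1, max_epochs: int = 1000
) -> Tuple[List[float], float, bool]:
    """Nudge the weights after each failing case until every test case passes.

    Returns (weights, bias, converged). converged is False if max_epochs
    passes still left at least one failing case.
    """
    n_features = len(cases[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for readings, desired in cases:
            if lights_on(weights, bias, readings) != desired:
                mistakes += 1
                # Push the score up if the light should have been on, down if off.
                direction = 1.0 if desired else -1.0
                weights = [w + lr * direction * x for w, x in zip(weights, readings)]
                bias += lr * direction
        if mistakes == 0:
            return weights, bias, True
    return weights, bias, False


if __name__ == "__main__":
    # Made-up readings from two sensors, for illustration only.
    example_rows = ["0.9,0.1,1", "0.1,0.8,1", "0.05,0.1,0", "0.1,0.0,0"]
    w, b, ok = tune_weights(load_cases(example_rows))
    print(w, b, ok)
```

One design note: this kind of update is only guaranteed to reach a state where every case passes if some choice of weights can actually separate the "on" cases from the "off" cases. Otherwise the loop gives up after `max_epochs`, which is why the result is reported as "hopefully" correct for all test cases.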

This coming week I will continue testing, finalize the survey and send it out to collect responses, and build another base station.