Product Pitch

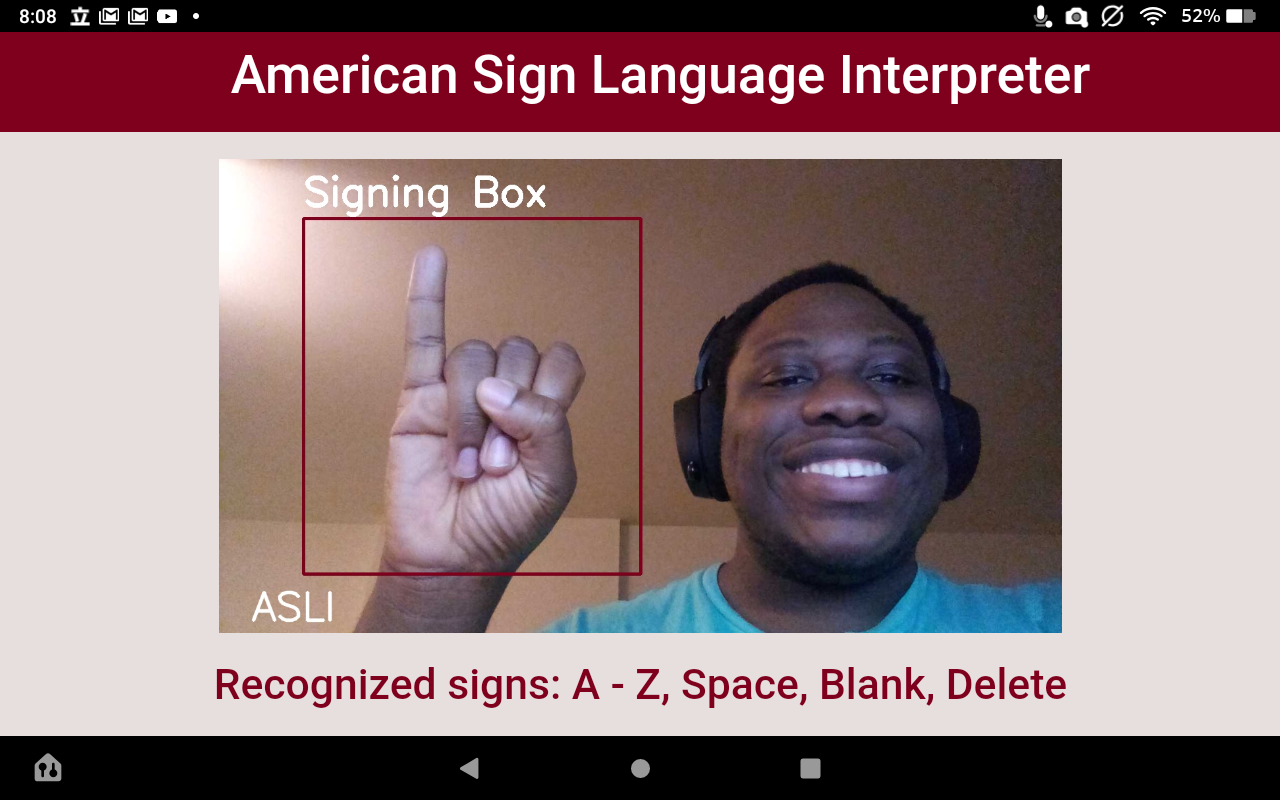

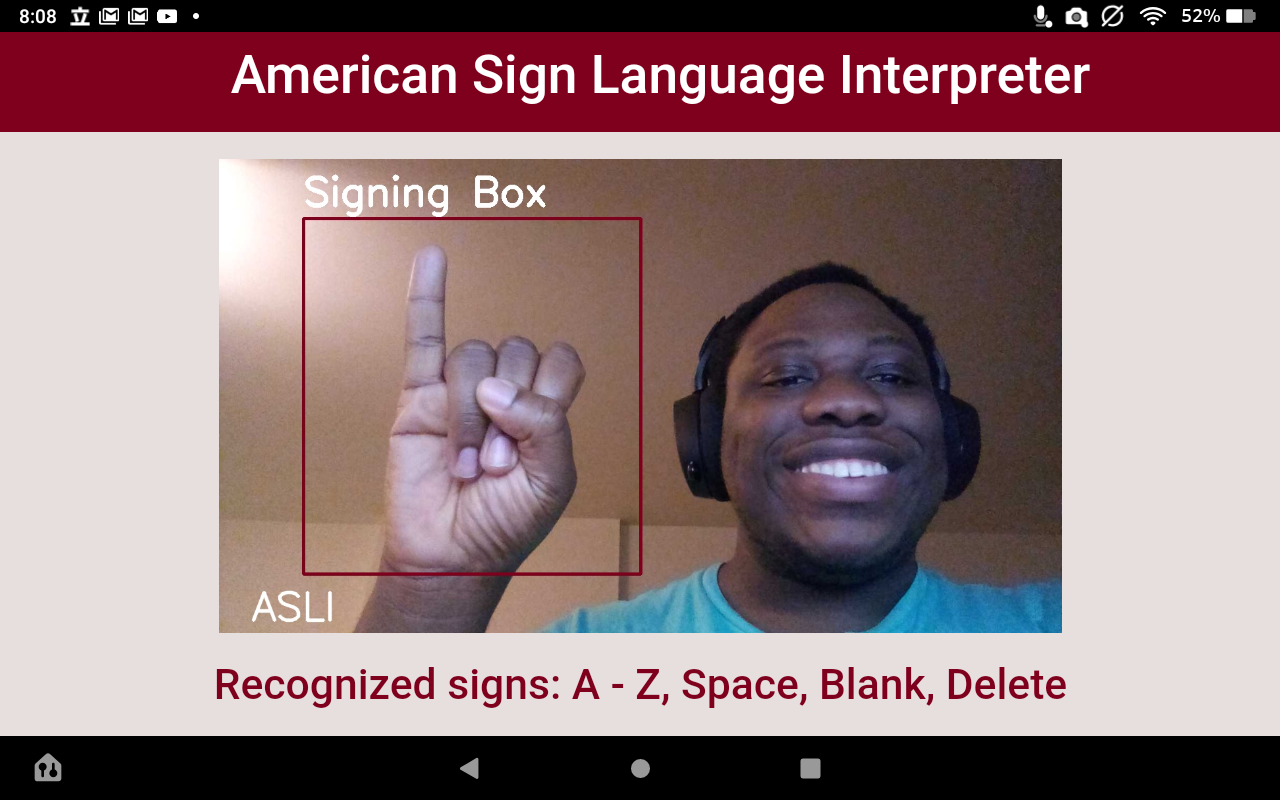

Our project aimed to address a need we recognized in assistive technology for Deaf persons. Many people with hearing disabilities communicate through sign language, yet only a small percentage of hearing persons understand it. So we endeavored to create a system that visually detects American Sign Language hand signs and translates them in real time. Using computer vision and machine learning, we aimed to develop this system to improve the everyday lives of Deaf persons.

MEET THE TEAM

Young’s Status Update for 12/6/20

This week I set up AWS and got it running code for the first time. I struggled a lot initially with memory issues and with figuring out how to set up instances, but I was very satisfied with the result. I tested the different models I had prepared on the Kaggle ASL dataset with different parameters and found that the best model returned a surprising 95% validation accuracy. Fine-tuning only the end layers after freezing the transfer-learned model resulted in some accuracy problems, but since most of the models did not take too long to run, I decided to train all of the model parameters overnight and found much better results. I also found that because the dataset was so large (100,000 total images, from which I took a subset), training for even a few epochs returned very strong results. Having saved the model weights and model JSON files, I just need to export them into the web app and run the classifier there. For the first time, there’s light at the end of the tunnel.

Team Status Update for 12/6/2020

This week we came to terms with the scope of our original project being out of reach and looked for ways to descope it so that we at least have a working prototype. We have descoped to the letters of the alphabet in sign language and are working on a simplified version of our hand tracking that uses a bounding box within whose bounds the user must sign. Though this is disappointing, we are working hard to make sure that we have something to show for the work we put in.

Update: There is now progress on the classifier, which is an Inception-v3 followed by a Flatten layer, a Dropout(0.5) layer, and a Dense layer with NUM_CLASS outputs, achieving 95% validation accuracy. We still need to see how well the classifier performs on images captured in real time and figure out how to sample frames to classify, but the bulk of the classifier phase has been completed. This is a breath of fresh air, and should the classifier perform as well in real time as it does on the validation set, we’ll have a project to be proud of.
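The frame-sampling question is still open, so the following is only a minimal sketch of one possible approach, not what we have implemented: classify every Nth frame and report the most common label over the last few predictions. The names `classify_stream`, `sample_every`, and `window` are hypothetical, and `classify` stands in for whatever function wraps the trained model.

```python
from collections import Counter, deque
from typing import Callable, Iterable, Iterator, TypeVar

Frame = TypeVar("Frame")


def classify_stream(
    frames: Iterable[Frame],
    classify: Callable[[Frame], str],
    sample_every: int = 5,
    window: int = 3,
) -> Iterator[str]:
    """Yield a smoothed label each time a sampled frame is classified.

    Only every `sample_every`-th frame is passed to `classify` (assumed to
    wrap the trained model). The label yielded is the most common one among
    the last `window` predictions.
    """
    recent = deque(maxlen=window)
    for index, frame in enumerate(frames):
        if index % sample_every != 0:
            continue  # skip frames between samples to keep up with the stream
        recent.append(classify(frame))
        # most_common(1) returns [(label, count)]; ties go to the older label
        yield Counter(recent).most_common(1)[0][0]
```

Voting over a short window trades a little latency for stability, since a single misclassified frame no longer changes the displayed letter.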

Aaron’s Status Update for 12/6/2020

This week I have been working on getting a working prototype of our project up. I have updated the UI and integrated background subtraction and face removal into the video stream from the tablet. Mostly I have been getting things ready for the integration of the neural network. I have also added a form, which I am still working on, that tells the app what sign we are trying to interpret as part of the descoped version of the project. At this point we are just trying to get something that works and is presentable.

Malcolm’s Status Report 12/6/20

This week I made a lot of progress on background subtraction, bounding box experimentation, and general debugging. Much of the background subtraction progress was in the implementation. Background subtraction and image thresholding gave different results. However, since background subtraction captures just the edges of a profile, the resulting image needs less space to represent it, so this method gives our NN a simpler input to work with. We have also now decided to use a dedicated space for the “bounding box” on our hand, since spatial tracking of the hand proved near impossible. I made attempts at it using structural similarity and color profile-based searching in the image, but the results were less than ideal. Now I am working towards fully implementing our pieces of the project and debugging.
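To illustrate the idea of a dedicated signing region combined with background subtraction, here is a minimal NumPy sketch. It uses plain frame differencing against a stored background image, which is a simplification and not necessarily the method in our app. The names `SIGN_BOX`, `crop_box`, and `foreground_mask`, the box coordinates, and the threshold value are all assumptions made for the example, and the frames are assumed to be grayscale.

```python
import numpy as np

# Hypothetical fixed region (top, left, height, width) where the user signs.
SIGN_BOX = (2, 2, 4, 4)


def crop_box(frame: np.ndarray, box=SIGN_BOX) -> np.ndarray:
    """Return only the part of a grayscale frame inside the fixed box."""
    top, left, height, width = box
    return frame[top:top + height, left:left + width]


def foreground_mask(frame: np.ndarray, background: np.ndarray,
                    threshold: int = 30) -> np.ndarray:
    """Mark pixels that differ from the background by more than `threshold`.

    Returns a uint8 mask with 255 for foreground and 0 for background.
    """
    # Cast to a signed type so the subtraction cannot wrap around in uint8.
    diff = np.abs(frame.astype(np.int16) - background.astype(np.int16))
    return (diff > threshold).astype(np.uint8) * 255


# Usage: an empty background and a frame with a bright 2x2 "hand" patch.
background = np.zeros((8, 8), dtype=np.uint8)
frame = background.copy()
frame[3:5, 3:5] = 200
mask = foreground_mask(crop_box(frame), crop_box(background))
```

Cropping before differencing keeps the work proportional to the box size rather than the full frame, which is the main appeal of a fixed region over tracking the hand.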

Malcolm’s Status Report 11/28/20

This week was not a very productive week, as I had many assignments due, an interview, and it was Thanksgiving. However, I was still able to get work done on the Amazon database. I made progress on moving more of our testing and training data to the cloud, as well as on developing architectures for the neural network. We need to start fully implementing it soon, so we will meet as a group and discuss the details.

Team Status Update for 11/21/2020

This week has been a lot of work trying to figure out how to combine the individual components of the project that we are each working on. We think we have figured out a way to integrate the neural network into the web app, so on that front it is just a matter of finishing the training on our final dataset, testing, and then integration. The same goes for AWS integration. Hand tracking is also a crucial component of the project that is still unimplemented, but it is being researched and worked on now. Once we figure out the next steps for finishing our components and combining them, I think we will be in a good place, but for now it’s going to be a lot of work to mitigate risks and finish the project.

Aaron’s Status Update for 11/21/2020

This week we met up to try to figure out how to consolidate our individual components for asli. I did some research into integrating Young’s work on the neural net we are training for gesture recognition into our web app. I think we were able to find a way to do so without too much hassle, so now it’s just a matter of getting it up and running so we can integrate it. I also created two new branches. One is for developing hand tracking in the web app, which is what I’m currently working on figuring out how to do. The other is for a different method of capturing the tablet webcam video stream with lower latency. So the next steps for me are to get both working, with priority on hand tracking, as that feature is part of our core functionality. I believe we are on schedule, but we are on a tight deadline.

Young’s Status Update for 11/21/20

This week our team began to integrate the web application with the image processing and classification backend. I started working on adapting the classifier to the Django app by importing the network weights from within the app. Meanwhile, I’m also working on hand detection, which should lessen the amount of work on the image processing side. Admittedly, I am starting to get a little uneasy about the amount of work left to be done. It would have been nice to have a deliverable product by now, regardless of its level of sophistication, but we still have the training and the feature extraction left to do. So I have begun to look into transfer learning, which involves taking a generic pretrained model for a similar task and supplementing it with training on a more specific and personalized dataset. The time it takes to implement this seems to be less than the training time it would save compared with learning from scratch. I am hoping this gives us a more reasonable path towards reaching our benchmark results.