Product Pitch

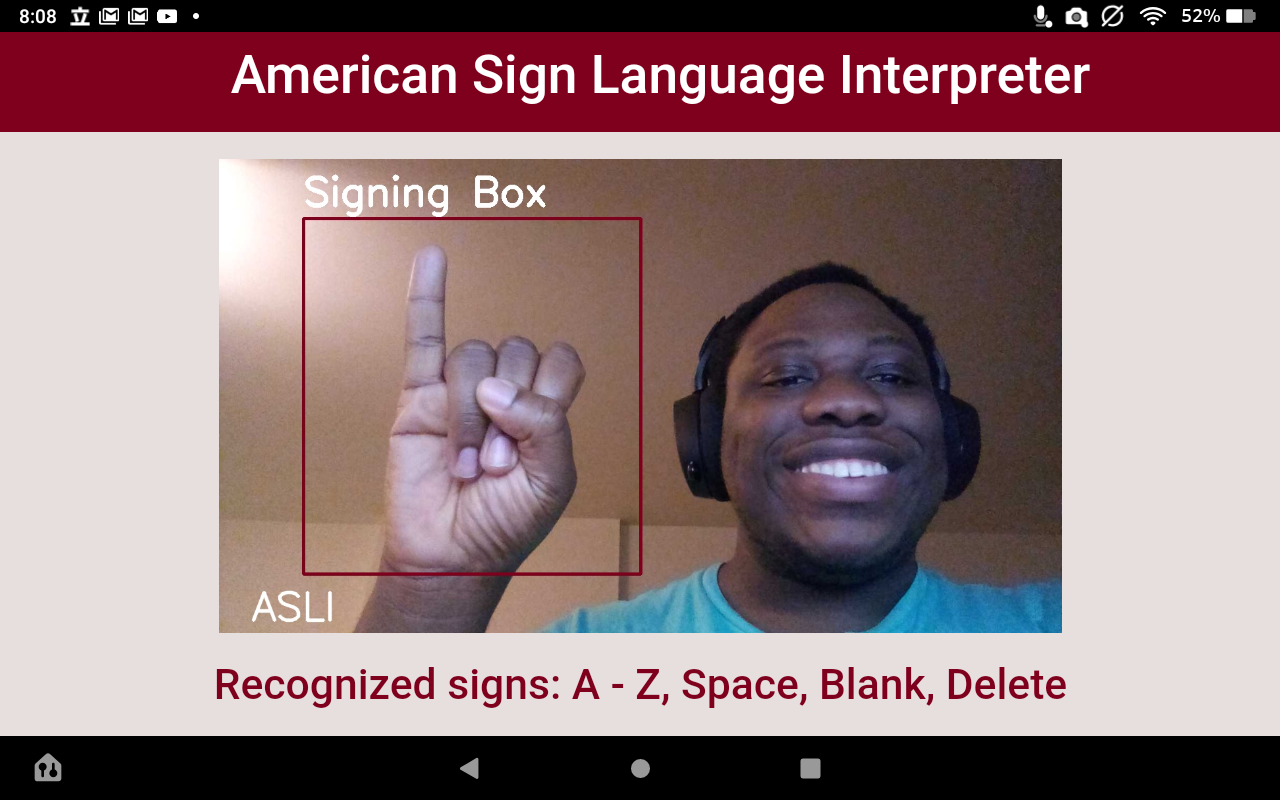

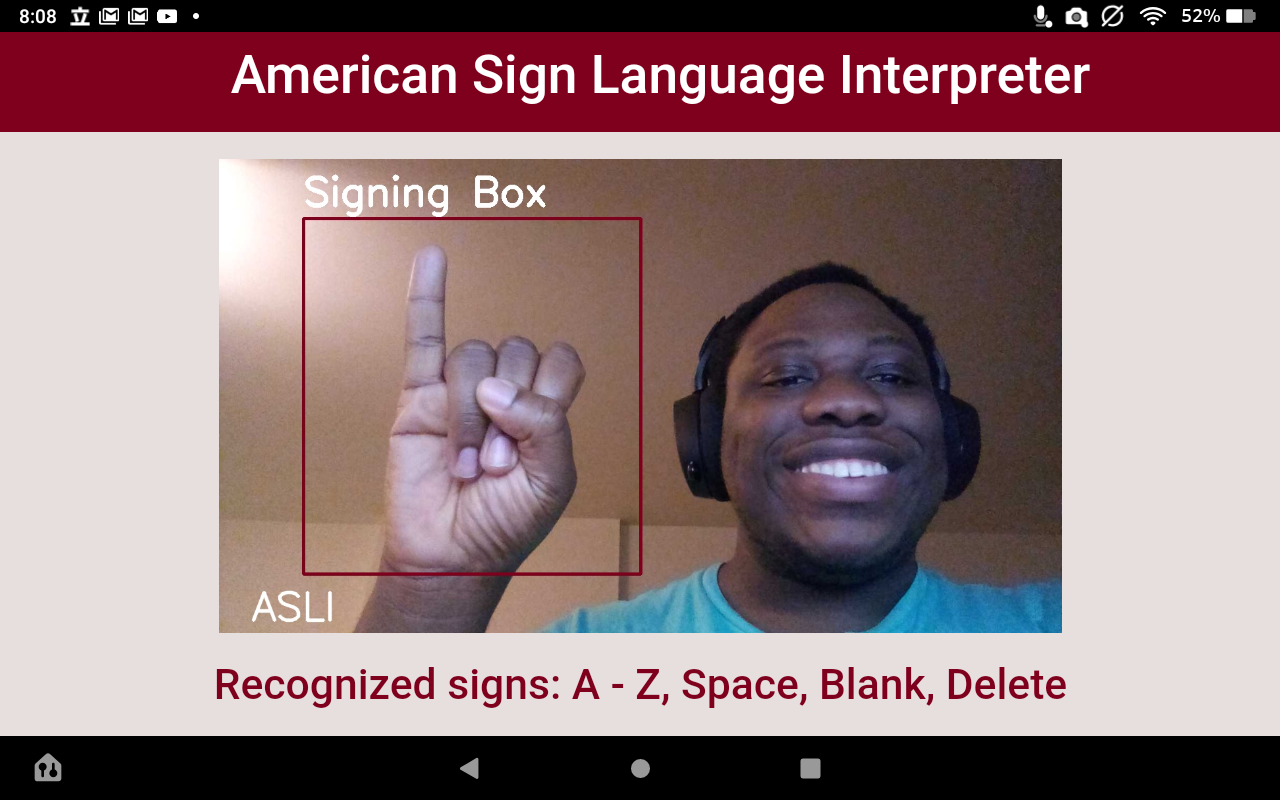

Our project aimed to tackle a need we recognized in the world of assistive technology for Deaf persons. Today, many people with hearing disabilities communicate through sign language, while only a small percentage of hearing people understand it. So we endeavored to create a system that can visually detect American Sign Language hand signs and translate them in real time. Through the use of computer vision and machine learning technology, we aimed to develop this system to improve the everyday lives of Deaf persons.

MEET THE TEAM

Team Status Update for 12/6/2020

This week we came to terms with the scope of our original project being out of reach and looked for ways to descope it so that we at least have a working prototype. We have descoped to the letters of the alphabet in sign language and are working on a simplified version of our hand tracking: a bounding box within whose bounds the user must sign. Though this is disappointing, we are working hard to make sure that we have something to show for the work we put in.
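
A minimal sketch of the fixed bounding-box idea, assuming the box is a square centered in the camera frame and the frame is stored as a list of pixel rows. The function names `centered_box` and `crop` are hypothetical and used only for illustration; the real prototype may place and size the box differently.

```python
def centered_box(frame_w, frame_h, box_size):
    """Return (left, top, right, bottom) of a square box centered in the frame."""
    # Clamp the box so it never exceeds the frame (assumed behavior).
    size = min(box_size, frame_w, frame_h)
    left = (frame_w - size) // 2
    top = (frame_h - size) // 2
    return left, top, left + size, top + size


def crop(frame, box):
    """Crop a frame stored as a list of rows to the given box."""
    left, top, right, bottom = box
    return [row[left:right] for row in frame[top:bottom]]


# Usage: a fake 6x4 (width x height) grayscale frame and a 2x2 signing region.
frame = [[x + 10 * y for x in range(6)] for y in range(4)]
box = centered_box(frame_w=6, frame_h=4, box_size=2)
patch = crop(frame, box)  # only these pixels would be passed to the classifier
```

Fixing the region up front trades flexibility for simplicity: the classifier always sees the same part of the frame, so no hand detector is needed.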

Update: There is now progress on the classifier, an Inception-v3 followed by a Flatten layer, a Dropout(0.5) layer, and a Dense layer with NUM_CLASS outputs, which achieves 95% validation accuracy. We still need to see how well the classifier performs on images captured in real time and figure out how to sample frames to classify, but the bulk of the classifier phase has been completed. This is a breath of fresh air, and should the classifier perform as well in real time as it does on the validation set, we’ll have a project to be proud of.
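
The frame-sampling question is still open, so the following is only one possible approach and not a settled design: classify every `stride`-th frame and take a majority vote over the last few predictions to smooth out single-frame errors. The names `sample_every` and `smoothed_predictions`, the `stride` and `window` values, and the stand-in classifier are all assumptions for illustration.

```python
from collections import Counter, deque


def sample_every(frames, stride):
    """Yield every `stride`-th frame, starting with the first."""
    for index, frame in enumerate(frames):
        if index % stride == 0:
            yield frame


def smoothed_predictions(frames, classify, stride=5, window=3):
    """Classify sampled frames and majority-vote over the last `window` labels."""
    recent = deque(maxlen=window)
    for frame in sample_every(frames, stride):
        recent.append(classify(frame))
        # most_common(1) returns the label with the highest count in the window.
        yield Counter(recent).most_common(1)[0][0]


# Usage with a stand-in classifier: each fake "frame" is already its own label,
# and "?" marks frames that are skipped by the stride.
frames = ["A", "?", "A", "?", "B", "?", "B", "?", "B"]
labels = list(smoothed_predictions(frames, classify=lambda f: f, stride=2, window=3))
```

A larger `stride` lowers the classification load per second, while a larger `window` makes the output steadier at the cost of reacting more slowly when the sign changes.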

Young’s Status Update for 11/21/20

This week our team began to integrate the web application with the image processing and classification backend. I started working on adapting the classifier to the Django app by importing the network weights from within the app. Meanwhile, I’m also working on hand detection, which should lessen the amount of work on the image processing side. Admittedly, I am starting to get a little uneasy about the amount of work left to be done. It would have been nice to have a deliverable product by now, regardless of its level of sophistication, but we still have the training and the feature extraction left to do. So I have begun to look into transfer learning, which involves taking a generic pretrained model for a similar task and supplementing it with training on a more specific and personalized dataset. The time it takes to implement this seems to be less than the training time it would save compared with learning from scratch. I am hoping this gives us a more reasonable path towards reaching our benchmark results.

Young’s Status Update for 11/14/20

This week I updated the neural network to be a fully learning CNN. I’ve implemented a couple of different CNNs: a mini CNN that takes in 28×28 pixel inputs and outputs a likelihood over the different possible classes, and an AlexNet that takes in larger images and has more filters and larger convolutions, with the same output dimensions. I’m having some confusing syntax bugs with the SoftMax layer at the moment, but as soon as those are fixed, I have a sample data set to test the mini CNN on and check that it can learn. As of now I’ve decided to use the same network and input dimensions for both static and dynamic images. Afterwards, I’ll begin to run the AlexNet on the ASLLVD or Boston RWTH set for static images and resume work on the feature engineering modules.
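
For reference, this is what a softmax output layer computes: it turns the raw class scores into a likelihood over the classes. The sketch below is a plain-Python illustration rather than the layer used in the network, and the example scores are made up.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    shift = max(logits)  # subtracting the max avoids overflow in exp()
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Usage: three made-up class scores; the largest score gets the largest probability.
probs = softmax([2.0, 1.0, 0.1])
predicted_class = probs.index(max(probs))
```

Subtracting the maximum score does not change the result, since the shift cancels in the ratio, but it keeps very large scores from overflowing.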

Young’s Status Update for 11/7/20

This week was unexpectedly exhausting and difficult for me, so I have not made any progress since Monday, but I will work on the project tomorrow and post updates here accordingly.

Malcolm’s Status Update for 11/07/20

This week I spent time working with AWS and getting our data ready to be used for learning in the cloud. The majority of my effort went towards understanding the APIs we would use, as well as the specific services our project needs and how to employ them correctly. Outside of this, I continued researching and preparing ideas for the neural network, since that step will need the most work at this point. We plan on using the network to train on all the data and run multiple iterations of the network at once to discover the best architecture.

Young’s Status Update for 10/31/2020

I got more work done this week on designing the neural network for general inputs of size m by n. As of now we have a neural network without convolutional layers, with two Dense layers with ReLU activations, the Adam optimizer, and multinomial cross-entropy loss. This is the base network we will use for both static and dynamic signs, although the specifics of the hyperparameters and the layers will have to differ based on the performance we observe on the two classes. I’ve implemented the skeleton of the training process using the TensorFlow graph flow model and will spend the rest of the week figuring out how to add convolutional layers and returning to the feature extraction step to begin the dynamic gesture classification process.
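
To make the structure concrete, here is a plain-Python sketch of the forward pass and loss for a network of this shape. It is not the TensorFlow implementation: the weights are made up, the Adam update step is omitted, and it assumes ReLU is applied after the first Dense layer while the second Dense layer produces the raw class scores.

```python
import math


def dense(inputs, weights, biases):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Zero out negative activations."""
    return [max(0.0, v) for v in values]


def cross_entropy(logits, true_class):
    """Multinomial cross-entropy of softmax(logits) against one true class."""
    shift = max(logits)  # log-sum-exp trick for numerical stability
    log_sum_exp = shift + math.log(sum(math.exp(z - shift) for z in logits))
    return log_sum_exp - logits[true_class]


def forward(x, layer1, layer2):
    """Dense -> ReLU -> Dense; each layer is a (weights, biases) pair."""
    hidden = relu(dense(x, *layer1))
    return dense(hidden, *layer2)


# Usage: a 2-input, 2-hidden-unit, 2-class toy network with made-up weights.
layer1 = ([[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
layer2 = ([[2.0, 0.0], [0.0, 2.0]], [0.0, 0.0])
logits = forward([1.0, -2.0], layer1, layer2)
loss = cross_entropy(logits, true_class=0)
```

During training, an optimizer such as Adam would adjust the weights and biases to reduce this loss averaged over the training examples.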

Young’s Status Update for 10/24/20

This week I went out of town for a few days without an internet connection and spent most of the rest of the week fitting in midterms and assignments, so I didn’t get much done on my end. However, I have a pretty good sense of how to design the neural network, so that will be my next task going forward. One concern I have is the tradeoff between writing computer vision algorithms such as hand detection ourselves and using existing modules and their functions. Although I would rather write most of the functionality from scratch, I’m concerned that my implementation won’t match the existing optimized module functions and will cause performance to suffer, for example by pushing the response time beyond our real-time constraints.