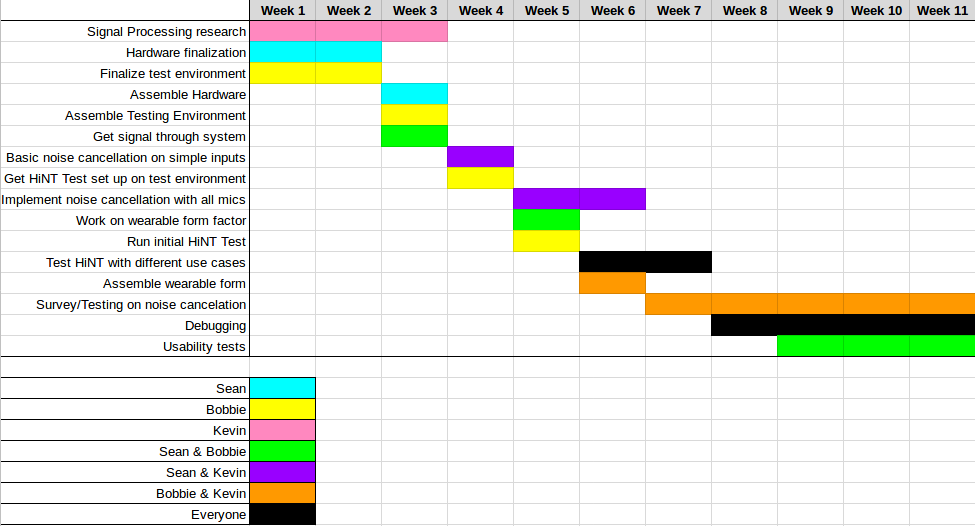

Ordering Parts (Sean & Bobbie)

This week we ordered several parts to begin building our physical test environment and directional hearing aid. For the test environment, we ordered dual speakers, a USB hub power source, and a USB 7.1 Channel Audio Adapter so that each test recording can be output to an individual speaker. We also ordered a mannequin torso on which to mount our directional hearing aid. So far, we have spent $140.65 on the test environment. (Bobbie)

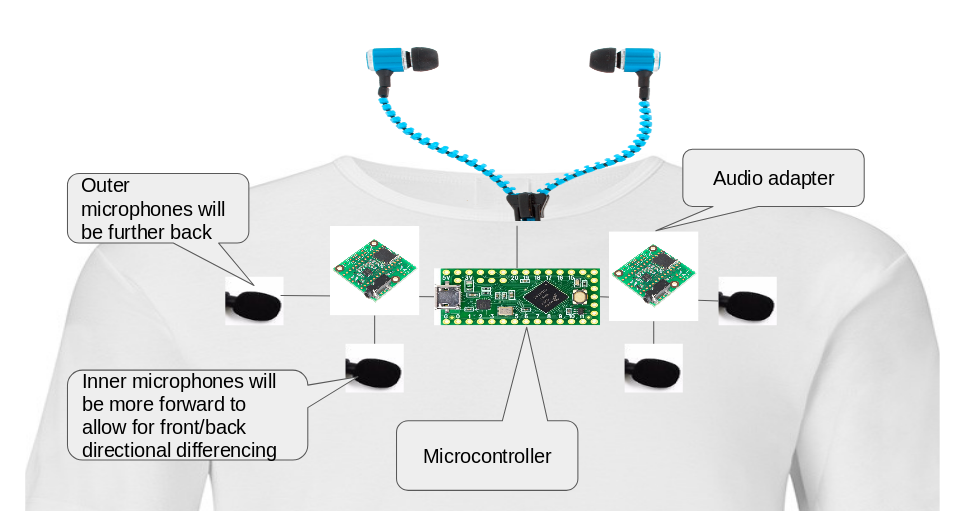

For our directional hearing aid, we purchased 8 omnidirectional microphones to mount on the test torso. We also ordered a Teensy 3.6 microcontroller, 4 Teensy Audio Boards to receive the microphone inputs, and 8 headers to attach the audio boards to the microcontroller. So far, we have spent $118.65 on hearing aid components. Note that we ordered extra parts in case our design changes or we run into issues later on. (Sean)

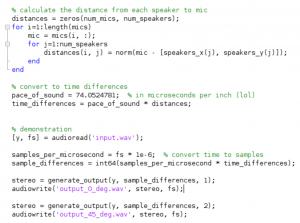

Generating Test Environment Recordings (Bobbie)

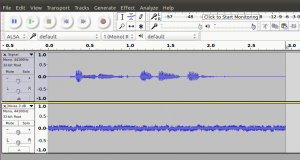

We moved forward with our test environment planning. Per discussion with Professor Sullivan in lab, we decided to build out basic matlab simulations to generate signals and sound delays given arbitrary speaker & microphone placements. Below is a screenshot of sample MATLAB code written by Bobbie Chen:
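To illustrate the kind of computation these simulations perform, here is a minimal Python sketch (not the MATLAB code from the screenshot) that computes the straight-line propagation delay from one speaker to each microphone and produces a delayed copy of a test tone for each microphone. It assumes free-field propagation at 343 m/s, rounds each delay to a whole sample, and ignores attenuation and reflections; the function names, positions, and sample rate are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def propagation_delays(speaker, mics, c=SPEED_OF_SOUND):
    """Travel time in seconds from one speaker to each microphone.

    Positions are (x, y, z) tuples in metres; straight-line (free-field) paths only.
    """
    return [math.dist(speaker, m) / c for m in mics]


def delayed_copies(signal, delays, fs):
    """Delay `signal` by each value in `delays`, rounded to whole samples.

    Leading samples are zero-padded so every copy keeps the original length.
    """
    out = []
    for d in delays:
        n = min(round(d * fs), len(signal))  # integer-sample approximation
        out.append([0.0] * n + list(signal[: len(signal) - n]))
    return out


# Hypothetical setup: speaker 1 m away on the x-axis, two mics 20 cm apart
fs = 44100
speaker = (1.0, 0.0, 0.0)
mics = [(0.1, 0.0, 0.0), (-0.1, 0.0, 0.0)]
tone = [math.sin(2 * math.pi * 1000 * t / fs) for t in range(fs // 10)]  # 100 ms, 1 kHz

delays = propagation_delays(speaker, mics)
copies = delayed_copies(tone, delays, fs)
```

Rounding to whole samples limits the timing resolution to 1/fs (about 23 µs at 44.1 kHz); a fractional-delay filter would be needed if finer inter-microphone differences matter.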

Signal Processing (Kevin)

We are continuing our research on adaptive noise cancellation (ANC) techniques to implement on the Teensy microcontroller. We have read several research papers to better understand candidate algorithms for our MATLAB simulations; one paper of note is Adaptive Noise Cancelling: Principles and Applications (Widrow, McCool, Williams). Looking forward, we aim to have an initial ANC implementation in our MATLAB simulation by the end of the week.
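The canceller described in Widrow et al.'s paper has a primary input (signal plus noise), a reference input (noise correlated with the noise in the primary), and an FIR filter adapted with the least-mean-squares (LMS) rule. As a rough sketch of that structure, assuming a noise-only reference is available, the Python below subtracts the filter's noise estimate from the primary input and uses the residual both as the output and as the error that drives the weight update. The tap count, step size, and simulated noise path (a 2-sample delay with gain 0.8) are arbitrary illustrative choices, not values from our design.

```python
import math
import random


def lms_anc(primary, reference, num_taps=16, mu=0.005):
    """LMS adaptive noise canceller.

    primary:   signal + noise from the main microphone
    reference: noise-dominated input correlated with the noise in `primary`
    Returns e[n] = primary[n] - y[n], the canceller's estimate of the clean signal.
    """
    w = [0.0] * num_taps    # FIR weights
    buf = [0.0] * num_taps  # recent reference samples, newest first
    out = []
    for d, x in zip(primary, reference):
        buf = [x] + buf[:-1]
        y = sum(wi * xi for wi, xi in zip(w, buf))  # filter's estimate of the noise
        e = d - y                                   # residual = output and error signal
        w = [wi + 2 * mu * e * xi for wi, xi in zip(w, buf)]  # LMS update
        out.append(e)
    return out


# Hypothetical test: a sine corrupted by noise that reaches the primary mic late
random.seed(0)
n = 4000
clean = [math.sin(2 * math.pi * k / 50) for k in range(n)]
noise = [random.gauss(0.0, 1.0) for _ in range(n)]
primary = [c + (0.8 * noise[k - 2] if k >= 2 else 0.0) for k, c in enumerate(clean)]
cleaned = lms_anc(primary, noise)
```

The step size `mu` trades convergence speed against steady-state error, and too large a value makes LMS diverge, so it would need tuning against the real microphone signals.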

Circuitry and Embedded Software (Sean)

We have a general design in which the hardware microphones are wired directly to the Teensy board's I/O pins. We are currently researching how to read Pulse Density Modulation (PDM) data from the microphones into the Teensy. Once the microphones arrive, we will begin assembling them and aim to get a signal through the circuitry.
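A PDM microphone outputs a 1-bit stream at a rate much higher than the audio sample rate, where the local density of 1s tracks the signal amplitude; turning it into ordinary PCM samples amounts to low-pass filtering and decimating that stream. The Python sketch below shows the idea offline: it stands in for the microphone with a first-order sigma-delta modulator and recovers samples by averaging each block of 64 bits (a boxcar filter followed by downsampling). The decimation factor, modulator order, and function names are assumptions for illustration; real microphones typically use higher-order modulators, and practical decoders use sharper filters such as CIC plus FIR stages.

```python
import math


def pdm_encode(samples):
    """Simulate a PDM microphone with a first-order sigma-delta modulator.

    `samples` must lie in [-1, 1]; returns 0/1 bits whose density tracks the input.
    """
    bits = []
    acc = 0.0  # integrator: running sum of (input - 1-bit output value)
    for x in samples:
        acc += x
        bit = 1 if acc >= 0 else 0
        acc -= 1.0 if bit else -1.0  # subtract the +1/-1 value this bit represents
        bits.append(bit)
    return bits


def pdm_to_pcm(bits, decimation=64):
    """Crude PDM-to-PCM conversion: average each block of `decimation` bits
    (boxcar low-pass + downsample) and map the density from [0, 1] to [-1, 1]."""
    pcm = []
    for start in range(0, len(bits) - decimation + 1, decimation):
        block = bits[start:start + decimation]
        pcm.append(2.0 * sum(block) / decimation - 1.0)
    return pcm


# Hypothetical example: a slow sine, 64 PDM bits per output sample
DECIMATION = 64
n_out = 200
x = [0.5 * math.sin(2 * math.pi * k / (DECIMATION * 100)) for k in range(DECIMATION * n_out)]
pcm = pdm_to_pcm(pdm_encode(x), DECIMATION)
```

In this sketch the modulator's integrator stays within [-1, 1], so each decoded sample lies within 2/64 of the true block average of the input, which is why even simple averaging recovers a recognizable waveform.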

Goals for Next Week

Next week, we plan to assemble the test environment. We also aim to begin assembling the hearing aid hardware, ideally getting a loopback from microphone to earphones working. For signal processing, our goal is to have an initial adaptive noise cancellation implementation running in our MATLAB simulation by the end of next week.