Hi everyone! For our ECE capstone project, we are building a directional hearing aid that uses a wearable microphone array.

Inspiration

The inspiration for this project came from meeting a dance instructor, Dan, who is legally deaf and relies on a hearing aid to communicate with his students. Many conventional hearing aids simply amplify all nearby sound. That makes hearing nearly impossible in a noisy environment like a dance studio, with its loud music and multiple conversations. As a result, Dan usually resorts to lip reading or turning up the volume on his earpiece, which only damages his hearing further.

Solution

To improve on conventional hearing aids in noisy environments, our group proposes a directional hearing aid.

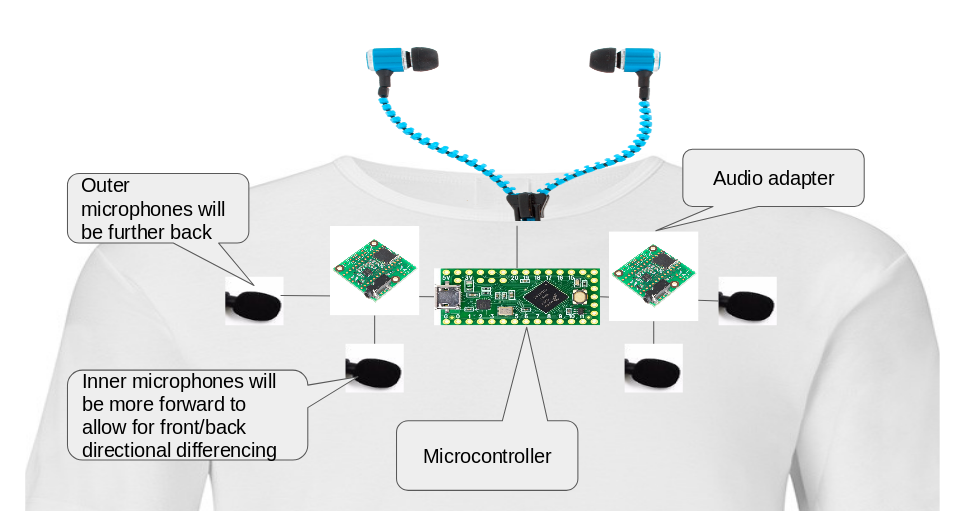

Our implementation will consist of a microphone array worn by a hearing-impaired person. The microphones will be connected to a microcontroller, which will apply signal processing algorithms to emphasize sound coming from directly in front of the user. We assume that the user’s torso faces the direction they want to hear.
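As an illustration of what "focusing" on the front can mean, here is a minimal delay-and-sum beamformer in C. This is a sketch under stated assumptions, not the algorithm we have chosen. It assumes integer-sample steering delays and channels that are sampled in sync. Suppose the microphones sit in a horizontal line across the chest and the talker is straight ahead and far enough away that the wavefront is roughly flat. In that case the sound reaches every microphone at the same time, all steering delays are zero, and the beamformer reduces to averaging the channels. The function name `delay_and_sum` and its parameters are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/*
 * Hypothetical delay-and-sum beamformer (illustrative sketch only).
 * Each channel is delayed by an integer number of samples so that sound
 * from the steering direction lines up across channels, then the channels
 * are averaged. Aligned (target) sound adds coherently, while uncorrelated
 * noise and sound from other directions partially cancel.
 *
 * mics[m][i] : sample i of microphone m
 * delays[m]  : steering delay for microphone m, in samples
 * out[i]     : beamformed output sample i
 * A channel contributes zero until its delay has elapsed.
 */
void delay_and_sum(const float *const *mics, size_t num_mics,
                   const size_t *delays, size_t n, float *out)
{
    assert(num_mics > 0);
    for (size_t i = 0; i < n; i++) {
        float acc = 0.0f;
        for (size_t m = 0; m < num_mics; m++) {
            /* Use the sample from delays[m] samples ago, if it exists yet. */
            if (i >= delays[m]) {
                acc += mics[m][i - delays[m]];
            }
        }
        out[i] = acc / (float)num_mics;
    }
}
```

Averaging N aligned channels leaves the target unchanged, while the power of noise that is independent across microphones drops by roughly a factor of N. Sound from other directions is only partially attenuated, which is why more selective methods are still worth exploring.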

We chose a torso-worn microphone array because it gives us room for more microphones with greater separation between them. This makes possible certain signal processing methods that are not effective in traditional small in-ear and on-ear hearing aids, where the microphones must sit close together.

Problems We’re Not Trying To Solve

We have a specific use case in mind: the user is trying to hear a person in front of them in noisy conditions, such as a crowded room. In particular, we do not aim to help users listen to music or public speakers in a crowded venue. The standard solution there is a hearing loop system, which transmits the sound wirelessly and directly to the user’s hearing aid.

We are also not trying to handle sound sources that move around the user, since it would be very difficult to predict which sounds in a whole soundscape a person actually wants to hear. Instead, we envision that the user can simply turn their body to face whatever they want to hear.

Implementation Details

Physical Implementation

We will build an array of several (likely four) microphones mounted on the front of the user’s torso. We have not finalized the mounting, but a vest is one option. The microphones will interface with a Teensy 3.6 microcontroller, which we chose because it is the base component of the Tympan open-source hearing aid board.

After processing the microphone input, we will send the output to a pair of headphones. We do not have the time or resources to build our own in-ear hearing aid or reverse-engineer a commercial one.

Signal Processing Implementation

Our goal is to reduce background noise and improve the directionality of what the user hears, in real time and especially for speech. We will implement the algorithms in C and run them on the Teensy, turning the raw microphone signals into a cleaner signal that the user can listen to on the headphones. Candidate methods include multi-channel Wiener filtering and adaptive nulling, though we have not yet settled on a particular algorithm.
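To make "adaptive nulling" more concrete, here is a sketch of one well-known structure for it: a two-microphone Griffiths-Jim generalized sidelobe canceller whose adaptive filter is updated with normalized LMS (NLMS). It illustrates the idea only and is not necessarily what we will implement. It assumes the target is straight ahead, so it arrives identically at both microphones. Under that assumption:

- The average of the two channels keeps the target.
- Their difference cancels the target and leaves mostly interference.
- The adaptive filter learns to subtract whatever interference leaks into the average.

The names `gsc_state`, `gsc_init` and `gsc_process`, the 16-tap length and the step size are all hypothetical choices.

```c
#include <assert.h>
#include <stddef.h>

#define GSC_TAPS 16 /* hypothetical adaptive filter length */

/* State for a hypothetical two-microphone generalized sidelobe canceller. */
typedef struct {
    float w[GSC_TAPS];   /* adaptive filter weights */
    float ref[GSC_TAPS]; /* recent noise-reference samples, newest first */
    float mu;            /* NLMS step size, must satisfy 0 < mu < 2 */
} gsc_state;

void gsc_init(gsc_state *s, float mu)
{
    assert(mu > 0.0f && mu < 2.0f);
    for (size_t k = 0; k < GSC_TAPS; k++) {
        s->w[k] = 0.0f;
        s->ref[k] = 0.0f;
    }
    s->mu = mu;
}

/* Process one sample from each microphone and return one output sample. */
float gsc_process(gsc_state *s, float x0, float x1)
{
    const float eps = 1e-6f;          /* avoids division by zero in NLMS */
    float fixed = 0.5f * (x0 + x1);   /* fixed beam: keeps the front target */
    float blocked = x0 - x1;          /* blocking path: front target cancels */

    /* Shift the noise-reference history and insert the newest sample. */
    for (size_t k = GSC_TAPS - 1; k > 0; k--) {
        s->ref[k] = s->ref[k - 1];
    }
    s->ref[0] = blocked;

    /* Estimate the interference present in the fixed beam. */
    float est = 0.0f;
    float energy = eps;
    for (size_t k = 0; k < GSC_TAPS; k++) {
        est += s->w[k] * s->ref[k];
        energy += s->ref[k] * s->ref[k];
    }

    float e = fixed - est; /* output: fixed beam minus estimated interference */

    /* NLMS update: step toward reducing the output power. */
    float g = s->mu * e / energy;
    for (size_t k = 0; k < GSC_TAPS; k++) {
        s->w[k] += g * s->ref[k];
    }
    return e;
}
```

A practical version would also need to pause adaptation while the target is speaking, because any target sound that leaks into the difference channel can otherwise be cancelled along with the noise. It would also likely process audio in blocks rather than one sample at a time.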

Conclusion

We’re working on an interesting project with the potential to help many hearing-impaired people around the world. If you would like to help, please contact us at seanande@andrew.cmu.edu, bobbiec@andrew.cmu.edu, or kmsong@andrew.cmu.edu!