Baby Got Track Video

Baby Got Track Poster

Just another Carnegie Mellon University: ECE Capstone Projects site

Going forward, I plan to reduce the resolution of the image and see if that improves the processing time. I also want to mount the Raspberry Pi on the crib so that I can make the necessary adjustments to light, exposure, etc. and keep the images consistent.
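To illustrate the resolution idea, here is a minimal sketch of shrinking a grayscale image by averaging blocks of pixels. It is not the project's code: the `downscale` function and the list-of-rows image format are assumptions made for this example, and in practice the resolution would more likely be lowered in the camera settings or with an image library.

```python
def downscale(image, factor):
    """Shrink a grayscale image by averaging factor x factor pixel blocks.

    `image` is assumed to be a list of equal-length rows of integer
    intensities (a hypothetical format chosen for this sketch).
    """
    if factor < 1:
        raise ValueError("factor must be >= 1")
    height = len(image) // factor
    width = len(image[0]) // factor if image else 0
    small = []
    for r in range(height):
        row = []
        for c in range(width):
            # Collect every pixel in the block that maps to output pixel (r, c).
            block = [
                image[r * factor + dr][c * factor + dc]
                for dr in range(factor)
                for dc in range(factor)
            ]
            row.append(sum(block) // len(block))
        small.append(row)
    return small


if __name__ == "__main__":
    frame = [
        [0, 0, 10, 10],
        [0, 0, 10, 10],
        [20, 20, 30, 30],
        [20, 20, 30, 30],
    ]
    print(downscale(frame, 2))
```

Halving the width and height leaves a quarter of the pixels to process, which is why a lower resolution might shorten the processing time, at the cost of detail around the eyes.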

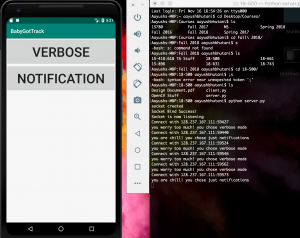

Going forward, I need to wait for Priyanka to automate the Bluetooth connection that sends data from the sensors to the Pi, so that I can include it in the Python script and load it at boot time.

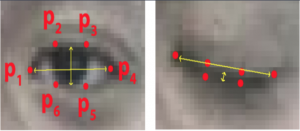

Eye markers for open and closed eyes look like:

The algorithm then computes the distances between the features of each eye and averages them into a single number. I trained it on various baby images, and it seems to work really well (only 1 out of 100 was incorrectly classified).
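The distance-and-average step could be sketched roughly as follows. This is only an illustration under stated assumptions: the pairing of upper-lid and lower-lid markers, the function names `eye_opening` and `eyes_open`, and the threshold value are all hypothetical and are not taken from the actual classifier.

```python
import math


def eye_opening(upper_lid, lower_lid):
    """Average distance between paired upper-lid and lower-lid markers.

    Each argument is a list of (x, y) points; pairing them one to one
    is an assumption made for this sketch.
    """
    distances = [math.dist(u, l) for u, l in zip(upper_lid, lower_lid)]
    return sum(distances) / len(distances)


def eyes_open(left_eye, right_eye, threshold=4.0):
    """Average the opening of both eyes and compare it to a threshold.

    `threshold` is a made-up value; a real one would have to be tuned
    on labelled images.
    """
    score = (eye_opening(*left_eye) + eye_opening(*right_eye)) / 2
    return score > threshold, score


if __name__ == "__main__":
    # Each eye is (upper-lid markers, lower-lid markers), in pixels.
    left = ([(0, 0), (1, 0)], [(0, 6), (1, 6)])
    right = ([(5, 0), (6, 0)], [(5, 8), (6, 8)])
    print(eyes_open(left, right))
```

Averaging over both eyes gives a single number per image, so one noisy marker has less influence on the open or closed decision.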

Correctly detecting open eyes:

Correctly detecting closed eyes:

All of us worked to finalize the project requirements and came up with hardware/software specifications. We spent some time developing the slide deck for the presentation, which gave us an opportunity to think through the integration, algorithms, risk factors, and unknowns once again. We got constructive feedback, which we are going to take into account going forward.

A breakdown of how I spent my time:

Here’s a breakdown of my time this week:

Here is how I spent my time this week: