Aayush

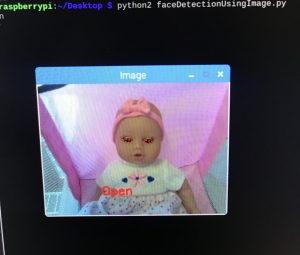

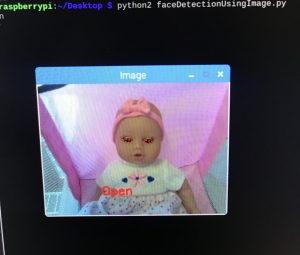

- I finally got the eye open/close detection working on the Pi after struggling for over three weeks to install the required modules. It turns out that the Pi is 10 times slower than my MacBook, which is where I had been timing the code. The Pi takes ~10 seconds to process each image taken with the Raspberry Pi camera at a resolution of (1024, 768).

Going forward, I plan to reduce the image resolution and see whether that improves the processing time. I also want to mount the Raspberry Pi on the crib so that I can adjust lighting, exposure, etc. to keep the images consistent.
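As a rough guide to what a lower resolution might buy, the sketch below assumes that processing time scales linearly with pixel count. That is an assumption, not a measurement: fixed costs such as loading the detector would not shrink with the image. The function name and the candidate resolutions are illustrative only.

```python
def estimate_seconds(base_res, base_seconds, new_res):
    """Scale a measured time by the ratio of pixel counts (assumed linear)."""
    base_pixels = base_res[0] * base_res[1]
    new_pixels = new_res[0] * new_res[1]
    return base_seconds * new_pixels / base_pixels


# Measured on the Pi: about 10 s per 1024x768 image.
measured_res, measured_seconds = (1024, 768), 10.0

# Candidate resolutions are hypothetical choices to try.
for res in [(1024, 768), (640, 480), (512, 384), (320, 240)]:
    estimate = estimate_seconds(measured_res, measured_seconds, res)
    print(f"{res[0]}x{res[1]}: ~{estimate:.1f} s")
```

Under this assumption, halving both dimensions cuts the pixel count to a quarter, so the real timings on the Pi are worth comparing against these estimates to see how much of the 10 seconds is fixed overhead.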

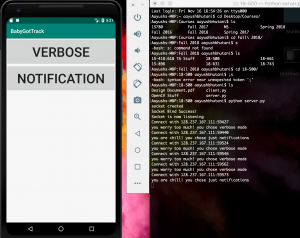

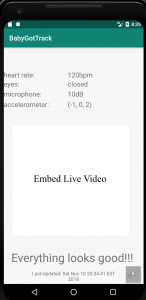

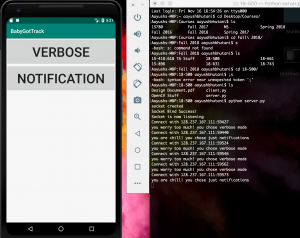

- I wrote a basic server in Python and modified the app to send data to and receive data from the server over the internet. Below is a demonstration of data transfer from the app to the server.

Going forward, I need to wait for Priyanka to automate the Bluetooth link that sends data from the sensors to the Pi, so that I can include it in the Python script and load it at boot time.
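For readers who want a picture of what a basic Python server for this kind of app-to-server transfer can look like, here is a minimal sketch using only the standard library. It is not the server described above: the `/readings` path, the `eyes_open` field, and the JSON reply format are all hypothetical, and the client half stands in for the app.

```python
import json
import threading
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer

received = []  # readings posted by the client, kept in memory for this sketch


class ReadingHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Read and decode the JSON body sent by the client.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        received.append(payload)
        body = json.dumps({"status": "ok", "count": len(received)}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # silence per-request logging


def post_reading(url, reading):
    """Send one reading as JSON and return the decoded reply."""
    request = urllib.request.Request(
        url,
        data=json.dumps(reading).encode(),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # An empty ProxyHandler keeps local requests from going through a proxy.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    with opener.open(request, timeout=5) as response:
        return json.loads(response.read())


def demo():
    # Port 0 asks the OS for any free port; a real deployment would fix one.
    server = HTTPServer(("127.0.0.1", 0), ReadingHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    try:
        url = f"http://127.0.0.1:{server.server_port}/readings"
        return post_reading(url, {"eyes_open": True})  # hypothetical field
    finally:
        server.shutdown()
        server.server_close()


if __name__ == "__main__":
    print(demo())
```

Keeping the client call in a small function like `post_reading` means the same code could later be called from the boot-time script on the Pi once the sensor data is available.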

Angela

- I have continued to work on the crying detection. I installed packages such as PyAudio to do file transformation.

- I am working on integrating my sleep-wake algorithm and heart rate detection with the packets that Priyanka is sending. Each packet has the form:

  - start character

  - sampling rate

  - data stream

  - end character

- My plan for next week is to finish this integration so that we can start automating the detection algorithms after we come back from break. I will also work with some materials to make the circuitry into a wearable.
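The packet layout listed above (start character, sampling rate, data stream, end character) could be parsed along the following lines. This is only a sketch: the actual framing characters, field separator, and data encoding are not specified here, so the `<` and `>` markers, the comma-separated text format, and the integer samples are all assumptions made for illustration.

```python
START, END = "<", ">"  # hypothetical framing characters


def parse_packet(packet):
    """Return (sampling_rate, samples) from one framed text packet."""
    if not (packet.startswith(START) and packet.endswith(END)):
        raise ValueError("packet is missing its start or end character")
    # Strip the framing, then split into the rate followed by the samples.
    fields = packet[len(START):-len(END)].split(",")
    if len(fields) < 2 or not fields[0]:
        raise ValueError("packet needs a sampling rate and at least one sample")
    sampling_rate = int(fields[0])  # assumed to be in Hz
    samples = [int(value) for value in fields[1:]]
    return sampling_rate, samples


# Example packet with made-up values: 50 Hz, four samples.
rate, samples = parse_packet("<50,512,530,498,505>")
duration_seconds = len(samples) / rate  # time span covered by this packet
```

Carrying the sampling rate in every packet lets the sleep-wake and heart rate code convert sample counts to time without hard-coding the sensor configuration.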

Priyanka

- Working on connecting the Pi over Wi-Fi to the app that Aayush has been working on. Spent most of the time researching what to do, since I needed to get the app's endpoint from Aayush.

- Worked on automating the start-up so that everything connects properly on boot (still a work in progress).

- Also need to solder the wearable hardware together so that I can get readings from both sensors at the same time.