Baby Got Track Video

Baby Got Track Poster

Just another Carnegie Mellon University: ECE Capstone Projects site

Going forward, I plan to reduce the image resolution and see whether that improves the processing time. I also want to mount the Raspberry Pi on the crib so that I can adjust lighting, exposure, and similar settings to keep the images consistent.
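As a rough illustration of why a lower resolution should help, here is a minimal sketch that shrinks a grayscale image by averaging square blocks of pixels. The `downsample` function and the list-of-rows image format are assumptions made for this example, not the project's actual code; with OpenCV, `cv2.resize` would normally do this job.

```python
def downsample(image, factor):
    """Shrink a grayscale image (a list of rows of ints) by averaging
    each factor x factor block into a single pixel.

    Hypothetical helper for illustration only.
    """
    height = len(image) // factor
    width = len(image[0]) // factor
    result = []
    for row in range(height):
        out_row = []
        for col in range(width):
            # Collect every pixel in the block that maps to this output pixel.
            block = [
                image[row * factor + dy][col * factor + dx]
                for dy in range(factor)
                for dx in range(factor)
            ]
            out_row.append(sum(block) // len(block))
        result.append(out_row)
    return result


if __name__ == "__main__":
    frame = [
        [0, 2, 4, 6],
        [2, 4, 6, 8],
        [10, 10, 20, 20],
        [10, 10, 20, 20],
    ]
    small = downsample(frame, 2)
    print(small)
    print(len(frame) * len(frame[0]), "pixels ->", len(small) * len(small[0]), "pixels")
```

Halving each dimension leaves a quarter of the pixels, so any per-pixel step has roughly a quarter of the work to do. Whether the detector stays accurate at the smaller size still has to be checked on real images.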

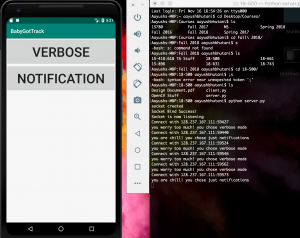

Going forward, I need to wait for Priyanka to automate the Bluetooth link that sends data from the sensors to the Pi, so that I can include it in the Python script and load the script at boot time.

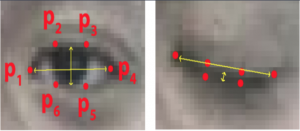

Eye markers for open and closed eyes look like:

The algorithm then computes the distances between the features for each eye and averages them to give a single number. I trained it on various baby images and it seems to work really well: only 1 out of 100 images was incorrectly classified.
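The distance-and-average step can be sketched roughly as follows. This is only an illustration under assumptions: the marker layout (paired upper-lid and lower-lid points per eye), the function names `eye_openness` and `eyes_open`, and the threshold of 4.0 pixels are all hypothetical and not taken from the actual implementation.

```python
import math


def eye_openness(upper_lid, lower_lid):
    """Mean distance between paired upper-lid and lower-lid markers of one eye.

    Each argument is a list of (x, y) points; the pairing is an assumption.
    """
    gaps = [math.dist(top, bottom) for top, bottom in zip(upper_lid, lower_lid)]
    return sum(gaps) / len(gaps)


def eyes_open(left_eye, right_eye, threshold=4.0):
    """Average the openness of both eyes and compare it to a threshold.

    The threshold is a made-up value; a real one would come from labelled images.
    Returns (is_open, score).
    """
    score = (eye_openness(*left_eye) + eye_openness(*right_eye)) / 2
    return score > threshold, score


if __name__ == "__main__":
    # Each eye is (upper_lid_points, lower_lid_points).
    open_eye = ([(10, 10), (14, 9)], [(10, 18), (14, 17)])
    closed_eye = ([(10, 10), (14, 10)], [(10, 11), (14, 11)])
    print(eyes_open(open_eye, open_eye))
    print(eyes_open(closed_eye, closed_eye))
```

Averaging over both eyes keeps one badly placed marker from flipping the result on its own, although a fixed pixel threshold like this one would need rescaling if the face size in the frame changes.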

Correctly detecting open eyes:

Correctly detecting closed eyes:

Angela:

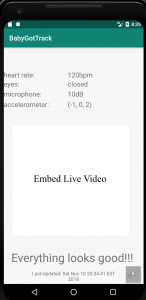

I implemented simple signal processing algorithms to identify sleep-wake cycles, looked for data sets to use for sleep-wake training, and downloaded OpenCV.
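One simple approach of this kind, shown here purely as an assumed example and not necessarily the one used in the project, is to smooth a movement signal with a moving average and threshold the result. The function names, the window size, and the threshold are all hypothetical.

```python
def moving_average(samples, window):
    """Trailing moving average; early samples use however many values exist."""
    smoothed = []
    for i in range(len(samples)):
        start = max(0, i - window + 1)
        chunk = samples[start:i + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed


def label_sleep_wake(samples, window=3, threshold=0.5):
    """Label each sample 'wake' if the smoothed movement exceeds the threshold.

    window and threshold are illustrative values, not tuned on real data.
    """
    return [
        "wake" if value > threshold else "sleep"
        for value in moving_average(samples, window)
    ]


if __name__ == "__main__":
    movement = [0.0, 0.0, 0.0, 3.0, 3.0, 3.0, 0.0, 0.0, 0.0]
    print(label_sleep_wake(movement))
    # A single short twitch is smoothed away and does not count as waking up.
    print(label_sleep_wake([0.0, 0.0, 1.2, 0.0, 0.0]))
```

The smoothing trades responsiveness for stability: a larger window ignores brief twitches but also reports a real wake-up a few samples late.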

Priyanka:

I set up the Teensy board and the Arduino IDE to program it. I also got all the parts and am now figuring out how to program the different sensors.

Aayush:

I downloaded OpenCV and implemented a basic face detection algorithm, along with an open/closed eye detector that is partially working.