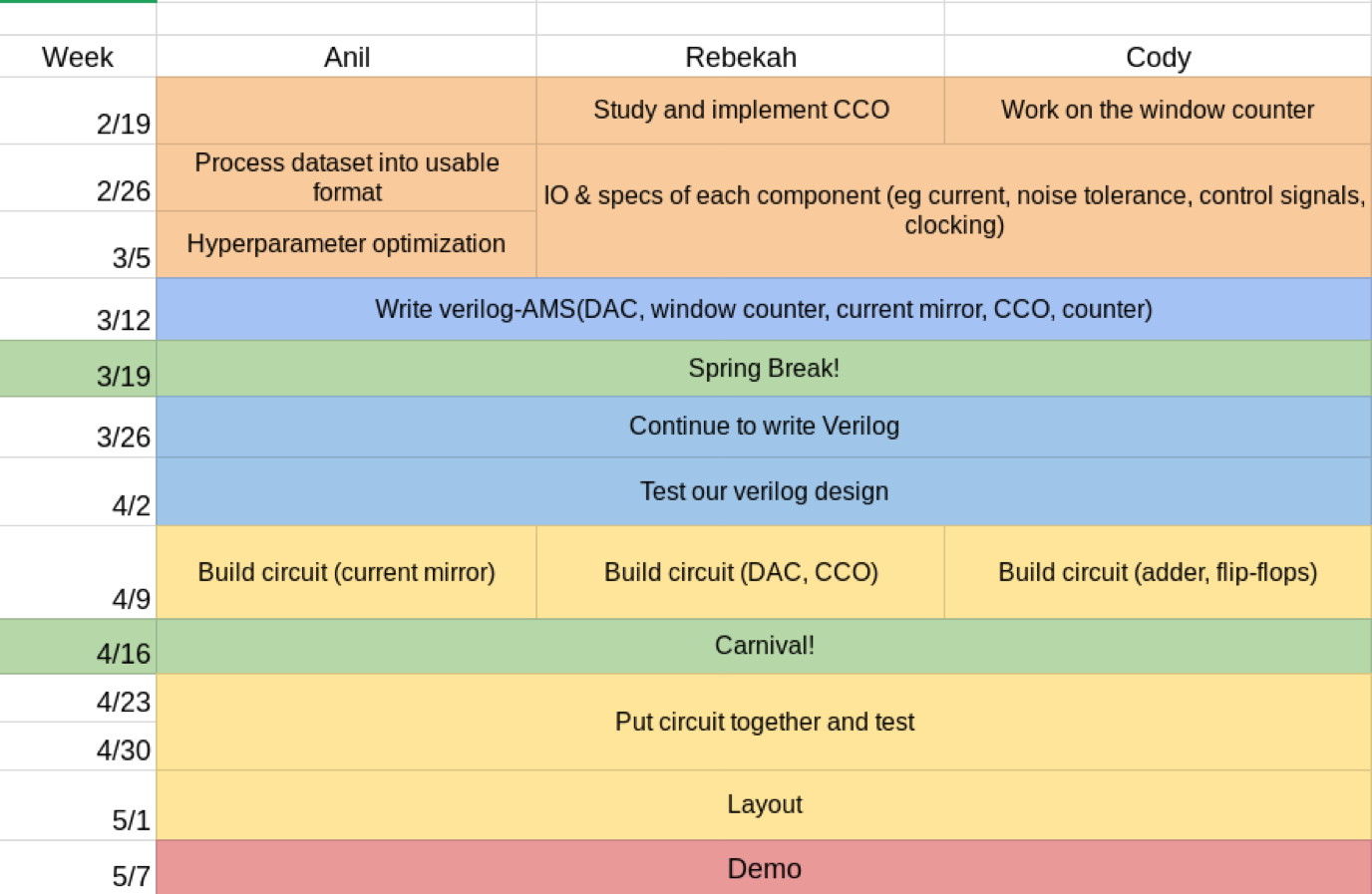

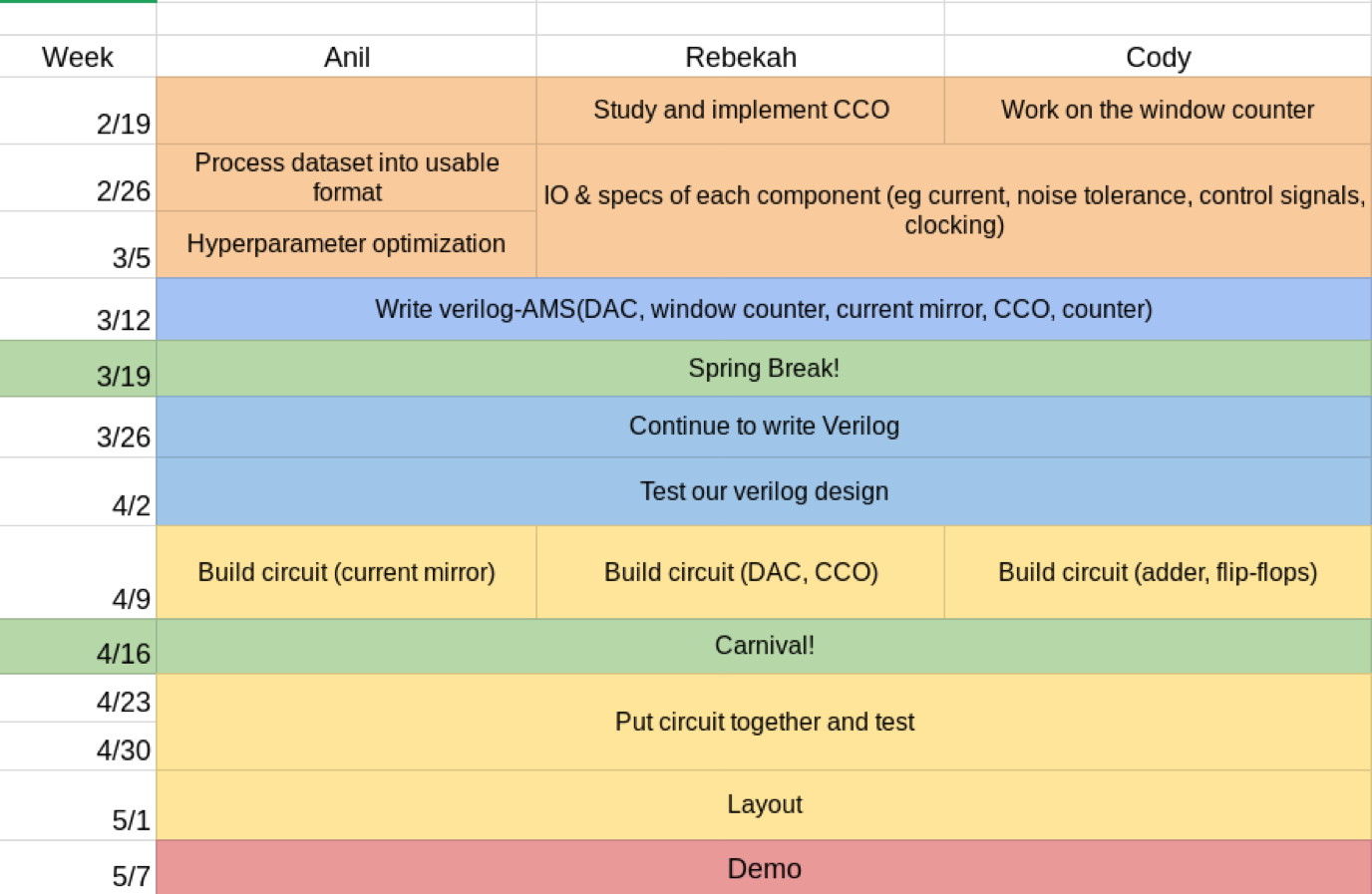

Projected Timeline

This week I was sick the majority of the time, so I was unable to contribute much. I did hand calculations for the current-controlled oscillator (CCO) and tested its performance. Cody and I were able to extract information from simulation and decide on specs for the other components. I also wrote the website for our team.

What I plan to do in the upcoming week: help Cody write Verilog for the system and finalize the spec for each component. I would also like to help Anil improve the classification accuracy and optimize the algorithm so that it best simulates our system.

This week I've worked on the digital components and worked with Rebekah on characterizing each component to determine what specs we want to use (e.g., number of window counters per channel, current range of the DAC, working current range of the CCO, etc.). In addition, I've started writing the Verilog for modeling our circuit.

I also wrote some Matlab starter code to help Anil get started with programming. We discussed in detail how to manipulate the neural data we acquired online.

Next week I will work with Rebekah to finish the Verilog model of the system.

What I’ve done this week

My plan is to write another MATLAB script that sweeps possible values for the hyperparameters, evaluates the performance of each combination, and picks out the optimal hyperparameters when run for a long time (e.g., overnight).
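A minimal sketch of such a sweep is shown here in Python rather than MATLAB. The grid values, the parameter names, and `toy_score` are hypothetical placeholders; in practice the scoring function would train the decoder and return its accuracy.

```python
import itertools


def sweep(grid, evaluate):
    """Try every combination in `grid` and return (best_params, best_score)."""
    names = list(grid)
    best_params, best_score = None, float("-inf")
    for values in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        score = evaluate(params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical grid: these ranges are illustrative, not the team's actual values.
grid = {
    "hidden_nodes": [20, 40, 60, 80],
    "window_size": [5, 10, 20],
    "time_bin_ms": [10, 20, 50],
}


def toy_score(params):
    # Stand-in for "train the decoder and return its accuracy".
    # It peaks at hidden_nodes=60, window_size=10, time_bin_ms=20.
    return -(abs(params["hidden_nodes"] - 60)
             + abs(params["window_size"] - 10)
             + abs(params["time_bin_ms"] - 20))


best, score = sweep(grid, toy_score)
print(best, score)
```

An exhaustive grid grows multiplicatively with every added hyperparameter, which is why a run like this is a natural fit for an overnight job.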

Together as a team, we worked on the design proposal.

This week I worked with Cody to determine specs of our system (input signal duty cycle, calculations to determine power consumption). I also studied analog-AMS and what to include in our modules.

Next week I will be working with Cody to figure out detailed specifications as well as various testing strategies for the system.

I continued working with Rebekah to determine more detailed specs of our system (input signal duty cycle, calculations to determine expected power consumption, etc.). I also wrote Verilog for the digital portion of our chip and am currently working on the testbench verification. I plan to work with Rebekah on writing the Verilog-AMS model of the analog portion.

I finished working on hyperparameter optimization, using the ELM algorithm in MATLAB to determine the baseline design specs that we are going for in our circuit design. The hyperparameters include hidden layer node count, window counter sizes, number of classes for classification, time bins for grouping spike trains, and a few more.
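For readers unfamiliar with the approach, here is a small Python sketch of an ELM-style classifier, assuming ELM stands for extreme learning machine: the hidden-layer weights are random and fixed, and only the output weights are solved by regularized least squares. The toy data, the ridge value, and all function names are illustrative assumptions, not the team's MATLAB code.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def solve(a, b):
    """Solve a @ x = b (b may have several columns) by Gauss-Jordan elimination."""
    n = len(a)
    m = [row_a[:] + row_b[:] for row_a, row_b in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        p = m[col][col]
        m[col] = [v / p for v in m[col]]
        for r in range(n):
            if r != col:
                f = m[r][col]
                m[r] = [vr - f * vc for vr, vc in zip(m[r], m[col])]
    return [row[n:] for row in m]


def hidden(x, w, b):
    """Hidden-layer outputs for one input vector."""
    return [sigmoid(sum(wi * xi for wi, xi in zip(wj, x)) + bj)
            for wj, bj in zip(w, b)]


def train_elm(xs, labels, n_hidden, n_classes, ridge=1e-3, seed=0):
    rng = random.Random(seed)
    n_in = len(xs[0])
    # Random, untrained input weights and biases (the defining ELM property).
    w = [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)]
    b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
    h = [hidden(x, w, b) for x in xs]
    # Normal equations: (H^T H + ridge * I) beta = H^T T, with one-hot targets T.
    hth = [[sum(row[i] * row[j] for row in h) + (ridge if i == j else 0.0)
            for j in range(n_hidden)] for i in range(n_hidden)]
    htt = [[sum(row[i] for row, y in zip(h, labels) if y == c)
            for c in range(n_classes)] for i in range(n_hidden)]
    return w, b, solve(hth, htt)


def predict(x, model):
    w, b, beta = model
    hx = hidden(x, w, b)
    scores = [sum(hj * row[c] for hj, row in zip(hx, beta))
              for c in range(len(beta[0]))]
    return scores.index(max(scores))


# Toy two-class data: two well-separated clusters (purely illustrative).
data_rng = random.Random(1)
xs, labels = [], []
for _ in range(30):
    xs.append([-1 + data_rng.uniform(-0.2, 0.2), -1 + data_rng.uniform(-0.2, 0.2)])
    labels.append(0)
    xs.append([1 + data_rng.uniform(-0.2, 0.2), 1 + data_rng.uniform(-0.2, 0.2)])
    labels.append(1)

model = train_elm(xs, labels, n_hidden=10, n_classes=2)
accuracy = sum(predict(x, model) == y for x, y in zip(xs, labels)) / len(xs)
print(f"training accuracy: {accuracy:.2f}")
```

In this sketch, `n_hidden` and `n_classes` correspond to two of the hyperparameters listed above; window counter sizes and time bins would enter through how the input vectors `xs` are built.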

Next week I am going to join Rebekah and Cody in designing the analog portions of the circuit, such as the current mirror array.

This week I worked with Anil on studying the power consumption specifications with respect to the algorithm's tolerance. At first we roughly calculated the power consumption by simply scaling the process and found that, to meet the power requirement of the paper, we need to reduce the drain current. However, after running simulations I found that the threshold voltage of the transistor is too high. Therefore we have decided to operate in subthreshold to achieve power consumption in the nanowatt range. I also ran Monte Carlo simulations on the current mirrors to characterize the standard deviation of the process mismatch parameters. Anil has been running simulations with the data I provided. Once we collaboratively solve this problem, we will be able to plug the results into our Verilog model. Looking forward, I will be studying subthreshold circuit design.
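To illustrate what such a Monte Carlo characterization produces, here is a simplified Python sketch of a 1:1 current mirror in subthreshold. It assumes that the only mismatch is a Gaussian threshold-voltage offset between the two transistors and that the current follows the usual exponential subthreshold law. The 5 mV sigma, the slope factor, and the function name are placeholders, not values from our Cadence runs.

```python
import math
import random
import statistics


def mirror_ratios(n_trials, sigma_vt, slope_factor=1.5,
                  thermal_voltage=0.02585, seed=0):
    """Sample Iout/Iin for a 1:1 subthreshold mirror with Vt mismatch.

    Assumes I ~ exp((Vgs - Vt) / (n * UT)), so a threshold offset dVt
    changes the mirrored current by a factor exp(-dVt / (n * UT)).
    """
    rng = random.Random(seed)
    scale = slope_factor * thermal_voltage
    return [math.exp(-rng.gauss(0.0, sigma_vt) / scale) for _ in range(n_trials)]


# sigma_vt = 5 mV is a placeholder, not a characterized process value.
ratios = mirror_ratios(n_trials=5000, sigma_vt=0.005)
median_ratio = statistics.median(ratios)
log_sigma = statistics.stdev([math.log(r) for r in ratios])
print(f"median ratio = {median_ratio:.3f}, sigma of ln(ratio) = {log_sigma:.3f}")
```

Under these assumptions the ratios are log-normally distributed around 1, so the spread is best summarized by the standard deviation of the logarithm of the ratio.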

This week I worked with Anil and Rebekah to figure out the power problem. Although we haven't solved it completely, we're on the right track and are starting to understand the steps we need to take in order to significantly reduce our power consumption (how much we can reduce Vdd by, size of the current mirror array, etc.). Also, this week I've worked on the Verilog model. The window counter is tested and verified, and now I'm working on the CCO model.

This week I’ve continued working in MATLAB, but now on a different analysis. Rebekah and I figured out that our current mirrors produce a random distribution of weights centered not around 0 but around 1, and I have been running MATLAB simulations to see how this change affects our decoding accuracy (unfortunately, it reduces the accuracy very significantly). I have been trying to come up with the reasons why this is happening and a possible solution for it. So far I have enough data to support the notion that it is caused by all the data being stuck on a low-gradient portion of the sigmoid function (used as the activation function in the hidden layer and implemented by the CCO). I’m in the process of adjusting the steepness and the center of this sigmoid function to improve the decoding accuracy, and from that deriving the necessary restrictions (design parameters) for the CCO.
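The saturation effect can be seen with a few lines of arithmetic. The Python sketch below compares the slope of the sigmoid at the typical hidden-node input when the weights average 0 versus 1. The input count and mean input value are hypothetical, and the `steepness` and `center` parameters are just one assumed way to parameterize the adjustment.

```python
import math


def sigmoid(z, steepness=1.0, center=0.0):
    return 1.0 / (1.0 + math.exp(-steepness * (z - center)))


def slope(z, steepness=1.0, center=0.0):
    """Derivative of the sigmoid with respect to z."""
    s = sigmoid(z, steepness, center)
    return steepness * s * (1.0 - s)


# Hypothetical numbers: 20 inputs with an average value of 0.5 each.
n_inputs, mean_input = 20, 0.5

# Typical weighted sum at a hidden node for the two weight distributions.
z_zero_mean = n_inputs * mean_input * 0.0   # weights centered on 0
z_unit_mean = n_inputs * mean_input * 1.0   # weights centered on 1

slope_zero = slope(z_zero_mean)                            # steepest point
slope_unit = slope(z_unit_mean)                            # deep in saturation
slope_recentered = slope(z_unit_mean, center=z_unit_mean)  # center moved to the data

print(slope_zero, slope_unit, slope_recentered)
```

With these numbers the slope drops by more than three orders of magnitude when the weights are centered on 1, and moving the center of the sigmoid to the typical input restores it.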

This week I built and tested the DAC in Cadence. I first tried the topology provided in the paper, and it had a large DNL. Therefore I switched to a current-mirror-based topology, and the DNL is now less than 1 bit. I also studied the structure of the CCO and built it based on the desired operating frequency. In addition, I worked with Anil on understanding and improving the algorithm in order to obtain a higher decoding accuracy. The system now has components that are ready for system-level testing, which will be the focus of next week's work.
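As a reminder of how DNL is usually computed, here is a short Python sketch that takes the DAC output at each code and reports the deviation of every step from the ideal step size, conventionally expressed in LSB. The output values are made up for illustration, and the endpoint-fit definition of the LSB is an assumption about the measurement method.

```python
def dnl(levels):
    """Return the DNL of each code step, in LSB, using an endpoint-fit LSB."""
    steps = [b - a for a, b in zip(levels, levels[1:])]
    lsb = (levels[-1] - levels[0]) / len(steps)
    return [step / lsb - 1.0 for step in steps]


# Made-up output currents (nA) of a 3-bit DAC, one entry per input code.
measured = [0.0, 1.0, 2.1, 2.9, 4.0, 5.2, 5.9, 7.0]
errors = dnl(measured)
worst = max(abs(e) for e in errors)
print(f"worst-case |DNL| = {worst:.2f} LSB")
```

A worst-case magnitude below 1 LSB means that no step is missing or reversed, so the transfer curve stays monotonic.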

This week I worked on building and testing the schematics for the digital portion of the system. It is mostly working on its own right now, but I need to make a few timing adjustments and also test it with the rest of the system.

This week I worked with Rebekah, and sometimes on my own, to improve the decoding accuracy of our algorithm. I developed a new approach that raised the decoding accuracy from 60% to ~70% for four-class classification.

This week I worked on running Monte Carlo simulations of individual transistors to make sure the toolkit we use is capable of modeling both process variation and mismatch. I also integrated the entire system after Cody finished building the digital portion. I set up testbenches to study the influence of mismatch on a subsection of the system. I also put together a smaller version of the entire system to study how to dump outputs for Monte Carlo analysis and post-processing.

This week I finished building the schematics for the digital portion of the system as well as testing and verifying functionality.

I also wrote MATLAB and Verilog code for generating the input vectors to the system from the raw data.

This week I finished the hyperparameter adjustments and finalized the decisions for several size parameters of the circuit, taking power consumption and accuracy into consideration. These decisions included the window_counter moving array size, the number of hidden layer nodes, etc. I wrote MATLAB code to verify the adjustment of the activation function within the hidden layer and optimized it with respect to decoding accuracy. I am currently working on how to dump Monte Carlo analysis results from Cadence and read them back into MATLAB for testing purposes.

This week Cody and I worked on debugging the nominal performance of the overall system. The largest challenge we faced was simulation time. We tried Spectre and Ultrasim in analog and mixed-signal simulation modes, each taking a long time (hours). We eventually managed to verify the nominal behavior of the circuit.

This week I worked with Rebekah on debugging a scaled-down version of our design (there was an issue in our interpretation of the output signals as well as a timing issue with the reset signals). I believe we've resolved the bugs for now.

In addition, I've been working on running simulations and modifying simulation settings in order to run longer simulations while keeping simulation times at reasonable lengths.

This week I worked to improve the decoding accuracy of our algorithm by trying other hyperparameter optimization techniques and adding more post-processing layers in the MATLAB section. I’ve tested input vectors that are all going in the same direction, and I am in the process of putting appropriate padding in between turns during data analysis.

This week we had lots of issues with the Cadence tools as well as lab space. I ran multiple overnight simulations with Spectre, Ultrasim, APS, and XPS MS, covering both Monte Carlo and nominal simulations. We also drafted a list of things to do for next week.

This week we ran into a lot of tool issues with Cadence, so Rebekah and I worked on resolving them as well as running simulations. We also drafted a list of simulation objectives to cover. In addition, I worked on building the schematic for our full-scale circuit.

This week I have worked on analyzing the decoding accuracy with regard to directional detection (along a single direction only). This did not noticeably improve the accuracy, despite training the model with a data set three times as large (the same data set fed back three times over). Currently, I am working on converting another data set into a usable format for our MATLAB code base so that we could possibly use it for decoding if it happens to yield a higher decoding accuracy.
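One possible reason that repeating the data set did not help: if the output weights are fit by plain least squares (an assumption about the training step, not something confirmed here), then duplicating every sample only scales the squared-error sum by a constant and leaves the minimizer unchanged. The Python sketch below shows this on a simple line fit used as a stand-in for the decoder, with made-up data.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Made-up data; the point is the comparison, not the values.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [0.1, 0.9, 2.2, 2.8, 4.1]

once = fit_line(xs, ys)
thrice = fit_line(xs * 3, ys * 3)   # same samples fed back three times
print(once, thrice)
```

If the fit includes a regularization term, tripling the data is equivalent to dividing that term by three, so a small change is possible, but no new information is added either way.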

In order to prepare for the design presentation, this week I mainly worked on characterizing the performance of the analog components I built by running Monte Carlo analysis to get histograms of the output distributions. I also tried to reduce power consumption. I have come up with the solution of keeping "NEU" on for a significantly shorter period of time. However, this still does not solve the problem of simulation time. Therefore I proposed pipelining the simulation, which we will try in the coming week. I also worked with Mazen to figure out how to simplify the simulation procedure.
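The power saving from shortening the on-time can be estimated with simple duty-cycle arithmetic. The Python sketch below assumes that "NEU" gates the supply current of the analog block; the supply voltage, currents, and duty cycle are placeholder numbers, not measured values.

```python
def average_power(vdd, i_on, duty_cycle, i_off=0.0):
    """Average power of a block that draws i_on while enabled and i_off otherwise."""
    if not 0.0 <= duty_cycle <= 1.0:
        raise ValueError("duty_cycle must be between 0 and 1")
    return vdd * (duty_cycle * i_on + (1.0 - duty_cycle) * i_off)


# Placeholder numbers: 1 V supply, 1 uA while enabled, no leakage when off.
always_on = average_power(vdd=1.0, i_on=1e-6, duty_cycle=1.0)
gated = average_power(vdd=1.0, i_on=1e-6, duty_cycle=0.05)
print(f"always on: {always_on * 1e9:.0f} nW, gated: {gated * 1e9:.0f} nW")
```

Under these assumptions the average power scales linearly with the duty cycle, until the off-state current `i_off` starts to dominate.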

This week, after building the full system schematic, I worked on running simulations to measure power consumption. However, we quickly found out that while the smaller-scale system simulates within a reasonable time, the larger schematic can't even simulate because Cadence runs out of memory before it finishes generating the netlist. So Rebekah and I worked on getting around this problem, in addition to meeting with Professor Mukherjee to brainstorm ideas. We've come up with a few possible solutions and hope to see promising results after implementing them.

This week I've worked on the other data set we got from Prof. Byron Yu. This data set has 8-class classification instead of 4, and I managed to improve its decoding accuracy to above 85% (using ~60 hidden layer nodes). I implemented the necessary post-processing in MATLAB to achieve this performance and ran other hyperparameter analyses to determine how this accuracy changes with respect to other parameters within our design.