This week we all worked together to get the entire project running end to end, from Explor-R to Unity and back. For the most part, the pieces were already integrated, and we had worked out the endpoints of each part ahead of the final integration.

Alec: This week was all about bringing the parts of the project together. On my end, I wrote the TCP data transfer layer between the Python radio script and the C# Unity layer, which handles communication in both directions. On top of that, the point generator in Unity needed to be modified to handle the real streamed data. The touch controller movement also needed the communication layer added so it could talk to the robot. Next week will be focused on making the user interface much nicer, now that we know what the streamed data will look like.
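
As an illustration of what the Python side of such a layer can look like, here is a minimal sketch of length-prefixed message framing over TCP. It is not the project's actual protocol: the 4-byte big-endian length header and the names `send_message` and `recv_message` are assumptions made for this example.

```python
import socket
import struct

# Assumed framing: every message is a 4-byte big-endian length, then the payload.
HEADER = struct.Struct(">I")


def send_message(sock: socket.socket, payload: bytes) -> None:
    """Send one framed message: length header followed by the payload."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def _recv_exact(sock: socket.socket, count: int) -> bytes:
    """Read exactly `count` bytes, because recv() may return fewer."""
    chunks = []
    remaining = count
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_message(sock: socket.socket) -> bytes:
    """Receive one framed message and return its payload."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return _recv_exact(sock, length)
```

A length prefix lets the receiver split the TCP byte stream back into whole messages, whichever side is sending.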

Nathalie: This week I worked on making sure the robot was ready to be integrated with everything else. This meant making sure the robot actually moves to the correct position when given a command. Previously, the robot would sometimes not stop moving even after it had passed its goal position. The stopping threshold was too low, so if the robot overshot the goal, it kept moving. I fixed this by automatically stopping the robot whenever it goes beyond the destination point. Next week I will work on improving the odometry by making sure the robot can move smoothly on any surface. Additionally, I will run some tests to check how accurate the robot's odometry is.
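
One way to express a "stop once past the goal" check is to measure progress along the straight line from the start point to the goal. The sketch below is an illustration under that assumption, not the code running on the robot; `should_stop` and its `tolerance` parameter are hypothetical names.

```python
import math


def should_stop(start, goal, position, tolerance=0.02):
    """Return True once progress along the path reaches the goal or passes it.

    Points are (x, y) tuples in meters. Progress is the scalar projection of
    the robot's displacement onto the start -> goal direction, so an
    overshoot still triggers a stop instead of being missed.
    """
    path_x, path_y = goal[0] - start[0], goal[1] - start[1]
    path_length = math.hypot(path_x, path_y)
    if path_length == 0.0:
        return True  # start and goal coincide, nothing to drive
    travelled = ((position[0] - start[0]) * path_x +
                 (position[1] - start[1]) * path_y) / path_length
    return travelled >= path_length - tolerance
```

A check that only tests "is the robot within a small radius of the goal" can be skipped over entirely if the robot moves past that radius between two updates. The projection test does not have that gap.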

Robin: One of the main things I worked on was getting a bare-bones read/write script ready for Alec to use. I also worked with Nathalie to make sure messages were passed correctly between the motor controller node and the radio node, so we know exactly when to send LIDAR and odometry data from the radio. I also formatted the data so that Alec would not need to do any processing on it before using it in Unity. Doing it on my end streamlined the process because, when receiving the LIDAR data, we have to go through each data point anyway. Next week I will work on figuring out a way to reduce the downsampling of the data with better compression techniques.

This week I worked on integrating the radio communication into the robot. First, I had to get the FTDI driver on the Jetson enabled (similar to what I did for the cp210x driver for the LIDAR before) so that the radio would be properly mounted. I made the radio into its own ROS node and added publishers/subscribers to new topics within the motor controller+rplidar. I wrote some code to take data received from the radio plugged into my computer and pass it on to the motor controllers so that the robot would move. I also made it so that when the robot is moving, it sends the data over radio to the transceiver connected to my laptop.

Next week, I need to write more code for the transceiver connected to the computer so it can do everything in one function, instead of manually setting the radio to "send" and "receive" as I have been doing. We will also all get together to integrate the radio+robot with the game in Unity.

This week I worked on improving Explor-R's odometry. The robot was still not traveling the right distance even though the encoder values corresponded to the right distances. It turned out that some variables in the ROS nodes weren't affected by the settings in the launch file. Once this was changed, the odometry was much better, with a typical error of 2.5 cm per meter. This week I also worked on getting more traction on the wheels. Last week we added additional treads to the wheels, which improved the traction but did not allow the robot to move on all surfaces. Instead of treads, I added rubber bands to the wheels, which gave them better traction.

Next week I will do more tests on the odometry to make sure the results are consistent. Additionally, I will make it so that if the robot does overshoot its position, it will immediately stop rather than continue to run, which happens occasionally.

This week I added features to make the gameplay experience more intuitive. I added a board to the user's wrist that displays the overhead view of the environment, so that they can see the top-down and first-person views at the same time.

I also changed the movement gesture controls to a parabolic pointing system. This is the approach used in many games, and it is much more intuitive than simple pointing.

Next week I will work on changing the movement system to send messages to the robot and on integrating the radio with Unity.

This week I worked on fixing the odometry error bugs. The main issue I was encountering was that the robot would think it went to the correct location, but it went way past the location. It did go in the right direction, but it wasn't calculating the correct x, y, and theta positions based on the encoder readings. The issue was that the position was calculated too frequently for it to correctly accumulate the values. I fixed this by only calculating the odometry every other second. Additionally, in order to publish robot location for the Unity environment, I made sure to only publish odometry data while the robot is moving, as well as publishing a message saying the robot is moving, so that the radio node knows not to send LIDAR data. Lastly, I added more traction to the robot wheels by adding additional treads.
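
For context, this is roughly how x, y, and theta are commonly accumulated from encoder readings. The sketch assumes a differential-drive robot and uses hypothetical parameters (`ticks_per_meter`, `wheel_base`); it is the textbook update, not necessarily the exact code in our ROS node.

```python
import math


def update_pose(x, y, theta, left_ticks, right_ticks, ticks_per_meter, wheel_base):
    """Advance the pose (x, y, theta) by one pair of encoder tick deltas.

    left_ticks / right_ticks are the ticks counted since the last update,
    ticks_per_meter converts ticks to distance, and wheel_base is the
    distance between the two drive wheels in meters.
    """
    d_left = left_ticks / ticks_per_meter
    d_right = right_ticks / ticks_per_meter
    d_center = (d_left + d_right) / 2.0        # distance moved by the robot center
    d_theta = (d_right - d_left) / wheel_base  # change in heading, in radians
    # Use the heading at the midpoint of the step for the position update.
    x += d_center * math.cos(theta + d_theta / 2.0)
    y += d_center * math.sin(theta + d_theta / 2.0)
    # Wrap the new heading into [-pi, pi).
    theta = (theta + d_theta + math.pi) % (2.0 * math.pi) - math.pi
    return x, y, theta
```

Because each update works on the ticks counted since the previous one, the update interval determines how many ticks each step has to work with.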

This week, we also all created the maze that the robot will go through during our final demo. We made the maze out of purple insulation foam, and plan on either taping or gluing it together on demo day to ensure that it doesn't fall apart.

Next week I will work on trying to get the robot fully integrated with all the components so we can start receiving commands from the Unity environment.

This week I worked on getting the XBees integrated into ROS for easy integration with the rest of the project. First, I read from a file that contained old LIDAR data and got that sent from one radio to the other. Once I got that working, I created a new ROS node that subscribed directly to the topic the LIDAR was publishing to, and I got the data transmitted. I made the node/subscriber modular enough that the radio can easily switch from transmit to receive depending on the state of the robot, by using wait_for_message instead of subscribing and spinning. Unfortunately, spin_once does not exist in rospy (it does in roscpp), so that was my workaround.

To reduce difficulties with two-way communication, we decided to have two separate "modes". When the robot is moving, the robot XBee will transmit LIDAR and odometry data to the computer XBee. Then when the robot is still, the computer XBee will transmit data to the robot XBee. This will make it so the robot has to be still before receiving a new move request.
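
The two modes can be thought of as a small state rule. The sketch below is only an illustration of that rule; the names `RadioMode`, `mode_for`, and `accept_move_request` are made up for this example and are not taken from our radio node.

```python
from enum import Enum


class RadioMode(Enum):
    ROBOT_TRANSMITS = "robot_transmits"        # robot moving: send LIDAR + odometry
    COMPUTER_TRANSMITS = "computer_transmits"  # robot still: accept move requests


def mode_for(robot_is_moving: bool) -> RadioMode:
    """Pick the radio mode from the robot's motion state."""
    if robot_is_moving:
        return RadioMode.ROBOT_TRANSMITS
    return RadioMode.COMPUTER_TRANSMITS


def accept_move_request(robot_is_moving: bool, request):
    """Return the request if it may be forwarded to the robot, else None."""
    if mode_for(robot_is_moving) is RadioMode.COMPUTER_TRANSMITS:
        return request
    return None  # robot is still moving, so the request is not forwarded
```

Only one side transmits in each mode, so the two radios never need to send at the same time.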

Next week I will integrate the radio fully with the rest of the project. At that point, the robot should be able to move with requests from the computer and send real time data to Unity.

This week I worked on getting the Oculus touch controller pointing gesture working. This allows the user to gesture to a point in the world and move to it. This will be used to send a command to the robot to have the robot move to that position. To implement this, I had to validate the gesture location to not be within certain distances of walls, and not be out of line of sight of the user. This will ensure that the robot will always be able to easily move to that location in the real world.
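
The two validation rules can be sketched in 2D as follows. This is a simplified Python illustration, not the Unity code: it treats walls as a list of (x, y) points, and the thresholds `min_clearance` and `path_radius` are assumed values.

```python
import math


def _distance_to_segment(point, seg_start, seg_end):
    """Shortest distance from `point` to the segment seg_start -> seg_end."""
    px, py = point
    ax, ay = seg_start
    bx, by = seg_end
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        return math.hypot(px - ax, py - ay)
    # Clamp the projection so the closest point stays on the segment.
    t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))


def is_valid_target(user, target, wall_points, min_clearance=0.3, path_radius=0.1):
    """Accept a target only if it is clear of walls and visible from the user."""
    for wall in wall_points:
        if math.hypot(wall[0] - target[0], wall[1] - target[1]) < min_clearance:
            return False  # target is too close to a wall point
        if _distance_to_segment(wall, user, target) < path_radius:
            return False  # a wall point sits on the line of sight
    return True
```

For example, with a single wall point at (1, 1) and the user at the origin, a target at (2, 0) is accepted, while a target at (2, 2) is rejected because the wall point lies on the line of sight.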

Next week I hope to test the point generation on a dataset that includes robot encoder positions, and also start testing sending commands to the robot. The Unity side is ready to accept all incoming data, but the serial handlers still need to be written to parse the incoming data.

This week I worked on getting the robot to move to a specific point. The robot starts at the point (0,0), and you can give it some point (x,y) to move to. The current implementation turns the robot so that the point is directly in front of it, and then moves forward to the point. This works for our final project because the user will not be allowed to pick points where there are obstacles in the way, and it will be more intuitive for the user inside the Oculus if the robot moves this way. In order to do this, I implemented odometry on the robot, so that based on the encoder ticks of the motors, the robot always knows where it is. Currently the robot thinks it has moved to the right location even though it has not. I suspect the problem is in how it reads the encoder ticks.
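
The turn-then-drive plan comes down to one heading calculation and one distance calculation. Here is a minimal sketch, assuming the pose is (x, y, theta) with theta in radians measured counterclockwise from the x axis; `plan_move` is a hypothetical helper name.

```python
import math


def plan_move(x, y, theta, goal_x, goal_y):
    """Return (turn, distance): radians to rotate in place, then meters to drive."""
    dx, dy = goal_x - x, goal_y - y
    distance = math.hypot(dx, dy)
    if distance == 0.0:
        return 0.0, 0.0  # already at the goal
    heading = math.atan2(dy, dx)  # direction of the goal in the world frame
    # Wrap the turn into [-pi, pi) so the robot takes the shorter rotation.
    turn = (heading - theta + math.pi) % (2.0 * math.pi) - math.pi
    return turn, distance
```

Starting at (0,0) facing along the x axis, a goal of (3,4) gives a turn of about 0.927 radians followed by a 5 meter drive.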

Next week, I will work on fixing the odometry bug, so the robot actually moves to the correct location. I will also work on getting the motors to run without being tethered. This will allow us to get data to Alec, and allow us to test the odometry more accurately.

This week I was mostly focused on getting the robot ready for the demo with Nathalie. We worked on getting both the motor controller and the LIDAR to run on startup. On my end, I wrote a startup script that would start both ROS nodes. Then I ran a subscriber to the LIDAR and wrote all that data to a file so we could access it later.

Once I got that working, I played around with the XBee. I configured the settings and played around with getting it to send messages to each other using Python so I wouldn't have to use an Arduino. I was able to read old LIDAR data from a file and broadcast the data from one radio to the other. Then I read the data through serial and printed it to the console.

Next week I will work on getting real-time LIDAR and odometry data broadcast to the other radio. I will also come up with some sort of protocol that allows me to send "to-move" data from the computer back to the robot.

This week I focused on adding the Oculus touch controllers to the Unity environment as well as improving the user experience. I added the ability to rotate the player view in the environment and to base the robot's movement direction on the way the head is facing. This made for a much better demo experience.

Next week I will be testing the point spawning with a dataset that includes robot position, as well as adding the point-and-click method of selecting a point to travel to.

This week I worked on getting the motors to run directly off of the Jetson. This required installing ROS and using a ROS node to publish odometer values and subscribe to velocity commands. Currently, the robot can be controlled by publishing velocity commands that are split into their linear and rotational components.

Next week, in preparation for the demos, I plan on working on getting the robot to move with simpler commands such as move forward 10 inches and turn 90 degrees. This resembles the kinds of instructions that will be interpreted from the Oculus generated commands.

Early this week, Nathalie and I got together and tried to get the old radio working. Unfortunately, the radio was causing us so much trouble that we decided to switch to an easier-to-use transceiver (an XBee) in order to get things working. While waiting for the new transceiver to arrive, we worked on assembling the robot and testing parts together.

I had to get the LIDAR to run off the Jetson. I already had a ROS node from earlier, so I was able to just add the node to the existing ROS project. Then I hit a roadblock: although the CP210x driver (the driver that reads the USB device and assigns it to /dev/ttyUSB0) was in the Jetson kernel, it wasn't built. I ended up having to reflash the Jetson with a newer version of L4T in order for the tutorials I was following to work (figuring this out took a painful amount of time). Fortunately, I am now able to run the LIDAR off of the Jetson!

Next week, once I get the XBee, I want to get basic communication going from one radio to another. In preparation for the demo, I want to be able to send the LIDAR data from the robot to a radio transceiver plugged into my computer. From there, I would be able to print out the data in a terminal.

In preparation for the demo next Wednesday, this week I added the Oculus functionality to the Unity environment. The virtual world can now be visualized through the Oculus. More controls were added to make controlling the virtual robot easier.

We tested the visualization with a new dataset where the lidar sensor was traveling through a hallway around a corner, and we used that data to test a stream of points being fed to the Unity environment.

Next week I will work on integrating the Oculus touch controllers and using them to select a point in the virtual environment. Along with that, I will test the Unity setup with a dataset that includes robot odometry.

This week I worked on the processing of data points in the Unity environment. I added functionality so that if points collide, they will not both be displayed. This minimizes the number of game objects displayed in the environment. I also added functionality so that if a point is seen through another point, the see-through point is destroyed, since it can be assumed that nothing is there.
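
To illustrate the idea of dropping colliding points, here is a small Python sketch that keeps one point per grid cell. This is a stand-in technique for illustration: the Unity code relies on collisions between game objects, and the `cell_size` value and function name here are assumptions.

```python
import math


def deduplicate_points(points, cell_size=0.1):
    """Keep only the first point that falls into each grid cell.

    `points` is an iterable of (x, y, z) tuples in meters. Two points that
    land in the same cell of side `cell_size` are treated as colliding,
    and only the first one seen is kept.
    """
    occupied = set()
    kept = []
    for point in points:
        cell = tuple(math.floor(coord / cell_size) for coord in point)
        if cell not in occupied:
            occupied.add(cell)
            kept.append(point)
    return kept
```

The set lookup keeps the cost per incoming point roughly constant, which matters once the input becomes a continuous stream rather than a static dataset.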

Next week I will work on optimizing the point processing code and setting it up so that it can handle a constant heavy stream instead of a static dataset.

This week I worked on getting the motors to run using the RoboClaw motor controller. The motor controller is powered off of GPIO pins on the Jetson, which provide enough power for the motors to go to full speed. I can also read the motor encoder values, which will be useful for odometry. Currently, the motors can be controlled through my Windows computer.

Next week, I will work on controlling the motors through ROS directly on the Jetson.

This week I worked on getting the radio transceiver to send and receive data. The first thing I had to figure out was what would go on each endpoint of the radio transceiver. I ended up deciding to use a Raspberry Pi to stand in for the Jetson (since both run Linux) and an Arduino as the intermediary between the radio transceiver and the Windows machine. There were some library functions included with the radio documentation. However, there was no easy way to use them because they rely on a HANDLE datatype, which is not straightforward to implement in Linux.

I also installed Arduino and got ROS to work with it as well. Using the code given in rplidar_ros and without the radio, I was able to have the Arduino subscribe to the LIDAR publisher. The LIDAR publishes a lot of data, so the Arduino buffer overflows. This is a problem that we will need to deal with later.

Next week I will try to run the example code on a Windows machine. If both radios are able to communicate while running off a Windows machine, I can take one of the transceivers, plug it into a Raspberry Pi, and use the UART pins directly to write the stream of data.

This week I worked on building the foundations of the Unity environment. Using a sample dataset from the LIDAR, I got the 3D point spawner working and started on movement in the game environment. On top of that, I started integrating the Oculus so that all testing can be done through the headset. I also added a feature to switch between first-person and overhead views of the 3D environment.

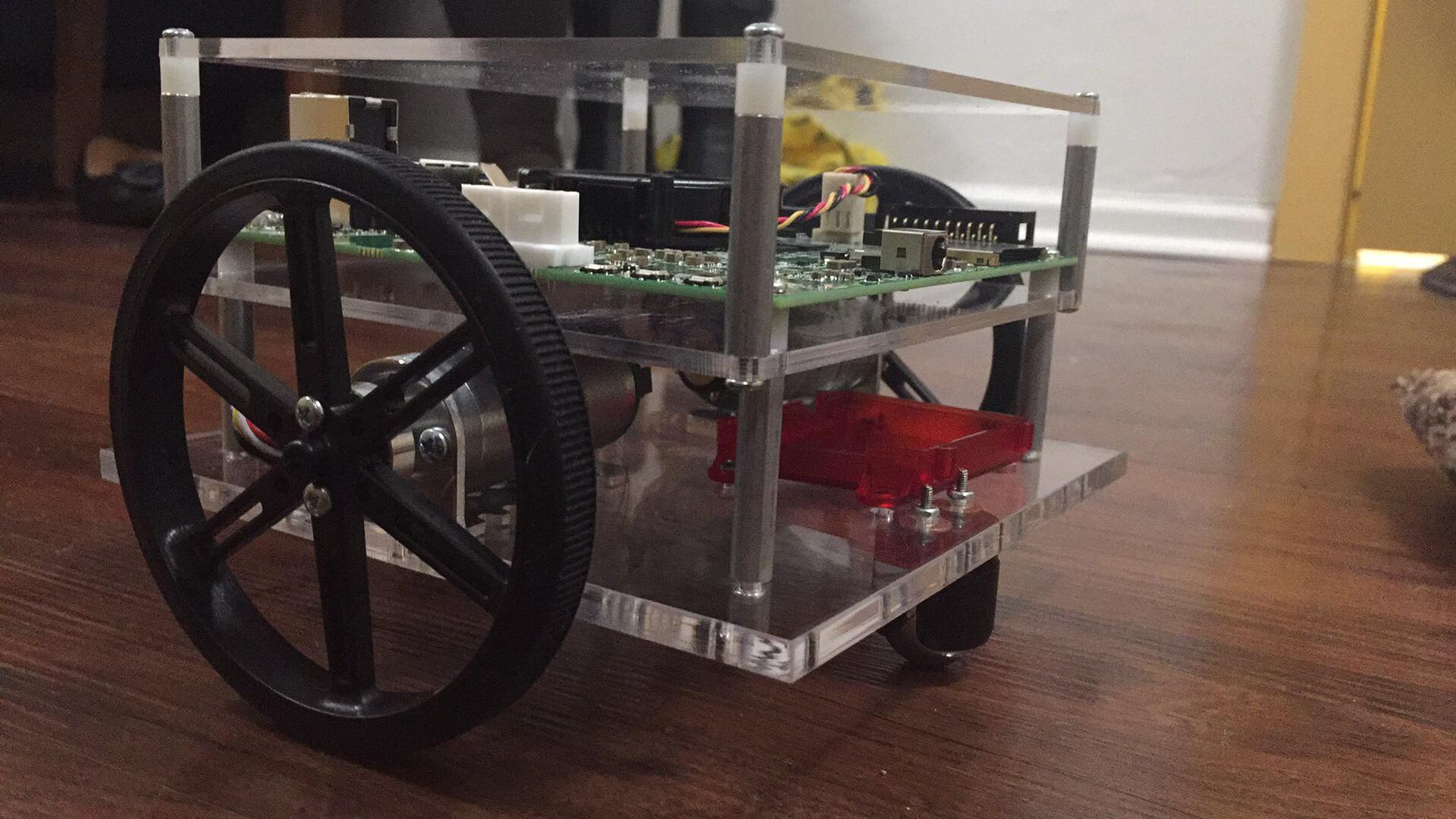

This week I mainly worked on building the robot. This required laser cutting the custom chassis designed in SOLIDWORKS and putting together all the pieces. In addition to putting together the robot, I set up the NVIDIA Jetson TK1 in preparation for future weeks. This involved upgrading the Linux version to 16.04, installing ROS Kinetic, and installing additional packages, like Catkin.

The main things I have been working on were getting ROS set up on my computer and familiarizing myself with ROS as well as the RPLidar ROS package. I then created my own package, added rplidar-ros and got my own subscriber configured with the rplidar-ros publisher. Lastly, I looked into the "library C code" for the radio transceiver we bought and went down a rabbit hole of dependencies. As of now I am still in this rabbit hole. Next I'm going to try to get messages sent over radio.