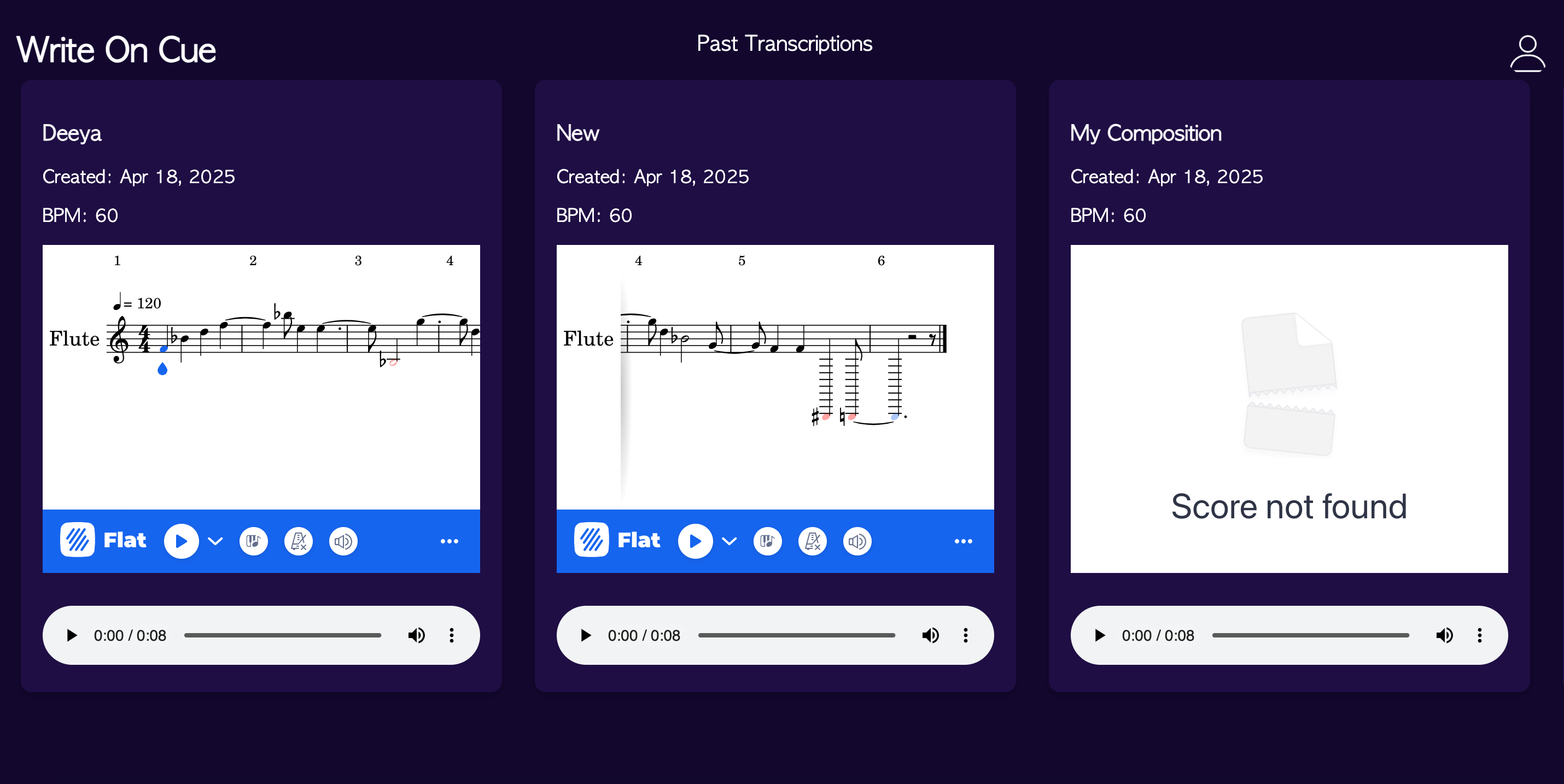

This week I focused on finishing the web app and adding all the functionality it needs. We weren’t able to get reimbursed for the Edit functionality of the Flat.io Embed API, so instead, if the user wants to edit the generated score, the web app takes them directly to the Flat.io website, to where the score is stored in their account. This way the user can edit the score, and their changes are reflected on the Past Transcriptions page of our web app. I also added the edit feature to each past score on the Past Transcriptions page, so any score the user has generated can be edited at any time and the changes are shown in the web app.

Shivi’s Status Report for 4/26/25

This week, I spent most of my time making our final presentation and poster, as well as wrapping up integration of all our components. Our entire pipeline seems to be working smoothly from end to end, and we are currently testing it on more complex pieces. I will also be writing some compositions in MuseScore with various note types and tempos, playing them back, and uploading a recording of the playback to our web app to test robustness. We are also meeting one or two more times with the School of Music flutists this week to stress test our system and get more qualitative feedback on its usability. Overall, my progress is on track, and I am excited to continue testing and prepare for our final demo.

Grace’s Status Report for 4/26/25

This week I worked on fine-tuning how rests are identified in the segments and began working on what we need for the final demo and presentation. I worked on my portion of the final presentation, did some additional testing of the segmentation on more complex compositions, such as songs from the School of Music students, and began filling out the final poster.

I also experimented with other ways of identifying rests, such as using librosa’s functions for identifying segments and looking into the onset detection algorithms other teams in my section used, but ultimately realized I would not have enough time to fully flesh these out, so I continued fine-tuning my original algorithm for better results.

While creating the audio segmentation for tied notes, we realized that false positives are preferable to false negatives, since users can go in and delete an extra note more easily than they can add a missing one. After further fine-tuning, our algorithm produces much better results, especially for compositions like Hot Cross Buns.

Deeya’s Status Report for 4/19/25

This week I focused on finishing up all the functionality and integration of the website. With Shivi, I worked on adding the key and time signature user inputs and integrating them into the backend for generating the MIDI file.

I also worked on the Past Transcriptions page, where the user can view each transcription they have made with the web app. Each entry shows the composition name (which the user chooses when they upload or record audio), the bpm of the piece, the generated sheet music, the date it was created, and audio controls to play back the recording.

The only thing left is integrating the edit functionality, which is a paid API call. We are waiting to hear back on whether we can get reimbursed. In the meantime, we will explore another option, which is to redirect the user to the Flat.io page to edit the composition directly there instead of in the web app.

Tools/knowledge I have learned throughout the project:

- Developing a real-time collaborative web application leveraging WebSockets and API calls – YouTube videos and reading documentation

- How to get feedback from the flute students and then be able to incorporate it to make the UI more intuitive

- How to incorporate the algorithms Shivi and Grace have worked on with the frontend and being able to update it seamlessly whenever they make any updates – developing strong communication within the team

Shivi’s Status Report for 4/19/25

After the live testing session with flutists on Sunday, we found that when performing two-octave scales, certain higher-octave notes were still being incorrectly detected as belonging to the lower octave; after some adjustments to the HSS pitch detection, they now seem to be detected correctly. I also modified the MIDI encoding logic to account for rests. On the web app side, I worked with Deeya to incorporate time and key signature user inputs, and our web app now supports past transcriptions as well. We also explored ways we could make the sheet music editable directly within the web app. Since the Flat.io API only supports read-only display with the basic subscription and we still have not heard back regarding access to the Premium version, we are planning to redirect users to the full editor in a separate window for now. Finally, I worked on the final presentation that is scheduled for next week.
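As a rough illustration of how rests can be accounted for when encoding MIDI, here is a minimal sketch, not our actual encoder. MIDI has no explicit rest message, so a rest is usually represented as extra delta time before the next note-on. The function name `notes_to_events`, the 480 ticks-per-beat resolution, and the `(pitch, beats)` input format are all assumptions made for this example.

```python
from typing import List, Optional, Tuple

TICKS_PER_BEAT = 480  # assumed resolution, chosen only for this example


def notes_to_events(
    notes: List[Tuple[Optional[int], float]]
) -> List[Tuple[int, str, int]]:
    """Turn (midi_pitch or None, beats) pairs into (delta_ticks, message, pitch).

    A pitch of None marks a rest. No message is emitted for it; its length
    is carried over as extra delta time on the next note_on event.
    """
    events = []
    pending_rest = 0  # ticks of silence accumulated since the last note ended
    for pitch, beats in notes:
        ticks = round(beats * TICKS_PER_BEAT)
        if pitch is None:
            pending_rest += ticks
            continue
        events.append((pending_rest, "note_on", pitch))
        events.append((ticks, "note_off", pitch))
        pending_rest = 0
    return events


# B4 for one beat, one beat of rest, then A4 (half a beat) and G4 (two beats).
melody = [(71, 1.0), (None, 1.0), (69, 0.5), (67, 2.0)]
events = notes_to_events(melody)
for event in events:
    print(event)
```

The event tuples could then be handed to whichever MIDI library writes the file; the key point is that the rest only shows up as the 480-tick delta on the second `note_on`.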

In terms of the tools/knowledge I’ve picked up throughout the project:

- I learned to implement signal processing techniques/algorithms from scratch. Along the way, I learned a lot about pitch detection and what the flute signal specifically looks like and how we can use its properties to identify notes

- Web app components such as websockets and integration with APIs like Flat.io

- Familiarity with collaborative software workflows with version control, documenting changes clearly, and building clean/maintainable web interfaces with atomic design. We encountered some technical debt in our codebase, so a lot of time was also spent refactoring for clarity and maintainability

- Conducting user testing for our project and collecting data/feedback to iterate upon our design

Team Status Report for 4/19/25

This week, we tested staccato and slurred compositions and scales with SOM flute students to evaluate our transcription accuracy. During the two-octave scale tests, we discovered that some higher-octave notes were still being misregistered in the lower octave, so Shivi worked on fixing this in the pitch detection algorithm. Deeya and Shivi also made progress on the web app by enabling users to view past transcriptions and input key and time signature information. Grace is working on improving our rhythm algorithm to better handle slurred compositions by using the Short-Time Fourier Transform (STFT) to detect pitch changes and identify tied notes within audio segments. However, we’re still working on securing access/reimbursement for the Flat.io Embed API, which is needed to allow users to edit their compositions. This coming week we are preparing for our final presentation, planning two more testing sessions with the flute students, and cleaning up our project.

Deeya’s Status Report for 4/12/25

This week I focused on making the web app more intuitive. Shivi had worked on the metronome aspect of our web app, which uses WebSockets to change the tempo as you change the metronome value. I integrated her code into the web app and passed the metronome value to the backend so that the bpm changes dynamically based on the user’s input. I also tried to get the editing feature of Flat.io working, but it seems that editing doesn’t work in the free version that uses an iframe. We are thinking of looking into the Premium version so that we can use the JavaScript API. The next step is to work on this and add a Past Transcriptions page.

Updated Gantt Chart (interim demo)

Deeya’s Status Report for 03/29/25

This week I was able to show sheet music on our web app based on the user’s recording or audio file input. Once the user clicks on Generate Music, the web app calls the main.py file that integrates both Shivi’s and Grace’s latest rhythm and pitch detection algorithms, generates a MIDI file and stores it, converts it into MusicXML, and makes an API POST request to Flat.io to display the generated sheet music. The integration process is pretty seamless now, so whenever the algorithms change it is easy to integrate the newest code with the web app and have it functioning properly.

In the API part, once I convert the MIDI to MusicXML, I use the API method that creates a new music score in the current user’s account. I send a JSON body containing the title of the composition, the privacy setting (public/private), and the data, which is the MusicXML file.
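A minimal sketch of what that request could look like is below. It only builds the request and does not send it. The endpoint URL and bearer-token header reflect my understanding of Flat.io's REST API and should be checked against their documentation; the helper name `build_score_request` and the placeholder token are made up for this example.

```python
import json

# Assumed endpoint for creating a score; verify against the Flat.io API reference.
FLAT_API_SCORES_URL = "https://api.flat.io/v2/scores"


def build_score_request(title, musicxml, token, privacy="private"):
    """Return (url, headers, body) for a create-score POST request.

    The body carries the three fields described above: title, privacy,
    and data (the MusicXML document as a string).
    """
    body = {"title": title, "privacy": privacy, "data": musicxml}
    headers = {
        "Authorization": f"Bearer {token}",  # personal access token (placeholder)
        "Content-Type": "application/json",
    }
    return FLAT_API_SCORES_URL, headers, json.dumps(body)


url, headers, body = build_score_request(
    title="Hot Cross Buns",
    musicxml="<score-partwise/>",  # stand-in for the real MusicXML output
    token="YOUR_FLAT_TOKEN",
)
print(url)
print(body)
```

The tuple can be passed to any HTTP client, for example `urllib.request` or `requests`, to actually make the POST.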

This then creates a score and an associated score_id, which enables the Flat.io embedding to place the generated sheet music into our web app.
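To show how the score_id feeds into the embed, here is a small sketch that builds an iframe tag for a score. The `https://flat.io/embed/<score_id>?appId=...` URL pattern is how I understand Flat.io's embed to be addressed and should be confirmed in their docs; the helper name `embed_iframe`, the size defaults, and the example IDs are hypothetical.

```python
from html import escape
from urllib.parse import quote, urlencode


def embed_iframe(score_id, app_id, width="100%", height="450"):
    """Build an <iframe> tag that displays one Flat.io score (read-only)."""
    # Assumed URL pattern: the score id in the path, the app id as a query parameter.
    src = f"https://flat.io/embed/{quote(score_id, safe='')}?{urlencode({'appId': app_id})}"
    return (
        f'<iframe src="{escape(src)}" width="{escape(width)}" '
        f'height="{escape(height)}" frameborder="0" allowfullscreen></iframe>'
    )


# Example IDs are placeholders, not real Flat.io identifiers.
tag = embed_iframe("abc123", "my-app")
print(tag)
```

In a Django or Flask template the same string would normally be written directly in the HTML, with the score_id filled in from the stored transcription record.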

Flat.io has a feature that allows the user to make changes to the generated sheet music, including the key and time signatures, notes, and any articulations and notations. This is what I will be working on next, which should then leave a good amount of time for fine-tuning and testing our project with the SOM students.

Deeya’s Status Report for 3/8/25

I mainly focused on my parts for the design review document and editing it with Grace and Shivi. Shivi and I also had the opportunity to speak to flutists in Professor Almarza’s class about our project, and we were able to recruit a few of them to help us with recording samples and providing feedback throughout our project. It was a cool experience to hear about their thoughts as well as understand how this could be helpful for them during practice sessions they have. Specifically for my parts of the project I continued working on the website and learned how to record audio and store it in our database to be used later. I will now be starting to put more of efforts in the Gen AI part. I am thinking of utilizing a Transformer-based generative model trained on MIDI sequences and I will need to learn how to take MIDI files and convert them into a series of token encodings of musical notes, timing, and dynamics, so that it can be processed by the Transformer model. I will also start compiling a dataset of flute MIDI files.