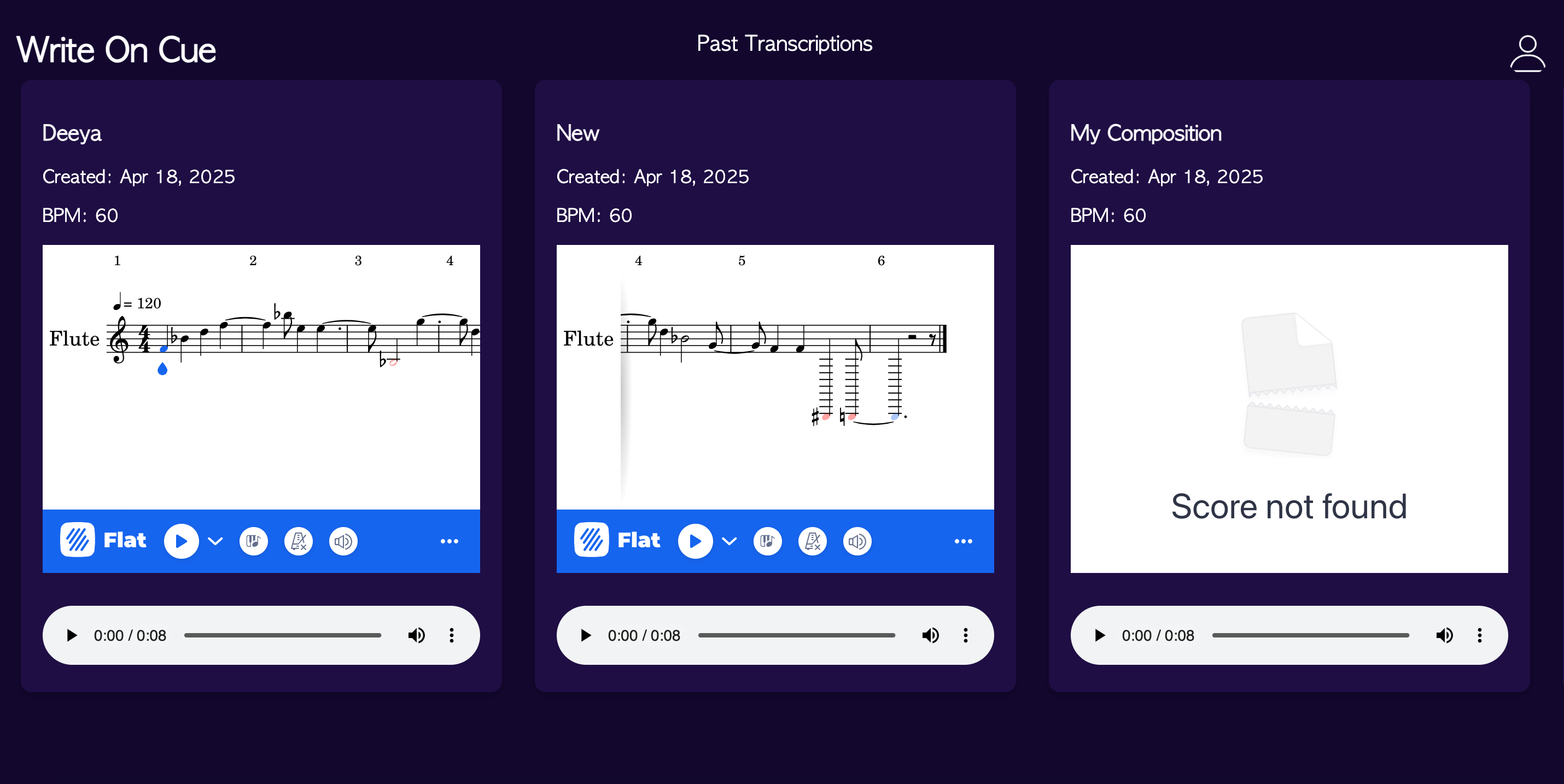

This week I focused on finishing the web app and adding all the functionality it needs. We weren’t able to get reimbursed for the Edit functionality of the Flat.io Embed API, so when the user wants to edit a generated score, the web app instead takes them directly to the Flat.io website, to where the score is stored on their account. This way the user can still edit the score, and their changes are reflected on the Past Transcriptions page of the web app. I also added the edit feature to each score on the Past Transcriptions page, so any score the user has generated can be edited at any time, with the changes shown in the web app.

Shivi’s Status Report for 4/26/25

This week, I spent most of my time making our final presentation and poster, and wrapping up the integration of all our components. Our entire pipeline seems to be working smoothly from end to end, and we are currently testing everything on more complex pieces. I will also be writing some compositions in MuseScore with various note types/tempos, playing them back, and uploading a recording of the playback to our web app to test robustness. We are also meeting 1-2 more times with the School of Music flutists this week to stress test our system and receive some more qualitative feedback on its usability. Overall, my progress is on track, and I am excited to continue testing and prepare for our final demo.

Team Status Report for 4/26/25

This week, we as a collective worked on creating a way to store past transcriptions on our website using SQLite, as well as letting people add, edit, and change anything in the transcription that was originally generated. We believe this follows the ebbs and flows of composition better, and we wanted to mimic that. In addition, we are now focusing on fine-tuning and testing our program further, as well as working on some of the final deliverables, like the presentation and the poster.

Unit Tests:

Rhythm Detection/Audio Segmentation: We tested this on compositions of Twinkle Twinkle Little Star at different BPMs, on songs with tied notes, like Ten Little Monkeys and compositions from the School of Music, and on compositions with rests in them, like Hot Cross Buns and additional songs from the School of Music.

Overall System Tests: We tested this project on songs of varying difficulty: easy songs such as nursery rhymes (Hot Cross Buns, Ten Little Monkeys, Twinkle Twinkle Little Star, etc.) and scales; intermediate-difficulty songs from YouTube as well as ones played by our team members (Telemann – 6 Sonatas for two flutes Op. 2 – no. 2 in E minor TWV 40:102 – I. Largo, Mozart – Sonata No. 8 in F major, K. 13 – Minuetto I and II, etc.); and more difficult pieces, like compositions from the School of Music.

Findings and Design Changes: From these varying songs, we realized that our program struggled more with higher octaves (as the filter would accidentally cut off those frequencies), slurred notes (as it wouldn’t see them as the onset of a new note), and rests (especially when trying to differentiate moments of taking a breath from actual rests). This led us to tweak how we define a new note when creating a segmentation, as well as the boundaries of our filter.

Data Obtained:

| | Latency | Rhythm Accuracy | Pitch Accuracy |
| --- | --- | --- | --- |
| Scale 1: F Major Scale | 10.08 secs | 100% | 100% |
| Scale 2: F Major Scale W/ Ties | 12.57 secs | 97% | 95% |
| Simple 1: Full Twinkle Twinkle Little Star | 13.09 secs | 100% | 100% |
| Simple 2: Ten Little Monkeys | 14.46 secs | 93% | 100% |
| Simple 3: Hot Cross Buns | 11.78 secs | 91% | 100% |

| | Latency | Rhythm Accuracy | Pitch Accuracy |
| --- | --- | --- | --- |
| Intermediate 1: Telemann – 6 Sonatas for two flutes Op. 2 – no. 2 in E minor TWV 40:102 – I. Largo | 15.16 secs | 90% | 100% |
| Intermediate 2: Mozart – Sonata No. 8 in F major, K. 13 – Minuetto I and II | 16.39 secs | 87% | 97% |
| Hard 1: Phoebe SOM Composition | 10.99 secs | 91.5% | 100% |
| Hard 2: Olivia SOM Composition | 12.11 secs | 93% | 100% |

Grace’s Status Report for 4/26/25

This week I worked on fine-tuning how rests are identified in the segments, and began working on things needed for the final demo and presentation. I worked on my portion of the final presentation, did some additional testing of the segmentation with more complex compositions, like songs from the School of Music students, and began filling out the final poster.

I additionally experimented with other ways of identifying rests, like using librosa’s package for identifying segments, and looked into the onset detection algorithms other teams in my section used. Ultimately I realized that I would not have enough time to fully flesh these out, so I continued fine-tuning my original algorithm to get better results.

While creating the audio segmentation for tied notes, we realized that false positives are preferable, since users can go in and delete an extra note more easily than they can add a missing one. After further fine-tuning, our algorithm performs a lot better, especially on compositions like Hot Cross Buns.

Deeya’s Status Report for 4/19/25

This week I focused on finishing up all the functionality and integration of the website. With Shivi I worked on adding the key and time signature user input and integrating it into the backend for generating the MIDI file.

I also worked on the Past Transcriptions page, where the user can view each transcription they have made with the web app. Each entry includes the composition name (which the user chooses when they input/record audio), the BPM of the piece, the generated sheet music, the date it was created, and audio controls to play back the audio.
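As a rough illustration of how the fields listed above could be stored with SQLite, here is a minimal sketch using Python's built-in `sqlite3` module. The table name, column names, and helper functions (`open_db`, `save_transcription`, `past_transcriptions`) are assumptions made for this example and are not taken from our actual backend.

```python
import sqlite3
from datetime import date

# Hypothetical schema: the column names are illustrative only.
SCHEMA = """
CREATE TABLE IF NOT EXISTS transcriptions (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    user_id INTEGER NOT NULL,
    composition_name TEXT NOT NULL,
    bpm INTEGER NOT NULL,
    score_id TEXT,      -- identifier of the generated sheet music
    audio_path TEXT,    -- where the uploaded/recorded audio is stored
    created_on TEXT NOT NULL
)
"""


def open_db(path=":memory:"):
    """Open (or create) the database and make sure the table exists."""
    conn = sqlite3.connect(path)
    conn.row_factory = sqlite3.Row
    conn.execute(SCHEMA)
    return conn


def save_transcription(conn, user_id, name, bpm, score_id, audio_path, created_on=None):
    """Insert one transcription and return its row id."""
    created_on = created_on or date.today().isoformat()
    cur = conn.execute(
        "INSERT INTO transcriptions "
        "(user_id, composition_name, bpm, score_id, audio_path, created_on) "
        "VALUES (?, ?, ?, ?, ?, ?)",
        (user_id, name, bpm, score_id, audio_path, created_on),
    )
    conn.commit()
    return cur.lastrowid


def past_transcriptions(conn, user_id):
    """Return one user's transcriptions, newest first, as plain dicts."""
    rows = conn.execute(
        "SELECT composition_name, bpm, score_id, audio_path, created_on "
        "FROM transcriptions WHERE user_id = ? "
        "ORDER BY created_on DESC, id DESC",
        (user_id,),
    ).fetchall()
    return [dict(row) for row in rows]
```

A page like Past Transcriptions could then call `past_transcriptions(conn, user_id)` and render one entry per returned dict.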

The only thing left is integrating the edit functionality which is a paid API call. We are waiting to hear back if it is possible to get reimbursed and we will explore another option which is to redirect the user to the Flat.io page to edit the composition directly there instead of on the web app.

Tools/knowledge I have learned throughout the project:

- Developing a real-time collaborative web application leveraging WebSockets and API calls – Youtube videos and reading documentation

- How to get feedback from the flute students and then be able to incorporate it to make the UI more intuitive

- How to incorporate the algorithms Shivi and Grace have worked on with the frontend and being able to update it seamlessly whenever they make any updates – developing strong communication within the team

Shivi’s Status Report for 4/19/25

After the live testing session with flutists on Sunday, we found that when performing two-octave scales, certain higher octave notes were still being incorrectly detected as belonging to the lower octave; after making some adjustments to the HSS pitch detection, they seem to be working correctly now. I also modified the MIDI encoding logic to account for rests. On the web app side, I worked with Deeya to incorporate time and key signature user inputs, and our web app now supports past transcriptions as well. We also explored ways we could make the sheet music editable directly within the web app. Since the Flat.io API only supports read-only display with the basic subscription and we still have not heard back regarding access to the Premium version, we are planning to redirect users to the full editor in a separate window for now. Finally, I worked on the final presentation that is scheduled for next week.
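To illustrate what accounting for rests in a MIDI encoding can look like, here is a small sketch rather than our actual encoder. It relies on the standard mapping from frequency to MIDI note number (A4 = 440 Hz = note 69). The event tuple format, the `TICKS_PER_BEAT` value, and the function names are assumptions for this example; a rest produces no event of its own and is instead carried as extra delta time before the next note.

```python
import math

TICKS_PER_BEAT = 480  # assumed resolution, chosen only for this example


def hz_to_midi(freq_hz):
    """Nearest MIDI note number for a frequency (A4 = 440 Hz = note 69)."""
    return round(69 + 12 * math.log2(freq_hz / 440.0))


def encode_events(notes):
    """Turn (freq_hz or None, beats) pairs into (delta_ticks, kind, note) events.

    A frequency of None marks a rest. Rests emit no event; their length is
    added to the delta time of the next note_on.
    """
    events = []
    pending_rest = 0
    for freq, beats in notes:
        ticks = round(beats * TICKS_PER_BEAT)
        if freq is None:
            pending_rest += ticks
            continue
        note = hz_to_midi(freq)
        events.append((pending_rest, "note_on", note))
        events.append((ticks, "note_off", note))
        pending_rest = 0
    return events
```

For example, a one-beat A4, a one-beat rest, and a half-beat A5 would be passed in as `[(440.0, 1), (None, 1), (880.0, 0.5)]`.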

In terms of the tools/knowledge I’ve picked up throughout the project:

- I learned to implement signal processing techniques/algorithms from scratch. Along the way, I learned a lot about pitch detection and what the flute signal specifically looks like and how we can use its properties to identify notes

- Web app components such as websockets and integration with APIs like Flat.io

- Familiarity with collaborative software workflows with version control, documenting changes clearly, and building clean/maintainable web interfaces with atomic design. We encountered some technical debt in our codebase, so a lot of time was also spent on refactoring for clarity and maintainability

- Conducting user testing for our project and collecting data/feedback to iterate upon our design

Team Status Report for 4/19/25

This week, we tested staccato and slurred compositions and scales with SOM flute students to evaluate our transcription accuracy. During the two-octave scale tests, we discovered that some higher octave notes were still being misregistered in the lower octave, so Shivi worked on fixing this in the pitch detection algorithm. Deeya and Shivi also made progress on the web app by enabling users to view past transcriptions and input key and time signature information. Grace is working on improving our rhythm algorithm to better handle slurred compositions by using the Short-Time Fourier Transform (STFT) to detect pitch changes and identify tied notes within audio segments. However, we’re still working on securing access/reimbursement for the Embed API in Flat.io, which is needed to allow users to edit their compositions. This week we are preparing for our final presentation, planning two more testing sessions with the flute students, and cleaning up our project.

Grace’s Status Report for 4/19/25

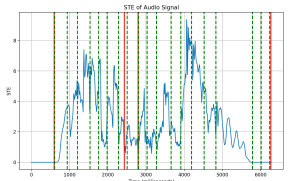

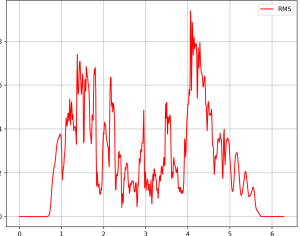

This week I continued to fine-tune the algorithm for slurred notes and rests. We made the switch from RMS to STE last week and saw better results, as it kept the near-zero values closer to zero. However, with slurred notes the values still do not get close enough to zero.

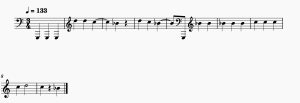

I created an algorithm that looks through the segments from the original algorithm and, if a segment has any times, “flags” it as a possible slurred note. I then check for pitch changes. I first used a spectrogram but found that it would miss some notes or incorrectly identify where the notes actually change, so I switched to using STFT with a ratio that varies based on BPM, which detects smaller note changes at faster tempos with better success. Here is a picture of using the spectrogram

and using the STFT

While there are still some inaccuracies, it is much better than before. I am currently fine-tuning the rest detection, as right now it has a tendency to over-detect rests. I am also looking into using CNNs to classify slurred notes.

While implementing this project, I learned more about signal processing and how some things are much easier to identify manually/visually than to code up. Additionally, I read up on how much research goes into identifying segmentations in music and how different types of instruments can produce more slurs, as they tend to be more legato. For this project, I needed new tools for identifying new notes, like STFT and STE. To learn more about these, I read research papers from other projects and universities on how they approached the problem and tried to combine aspects of them to get a better working algorithm.
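The idea of checking a flagged segment for pitch changes with short-time frames can be sketched as follows. This is a simplified, standard-library-only illustration and not the algorithm described above: it takes the strongest DFT bin of each Hann-windowed frame and reports a change whenever consecutive frames differ by at least `min_semitones`. The function names, the default frame sizes, and the 200 to 2500 Hz search band are all assumptions; in practice an FFT from a numerical library would replace the slow direct DFT used here.

```python
import cmath
import math


def frame_peak_hz(frame, sample_rate, fmin=200.0, fmax=2500.0):
    """Frequency of the strongest DFT bin of a Hann-windowed frame in [fmin, fmax]."""
    n = len(frame)
    windowed = [
        x * (0.5 - 0.5 * math.cos(2 * math.pi * i / (n - 1)))
        for i, x in enumerate(frame)
    ]
    k_lo = max(1, math.ceil(fmin * n / sample_rate))
    k_hi = min(n // 2, math.floor(fmax * n / sample_rate))
    best_k, best_mag = k_lo, -1.0
    for k in range(k_lo, k_hi + 1):
        # Direct DFT of one bin (slow, but keeps the sketch dependency-free).
        acc = sum(
            x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(windowed)
        )
        if abs(acc) > best_mag:
            best_k, best_mag = k, abs(acc)
    return best_k * sample_rate / n


def pitch_change_times(samples, sample_rate, frame_len=256, hop=128, min_semitones=1.0):
    """Start times (seconds) of frames whose peak pitch moved by >= min_semitones."""
    changes = []
    prev_hz = None
    for start in range(0, len(samples) - frame_len + 1, hop):
        peak_hz = frame_peak_hz(samples[start:start + frame_len], sample_rate)
        if prev_hz is not None:
            if abs(12 * math.log2(peak_hz / prev_hz)) >= min_semitones:
                changes.append(start / sample_rate)
        prev_hz = peak_hz
    return changes
```

The reported times are only as precise as the hop size, so one way to approximate a BPM-dependent setting would be to shrink `frame_len` and `hop` for faster tempos, at the cost of coarser frequency resolution.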

Deeya’s Status Report for 4/12/25

This week I focused on making the web app more intuitive. Shivi had worked on the metronome aspect of our web app, which uses WebSockets to change the tempo as you change the metronome value. I integrated her code into the web app and passed the metronome value to the backend so that the BPM changes dynamically based on the user’s input. I also tried to get the editing feature of Flat.io working, but it doesn’t seem to work with the free version using an iframe. We are thinking of looking into the Premium version so that we can use the JavaScript API. The next step is to work on this and add a Past Transcriptions page.

Grace’s Status Report for 4/12/25

Worked on the issues mentioned during interim demo, which were not being able to accurately detect slurs and not picking up the rests in songs.

First, experimented with modifying the code to use Short Time Energy (STE). This made the resulting curve more “clear”, as there were fewer bumps and clearer near-zero values, essentially eliminating some of the noise that stayed with RMS. This should make amplifying the signal a lot easier now. However, still having some difficulty seeing the differences in slurred notes, so doing some additional research into onset detection to detect slurred notes specifically, rather than looking for the separation of notes in segmentation.

(forgot to change label for the line, but this is a graph for STE – this was taken using audio from a student in the school of music)
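To show why STE keeps quiet passages closer to zero than RMS, here is a minimal sketch computing both envelopes over the same frames. It assumes audio normalized to the range -1 to 1 and defines STE as the mean of the squared samples per frame (some definitions use the plain sum instead); the function names and frame sizes are chosen for illustration only.

```python
import math


def frames(samples, frame_len, hop):
    """Yield successive full-length frames of the signal."""
    for start in range(0, len(samples) - frame_len + 1, hop):
        yield samples[start:start + frame_len]


def short_time_energy(samples, frame_len=256, hop=128):
    """Mean squared amplitude of each frame."""
    return [sum(x * x for x in f) / len(f) for f in frames(samples, frame_len, hop)]


def rms_envelope(samples, frame_len=256, hop=128):
    """Root mean square of each frame (the square root of the STE above)."""
    return [math.sqrt(e) for e in short_time_energy(samples, frame_len, hop)]
```

Because RMS is the square root of this STE, a quiet frame at 5% of the loud amplitude sits at 5% of the loud value in the RMS curve but at only 0.25% in the STE curve, which is why low-level noise is flattened more.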


For rests, modified my rhythm detection algorithm to instead look for zero values after reaching the peak (meaning the note is done playing) and count the additional length after the note as a rest. It sometimes treats the slight moments of silence that separate notes as rests, though, so need to do some experiments to make it less sensitive.
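One possible way to make this kind of rest detection less sensitive is to require a minimum duration before a silent stretch counts as a rest. The sketch below illustrates that idea and is not the algorithm used in the project: it scans an energy envelope for runs of near-zero frames and keeps only the runs that last at least `min_rest_sec`. The threshold values and the function name are assumptions.

```python
def find_rests(energy, frame_sec, silence_thresh=1e-3, min_rest_sec=0.15):
    """Return (start_sec, duration_sec) for each long-enough run of quiet frames.

    energy: per-frame energy values (for example an STE envelope)
    frame_sec: time between consecutive frames, in seconds
    """
    rests = []
    run_start = None
    # The infinite sentinel closes a quiet run that reaches the end of the signal.
    for i, value in enumerate(list(energy) + [float("inf")]):
        if value < silence_thresh:
            if run_start is None:
                run_start = i
        elif run_start is not None:
            duration = (i - run_start) * frame_sec
            if duration >= min_rest_sec:
                rests.append((run_start * frame_sec, duration))
            run_start = None
    return rests
```

Tying `min_rest_sec` to the tempo (for instance, a fraction of one beat) would be one option for separating short breaths from notated rests, though the right fraction would need experiments.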