Team Status Report

Risk Management:

Risk: Comparison algorithm slowing down Unity feedback

Mitigation Strategy/Contingency Plan: We plan to reduce the computation required by running the DTW algorithm on a larger buffer of frames. If this does not work, we will fall back to a simpler algorithm chosen from the few we are currently testing.
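
One way to read the buffering idea is that DTW runs once per filled buffer instead of once per incoming frame. The sketch below illustrates that reading only; it is not our production code. The names `dtw_distance`, `BufferedComparer`, and `buffer_size` are hypothetical, and frames are assumed to be tuples of floats that are compared against the matching slice of a reference sequence.

```python
import math


def dtw_distance(a, b):
    """Classic dynamic time warping cost between two sequences of feature vectors."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


class BufferedComparer:
    """Hypothetical helper: collects frames and runs DTW only when the buffer is full."""

    def __init__(self, reference, buffer_size):
        self.reference = reference
        self.buffer_size = buffer_size
        self.frames = []
        self.start = 0       # index of the reference slice for the current buffer
        self.dtw_calls = 0   # how many times DTW actually ran

    def push(self, frame):
        """Add one frame; return a DTW score when the buffer fills, else None."""
        self.frames.append(frame)
        if len(self.frames) < self.buffer_size:
            return None
        ref = self.reference[self.start:self.start + self.buffer_size]
        score = dtw_distance(self.frames, ref)  # inf if the reference is exhausted
        self.start += self.buffer_size
        self.frames = []
        self.dtw_calls += 1
        return score
```

Each call costs time proportional to the square of the buffer length, so the buffer size trades the number of DTW invocations against the cost and delay of each one.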

Design Changes:

There were no design changes this week. We have continued to execute our schedule.

Verification and Validation:

Verification Testing

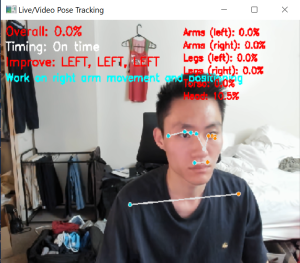

Pose Detection Accuracy Testing

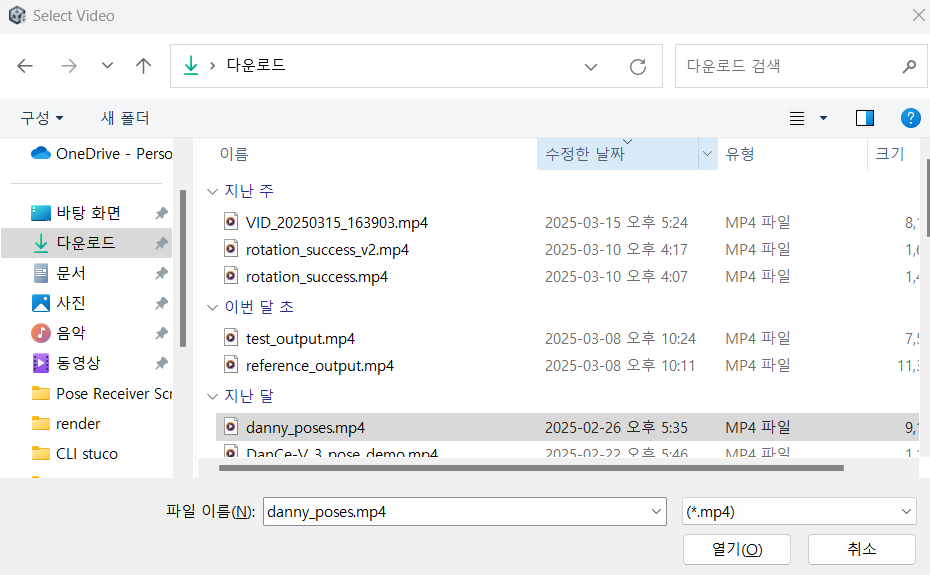

- Completed Tests: We’ve conducted initial verification testing of our MediaPipe implementation by comparing detected landmarks against ground truth positions marked by professional dancers in controlled environments.

- Planned Tests: We’ll perform additional testing across varied lighting conditions and distances (1.5 to 3.5 m) to verify consistent performance across typical home environments.

- Analysis Method: Statistical comparison of detected vs. ground truth landmark positions, with calculation of average deviation in centimeters.
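
The average-deviation calculation can be sketched as follows. This is a minimal illustration, assuming detected and ground-truth landmarks have already been converted to the same coordinate frame in centimeters and are listed in the same order; the function name `mean_deviation_cm` is hypothetical.

```python
import math


def mean_deviation_cm(detected, ground_truth):
    """Mean Euclidean distance between paired landmarks (inputs assumed to be in cm)."""
    if len(detected) != len(ground_truth):
        raise ValueError("landmark lists must have the same length")
    if not detected:
        raise ValueError("at least one landmark pair is required")
    distances = [math.dist(d, g) for d, g in zip(detected, ground_truth)]
    return sum(distances) / len(distances)


# Two landmarks: one matches exactly, one is 5 cm off.
print(mean_deviation_cm([(0.0, 0.0), (3.0, 4.0)], [(0.0, 0.0), (0.0, 0.0)]))
```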

Real-Time Processing Performance

- Completed Tests: We’ve measured frame processing rates on typical hardware (a mid-range laptop).

- Planned Tests: Extended duration testing (20+ minute sessions) to verify performance stability and resource utilization over time.

- Analysis Method: Performance profiling of CPU/RAM usage during extended sessions to confirm that the system remains stable over time.
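
One simple way to check stability over a long session is to compare resource usage at the end of the run with usage at the start. The sketch below assumes the samples (for example, RAM in MB or CPU percent) were already collected at a fixed interval by whatever profiler is in use; `drift_ratio` and `window` are hypothetical names.

```python
from statistics import mean


def drift_ratio(samples, window):
    """Ratio of the mean of the last `window` samples to the mean of the first `window`.

    A value near 1.0 suggests stable usage; a value well above 1.0 suggests
    growth over the session (for example, a memory leak).
    """
    if window <= 0 or len(samples) < 2 * window:
        raise ValueError("need at least two non-overlapping windows of samples")
    baseline = mean(samples[:window])
    if baseline <= 0:
        raise ValueError("baseline usage must be positive")
    return mean(samples[-window:]) / baseline


# RAM samples in MB: flat at 100, then flat at 150.
print(drift_ratio([100.0] * 10 + [150.0] * 10, window=5))
```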

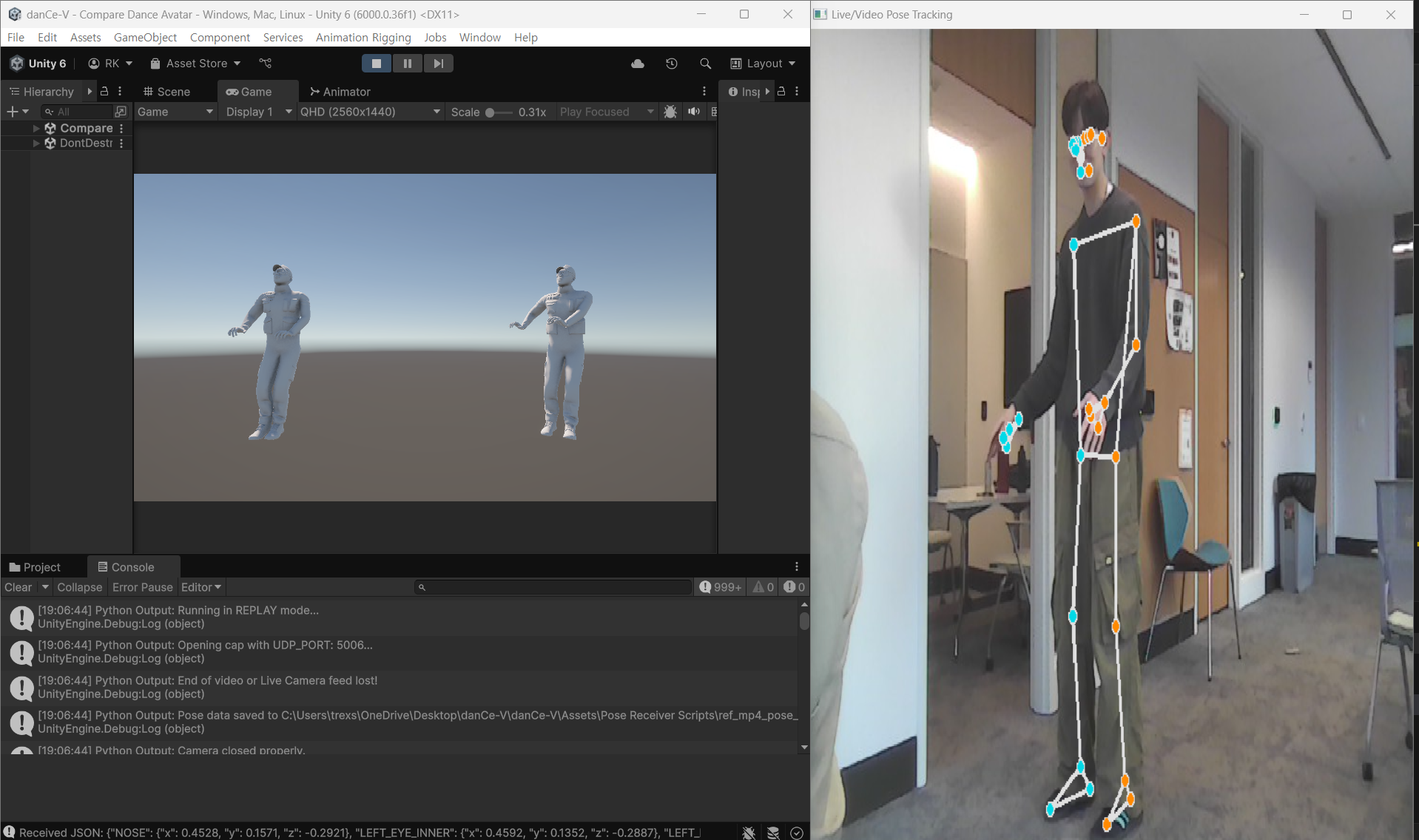

DTW Algorithm Accuracy

- Completed Tests: Initial testing of our DTW implementation with annotated reference sequences.

- Planned Tests: Expanded testing with deliberately introduced temporal variations to verify robustness to timing differences.

- Analysis Method: Comparison of algorithm-identified errors against reference videos, with a focus on false positive and false negative rates.
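
The false positive and false negative rates can be computed per frame as sketched below. This assumes errors are recorded as frame indices, both for frames the algorithm flags and for frames annotated as errors in the reference; the function name `error_rates` and this per-frame framing are assumptions made for illustration.

```python
def error_rates(flagged, annotated, total_frames):
    """Return (false_positive_rate, false_negative_rate) over frame indices.

    flagged:   frames the algorithm marked as errors
    annotated: frames marked as errors in the reference annotation
    """
    flagged, annotated = set(flagged), set(annotated)
    false_positives = len(flagged - annotated)   # flagged but not a real error
    false_negatives = len(annotated - flagged)   # real error that was missed
    negatives = total_frames - len(annotated)    # frames with no annotated error
    fpr = false_positives / negatives if negatives else 0.0
    fnr = false_negatives / len(annotated) if annotated else 0.0
    return fpr, fnr


print(error_rates(flagged=[1, 2, 3], annotated=[2, 3, 4, 5], total_frames=14))
```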

Unity Visualization Latency

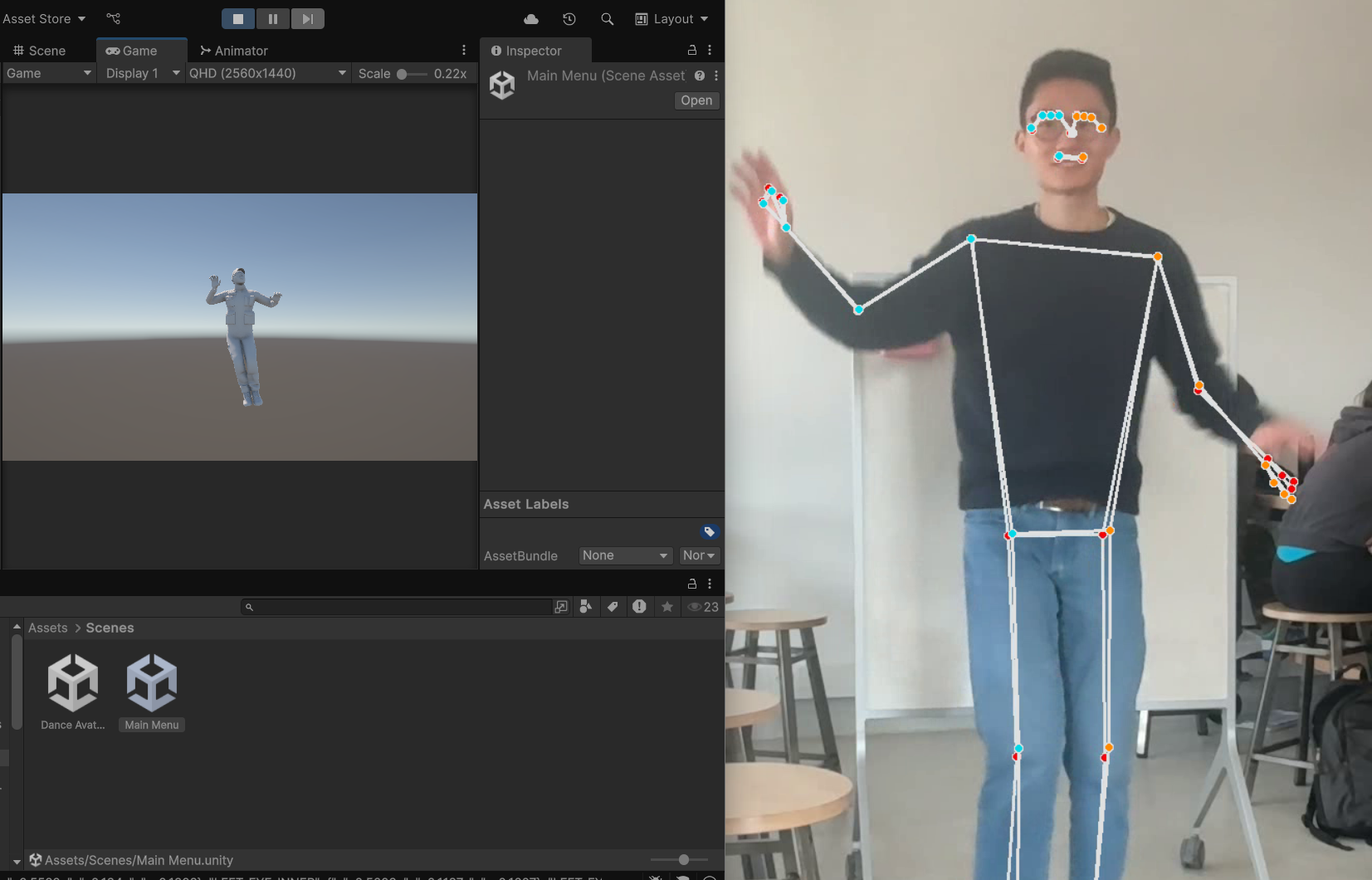

- Completed Tests: End-to-end latency measurements from webcam capture to avatar movement display.

- Planned Tests: Additional testing to verify UDP packet delivery rates.

- Analysis Method: High-speed video capture of user movements compared with screen recordings of avatar responses, analyzed frame-by-frame.
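
The frame-by-frame latency analysis and the planned UDP delivery check both reduce to simple arithmetic, sketched below. The sketch assumes the two recordings share a common start event (any residual offset goes in `sync_offset_ms`) and that each UDP packet carries a sequence number; both are assumptions, and all function names are hypothetical.

```python
def frame_time_ms(frame_index, fps):
    """Timestamp of a frame in milliseconds, given the recording's frame rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_index * 1000.0 / fps


def latency_ms(motion_frame, camera_fps, response_frame, screen_fps, sync_offset_ms=0.0):
    """Delay between a user movement and the avatar's response.

    motion_frame:   frame in the high-speed video where the movement starts
    response_frame: frame in the screen recording where the avatar reacts
    """
    moved = frame_time_ms(motion_frame, camera_fps)
    responded = frame_time_ms(response_frame, screen_fps) + sync_offset_ms
    return responded - moved


def delivery_rate(received_sequence_numbers, sent_count):
    """Fraction of sent packets that arrived at least once (duplicates ignored)."""
    if sent_count <= 0:
        raise ValueError("sent_count must be positive")
    return len(set(received_sequence_numbers)) / sent_count


# Movement at frame 240 of a 240 fps video, response at frame 66 of a 60 fps recording.
print(latency_ms(240, 240, 66, 60))
print(delivery_rate([0, 1, 1, 3], sent_count=4))
```

The measurement resolution is limited by the slower recording: at 60 fps, one frame corresponds to roughly 16.7 ms.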

Validation Testing

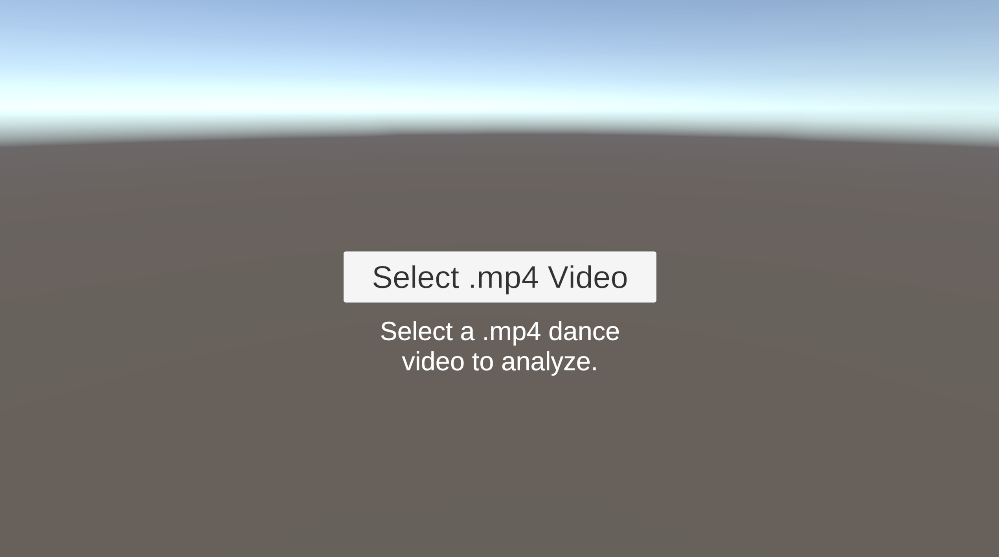

Setup and Usability Testing

- Planned Tests: Expanded testing with 30 additional participants representing our target demographic.

- Analysis Method: Observation and timing of first-time setup process, followed by survey assessment of perceived difficulty.

Feedback Comprehension Validation

- Planned Tests: Structured interviews with users after receiving system feedback, assessing their understanding of recommended improvements.

- Analysis Method: Scoring of users’ ability to correctly identify and implement suggested corrections, with a target comprehension rate of 90%.

Progress on the project is generally on schedule. While optimizing the avatar processing in parallel took slightly longer than anticipated due to synchronization challenges, the integration of the DTW algorithm went smoothly once we established the data pipeline. If necessary, I will allocate additional hours next week to refine the comparison algorithm and improve the UI feedback for the player.

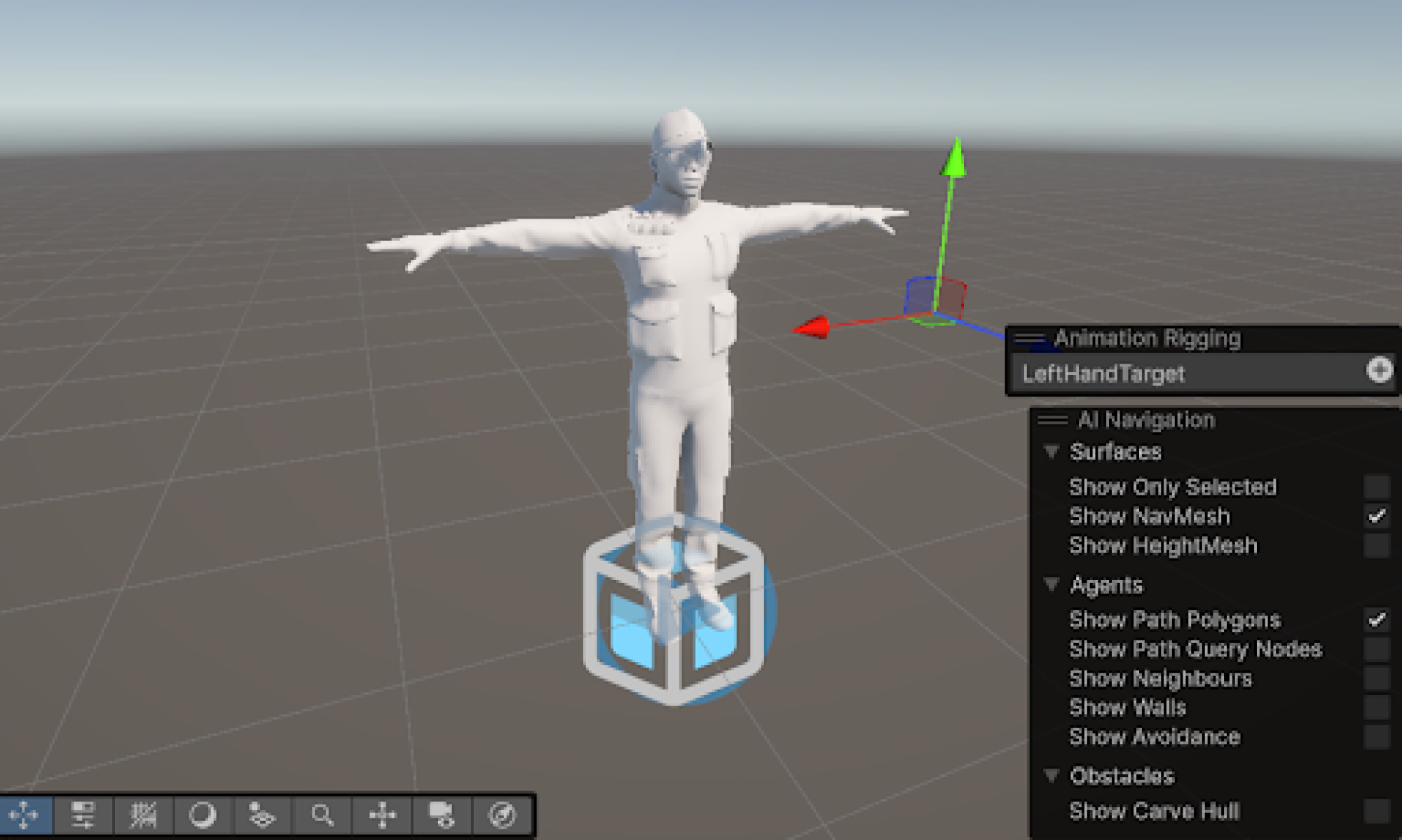

Additionally, I successfully optimized the avatar
