This week I focused on conducting user experiments with Rex and Akul. We wrote up a feedback form and are in the process of asking people to test our application and share their feedback. While we don’t have much time left to implement drastic changes to the system, the feedback still gives us a direction for any future improvements to the dance coach. Other than that, I helped Rex implement a few final features and fix the remaining bugs, including the problem where the reference avatar did not fully line up with the reference video playback.

Team Status Report for 4/26

Risk Management:

All prior risks have been mitigated. We have not identified any new risks as our project approaches its grand finale. We have conducted, and are continuing to conduct, comprehensive testing to ensure that our project specifications meet user requirements.

Design Changes:

There were no design changes this week.

Testing:

For testing, we implemented unit and system tests across the major components.

On the MediaPipe/OpenCV side, we performed the following unit tests:

- Landmark Detection Consistency Tests: Fed known video sequences and verified that the same pose landmarks (e.g., elbow, knee) were consistently detected across frames.

- Pose Smoothing Validation: Verified that the smoothing filter applied to landmarks reduced jitter without introducing significant additional lag.

- Packet Loss Handling Tests: Simulated missing landmark frames and confirmed that the system handled them gracefully without crashing or sending corrupted data.
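To make the smoothing and missing-frame tests above more concrete, here is a minimal sketch of one way such a filter could look. It assumes an exponential moving average and a "hold the last pose" policy for dropped frames; the function name `smooth_landmarks`, the `alpha` parameter, and the use of `None` for a missing frame are illustrative assumptions, not our actual implementation.

```python
from typing import List, Optional, Sequence, Tuple

Landmark = Tuple[float, ...]  # e.g. (x, y, z) in normalized coordinates


def smooth_landmarks(
    frames: Sequence[Optional[Sequence[Landmark]]],
    alpha: float = 0.5,
) -> List[Optional[List[Landmark]]]:
    """Exponentially smooth a sequence of landmark frames.

    A frame of None stands for a dropped frame; the last smoothed pose is
    repeated in its place so downstream code never sees a gap.
    """
    smoothed: List[Optional[List[Landmark]]] = []
    previous: Optional[List[Landmark]] = None
    for frame in frames:
        if frame is None:
            # Missing frame: hold the previous pose (None if nothing seen yet).
            smoothed.append(list(previous) if previous is not None else None)
            continue
        if previous is None:
            # First valid frame: nothing to blend with yet.
            current = [tuple(p) for p in frame]
        else:
            # Blend each coordinate of the new pose with the previous smoothed pose.
            current = [
                tuple(alpha * new + (1.0 - alpha) * old for new, old in zip(p, q))
                for p, q in zip(frame, previous)
            ]
        smoothed.append(current)
        previous = current
    return smoothed
```

A lower `alpha` removes more jitter but adds more lag, which is the trade-off the smoothing validation test checks.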

On the Unity gameplay side, we carried out the following unit and system tests:

- Avatar Pose Mapping Tests: Verified that landmark coordinates were correctly mapped to avatar joints and stayed within normalized bounds, as specified in the design requirements.

- Frame Rate Stability Tests: Ensured the gameplay maintained a smooth frame rate (30 FPS or higher) under normal and stressed system conditions.

- Scoring System Unit Tests: Tested the DTW (Dynamic Time Warping) score calculations to ensure robust behavior across small variations in player movement.

- End-to-End Latency Measurements: Measured the total time from live player movement to avatar motion on screen, confirming that latency stayed under 100 ms, as required in the design requirements.
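For readers unfamiliar with DTW, the following is a textbook sketch of the distance that the scoring tests exercise. It is not our production scoring code: `dtw_distance` and `similarity_score` are hypothetical names, and the mapping from distance to a score is an assumption chosen only for illustration.

```python
import math
from typing import Sequence


def dtw_distance(a: Sequence[Sequence[float]], b: Sequence[Sequence[float]]) -> float:
    """Classic O(n*m) dynamic time warping distance between two pose sequences.

    Each element of a and b is one frame, given as a flat coordinate vector.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j].
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = math.dist(a[i - 1], b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]


def similarity_score(distance: float, scale: float = 1.0) -> float:
    """Map a distance to a score in (0, 1]; an illustrative mapping only."""
    return 1.0 / (1.0 + distance / scale)
```

Because DTW may repeat frames on either side, a player who performs the same movement slightly slower than the reference still gets a distance of zero, which is the robustness property the unit tests look for.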

From the analysis of these tests, we found that implementing lightweight interpolation during landmark transmission greatly improved avatar smoothness without significantly impacting latency. We also shifted the reference avatar update logic from Unity’s Update() phase to LateUpdate(), which eliminated subtle drift issues during longer play sessions.
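The interpolation mentioned above can be sketched as follows, assuming plain linear interpolation between consecutive landmark frames. The helper names `lerp_pose` and `upsample` and the `factor` parameter are hypothetical and only illustrate the idea.

```python
from typing import List, Sequence, Tuple

Pose = Sequence[Tuple[float, ...]]


def lerp_pose(pose_a: Pose, pose_b: Pose, t: float) -> List[Tuple[float, ...]]:
    """Linearly interpolate every landmark between two poses, with t clamped to [0, 1]."""
    t = min(1.0, max(0.0, t))
    return [
        tuple(a + (b - a) * t for a, b in zip(p, q))
        for p, q in zip(pose_a, pose_b)
    ]


def upsample(poses: Sequence[Pose], factor: int) -> List[List[Tuple[float, ...]]]:
    """Insert factor - 1 interpolated poses between each consecutive pair."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    if not poses:
        return []
    out: List[List[Tuple[float, ...]]] = []
    for p, q in zip(poses, poses[1:]):
        for k in range(factor):
            out.append(lerp_pose(p, q, k / factor))
    # Keep the final pose so the sequence ends where the input ended.
    out.append([tuple(pt) for pt in poses[-1]])
    return out
```

Interpolating like this is cheap per frame, which is consistent with the observation that smoothness improved without a significant latency cost.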

Rex’s Status Report for 4/26

This week, I focused on several important optimizations and validation efforts for our Unity-based dance game project that uses MediaPipe and OpenCV. I worked on fixing a synchronization issue between the reference video and the reference avatar playback. Previously, the avatar was moving slightly out of sync with the video due to timing discrepancies between the video frame progression and the avatar motion updates. After adjusting the frame timestamp alignment logic and ensuring consistent pose data transmission timing, synchronization improved significantly. I also fine-tuned the mapping between the MediaPipe/OpenCV landmark detection and the Unity avatar animation, smoothing transitions and reducing jitter for a more natural movement appearance. Finally, I worked with the team on setting up a more comprehensive unit testing framework and began preliminary user testing to gather feedback on responsiveness and overall play experience.
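One common way to keep an avatar in step with a video is to look up the pose by the video's current playback time instead of advancing one pose per rendered frame. The sketch below illustrates that idea under the assumption that each pose frame carries a timestamp in seconds; `pose_index_for_time` is a hypothetical helper, not the exact alignment logic used in our build.

```python
from bisect import bisect_right
from typing import Sequence


def pose_index_for_time(pose_timestamps: Sequence[float], video_time: float) -> int:
    """Return the index of the latest pose whose timestamp is <= video_time.

    pose_timestamps must be sorted ascending. Times before the first pose
    clamp to index 0; times after the last pose clamp to the final index.
    """
    if not pose_timestamps:
        raise ValueError("pose_timestamps must not be empty")
    index = bisect_right(pose_timestamps, video_time) - 1
    return max(0, index)
```

With this approach a dropped or delayed render frame does not accumulate as drift, because every lookup is anchored to the video clock.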

Overall, we are on schedule relative to our project timeline. While the video-avatar synchronization issue initially caused a small delay, the fix was completed early enough in the week to avoid impacting later goals. Going off of what Peiyu and Tamal said, we have created a questionnaire and are testing with users to receive usability feedback. To ensure we stay on track, we plan to conduct additional rounds of user feedback and tuning for both the scoring system and gameplay responsiveness. No major risks have been identified at this stage, and our current progress provides a strong foundation for the next phase, which focuses on refining the scoring algorithms and preparing for a full playtest.

For testing, we implemented unit and system tests across the major components.

On the MediaPipe/OpenCV side, we performed the following unit tests:

- Landmark Detection Consistency Tests: Fed known video sequences and verified that the same pose landmarks (e.g., elbow, knee) were consistently detected across frames.

- Pose Smoothing Validation: Verified that the smoothing filter applied to landmarks reduced jitter without introducing significant additional lag.

- Packet Loss Handling Tests: Simulated missing landmark frames and confirmed that the system handled them gracefully without crashing or sending corrupted data.

On the Unity gameplay side, we carried out the following unit and system tests:

- Avatar Pose Mapping Tests: Verified that landmark coordinates were correctly mapped to avatar joints and stayed within normalized bounds, as specified in the design requirements.

- Frame Rate Stability Tests: Ensured the gameplay maintained a smooth frame rate (30 FPS or higher) under normal and stressed system conditions.

- Scoring System Unit Tests: Tested the DTW (Dynamic Time Warping) score calculations to ensure robust behavior across small variations in player movement.

- End-to-End Latency Measurements: Measured the total time from live player movement to avatar motion on screen, confirming that latency stayed under 100 ms, as required in the design requirements.

From the analysis of these tests, we found that implementing lightweight interpolation during landmark transmission greatly improved avatar smoothness without significantly impacting latency. We also shifted the reference avatar update logic from Unity’s Update() phase to LateUpdate(), which eliminated subtle drift issues during longer play sessions.

Akul’s Status Report for 4/26

A major focus of my work this week was planning out how our final demo will look. I brainstormed different options for the reference video we want users to dance along to, considering factors like clarity of movements, difficulty, music, and length. We evaluated a few potential clips (a TikTok dance, a Fortnite dance, and a dance that we created) and started narrowing down which one would best showcase the capabilities of our system during the final presentation. We plan to use two videos during the demo, which would show the system running on multiple dance reference videos and demonstrate its ability to work with any reference video. We may also allow spectators at the demo to create their own reference video, which we would upload to our system to show that it works with arbitrary reference videos.

We also wanted to see how people from an outside perspective would interact with our system and the different dances, so we aimed to get additional outside feedback. I asked a few of my friends to come and test the system and learned more about areas where users were confused or struggled. Some common points of confusion included the reference video’s timing being off from the user avatar and the lack of audio to help follow along with the dance moves. Additionally, users mentioned that the TikTok and Fortnite dances were somewhat too difficult to follow as they were more complex, while the recorded reference video we created was much easier for them to learn from. This highlighted the importance of selecting simpler, more accessible dances for the final demo to ensure a smoother user experience. After the sessions, I worked with Rex to assess the feasibility of addressing this feedback, and we were able to iterate on the system to fix these issues. These user insights were incredibly valuable in making last-minute tweaks to improve the overall experience.

Progress is currently on schedule. Thinking ahead to the final presentation has actually helped keep us on track by surfacing small issues early. Next week, we will finalize our choices of how the demo will look, implement any last-minute improvements based on further user feedback, record a polished demo video showing the full functionality of the dance coach, and work on final deliverables such as the poster and report.

Akul’s Status Report for 4/19

This week, I focused on testing our comparison algorithm and creating test videos and documentation to help prepare for the final demo and materials.

In terms of testing the comparison algorithms, I first created a new video that was neither too difficult nor too simple to follow. I aimed to choreograph and film a dance that an average person would be able to follow while still including some complexity, so that we could test the effectiveness of the comparison algorithm. This allowed us to test each of the comparison algorithms that we created and helped us understand where each one fell short and where each one succeeded. However, we ran into some issues with running just the comparison algorithm without the Unity integration, so our next step was to build a basic integration of the two systems so that we could collect quantitative data on the effectiveness of our game, especially for our use case.

On Monday, we brainstormed ways to improve the user experience of the dance game, and one thing that we decided to add was a “ghost” overlay on the user avatar. We thought this would be helpful because users can directly see where their dance moves differ from the reference video and correct themselves. After we were able to send the data from each of the 5 comparison algorithms to the Unity client, we needed to create videos of each of the algorithms in action to see which would be best suited for the final demo.

We used the reference video that I created earlier, and then I used our system to learn the dance and see how our comparison algorithms perform differently. After we were finished, we recorded videos of my dancing with the system, analyzed the outputs to see which comparison algorithm would be best, and described each of the algorithms in detail (these videos are linked in our team status report for this week).

Currently, we have a base integration of our systems, but now we need to focus on the use case for the final demo. One idea we were thinking of to help make our game as helpful as possible for the user is to add a side-view for the reference/user dance moves as well, so the user can have a better understanding of what the reference video is doing in a 3D space. Next week, we will be focusing on improvements such as these for the final demo.

Throughout this semester, I had to employ new strategies to learn how to use the tools for our project. One thing that I feel like I hadn’t done much in my coursework was learning how to read the documentation for totally new tools that other programmers have created. For example, earlier in the semester, when we were integrating MediaPipe with our system, I had to study the documentation for the MediaPipe library to get a better understanding of how we could use it for our project. It was a different, more hands-on style of learning that relied heavily on self-guided exploration and practical experimentation rather than traditional classroom instruction. Another important aspect I had to learn during this project was how to effectively work on a subsection of a larger, interconnected system. Our project was divided into different components that could be developed in parallel, but still required constant communication and a shared understanding of the overall design. I learned the importance of interfacing between subsections and how changes in one part of the system can ripple through and impact others. This experience taught me to think not just about my individual tasks, but about how they fit into and affect the broader project as a whole, which is an extremely relevant skill for my long-term career.

Team Status Report for 4/19

Risk Management:

All prior risks have been mitigated. We have not identified any new risks as our project approaches its grand finale. We have conducted, and are continuing to conduct, comprehensive testing to ensure that our project specifications meet user requirements.

Design Changes:

Comparison Algorithm:

- We have changed our core algorithm to FastDTW, as our testing shows that it resolves the avatar speed issue without sacrificing comparison accuracy too much.
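To illustrate the speed versus accuracy trade-off behind this change, the sketch below shows a band-constrained DTW (a Sakoe-Chiba band). Note that this is a simpler, different technique from FastDTW, which uses a multi-resolution approximation; it is swapped in here only because it shows in a few lines how restricting the warping path cuts computation at some cost in alignment flexibility. The function name `banded_dtw` and the 1-D input (for example, a single joint angle over time) are assumptions.

```python
from typing import Sequence


def banded_dtw(a: Sequence[float], b: Sequence[float], window: int) -> float:
    """DTW restricted to cells with |i - j| <= window (Sakoe-Chiba band).

    A smaller window evaluates fewer cells (faster) but allows less
    time shift between the two sequences.
    """
    n, m = len(a), len(b)
    # The band must be at least as wide as the length difference,
    # otherwise no valid path reaches the final cell.
    window = max(window, abs(n - m))
    inf = float("inf")
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(max(1, i - window), min(m, i + window) + 1):
            d = abs(a[i - 1] - b[j - 1])
            cost[i][j] = d + min(cost[i - 1][j], cost[i][j - 1], cost[i - 1][j - 1])
    return cost[n][m]
```

In the example sequences `[0, 1, 0, 0]` and `[0, 0, 1, 0]`, a window of 0 forces a frame-by-frame comparison and reports a mismatch, while a window of 1 absorbs the one-frame delay.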

User Interface:

- Added scoreboard: Users can now easily see their real-time scores in the Unity interface.

- Added reference ghost: Users now have a reference ghost avatar overlaid on their real-time avatar so that they know where they should be at all times during the dance.

- Added reference video playback: Now instead of following a virtual avatar, the user can follow the real reference video, played back in the Unity interface.

Testing:

We have conducted a thorough analysis and comparison of all five comparison algorithms implemented. Here are their descriptions and here are the videos comparing their differences.

Danny’s Status Report for 4/19

This week I focused on testing the finalized comparison algorithm and collecting data to make an informed decision as to which algorithm to use for the final demo. We ran comprehensive testing on five different algorithms (DTW, FastDTW, SparseDTW, Euclidean Distance, Velocity) and collected data on the performance of these algorithms on capturing different aspects of movement similarities and differences.

Throughout this project, the two major things I had to learn were NumPy and OpenCV. These tools were completely new to me and I had to learn them from scratch. OpenCV was used to process our input videos and provide us with the 3D capture data, and NumPy was a necessary library that made implementing the complex calculations involved in our comparison algorithms much easier than it otherwise would have been. For OpenCV, I found the official website extremely useful, with detailed tutorials walking users through the implementation process. I also benefited greatly from the code examples posted on the website, since those often provided a good starting point. For NumPy, I turned to a resource that I have often used when learning a new programming language or library: W3Schools. I found this website to have a well-laid-out introduction to NumPy, as well as numerous specific examples. With all those resources available, I was able to pick up the library and put it to use relatively quickly.

Rex’s Status Report for 4/19

This week, I focused on improving the integration and user experience of our Unity-based CV dance game, and testing the game overall. I overhauled the game interface by merging the previous dual-avatar system into a single avatar, with the reference motion displayed as a transparent “ghost” overlay for intuitive comparison. I also implemented in-game reference video playback, allowing players to follow along in real time. Additionally, I improved the UI to display scoring metrics more clearly and finalized the logic for warning indicators based on body part similarity thresholds. This involved refining how we visualize pose comparison scores and ensuring error markers appear dynamically based on performance. Our team also coordinated to produce demo videos, highlighting the visual interface upgrades and the functionality of our comparison algorithms for the TA and Professor.

I also dedicated hours this week to tasks including Unity UI restructuring, real-time video rendering, and debugging synchronization issues between the user and reference avatars. Progress is mostly on track, but a few visual polish elements still need refinement, and a reference playback speed issue remains. To stay on schedule, I plan to spend additional time finalizing the UI. For next week, I aim to finish integrating all comparison algorithm outputs into the UI pipeline, improve performance on lower-end machines, and prepare a final demonstration-ready build.

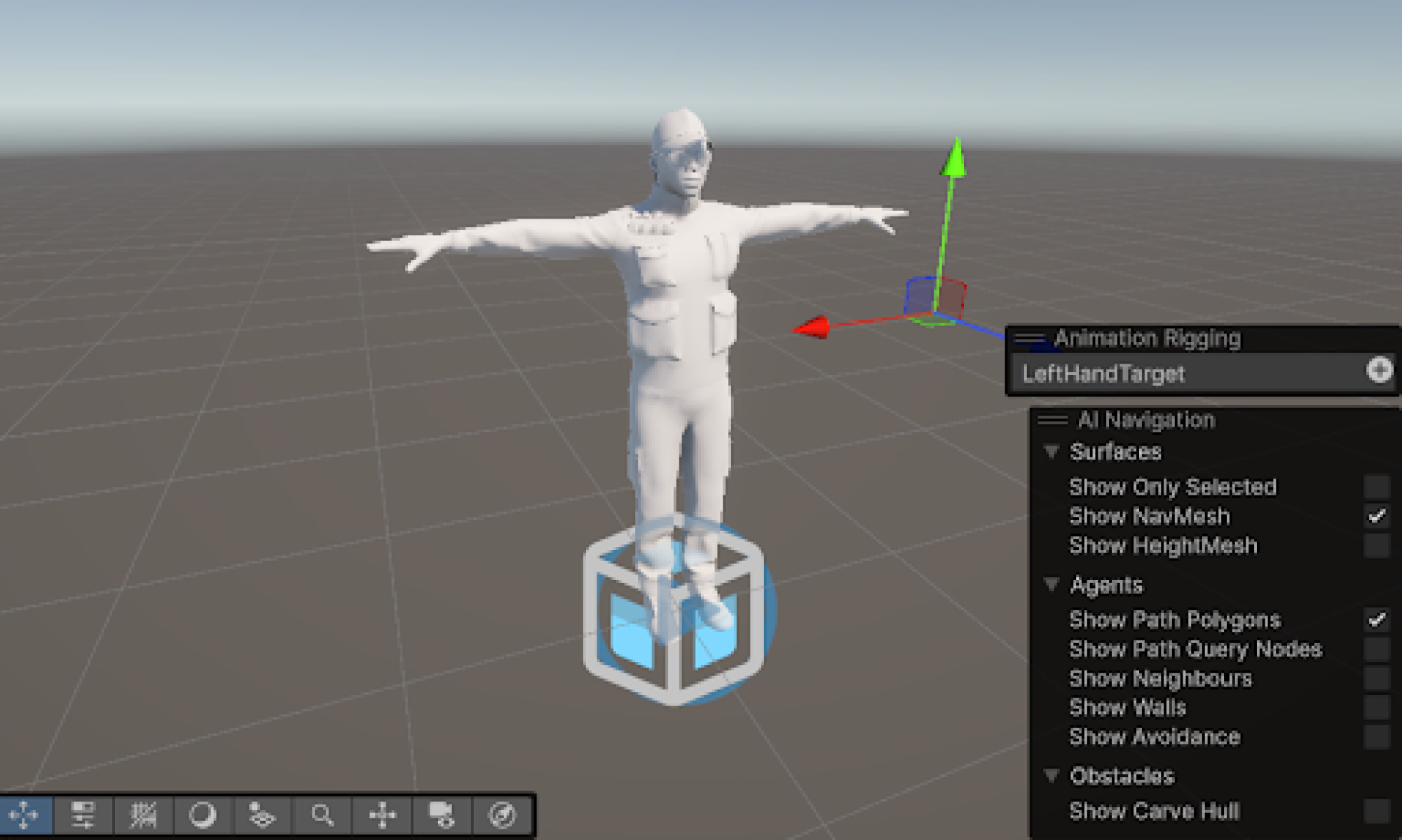

In terms of new knowledge, I had to dive deep into Unity UI systems, learning how to work with Canvas, RawImage, VideoPlayer, transparency materials, and hierarchical component references. I also read research papers and online resources to understand dance comparison algorithms and the best ways to model human joints from MediaPipe input. Most of my learning came from informal strategies: watching Unity tutorials on YouTube, browsing Stack Overflow, and experimenting directly in the Unity editor. The most challenging part was translating 2D joint data into meaningful 3D avatar motion. This pushed me to study human joint kinematics, including the use of quaternions, Euler angles, and inverse kinematics to replicate realistic movement. I researched how others approached rigging, learned how to apply constraints to joints, and explored interpolation and filtering techniques to smooth noisy input. Through trial-and-error debugging and visualization of bone rotations, I developed a deeper understanding of the math and physics behind body motion, and how to convey fluid, believable movement within the constraints of Unity’s animation system.

Akul’s Status Report for 4/12

This week I worked on developing multiple unique comparison algorithms, with the goal of iterating upon our existing comparison algorithm, trying new features, and being intentional about the design decisions behind the algorithm. For the interim demo, we already had an algorithm that utilizes dynamic time warping, analyzes 30 frames every 0.1 seconds, and computes similarity based on each joint individually. This week I focused on making 5 unique comparison algorithms, allowing me to compare the accuracy of how different parameters and features of the algorithm can improve the effectiveness of our dance coach. The following are the 5 variations I created, and I will compare these 5 with our original to help find an optimal solution:

- Frame-to-frame comparisons: does not use dynamic time warping; simply creates a normalization matrix between the reference video and user webcam input and compares the coordinates on each frame.

- Dynamic time warping, weighted similarity calculations: builds upon our algorithm for the interim demo by weighting the similarity of some joints more heavily than others.

- Dynamic time warping, increasing analysis window/frame buffer: builds upon our algorithm for the interim demo to increase the analysis window and frame buffer to get a more accurate DTW analysis.

- Velocity-based comparisons: similar to the frame-to-frame comparisons, but computes the velocity of joints over time as they move, and compares those velocities to the reference video velocities in order to detect not exactly where the joints are, but how the joints move over time.

- Velocity-based comparisons with frame-to-frame comparisons: iterates upon the velocity comparisons to utilize both the velocity comparisons and the frame-to-frame joint position comparisons to see if that would provide an accurate measurement of comparison between the reference and user input video.
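As a rough sketch of how the frame-to-frame, weighted, and velocity-based ideas in the list above fit together, consider the following. It assumes both sequences are already normalized, have the same number of frames, and use the same joint order; the names `frame_distance`, `joint_velocities`, `combined_distance`, and the `velocity_weight` parameter are hypothetical and not taken from our codebase.

```python
import math
from typing import List, Optional, Sequence, Tuple

Pose = Sequence[Tuple[float, ...]]


def frame_distance(pose_a: Pose, pose_b: Pose,
                   weights: Optional[Sequence[float]] = None) -> float:
    """Weighted mean Euclidean distance between matching joints of two poses."""
    if weights is None:
        weights = [1.0] * len(pose_a)
    total = sum(w * math.dist(p, q) for w, p, q in zip(weights, pose_a, pose_b))
    return total / sum(weights)


def joint_velocities(poses: Sequence[Pose], dt: float) -> List[List[Tuple[float, ...]]]:
    """Finite-difference velocity of every joint between consecutive frames."""
    return [
        [tuple((b - a) / dt for a, b in zip(p, q)) for p, q in zip(prev, cur)]
        for prev, cur in zip(poses, poses[1:])
    ]


def combined_distance(ref: Sequence[Pose], user: Sequence[Pose], dt: float,
                      velocity_weight: float = 0.5) -> float:
    """Blend the average position error with the average velocity error."""
    position = sum(frame_distance(r, u) for r, u in zip(ref, user)) / len(ref)
    ref_v, user_v = joint_velocities(ref, dt), joint_velocities(user, dt)
    velocity = (
        sum(frame_distance(r, u) for r, u in zip(ref_v, user_v)) / len(ref_v)
        if ref_v else 0.0
    )
    return (1.0 - velocity_weight) * position + velocity_weight * velocity
```

A dancer who performs the right motion but stands offset from the reference gets a nonzero position error and a zero velocity error, which is why combining the two can be more forgiving than position alone.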

I have implemented and debugged these algorithms above, but starting tomorrow and continuing throughout the week, I will conduct quantitative and qualitative comparisons between these algorithms to see which is best for our use case and find further points to improve. Additionally, I will communicate with Rex and Danny to see how I can make it as easy as possible to integrate the comparison algorithm with the Unity side portion of the game. Overall, our progress seems to be on schedule; if I can get the comparison algorithm finalized within the next week and we begin integration in the meantime, we will be on a good track to be finished by the final demo and deadline.

There are two main parts that I will need to test and verify for the comparison algorithm. First, I aim to test the real-time processing performance of each of these algorithms. For example, the DTW algorithm with the extended analysis window may require too much computation power to allow for real-time comparisons. On the other hand, the velocity/frame-to-frame comparison algorithms may have room for more complexity to improve the accuracy of the comparison without hurting processing performance.

Second, I aim to test the accuracy of each of these comparison algorithms. For each of the algorithms described above, I will run the algorithm on a complex video (such as a TikTok dance), a simpler video (such as a video of myself dancing to a slower dance), and a still video (such as me doing a T-pose in front of the camera). With this, I will record the output while I actively do the dance, allowing me to watch the video back and see how each algorithm does. Afterwards, I will create a table to record both quantitative and qualitative notes on each algorithm, noting which parts of the algorithm are lacking and which are performing well. This will allow me to have all the data I need in front of me when deciding what I should do to continue iterating upon the algorithm.

With these two strategies, I believe that we will be on a good track to verify the effectiveness of our dance coach and create the best possible comparison algorithm we can to help our users.

Danny’s Status Report for 4/12

This week I focused on integrating our comparison algorithm with the Unity interface, collaborating closely with Rex and Akul. We established a robust UDP communication protocol between the Python-based analysis module and Unity. We encountered initial synchronization issues where the avatars would occasionally freeze or jump, which we traced to packet loss during high CPU utilization. We implemented a heartbeat mechanism and frame sequence numbering that improved stability significantly.
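The sketch below shows one way frame sequence numbering and a heartbeat could work together on the receiving side. It is a simplified illustration, not our actual wire format: the 5-byte header layout, the JSON payload, the `Receiver` class, and the timeout value are all assumptions, and sequence-number wraparound is not handled. The resulting bytes could be sent with a standard `socket.sendto` call on a UDP socket.

```python
import json
import struct
from typing import Any, Optional, Tuple

# Hypothetical header: sequence number (uint32) + packet type (uint8), network byte order.
HEADER = struct.Struct("!IB")
TYPE_POSE, TYPE_HEARTBEAT = 0, 1


def encode_packet(seq: int, packet_type: int, payload: Any = None) -> bytes:
    """Prefix an optional JSON payload with the sequence/type header."""
    body = json.dumps(payload).encode("utf-8") if payload is not None else b""
    return HEADER.pack(seq, packet_type) + body


def decode_packet(data: bytes) -> Tuple[int, int, Any]:
    """Split a datagram back into (sequence number, packet type, payload)."""
    seq, packet_type = HEADER.unpack_from(data)
    body = data[HEADER.size:]
    payload = json.loads(body.decode("utf-8")) if body else None
    return seq, packet_type, payload


class Receiver:
    """Drops stale or out-of-order pose packets and tracks sender liveness."""

    def __init__(self, timeout: float = 1.0) -> None:
        self.last_seq = -1
        self.last_seen: Optional[float] = None
        self.timeout = timeout

    def accept(self, data: bytes, now: float) -> Any:
        seq, packet_type, payload = decode_packet(data)
        # Any packet, including a heartbeat, proves the sender is alive.
        self.last_seen = now
        if packet_type != TYPE_POSE or seq <= self.last_seq:
            return None  # heartbeat, duplicate, or late packet: nothing to render
        self.last_seq = seq
        return payload

    def is_stale(self, now: float) -> bool:
        """True if nothing has arrived within the timeout window."""
        return self.last_seen is None or now - self.last_seen > self.timeout
```

Discarding late packets avoids the avatar jumping backwards, and the staleness check lets the client distinguish a paused sender from a lost connection instead of freezing silently.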

We then collaborated on mapping the comparison results to the Unity visualization. We developed a color gradient system that highlights body segments based on deviation severity. During our testing, we identified that hip and shoulder rotations were producing too many false positives in the error detection. We then tuned the algorithm’s weighting factors to prioritize key movement characteristics based on dance style, which improved the relevance of the feedback.
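A color gradient of this kind can be sketched as a simple mapping from a deviation value to an RGB triple. The green-to-yellow-to-red ramp, the function name `deviation_to_rgb`, and the `max_deviation` parameter below are assumptions for illustration; the actual colors and thresholds in the Unity scene may differ.

```python
from typing import Tuple


def deviation_to_rgb(deviation: float, max_deviation: float = 1.0) -> Tuple[float, float, float]:
    """Map a deviation to a color: green (none) -> yellow -> red (at or beyond max).

    Returns RGB components in the range [0, 1].
    """
    if max_deviation <= 0:
        raise ValueError("max_deviation must be positive")
    # Normalize and clamp so out-of-range deviations saturate at red or green.
    t = min(1.0, max(0.0, deviation / max_deviation))
    if t < 0.5:
        # First half of the ramp: raise red while green stays at full.
        return (t * 2.0, 1.0, 0.0)
    # Second half: red stays at full while green fades out.
    return (1.0, (1.0 - t) * 2.0, 0.0)
```

Per-joint weighting, such as down-weighting hip and shoulder rotations, could be applied to the deviation before it is passed to this mapping.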

As for the verification and validation portion, I am in charge of the CV subsystem of our project. For this subsystem specifically, my plans are as follows:

Pose Detection Accuracy Testing

- Completed Tests: We’ve conducted initial verification testing of our MediaPipe implementation by comparing detected landmarks against ground truth positions marked by professional dancers in controlled environments.

- Planned Tests: We’ll perform additional testing across varied lighting conditions and distances (1.5-3.5m) to verify consistent performance across typical home environments.

- Analysis Method: Statistical comparison of detected vs. ground truth landmark positions, with calculation of average deviation in centimeters.
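The analysis method above boils down to an average of per-landmark distances. A minimal sketch follows, assuming detected and ground truth landmarks are listed in the same order and that a known conversion factor to centimeters is available; the name `mean_landmark_deviation` and the `cm_per_unit` parameter are hypothetical.

```python
import math
from typing import Sequence, Tuple


def mean_landmark_deviation(
    detected: Sequence[Tuple[float, ...]],
    ground_truth: Sequence[Tuple[float, ...]],
    cm_per_unit: float = 1.0,
) -> float:
    """Average Euclidean distance between detected and ground truth landmarks.

    cm_per_unit converts from the coordinate units of the inputs to centimeters.
    """
    if len(detected) != len(ground_truth) or not detected:
        raise ValueError("inputs must be non-empty and the same length")
    distances = [
        math.dist(d, g) * cm_per_unit for d, g in zip(detected, ground_truth)
    ]
    return sum(distances) / len(distances)
```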

Real-Time Processing Performance

- Completed Tests: We’ve measured frame processing rates on a typical hardware configuration (mid-range laptop).

- Planned Tests: Extended duration testing (20+ minute sessions) to verify performance stability and resource utilization over time.

- Analysis Method: Performance profiling of CPU/RAM usage during extended sessions to confirm long-term system stability.
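For the frame processing rate portion of these tests, one simple approach is to log a timestamp after each processed frame and summarize the intervals afterwards. The sketch below assumes timestamps in seconds (for example from `time.perf_counter()`); the function name `frame_rate_stats` and the returned keys are illustrative, and CPU/RAM profiling would need a separate tool.

```python
from typing import Dict, Sequence


def frame_rate_stats(timestamps: Sequence[float]) -> Dict[str, float]:
    """Summarize frame timing from per-frame completion timestamps in seconds."""
    if len(timestamps) < 2:
        raise ValueError("need at least two timestamps")
    intervals = [b - a for a, b in zip(timestamps, timestamps[1:])]
    mean_interval = sum(intervals) / len(intervals)
    worst = max(intervals)
    return {
        "mean_fps": 1.0 / mean_interval,
        # The slowest single frame determines the minimum instantaneous rate.
        "min_fps": 1.0 / worst,
        "worst_frame_ms": worst * 1000.0,
    }
```

Comparing these statistics between the first and last few minutes of a long session is one way to spot gradual slowdowns.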