What are the most significant risks that could jeopardize the success of the project? How are these risks being managed? What contingency plans are ready?

The main risk we are facing right now is implementing some form of SLAM so that our hexapods can localize themselves and determine where the other hexapods in the area are. Our contingency plan is to use a prebuilt Isaac-ROS AprilTag library and estimate the poses of our hexapods from AprilTags instead of implementing full SLAM. We think this would be much easier to implement, though it would require us to constrain our scope further.
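To illustrate the AprilTag fallback, here is a minimal sketch of how a tag detection could be turned into a hexapod pose. It is not our implementation and does not call the Isaac-ROS API. It assumes planar motion (x, y, heading), a tag whose world pose is known in advance, and a detector that reports the tag's pose in the robot's own frame. The names `Pose2D`, `localize_robot`, and `relative_pose` are hypothetical.

```python
import math
from dataclasses import dataclass


def wrap_angle(a: float) -> float:
    """Wrap an angle in radians into the range [-pi, pi]."""
    return math.atan2(math.sin(a), math.cos(a))


@dataclass(frozen=True)
class Pose2D:
    """Planar pose (hypothetical helper): x, y in metres, theta in radians."""
    x: float
    y: float
    theta: float

    def compose(self, other: "Pose2D") -> "Pose2D":
        """Express `other` (given in this pose's frame) in this pose's parent frame."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            self.x + c * other.x - s * other.y,
            self.y + s * other.x + c * other.y,
            wrap_angle(self.theta + other.theta),
        )

    def inverse(self) -> "Pose2D":
        """Return the pose that undoes this one (R^T, -R^T t)."""
        c, s = math.cos(self.theta), math.sin(self.theta)
        return Pose2D(
            -(c * self.x + s * self.y),
            s * self.x - c * self.y,
            wrap_angle(-self.theta),
        )


def localize_robot(world_T_tag: Pose2D, robot_T_tag: Pose2D) -> Pose2D:
    """Robot pose in the world, from a known tag pose and one tag detection.

    Assumes the detection frame coincides with the robot base frame.
    """
    return world_T_tag.compose(robot_T_tag.inverse())


def relative_pose(world_T_a: Pose2D, world_T_b: Pose2D) -> Pose2D:
    """Pose of hexapod B as seen from hexapod A."""
    return world_T_a.inverse().compose(world_T_b)


# Example: a tag fixed at (2, 1) facing +y, detected 1 m straight ahead of the robot.
tag_in_world = Pose2D(2.0, 1.0, math.pi / 2)
tag_in_robot = Pose2D(1.0, 0.0, 0.0)
robot_in_world = localize_robot(tag_in_world, tag_in_robot)
```

Once every hexapod has a world pose from a shared set of tags, `relative_pose` gives each robot the position of its neighbours without a full SLAM map. The trade-off is that robots can only localize while a known tag is in view.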

Were any changes made to the existing design of the system (requirements, block diagram, system spec, etc)? Why was this change necessary, what costs does the change incur, and how will these costs be mitigated going forward?

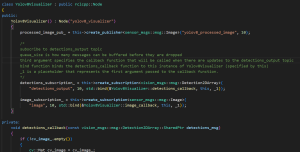

One change to the existing design is that we’re now using Isaac-ROS on our Jetson Orin Nano. Isaac-ROS supports our YOLOv8 object detection algorithm and is already hardware accelerated through the NITROS nodes it provides. This hardware acceleration is very important to us because we want our object detection to be fast.

Part A: … with consideration of global factors. Global factors are world-wide contexts and factors, rather than only local ones. They do not necessarily represent geographic concerns. Global factors do not need to concern every single person in the entire world. Rather, these factors affect people outside of Pittsburgh, or those who are not in an academic environment, or those who are not technologically savvy, etc.

With consideration of global factors, the main “job to be done” for our design is to provide accessible search and rescue options that countries around the world can deploy. With the rise of natural disasters and conflicts around the globe, it’s important that we are equipped with appropriate, cost-effective, and scalable solutions. Our hexapod swarm’s detection model will also be trained on a diverse dataset so that it can account for all kinds of people from around the world. Our hexapod’s versatility and simplicity will allow it to be deployed around the world by people with limited technical ability. (Written by Kobe)

Part B: … with consideration of cultural factors. Cultural factors encompass the set of beliefs, moral values, traditions, language, and laws (or rules of behavior) held in common by a nation, a community, or other defined group of people.

With consideration of cultural factors, it is part of our moral belief and instinct to help those in need, so that we can also receive help when we are vulnerable ourselves. Our product is designed to address this shared moral value, which is held across different cultures and backgrounds. We will train the object detection models so that they can recognize people from all cultures and backgrounds, and we will keep the product design general so that it can be deployed in many different places. (Written by Casper)

Part C: … with consideration of environmental factors. Environmental factors are concerned with the environment as it relates to living organisms and natural resources.

With respect to environmental factors, we chose a hexapod robot so that it can traverse many different terrains. We still have to do physical testing to confirm this. We will also include domestic pets such as dogs and cats in our training dataset so that we can identify and rescue them as well. We will also test our model with dolls and humanoid lookalikes to check that it doesn’t get confused by them. We have a fault-tolerance requirement to account for changing environments that could compromise robots, such as water damage or collapsing infrastructure. (Written by Akash)