Work Done

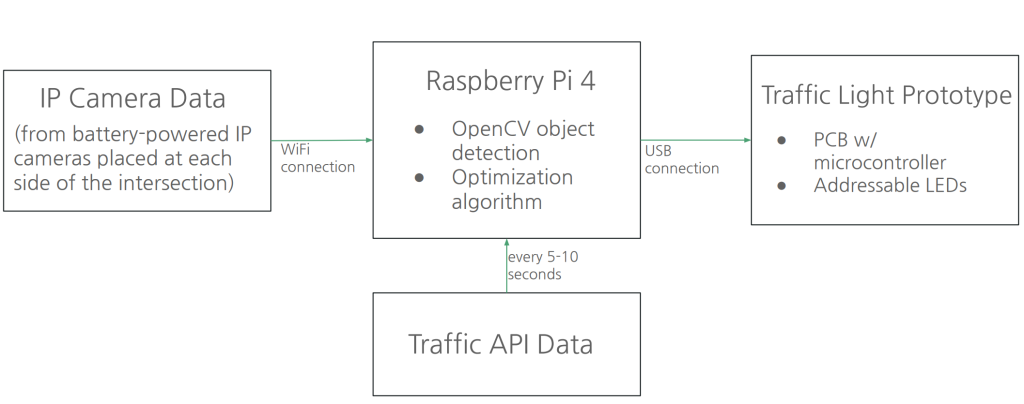

This week I worked on the Design Review presentation and did additional research on our solution design, specifically the APIs we will use and the optimization algorithm.

I have finalized the APIs we will use: the TomTom Traffic API and the HERE Traffic API. I chose them because they update frequently (every 30 seconds and every minute, respectively) and are cost-efficient. At our current expected usage, we can operate under the free plans for both. The two APIs provide similar data; however, the HERE API offers more lane-specific data and the TomTom API updates faster, so we will try to incorporate both and average their data for the best results.
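As a rough illustration of the averaging idea, here is a minimal sketch. It assumes both feeds have already been normalized into a common reading for the same road segment. The `SpeedReading` type, the `fuse_speeds` helper, and the 120-second staleness cutoff are all hypothetical names and values chosen for this example, not part of either API.

```python
from dataclasses import dataclass
from statistics import fmean
from typing import Iterable, Optional


@dataclass(frozen=True)
class SpeedReading:
    """One normalized speed sample for a road segment (hypothetical type)."""
    source: str       # e.g. "tomtom" or "here"
    speed_kmh: float  # current speed reported for the segment
    age_s: float      # seconds since the provider last updated this value


def fuse_speeds(readings: Iterable[SpeedReading],
                max_age_s: float = 120.0) -> Optional[float]:
    """Average the readings that are fresh enough; None if none qualify.

    The max_age_s cutoff is an assumed value, not something either API defines.
    """
    fresh = [r.speed_kmh for r in readings if r.age_s <= max_age_s]
    return fmean(fresh) if fresh else None


readings = [
    SpeedReading("tomtom", 30.0, 10.0),
    SpeedReading("here", 40.0, 45.0),
]
print(fuse_speeds(readings))  # 35.0
```

A plain mean treats both sources equally. If one source turns out to be more reliable for a given road, a weighted mean would be a small change to this helper.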

I also finalized the type of machine learning algorithm we will use and researched existing papers. I found a paper that approaches the same problem we are trying to solve; it uses Q-learning and an application called SUMO for testing.

I decided that Q-learning would be a good algorithm to use because we can measure future times and use them as the reward relatively easily. I am also more familiar with Q-learning than with other algorithms, since it was taught in Intro to ML, although I will still have to learn how Deep Q-Learning differs from what was covered in class.
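To make the reward idea concrete, here is a minimal tabular Q-learning sketch that uses the negative of a measured waiting time as the reward. It is the classroom version, not Deep Q-Learning. The state labels, the two actions, and the hyperparameter values are assumptions made for illustration only.

```python
import random
from collections import defaultdict

# Assumed hyperparameters; real values would need tuning.
ALPHA, GAMMA, EPSILON = 0.1, 0.9, 0.1
# Hypothetical action set for a single traffic signal.
ACTIONS = ("keep_phase", "switch_phase")


def make_q_table():
    """Q-table mapping state -> {action: value}, defaulting to zeros."""
    return defaultdict(lambda: {a: 0.0 for a in ACTIONS})


def choose_action(q, state, rng=random):
    """Epsilon-greedy selection over the current Q-values."""
    if rng.random() < EPSILON:
        return rng.choice(ACTIONS)
    return max(ACTIONS, key=lambda a: q[state][a])


def update(q, state, action, wait_time_s, next_state):
    """One Q-learning step; a longer wait gives a lower (more negative) reward."""
    reward = -wait_time_s
    best_next = max(q[next_state].values())
    q[state][action] += ALPHA * (reward + GAMMA * best_next - q[state][action])
    return q[state][action]


q = make_q_table()
print(update(q, "s0", "keep_phase", 10.0, "s1"))  # -1.0
```

Deep Q-Learning replaces the table with a neural network that estimates the same values, which matters once the state (for example, queue lengths on every lane) is too large to enumerate.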

SUMO is a great fit for our purposes: we originally planned to create a rudimentary simulation ourselves, but now we can use a more well-developed one. I looked into the application, and it has a tutorial for importing a path from a map, so I think we can simulate Pittsburgh, or at least the area near CMU, fairly easily. Another great feature of SUMO is that simulation data can be pulled into Python using TraCI, which is perfect for our ML model, since it lets us use an online model.
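The TraCI workflow could look roughly like the sketch below, which steps the simulation and records how many vehicles are halted on the lanes controlled by one traffic light. The helper takes the `traci` module (or any connection object with the same interface) as a parameter so it can be exercised without SUMO running. The function name, the traffic light ID, and the config file name in the usage comment are hypothetical.

```python
def collect_halting_counts(conn, tls_id, max_steps=3600):
    """Step the simulation and return the halted-vehicle total per step.

    `conn` is expected to be the `traci` module after `traci.start(...)`.
    `tls_id` is the ID of a traffic light in the loaded network.
    """
    # getControlledLanes lists a lane once per controlled link, so deduplicate.
    lanes = sorted(set(conn.trafficlight.getControlledLanes(tls_id)))
    history = []
    for _ in range(max_steps):
        # Stop once no vehicles remain in or are waiting to enter the network.
        if conn.simulation.getMinExpectedNumber() <= 0:
            break
        conn.simulationStep()
        history.append(
            sum(conn.lane.getLastStepHaltingNumber(lane) for lane in lanes)
        )
    return history


# Possible usage, assuming SUMO is installed and a config file exists
# (the file name and traffic light ID below are placeholders):
#
#   import traci
#   traci.start(["sumo", "-c", "fifth_craig.sumocfg"])
#   counts = collect_halting_counts(traci, "fifth_craig_tls")
#   traci.close()
```

The per-step totals are the kind of signal an online model could consume directly, either as part of the state or, negated, as a reward.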

I started setting up our repo and installed Poetry, a package manager for Python, because in the past I have found it difficult to work on Python code without one. I also started the code for making the API calls and will finish it tomorrow.

Schedule

I changed the schedule because we no longer need external training data and will use SUMO for simulation instead. I added a task for creating the SUMO simulation and pushed the optimization algorithm back a week, since it seems more complex than I originally anticipated and I now have to create the simulation myself instead of using external training data. This time is recovered, however, because we no longer have to design our own application for simulating traffic, for which we originally allotted 2 weeks, and we can use SUMO for demonstration purposes as well.

Tasks This Week

- Finish traffic API methods

- Configure SUMO to simulate the Fifth and Craig intersection and nearby roads so we can begin creating training data

- Build optimization algorithm infrastructure