Work Done

The new IP camera and portable batteries I ordered arrived this week. Once the demo is ready for the pre-recorded intersection videos, I will test the object detection model on the camera's live video feed. Tutorials and example projects online that use this exact camera were able to access its RTSP URL, so I expect this to work.
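The sketch below shows one way to read the live feed. It assumes OpenCV (`cv2`) is installed on the Pi and was built with a backend that supports RTSP, such as FFmpeg. `open_rtsp` and `read_frames` are hypothetical helpers, not existing project code. `read_frames` rides out brief dropouts and stops only after several reads in a row have failed.

```python
import time


def open_rtsp(url):
    """Open an RTSP stream with OpenCV; raise if the camera cannot be reached."""
    import cv2  # imported here so read_frames() can be used (and tested) without OpenCV
    cap = cv2.VideoCapture(url)
    if not cap.isOpened():
        raise RuntimeError("could not open RTSP stream")
    return cap


def read_frames(cap, max_failures=30, retry_delay=0.1):
    """Yield frames from an opened capture (anything with read() -> (ok, frame)).

    A failed read is retried after retry_delay seconds. The generator stops after
    max_failures consecutive failures, e.g. when the camera's network link drops.
    """
    failures = 0
    while failures < max_failures:
        ok, frame = cap.read()
        if ok:
            failures = 0
            yield frame
        else:
            failures += 1
            time.sleep(retry_delay)
```

A call would look like `read_frames(open_rtsp("rtsp://<user>:<password>@<camera-ip>:554/<stream-path>"))`. Port 554 is the RTSP default, but the stream path differs between camera models, so it has to come from the camera's documentation or the example projects that used it.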

With the help of some friends, Kaitlyn and I also recorded simultaneous video of all four sides of the intersection. Unfortunately, the videos are all quite shaky, so fixed lane boundaries cannot be defined for them. I tried to detect the lanes with Canny edge detection and Hough transforms, but cars frequently pass through the camera's field of view and hide the lane markings, so the code does not yet work reliably. I ordered tripods to keep our phones steady while filming, and we will re-record the videos sometime next week, well before the demo. If I can't get the lane detection code working in time, I will hardcode the lane boundaries. I already did this for one of Zina's videos, which was taken with a GoPro mounted on a tripod.
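The sketch below shows the hardcoded fallback. It assumes the detector returns bounding boxes as `(x1, y1, x2, y2)` pixel tuples. The polygons in `LANES` are placeholder coordinates, not the ones measured from Zina's video, and all names here are hypothetical. Each vehicle is assigned to the lane whose polygon contains the bottom-center of its box, which is roughly where the tires meet the road. The containment check is a standard ray-casting point-in-polygon test.

```python
from collections import Counter

# Placeholder lane regions (pixel coordinates) for one camera view. Real values
# would be measured by hand from a stabilized video of that approach.
LANES = {
    "lane_1": [(0, 0), (100, 0), (100, 200), (0, 200)],
    "lane_2": [(100, 0), (200, 0), (200, 200), (100, 200)],
}


def point_in_polygon(x, y, polygon):
    """Ray casting: (x, y) is inside if a ray going right crosses an odd number of edges."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through y can be crossed
        # (this also skips horizontal edges, so there is no division by zero).
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def assign_lane(box, lanes):
    """Return the lane containing the bottom-center of box=(x1, y1, x2, y2), or None."""
    cx = (box[0] + box[2]) / 2
    cy = box[3]  # bottom edge of the box in image coordinates (y grows downward)
    for name, polygon in lanes.items():
        if point_in_polygon(cx, cy, polygon):
            return name
    return None


def count_per_lane(boxes, lanes):
    """Count detections per lane, ignoring boxes that fall outside every lane."""
    counts = Counter()
    for box in boxes:
        lane = assign_lane(box, lanes)
        if lane is not None:
            counts[lane] += 1
    return counts
```

Using the bottom-center rather than the box center reduces mistakes from tall vehicles whose boxes overlap the neighboring lane in the image.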

As expected, the object detection model runs much more slowly on the Raspberry Pi than on my computer (about 4 s per frame compared to 0.4 s). This is still within the latency requirement we defined for the object detection model, so it should be fine. I refactored the code so that the video keeps playing at its expected frame rate while a frame is being processed. When the detector finishes, it grabs the next available frame of the video instead of the next sequential frame, which had been slowing playback considerably. Before the change, the code processed the first frame (taking 4 seconds), then the second frame, and so on, so the video was slowed down drastically. Now detection runs in the background while the foreground loop keeps iterating through the video's frames, and each time the detector is free it picks up the latest frame from the foreground. As a result, the video continues to play at its correct speed.
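The sketch below illustrates this "latest frame wins" pattern using Python's standard `threading` module. `LatestFrameProcessor` and the `detect` callable are hypothetical names, not the project's actual code. The foreground loop calls `submit()` for every frame and never waits on the detector. A frame submitted while the detector is busy overwrites the previous unprocessed one, so the background thread always picks up the newest frame. At an assumed 30 fps with 4 s per detection, only about one frame in 120 is analyzed, but playback never stalls.

```python
import threading


class LatestFrameProcessor:
    """Run a slow detector in a background thread on the newest submitted frame.

    Frames submitted while the detector is busy overwrite each other, so only
    the most recent one is processed next; older ones are simply skipped.
    """

    def __init__(self, detect):
        self._detect = detect                # slow callable: frame -> result
        self._cond = threading.Condition()   # guards _latest and _stopped
        self._latest = None                  # (index, frame) waiting to be processed
        self._stopped = False
        self.results = []                    # (index, result) in processing order
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def submit(self, index, frame):
        """Called by the playback loop for every frame; never waits on detection."""
        with self._cond:
            self._latest = (index, frame)    # replaces any older unprocessed frame
            self._cond.notify()

    def _run(self):
        while True:
            with self._cond:
                while self._latest is None and not self._stopped:
                    self._cond.wait()
                if self._latest is None:     # stopped and nothing left to do
                    return
                index, frame = self._latest
                self._latest = None
            # Detection runs outside the lock so submit() is never blocked.
            self.results.append((index, self._detect(frame)))

    def stop(self):
        """Process any pending frame, then shut down the worker thread."""
        with self._cond:
            self._stopped = True
            self._cond.notify()
        self._worker.join()
```

In the video loop, `proc.submit(i, frame)` would be called right after each frame is read, and `proc.stop()` once the video ends. After that, `proc.results` holds detections only for the frames that were actually analyzed.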

I also wrote code for the RPi to send the current light state to the Arduino/PCB over a serial connection. Zina, Kaitlyn, and I tested it this week. The PCB needs minor modifications, but the Arduino decodes the light states correctly and the code on the RPi is ready to go.
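The sketch below shows what the RPi side of this link might look like. It assumes a simple text protocol: one newline-terminated ASCII line such as `N:G,E:R,S:G,W:R` giving the red/yellow/green state of each approach. This message format and the names `encode_light_state` and `send_light_state` are hypothetical illustrations, not the team's actual protocol. `send_light_state` accepts any object with a `write()` method, such as a pySerial `Serial` port.

```python
APPROACHES = ("N", "E", "S", "W")   # hypothetical: one entry per side of the intersection
VALID_STATES = {"R", "Y", "G"}       # red, yellow, green


def encode_light_state(state):
    """Encode {"N": "G", "E": "R", ...} as one newline-terminated ASCII line."""
    parts = []
    for approach in APPROACHES:
        light = state[approach]
        if light not in VALID_STATES:
            raise ValueError(f"invalid state {light!r} for approach {approach}")
        parts.append(f"{approach}:{light}")
    return (",".join(parts) + "\n").encode("ascii")


def send_light_state(ser, state):
    """Write one encoded message to an already-open serial port (e.g. pySerial's Serial)."""
    ser.write(encode_light_state(state))
```

On the Pi, the port would be opened once and reused, for example with `serial.Serial("/dev/ttyACM0", 9600, timeout=1)`. The port name and baud rate there are assumptions. Reopening the port for every message is best avoided because opening it can reset many Arduino boards. On the Arduino side, `Serial.readStringUntil('\n')` can read one message at a time.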

Schedule

We need to prepare our final presentation, so that is what we will work on this weekend. Leading up to the final demo, I will record the stabilized intersection videos so that we at least have hardcoded lane boundaries for the demo. I will also fine-tune the counting code so that it produces accurate vehicle and pedestrian counts in the input format that Kaitlyn's optimization algorithm and simulation expect. Finally, I will help with any integration needed between the optimization and traffic light circuit subsystems.

Learning Strategies

Throughout this project, we have had many setbacks from setup and installation issues, as well as from hardware that turned out to be incompatible with our project goals. One example is the various IP cameras we tried whose RTSP URLs we could not access. Most of the learning strategies I have adopted to deal with these issues involve scouring Internet forums, since most issues I run into have probably been experienced by someone else before. I also look for example projects online that achieve something similar to what I want, such as projects that let you view an IP camera feed from a Raspberry Pi. I then look at the parts and software those projects used and order similar (if not identical) parts for our project.

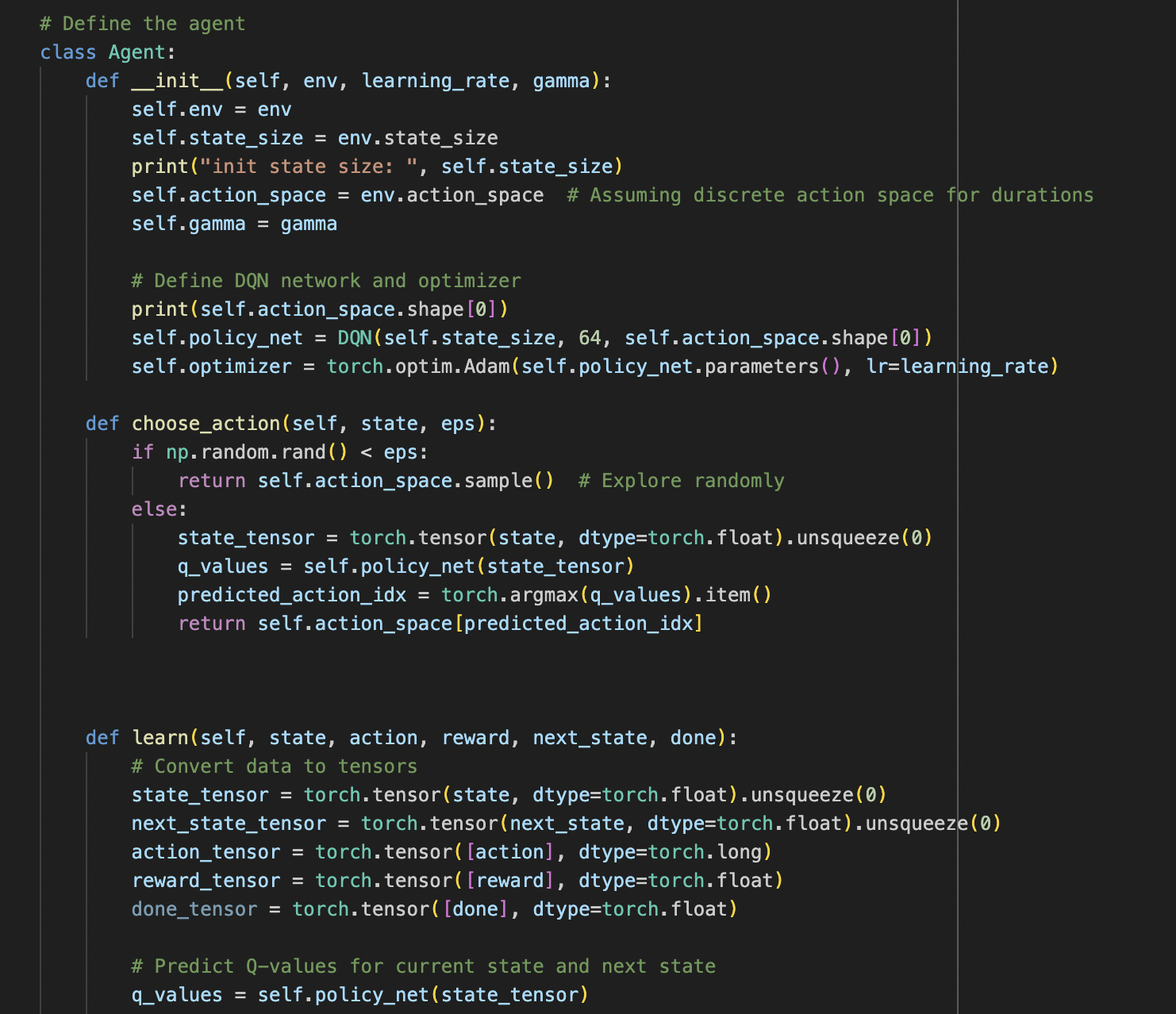

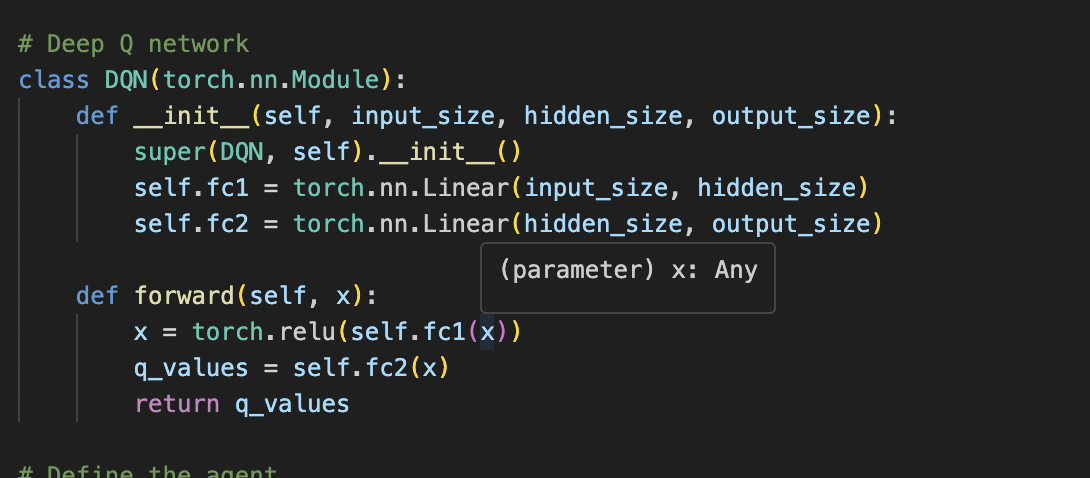

While choosing a model for object detection, I ran into many problems setting up the correct environment. At first, I tried to run the models I found on GitHub locally. When I was still planning to use Haar cascades, I also tried to train the model locally. Version mismatches and conflicts with other software on my computer forced me to switch to Anaconda, and sometimes even Colab, to test the models and check their accuracy on our sample intersection videos. In these cases, I used ChatGPT to help diagnose the problems I was facing and brainstorm fixes. For example, I wasn't sure how to make sure that all existing OpenCV installations were removed from my Anaconda environment, or how to install a specific version. ChatGPT gave me clearly outlined steps for doing both, and they worked!

Deliverables

By the week of the demo (Monday 4/29), I will:

- Take updated intersection videos using the tripods

- Define hardcoded lane boundaries from these videos so that the system outputs accurate vehicle and pedestrian counts for each side of the intersection

- Assist with further integration between the Raspberry Pi and Arduino/PCB