Accomplished Tasks

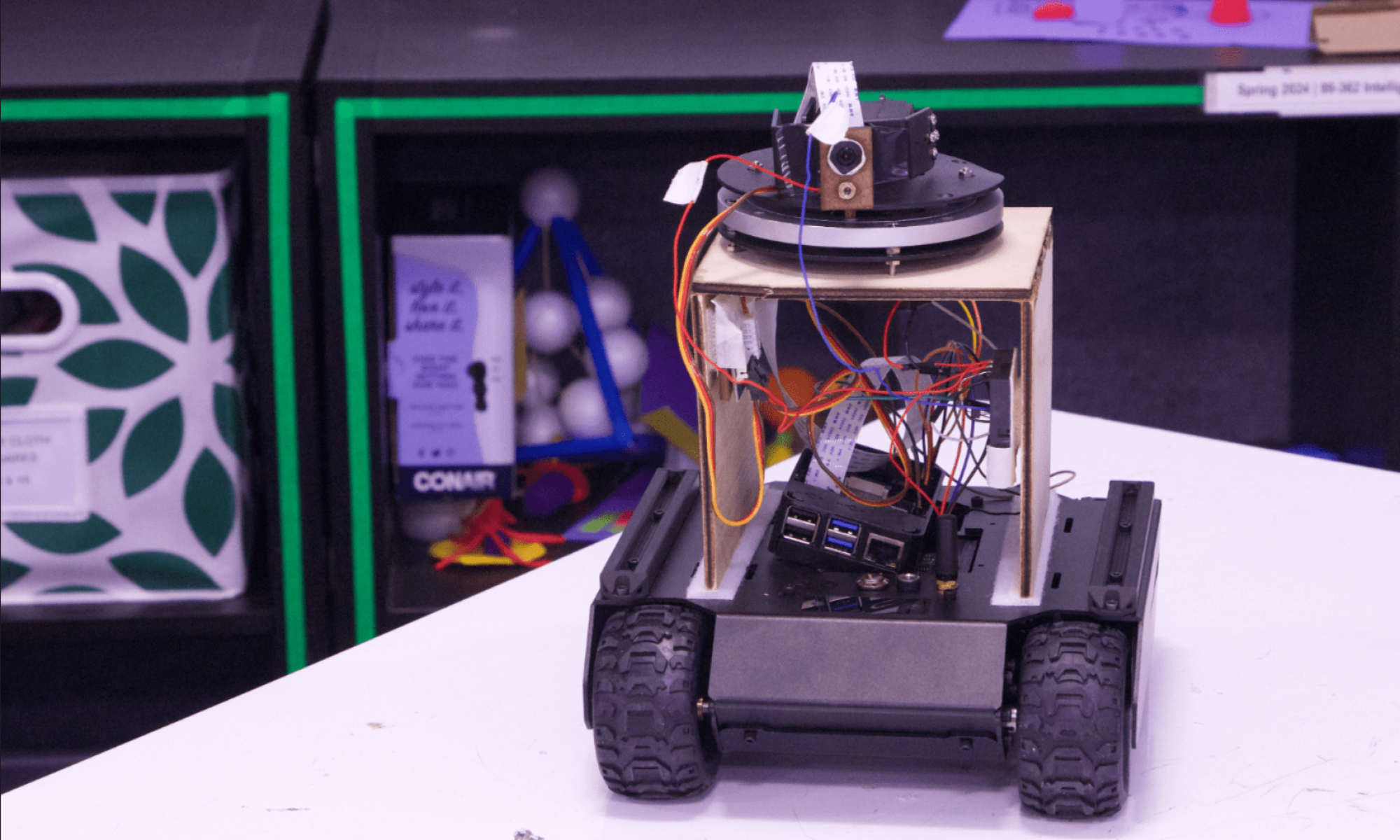

This week, I got the camera feed streaming over TCP to my personal computer, rather than only showing on the RaspberryPi desktop through a libcamera-vid script. I followed the picamera2 documentation to capture the video stream and serve it to a web browser. I then embedded the stream link in my website application using an iframe, which gives us formal monitoring of where our rover is searching. Furthermore, I was able to attach a separate imx219 camera to our rover using the ribbon cable while keeping the i2c connections for the PTZ gimbal. This way, we have a continuous camera feed and can still move the camera for 180-degree panning.
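As a rough illustration of this kind of setup, the sketch below is modeled on the MJPEG server example that ships with picamera2. The camera's JPEG encoder writes each frame into a small buffer object, and an HTTP handler sends those frames to the browser as a multipart stream. The port (8000), the resolution, the `/stream.mjpg` path, and the class names are assumptions chosen for the example, not the values used on the rover.

```python
import io
import threading
from http import server


class StreamingOutput(io.BufferedIOBase):
    """Holds the most recent JPEG frame written by the encoder."""

    def __init__(self):
        self.frame = None
        self.condition = threading.Condition()

    def write(self, buf):
        # The encoder calls write() once per encoded frame.
        with self.condition:
            self.frame = bytes(buf)
            self.condition.notify_all()
        return len(buf)


output = StreamingOutput()


class StreamHandler(server.BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/stream.mjpg":  # assumed path for this sketch
            self.send_error(404)
            return
        self.send_response(200)
        self.send_header("Content-Type", "multipart/x-mixed-replace; boundary=FRAME")
        self.end_headers()
        try:
            while True:
                # Block until the encoder delivers a new frame.
                with output.condition:
                    output.condition.wait()
                    frame = output.frame
                self.wfile.write(b"--FRAME\r\n")
                self.wfile.write(b"Content-Type: image/jpeg\r\n")
                self.wfile.write(f"Content-Length: {len(frame)}\r\n\r\n".encode())
                self.wfile.write(frame)
                self.wfile.write(b"\r\n")
        except (BrokenPipeError, ConnectionResetError):
            pass  # the browser closed the page


def main():
    # Imported here so the rest of the file can be loaded without a camera.
    from picamera2 import Picamera2
    from picamera2.encoders import JpegEncoder
    from picamera2.outputs import FileOutput

    picam2 = Picamera2()
    picam2.configure(picam2.create_video_configuration(main={"size": (640, 480)}))
    picam2.start_recording(JpegEncoder(), FileOutput(output))
    try:
        server.ThreadingHTTPServer(("", 8000), StreamHandler).serve_forever()
    finally:
        picam2.stop_recording()

# Call main() on the RaspberryPi to start streaming.
```

With something like this running, the website could point an iframe or `<img>` tag at `http://<pi-address>:8000/stream.mjpg`, where `<pi-address>` stands for the RaspberryPi's address on the network.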

Progress

Currently, I am adding more features to my web application, such as keypresses that allow manual movement of the camera PTZ gimbal. However, I am struggling with communication across devices, since the keypresses must register on the RaspberryPi desktop. In addition, I am trying to make it possible to move the camera and stream from it concurrently. Right now, the two features are mutually exclusive since both require the camera. To work around this, I plan to either use threading, with one thread per feature, or add a second RaspberryPi dedicated to one of the features, with a power bank to power it separately.
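One way the threading idea could look is sketched below. A worker thread takes pan/tilt commands from a queue and drives the gimbal, which leaves the main thread free to keep the camera stream running. Keypresses captured in the browser would be sent to the RaspberryPi (for example as HTTP requests) and placed on the queue, so they would not need to register on its desktop. The key bindings, the 5-degree step, and the `move_gimbal` function are hypothetical placeholders, and the sketch assumes the gimbal can be driven over i2c without opening the camera a second time.

```python
import queue
import threading

# Hypothetical key bindings: key -> (pan change, tilt change) in degrees.
KEY_TO_STEP = {"a": (-5, 0), "d": (5, 0), "w": (0, 5), "s": (0, -5)}

commands = queue.Queue()
position = {"pan": 90, "tilt": 90}  # start centered in a 0-180 degree range


def clamp(angle):
    """Keep an angle inside the gimbal's 0-180 degree range."""
    return max(0, min(180, angle))


def move_gimbal(pan, tilt):
    # Placeholder: the real version would write the angles to the gimbal over i2c.
    position["pan"], position["tilt"] = pan, tilt


def gimbal_worker():
    """Apply queued keypresses one at a time until a None sentinel arrives."""
    while True:
        key = commands.get()
        if key is None:
            break
        d_pan, d_tilt = KEY_TO_STEP.get(key, (0, 0))
        move_gimbal(clamp(position["pan"] + d_pan), clamp(position["tilt"] + d_tilt))


def handle_keypress(key):
    """Called by the web app whenever the browser reports a keypress."""
    commands.put(key)


worker = threading.Thread(target=gimbal_worker, daemon=True)
worker.start()
# The main thread stays free to keep serving the camera stream.
```

Because only the worker thread touches the gimbal, commands are applied in the order they arrive and the streaming code never has to wait on a movement to finish.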

Next Week’s Deliverables

Next week, I will be working on the camera mount design since we finally have the camera working. After inquiring about 3D printing and seeing how expensive it may be, I will likely laser cut a mount that attaches the camera, laser, and gimbal combo to the rover. Additionally, I will work on the frontend portion of the web application and get it hosted on an AWS server, where I will continue working on the security of the website.

Verification

I will be verifying the camera stream latency as well as the security of the monitoring site, to make it resistant to attacks. For latency, I will check that stream data reaches my website application in under 50ms, to minimize the delay in communicating where a person is to the object detection server. To do so, I will use ping, a simple command-line tool that sends a data packet to a destination and measures the round-trip time (RTT). Through this method, I will check that the delay between the RPi and the stream on my website stays under 50ms. For security, I will use vulnerability scanning tools that check for site insecurities such as cross-site scripting, SQL injection, command injection, path traversal, and insecure server configuration. I will use Acunetix, a website scanning tool that performs these tests, to formally check the site. In addition, to prevent unwanted users from accessing the site, I will use Google OAuth so that only authenticated users (rescue workers) can access it. I will also get my friends to test or “break” the site by asking them to perform a series of GET and POST requests to see if they can access any website data that should be limited to authorized users. To prevent this from happening, I will introduce cross-site request forgery (CSRF) tokens to ensure data is not improperly accessed.
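The ping check could be scripted so that the 50ms budget is tested automatically. The sketch below runs the `ping` command, pulls the `time=... ms` values out of its output, and compares the slowest reply against the budget. The function names and the default packet count are assumptions for the example. Note that this measures network round-trip time only, not the time spent encoding frames on the RPi or rendering them in the browser.

```python
import re
import subprocess

BUDGET_MS = 50.0  # latency target from the verification plan


def parse_rtts(ping_output):
    """Extract every 'time=<n> ms' value from ping's output, in milliseconds."""
    return [float(m) for m in re.findall(r"time[=<]([\d.]+)\s*ms", ping_output)]


def within_budget(rtts, budget_ms=BUDGET_MS):
    """True only if at least one reply arrived and every reply met the budget."""
    return bool(rtts) and max(rtts) < budget_ms


def check_host(host, count=10):
    """Ping the host and report the measured RTTs and whether they pass."""
    # '-c' sets the number of packets on Linux and macOS ping.
    result = subprocess.run(
        ["ping", "-c", str(count), host], capture_output=True, text=True
    )
    rtts = parse_rtts(result.stdout)
    return rtts, within_budget(rtts)
```

For example, `rtts, ok = check_host("192.168.1.10")` would test a RaspberryPi at that address, which is a placeholder here. Comparing against the slowest reply rather than the average is a deliberately strict choice, since a single slow packet is enough to delay the feed.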