Accomplished Tasks

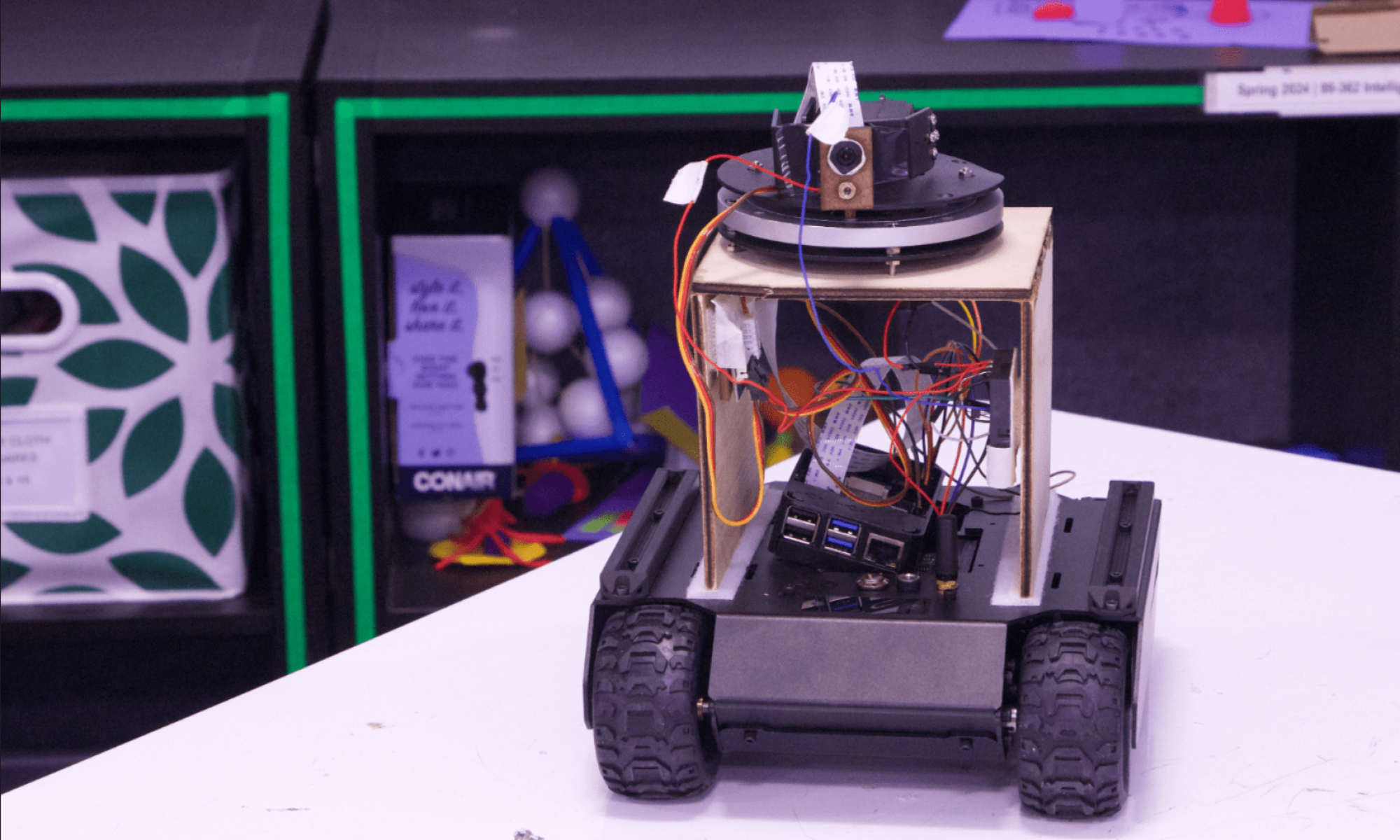

This week was the week of the Interim Demo, where I presented my biggest success of the project so far: getting the rover to move under my command through my own code! It took many hours of work and a long string of problems. The inconsistent documentation certainly did not help, since it left the JSON commands unclear. Even worse, however, was the number of Raspberry Pi errors. My original Raspberry Pi had a broken TX pin, which prevented the JSON commands from being sent over UART; this was only debugged with TA Aden’s help (THANK YOU!). Another RPi I tried could not reach the Internet even though it showed as connected. Fortunately, the last RPi I tried (the FIFTH one) worked, and I was able to control the rover through it. As a reminder, this means that I can now control the rover from a computer connected to CMU Wi-Fi, which lets the system communication all come together.
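For readers curious what sending a JSON command over UART can look like, here is a minimal sketch, not my exact code. It assumes the rover accepts one JSON object per line; the key names (`cmd`, `left`, `right`) and both helper functions are hypothetical placeholders, not the rover's documented schema. An in-memory buffer stands in for the serial port so the framing can be checked without hardware.

```python
import io
import json


def encode_command(command: dict) -> bytes:
    """Serialize a command dict as one compact, newline-terminated JSON line."""
    return (json.dumps(command, separators=(",", ":")) + "\n").encode("utf-8")


def send_command(port, command: dict) -> int:
    """Write one framed command to any object that has a write() method."""
    frame = encode_command(command)
    port.write(frame)
    return len(frame)


# Hypothetical command: the key names are placeholders, not the real schema.
fake_port = io.BytesIO()  # stands in for the UART port while testing off-device
sent_bytes = send_command(fake_port, {"cmd": "drive", "left": 0.3, "right": 0.3})
```

On the RPi itself, an open pyserial `serial.Serial` object could be passed as `port`, since it also exposes `write()`; the device path and baud rate depend on the setup. Checking the exact bytes this way is also a cheap way to separate software bugs from hardware faults like a dead TX pin.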

To verify that the rover movement system works correctly, I have implemented benchmark tests to better understand how much power the rover needs to move as directed (e.g., how much power is needed to turn left). I also test whether the rover can drive straight. This involves running the rover along simple predetermined paths (like a rectangle) and checking whether it returns to its original position facing the right direction.
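One way to score a closed-path test like the rectangle is to compare the pose the rover should end at with the pose actually measured. The sketch below is an illustration under assumptions: it uses ideal dead reckoning (no wheel slip), the function names are my own, and the "measured" pose is a made-up example rather than real data from the rover.

```python
import math


def simulate_path(steps):
    """Dead-reckon an ideal pose from (distance_m, turn_deg) steps.

    Each step drives straight for distance_m, then turns in place by
    turn_deg (positive is counterclockwise). Starts at the origin facing +x.
    """
    x, y, heading = 0.0, 0.0, 0.0
    for distance_m, turn_deg in steps:
        x += distance_m * math.cos(math.radians(heading))
        y += distance_m * math.sin(math.radians(heading))
        heading = (heading + turn_deg) % 360.0
    return x, y, heading


def closure_error(expected, measured):
    """Return (position error in metres, heading error in degrees)."""
    ex, ey, eh = expected
    mx, my, mh = measured
    position_error = math.hypot(mx - ex, my - ey)
    # Wrap the heading difference into [-180, 180) before taking its size.
    heading_error = abs((mh - eh + 180.0) % 360.0 - 180.0)
    return position_error, heading_error


# A 1.0 m x 0.5 m rectangle: four sides, each followed by a 90 degree left turn.
rectangle = [(1.0, 90.0), (0.5, 90.0), (1.0, 90.0), (0.5, 90.0)]
expected_pose = simulate_path(rectangle)

# Made-up measurement for illustration only, not data from the real rover.
measured_pose = (0.03, -0.04, 355.0)
pos_err, head_err = closure_error(expected_pose, measured_pose)
```

Tracking these two numbers across runs at different power levels would show whether a tuning change actually brings the rover closer to its starting pose.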

See the rover move!

Progress

My progress is mostly on track, though the end-to-end demonstration has not been put together yet. Ideally, we wanted this working about a week ago, so all my focus will be on putting everything together, including system communication and the infrastructure on the rover.

Next Week’s Deliverables

Next week, I plan to have communication working between the CV servers and the rover control system. This involves investigating the threading capabilities of the RPi and figuring out how to translate the CV-detected person into directional commands. Working these out would finalize communication across the whole system, enabling everything to be put together.
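As a starting point for the translation step, one simple option is to steer based on where the detected person sits horizontally in the camera frame. This is only a sketch of that idea, not a settled design: the function name, the three command strings, and the 10% dead zone are all assumptions I would expect to tune.

```python
def direction_from_detection(box_center_x, frame_width, dead_zone=0.1):
    """Map a detected person's horizontal position to a coarse command.

    box_center_x: x coordinate of the bounding-box centre, in pixels.
    frame_width:  width of the camera frame, in pixels.
    dead_zone:    fraction of the half-width treated as "centred".
    """
    if frame_width <= 0:
        raise ValueError("frame_width must be positive")
    # Normalised offset: -1.0 at left edge, 0.0 at centre, +1.0 at right edge.
    offset = (box_center_x - frame_width / 2) / (frame_width / 2)
    if offset < -dead_zone:
        return "left"
    if offset > dead_zone:
        return "right"
    return "forward"
```

For a 640-pixel-wide frame, a person centred at x = 100 would map to `"left"` and one at x = 320 to `"forward"`. The dead zone keeps the rover from twitching left and right when the person is already roughly centred.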