This week, as discussed, I focused mainly on tying up loose ends in my parts of the system and switched gears to validation testing. I also spent a lot of time on the final presentation that we gave this week.

I primarily worked on robustness improvements, such as handling failure cases. This includes smoothly handling the situation where a user input is not found in Spotify resources (i.e., the semantic match fails), which we now handle by removing the item from the queue and having the user try again. This improves the overall user experience because, while our semantic match handles legitimate input very accurately, there is always the chance that the user tries to queue something that isn’t even a song, or something along those lines. In addition, I helped Matt and Tommy with the rest of the system integration.
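To make the failure handling concrete, here is a minimal sketch of the idea, not our actual code. It assumes the matcher returns `None` when nothing in Spotify matches; `resolve_queue`, `toy_match`, and the tiny catalog are hypothetical names made up for illustration.

```python
from typing import Callable, List, Optional


def resolve_queue(queue: List[str],
                  match: Callable[[str], Optional[str]]) -> List[str]:
    """Remove unmatched inputs from the queue in place.

    Returns the inputs that failed so the user can be asked to try again.
    """
    # Assumption: match() returns None when the semantic match fails.
    failed = [item for item in queue if match(item) is None]
    # Keep only the inputs that resolved to something.
    queue[:] = [item for item in queue if item not in failed]
    return failed


# Hypothetical stand-in for the real semantic matcher.
TOY_CATALOG = {"bohemian rhapsody": "track:1", "hey jude": "track:2"}


def toy_match(user_input: str) -> Optional[str]:
    return TOY_CATALOG.get(user_input.strip().lower())


queue = ["Bohemian Rhapsody", "asdfgh", "Hey Jude"]
for item in resolve_queue(queue, toy_match):
    print(f"Could not find '{item}'. Please try again.")
```

After this runs, only the two real songs remain in `queue`, and the user sees a retry prompt for the input that did not match.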

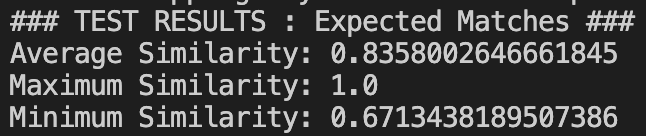

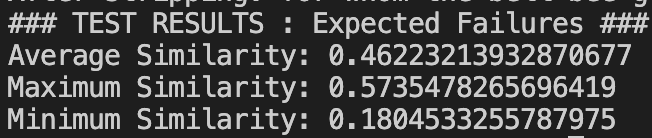

In terms of unit and validation tests, I focused on a few things. For one, we needed an accurate metric for semantic matching accuracy, which I measured on Sunday prior to the presentation. I ran it on 30 test cases, each carefully structured to test a common situation arising from the use or misuse of the system by users.
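As a rough illustration of how an accuracy number like this can be computed, here is a small sketch. It assumes each test case pairs a user input with the expected result, with `None` as the expected result for misuse cases that should fail to match. The function name, the toy matcher, and the four sample cases are all made up for the example and are not our real test set or results.

```python
from typing import Callable, List, Optional, Tuple

# (user input, expected match); None means "should not match anything".
TestCase = Tuple[str, Optional[str]]


def match_accuracy(cases: List[TestCase],
                   match: Callable[[str], Optional[str]]) -> float:
    """Return the fraction of cases where the matcher gives the expected result."""
    if not cases:
        raise ValueError("need at least one test case")
    correct = sum(1 for query, expected in cases if match(query) == expected)
    return correct / len(cases)


# Hypothetical matcher and cases, for illustration only.
def demo_match(query: str) -> Optional[str]:
    catalog = {"hey jude": "track:2", "yesterday": "track:3"}
    return catalog.get(query.lower())


demo_cases: List[TestCase] = [
    ("Hey Jude", "track:2"),      # legitimate input
    ("yesterday", "track:3"),     # legitimate input, lowercase
    ("not a song at all", None),  # misuse: should fail to match
    ("Let It Be", "track:4"),     # missing from the toy catalog, so a miss
]

print(f"accuracy: {match_accuracy(demo_cases, demo_match):.2f}")
```

Counting a correctly rejected input as a success keeps the misuse cases in the same metric as the legitimate ones.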

In addition, I put together a survey asking users how our system’s recommendations compare to Spotify’s traditional recommendations. I am still collecting the results but will finish that process tomorrow.

In terms of schedule, we are approaching the final few days of the project, during which we will continue to collect results from our validation tests and put the finishing touches on the robustness of the system.