Progress

This week I worked on two tasks in preparation for Wednesday's interim demo: video stream latency optimization and controller integration.

- PC-side optimization attempts

  - Use a Unity WebCamTexture instead of Vuforia – 140ms faster

  - Use async functions or threads to get frames from OpenCV – no noticeable change, since the bottleneck is the library’s processing of frames

- Pi-side optimization attempts

  - Change the camera framerate to 30/40/60fps – lowest latency (10-20ms faster) at 40fps, which is the fastest rendering rate on the PC

  - Use raspivid and netcat as the video server instead of the picamera Python library – ~10ms faster, but with fewer configuration options
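To illustrate the threaded frame-grabbing attempt from the PC-side list, here is a minimal Python sketch of the general pattern: a background thread keeps reading frames and stores only the newest one, so the display loop never waits on a read. This is not the project's Unity code. `LatestFrameReader` and `make_fake_source` are hypothetical names, and `read_frame` is assumed to be any callable returning `(ok, frame)`, the same shape as `cv2.VideoCapture.read()`.

```python
import threading
import time


class LatestFrameReader:
    """Reads frames on a background thread and keeps only the newest one."""

    def __init__(self, read_frame):
        # read_frame: hypothetical callable returning (ok, frame),
        # shaped like cv2.VideoCapture.read().
        self._read_frame = read_frame
        self._lock = threading.Lock()
        self._latest = None
        self._running = True
        self._thread = threading.Thread(target=self._loop, daemon=True)
        self._thread.start()

    def _loop(self):
        while self._running:
            ok, frame = self._read_frame()
            if not ok:
                break  # source exhausted or failed
            with self._lock:
                self._latest = frame  # overwrite, so stale frames are dropped

    def latest(self):
        """Return the most recent frame, or None if nothing has arrived yet."""
        with self._lock:
            return self._latest

    def stop(self):
        self._running = False
        self._thread.join(timeout=1.0)


def make_fake_source(n_frames, delay_s=0.001):
    """Stand-in for a camera: yields the integers 0..n_frames-1 as 'frames'."""
    counter = iter(range(n_frames))

    def read():
        time.sleep(delay_s)  # simulate the time a real read would take
        try:
            return True, next(counter)
        except StopIteration:
            return False, None

    return read


if __name__ == "__main__":
    reader = LatestFrameReader(make_fake_source(50))
    time.sleep(0.02)
    print("newest frame so far:", reader.latest())
    reader.stop()
```

This pattern only helps when the consumer is slower than the reader. If decoding inside the library is the bottleneck, as it appeared to be here, moving the read onto another thread does not shorten the time each frame takes to arrive.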

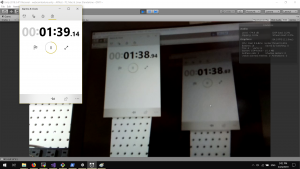

The final result was a latency of 200ms. Unfortunately, this might be the best latency we can achieve with OpenCV/ffmpeg. In an experiment, a minimal OpenCV program that did nothing but display frames as fast as possible still took 170ms to decode and display them, and Unity is a little slower because it has other work to do.

The other component I worked on was setting up the Xbox controllers in Unity and writing code on the PC to connect to the Pis and stream controller inputs and data. We decided that Unity’s native support for Xbox controllers was enough for our purposes, so we didn’t use the XInput.NET library. For communication between the PC and the Pis, I used the .NET Framework’s TcpClient and NetworkStream classes.
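As a rough illustration of streaming controller inputs over TCP, here is a Python sketch of how a fixed-size input packet could be packed on the sender and read back on the receiver. The wire format (two 16-bit axes plus a 16-bit button bitmask) and the names `PACKET`, `pack_input`, `recv_exact`, and `recv_input` are assumptions made for this example, not the format the project actually uses.

```python
import socket
import struct

# Hypothetical wire format: steer (int16), throttle (int16), buttons (uint16),
# all in network byte order. Every packet is exactly PACKET.size bytes.
PACKET = struct.Struct("!hhH")


def pack_input(steer, throttle, buttons):
    """Pack axes in [-1.0, 1.0] and a button bitmask into one packet."""

    def to_i16(value):
        value = max(-1.0, min(1.0, value))  # clamp out-of-range axis values
        return int(round(value * 32767))

    return PACKET.pack(to_i16(steer), to_i16(throttle), buttons & 0xFFFF)


def recv_exact(sock, n):
    """Read exactly n bytes; TCP is a byte stream, so recv may return fewer."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("peer closed the connection")
        buf.extend(chunk)
    return bytes(buf)


def recv_input(sock):
    """Receive one packet and convert the axes back to floats."""
    steer, throttle, buttons = PACKET.unpack(recv_exact(sock, PACKET.size))
    return steer / 32767, throttle / 32767, buttons


if __name__ == "__main__":
    # A connected socket pair stands in for the PC-to-Pi connection.
    pc_side, pi_side = socket.socketpair()
    pc_side.sendall(pack_input(0.25, 1.0, 0b0001))
    print(recv_input(pi_side))
    pc_side.close()
    pi_side.close()
```

Fixed-size packets keep the receiver simple, because it always knows how many bytes to wait for. On a real TCP connection, small latency-sensitive packets like these are commonly sent with Nagle's algorithm disabled (`TCP_NODELAY` on the socket, or the `NoDelay` property on .NET's `TcpClient`).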

Schedule

After receiving more information about the interim demo, we all shifted our tasks around to have a more demoable product by next week. I prioritized the video feed and control for a single car and didn’t work on the race logic, but I should still be on track because communication with the Pi was brought forward and the game logic was pushed back.

Deliverables for next week

Game logic and communication. It should be possible to walk through a race when the methods are called on the three PCs.