We spent much of this week preparing for our design review presentation, which meant refining and formalizing our ideas for both the software and the hardware. This gives us a good starting point for writing our design report next week.

One of our main risks right now is the interdependency of different parts of the project. Many of the subsystems rely on one another in different ways, so it is difficult to develop some of them without knowing how, or whether, the others are working. To mitigate this risk, we have been looking for ways to divide the project into more explicit sections, at least for the initial design. For example, we expect to do initial testing of eye-tracking accuracy using sub-sections of the screen, which does not require the UI to be finalized. Likewise, we can do the initial programming of the UI with buttons to build backend functionality without needing eye-tracking to be perfectly accurate (see Fiona’s Report for more on this). We will continue to consider this in our design going forward.

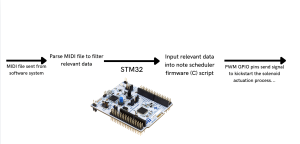

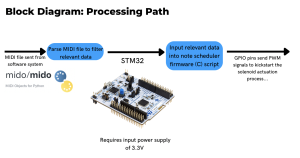

We’ve always known that we need to convert the MIDI file output from the eye-tracking system to a format that is suitable for the STM32, but we only fleshed out the details while preparing for our design presentation this week. This step (the very first step in the “processing path” diagram below) isn’t just a trivial file reformatting or conversion into a different language. We need to process the file and extract key information, like the scheduling of solenoid on/off events and the duration of each note. We will also need to filter out anything we won’t be implementing with our solenoid actuation, like note dynamics, which may have had default values assigned in the MIDI file. For maximum efficiency, we want the STM32 to handle execution, not computation, meaning it should receive only the most essential data. So, instead of parsing the raw MIDI file on the STM32, a task that would be somewhat computationally heavy, we will do this preprocessing with Python’s Mido library on our local machine.
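As a rough illustration of this preprocessing step, the sketch below reduces a stream of MIDI messages to a list of (note, start time, duration) entries and drops dynamics. It is only a minimal sketch under assumptions, not our final format: the function names `extract_note_events` and `load_note_events` and the tuple layout are hypothetical, and the demo uses stand-in message objects instead of a real file. It relies on Mido yielding messages with `time` expressed as delta seconds when a `MidiFile` is iterated.

```python
from types import SimpleNamespace


def extract_note_events(messages):
    """Reduce MIDI-like messages to (note, start_s, duration_s) tuples.

    Every message needs .type and .time (delta time in seconds); note
    messages also need .note and .velocity. Velocity is only used to detect
    "note_on with velocity 0", which MIDI treats as a note-off. Dynamics are
    otherwise discarded.
    """
    now = 0.0      # absolute time in seconds
    active = {}    # note number -> time at which the note started
    events = []
    for msg in messages:
        now += msg.time
        if msg.type == "note_on" and msg.velocity > 0:
            # Ignore a repeated note_on for a note that is already held.
            active.setdefault(msg.note, now)
        elif msg.type == "note_off" or (msg.type == "note_on" and msg.velocity == 0):
            start = active.pop(msg.note, None)
            if start is not None:
                events.append((msg.note, start, now - start))
    events.sort(key=lambda e: e[1])  # order by start time
    return events


def load_note_events(path):
    """Hypothetical helper: read a MIDI file with Mido and extract events."""
    import mido  # third-party; imported here so the demo runs without it
    # Iterating a MidiFile yields messages in playback order, with .time
    # given as the delta in seconds since the previous message.
    return extract_note_events(mido.MidiFile(path))


# Demo with stand-in messages (assumed values, not real eye-tracking output).
demo = [
    SimpleNamespace(type="note_on", note=60, velocity=64, time=0.0),
    SimpleNamespace(type="note_off", note=60, velocity=0, time=0.5),
    SimpleNamespace(type="note_on", note=62, velocity=64, time=0.25),
    SimpleNamespace(type="note_on", note=62, velocity=0, time=0.25),
]
demo_events = extract_note_events(demo)
print(demo_events)
```

Each resulting tuple maps directly to one solenoid on/off pair, which is the kind of minimal schedule we would want to hand to the STM32.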

Last week we placed an order for two push-pull solenoids (Adafruit 412) for testing. We expected them to arrive this week, but they have been delayed. When they do arrive, which is hopefully sometime this week, we will continue with the plan identified in our last weekly report: determining whether they meet our project requirements and, if they do, ordering more.

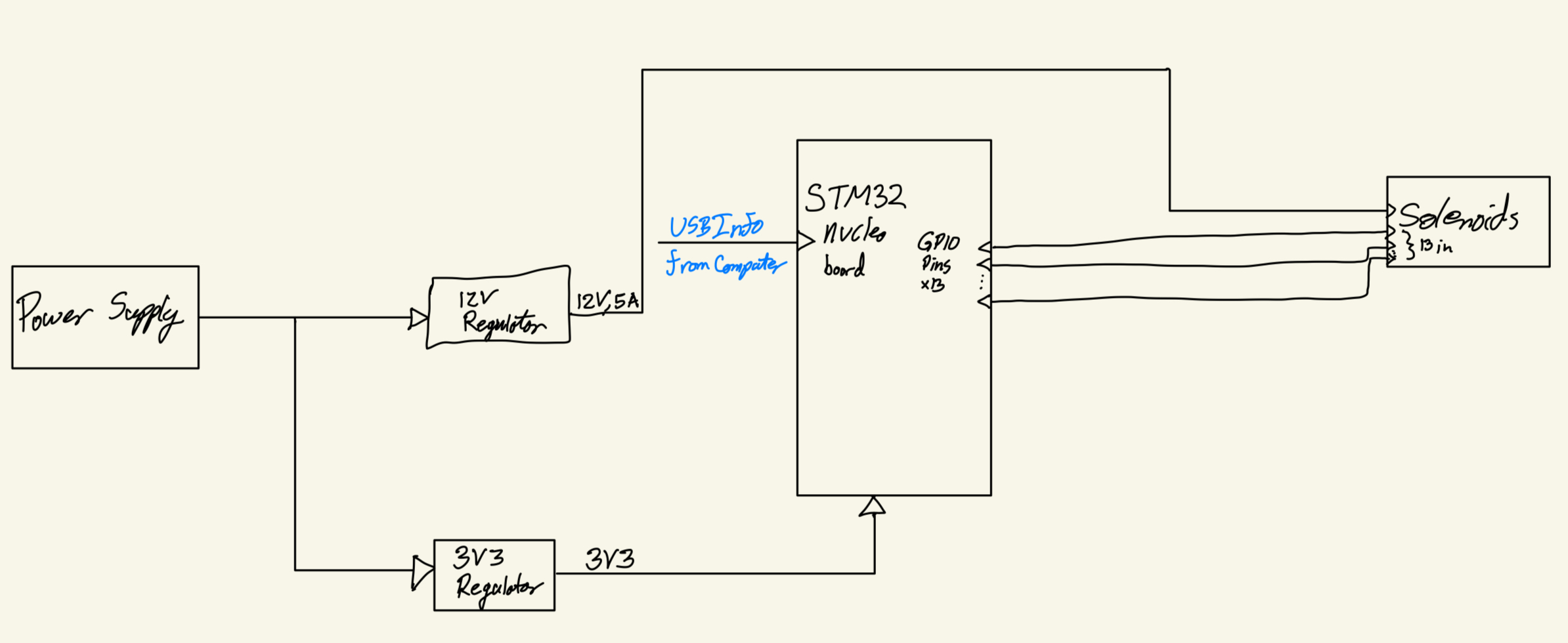

Figure 1: Hardware Block Diagram

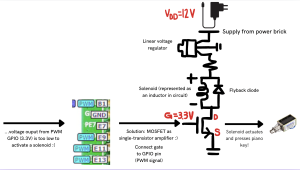

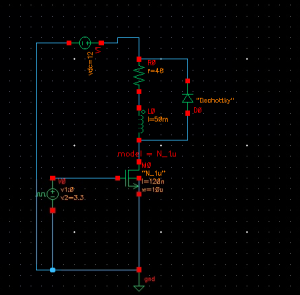

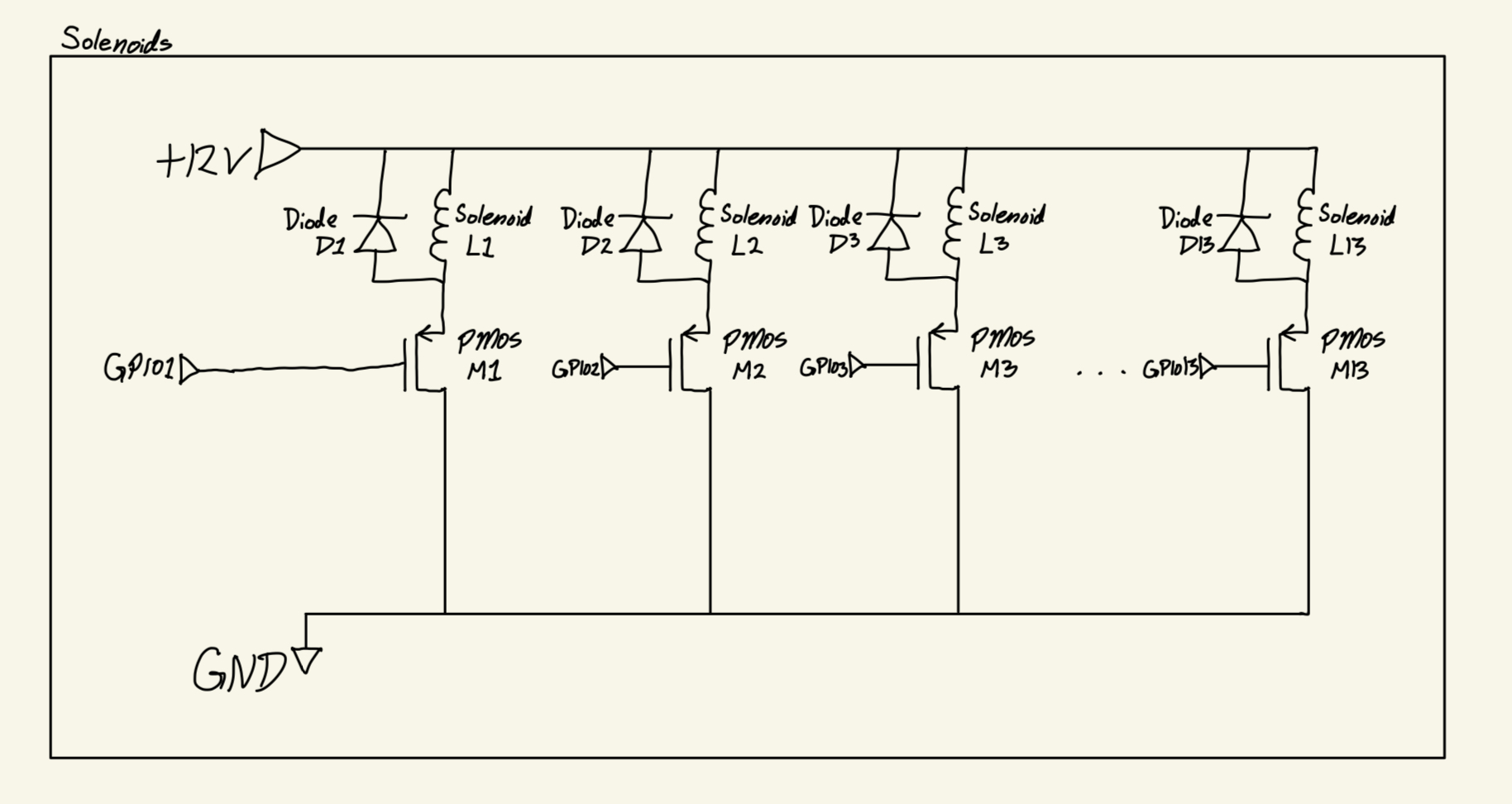

Figure 2: Solenoids Schematic